ABOUT US

We are security engineers who break bits and tell stories.

© 2026 Doyensec LLC

In my first month at Doyensec I had the opportunity to combine my work with my spare-time hobbies. I used the 25% research time offered by Doyensec to integrate the LibreSSL library into OSS-Fuzz. LibreSSL is an API-compatible replacement for OpenSSL and, since the Heartbleed vulnerability, it has been adopted as a full-fledged OpenSSL replacement on OpenBSD, macOS and Void Linux.

As a result of this research, Google awarded us a $10,000 bounty, 100% of which was donated to the Cancer Research Institute. The fuzzer also discovered 14+ new vulnerabilities, four of which were directly related to memory corruption.

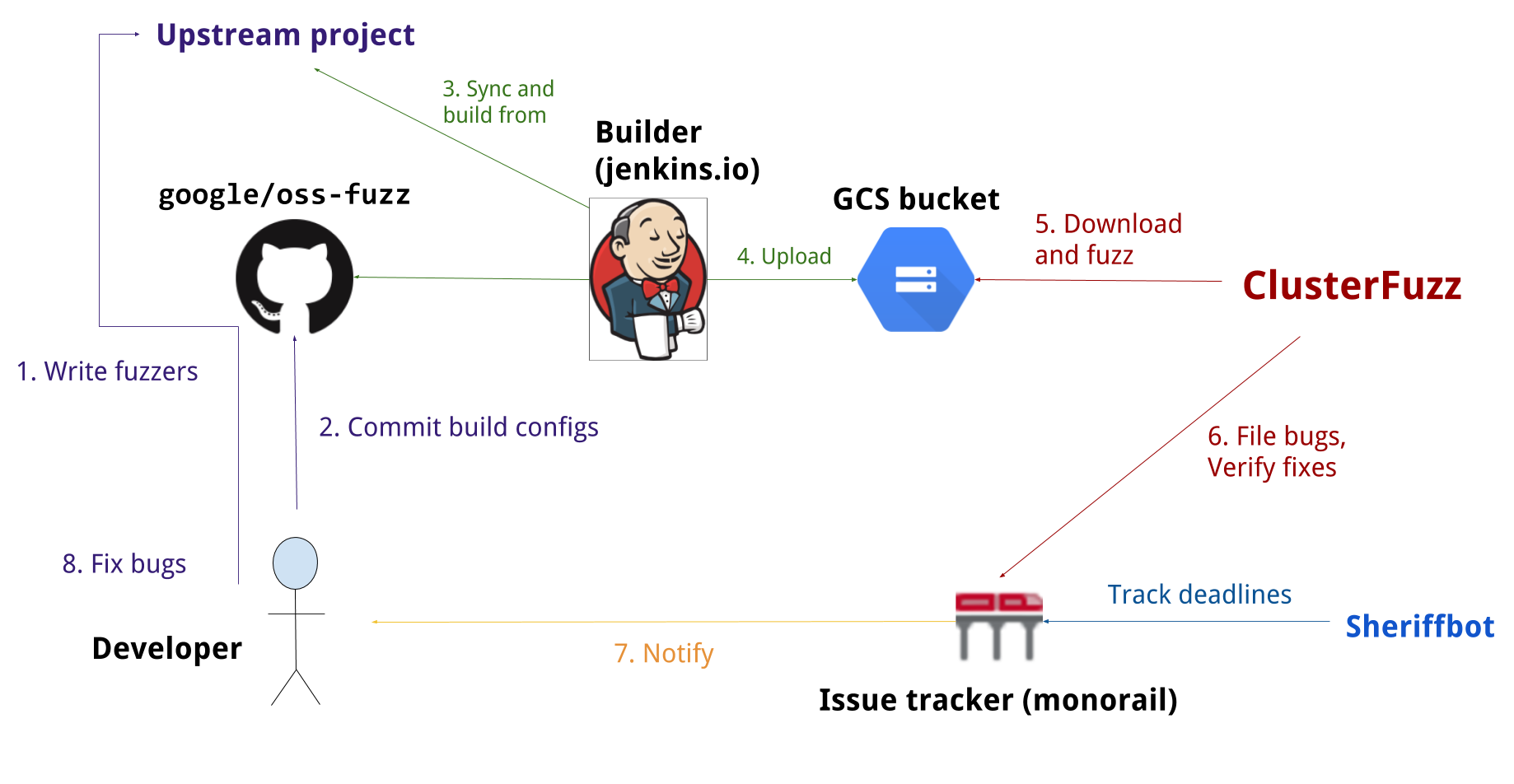

In the following paragraphs we will walk through the process of porting a new project over to OSS-Fuzz, from following the community-provided steps all the way to the actual code porting. We will also show a vulnerability fixed in 136e6c997f476cc65e614e514ac3bf6ee54fc4b4.

commit 136e6c997f476cc65e614e514ac3bf6ee54fc4b4

Author: beck <>

Date: Sat Mar 23 18:48:15 2019 +0000

Add range checks to varios ASN1_INTEGER functions to ensure the

sizes used remain a positive integer. Should address issue

13799 from oss-fuzz

ok tb@ jsing@

src/lib/libcrypto/asn1/a_int.c | 56 +++++++++++++++++++++++++++++++++++++++++++++++++++++---

src/lib/libcrypto/asn1/tasn_prn.c | 8 ++++++--

src/lib/libcrypto/bn/bn_lib.c | 4 +++-

3 files changed, 62 insertions(+), 6 deletions(-)

As a Void Linux maintainer, I’m a long-time LibreSSL user and proponent. LibreSSL is a version of the TLS/crypto stack forked from OpenSSL in 2014 with the goals of modernizing the codebase, improving security, and applying best-practice development procedures. The motivation for this fork arose after the discovery of the Heartbleed vulnerability.

LibreSSL’s efforts are aimed at removing code considered useless for the target platforms, removing code smells and adding secure defaults, at the cost of compatibility. The LibreSSL codebase is now roughly 70% the size of OpenSSL (237558 cloc vs 335485 cloc), while implementing a similar API on all the major modern operating systems.

Forking is considered a Bad Thing not merely because it implies a lot of wasted effort in the future, but because forks tend to be accompanied by a great deal of strife and acrimony between the successor groups over issues of legitimacy, succession, and design direction. There is serious social pressure against forking. As a result, major forks (such as the Gnu-Emacs/XEmacs split, the fissioning of the 386BSD group into three daughter projects, and the short-lived GCC/EGCS split) are rare enough that they are remembered individually in hacker folklore.

Eric Raymond, Homesteading the Noosphere

The LibreSSL effort was generally well received and it now replaces OpenSSL on OpenBSD, on macOS since 10.11, and on several Linux distributions. In the first few years, 6 critical vulnerabilities were found in OpenSSL and none of them affected LibreSSL.

Historically, these kinds of forks tend to spawn competing projects which cannot later exchange code, splitting the potential pool of developers between them. However, the LibreSSL team has largely demonstrated that it can merge and implement new OpenSSL code and bug fixes, all the while slimming down the original source code and cutting down on rarely used or dangerous features.

While the development of LibreSSL appears to be a story with a happy ending, the integration of fuzzing and security auditing into the project was much less so. The Heartbleed vulnerability was a wake-up call for the industry to tackle the security of the libraries that make up the core of the internet. In particular, Google launched the OSS-Fuzz project. OSS-Fuzz is an effort to provide, for free, Google infrastructure to perform fuzzing against the most popular open source libraries. One of the first projects to undergo these tests was in fact OpenSSL.

Fuzz testing is a well-known technique for uncovering programming errors in software.

Many of these detectable errors, like buffer overflows, can have serious security implications.

OpenSSL included fuzzers in c38bb72797916f2a0ab9906aad29162ca8d53546 and was integrated into OSS-Fuzz later in 2016.

commit c38bb72797916f2a0ab9906aad29162ca8d53546

Refs: OpenSSL_1_1_0-pre5-217-gc38bb72797

Author: Ben Laurie <ben@links.org>

AuthorDate: Sat Mar 26 17:19:14 2016 +0000

Commit: Ben Laurie <ben@links.org>

CommitDate: Sat May 7 18:13:54 2016 +0100

Add fuzzing!

Since LibreSSL and OpenSSL share most of their codebase, with LibreSSL mainly implementing a secure subset of OpenSSL, we thought porting the OpenSSL fuzzers to LibreSSL would be a fun and useful project. Moreover, this resulted in the discovery of several memory corruption bugs.

Note that the following details do not replace the official OSS-Fuzz guide; they are meant to help in selecting a good target project for OSS-Fuzz integration. Generally speaking, applying for a new OSS-Fuzz integration proceeds in four logical steps:

We were awarded a bounty, and we helped to protect the Internet just a little bit more. You should do it too!

After a crash is found, the OSS-Fuzz infrastructure provides a minimized test case which can be inspected by an analyst. The issue was found in the ASN.1 parser. ASN.1 is a formal notation used for describing data transmitted by telecommunications protocols, regardless of language implementation and physical representation of these data, whether complex or very simple. Notably, it is employed for X.509 certificates, which represent the technical base for building public-key infrastructure.

Passing our test case 0202 ff25 through dumpasn1, we can see that it errors out, reporting that the integer of length 2 (bytes) is encoded as a negative value.

dumpasn1 flags this encoding as an error, and LibreSSL should at least handle it safely. However, as discovered by OSS-Fuzz, this test case crashes the LibreSSL parser.

$ xxd ./test

00000000: 0202 ff25 ...%

$ dumpasn1 ./test

0 2: INTEGER 65317

: Error: Integer is encoded as a negative value.

0 warnings, 1 error.
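The four bytes of the test case can also be decoded by hand. The following is a minimal Python sketch, not part of the original research: `parse_der_integer` is a hypothetical helper that only handles short-form lengths. It shows why dumpasn1 prints 65317 (the content bytes read as unsigned) while complaining about a negative value (the same bytes read as two's complement).

```python
def parse_der_integer(data: bytes):
    """Parse a short-form DER INTEGER and return (length, unsigned, signed)."""
    if len(data) < 2 or data[0] != 0x02:
        raise ValueError("not an ASN.1 INTEGER (tag 0x02 expected)")
    length = data[1]
    if length & 0x80:
        raise ValueError("long-form lengths are not handled in this sketch")
    content = data[2:2 + length]
    if len(content) != length:
        raise ValueError("truncated INTEGER content")
    # The same content bytes, read without and with a sign bit.
    unsigned = int.from_bytes(content, "big")
    signed = int.from_bytes(content, "big", signed=True)
    return length, unsigned, signed


length, unsigned, signed = parse_der_integer(bytes.fromhex("0202ff25"))
print(length, unsigned, signed)  # 2 65317 -219
```

The high bit of the first content byte (0xff) is set, so a consumer that honors the sign sees -219, while one that ignores it sees 65317.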

Since the LibreSSL implementation did not guard against negative integers, converting the crafted negative ASN.1 integer to the internal BIGNUM representation causes an uncontrolled over-read.

AddressSanitizer:DEADLYSIGNAL

=================================================================

==1==ERROR: AddressSanitizer: SEGV on unknown address 0x00009fff8000 (pc 0x00000058a308 bp 0x7ffd3e8b7bb0 sp 0x7ffd3e8b7b40 T0)

==1==The signal is caused by a READ memory access.

SCARINESS: 20 (wild-addr-read)

#0 0x58a307 in BN_bin2bn libressl/crypto/bn/bn_lib.c:601:19

#1 0x6cd5ac in ASN1_INTEGER_to_BN libressl/crypto/asn1/a_int.c:456:13

#2 0x6a39dd in i2s_ASN1_INTEGER libressl/crypto/x509v3/v3_utl.c:175:16

#3 0x571827 in asn1_print_integer_ctx libressl/crypto/asn1/tasn_prn.c:457:6

#4 0x571827 in asn1_primitive_print libressl/crypto/asn1/tasn_prn.c:556

#5 0x571827 in asn1_item_print_ctx libressl/crypto/asn1/tasn_prn.c:239

#6 0x57069a in ASN1_item_print libressl/crypto/asn1/tasn_prn.c:195:9

#7 0x4f4db0 in FuzzerTestOneInput libressl.fuzzers/asn1.c:282:13

#8 0x7fd3f5 in fuzzer::Fuzzer::ExecuteCallback(unsigned char const*, unsigned long) /src/libfuzzer/FuzzerLoop.cpp:529:15

#9 0x7bd746 in fuzzer::RunOneTest(fuzzer::Fuzzer*, char const*, unsigned long) /src/libfuzzer/FuzzerDriver.cpp:286:6

#10 0x7c9273 in fuzzer::FuzzerDriver(int*, char***, int (*)(unsigned char const*, unsigned long)) /src/libfuzzer/FuzzerDriver.cpp:715:9

#11 0x7bcdbc in main /src/libfuzzer/FuzzerMain.cpp:19:10

#12 0x7fa873b8282f in __libc_start_main /build/glibc-Cl5G7W/glibc-2.23/csu/libc-start.c:291

#13 0x41db18 in _start

This “wild” address read may be employed by malicious actors to leak memory in security-sensitive contexts. The LibreSSL maintainers not only addressed the vulnerability promptly but also added a further protection to guard against missing ASN1_PRIMITIVE_FUNCS in 46e7ab1b335b012d6a1ce84e4d3a9eaa3a3355d9.

commit 46e7ab1b335b012d6a1ce84e4d3a9eaa3a3355d9

Author: jsing <>

Date: Mon Apr 1 15:48:04 2019 +0000

Require all ASN1_PRIMITIVE_FUNCS functions to be provided.

If an ASN.1 item provides its own ASN1_PRIMITIVE_FUNCS functions, require

all functions to be provided (currently excluding prim_clear). This avoids

situations such as having a custom allocator that returns a specific struct

but then is then printed using the default primative print functions, which

interpret the memory as a different struct.

Fuzzing, despite being seen as one of the easiest ways to discover security vulnerabilities, still works very well. Even if OSS-Fuzz is especially tailored to open source projects, it can also be adapted to closed source projects. In fact, at the cost of implementing the LLVMFuzzerTestOneInput interface, it integrates all the latest and greatest clang/llvm fuzzer technology.

Just as the Dockerfile language has greatly improved the DevOps side, we strongly believe that the OSS-Fuzz fuzzing interface definition language should be employed in every non-trivial closed source project too. If you need help, contact us for your security automation projects!

As always, this research was funded thanks to the 25% research time offered at Doyensec. Tune in again for new episodes!

As a part of our continuing security research journey, we started developing an internal tool to speed up GraphQL security testing efforts. We’re excited to announce that InQL is available on GitHub.

InQL can be used as a stand-alone script, or as a Burp Suite extension (available for both Professional and Community editions). The tool leverages GraphQL’s built-in introspection query to dump queries, mutations, subscriptions, fields and arguments, and to retrieve default and custom objects. This information is collected and then processed to construct API endpoint documentation in the form of HTML and JSON schema. InQL is also able to generate query templates for all the known types. The scanner can identify basic query types and replace them with placeholders, so that the query is ready to be ingested by a remote API endpoint.

We believe this feature, combined with the ability to send query templates to Burp’s Repeater, will decrease the time to exploit vulnerabilities in GraphQL endpoints and drastically lower the bar for security research against GraphQL tech stacks.
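To make the introspection-to-template idea concrete, here is a minimal Python sketch. It is not InQL's code: the reduced introspection query, the `PLACEHOLDERS` table, the `SAMPLE_RESPONSE` data and the helper names are all assumptions made for illustration, and the sample response is hard-coded so the example runs without network access.

```python
# A reduced introspection query; real tools request many more fields.
INTROSPECTION_QUERY = """
query {
  __schema {
    queryType { name }
    types {
      name
      fields {
        name
        args { name type { kind name ofType { kind name } } }
        type { kind name ofType { kind name } }
      }
    }
  }
}
"""

# Hypothetical placeholder values per built-in scalar (an assumption).
PLACEHOLDERS = {"Int": "0", "Float": "0.0", "String": '""',
                "Boolean": "false", "ID": '"0"'}

# Hand-written stand-in for what an endpoint could return for the query above.
SAMPLE_RESPONSE = {"data": {"__schema": {
    "queryType": {"name": "Query"},
    "types": [{"name": "Query", "fields": [
        {"name": "Media",
         "args": [{"name": "id",
                   "type": {"kind": "SCALAR", "name": "Int", "ofType": None}}],
         "type": {"kind": "OBJECT", "name": "Media", "ofType": None}},
        {"name": "Version", "args": [],
         "type": {"kind": "NON_NULL", "name": None,
                  "ofType": {"kind": "SCALAR", "name": "String", "ofType": None}}},
    ]}],
}}}


def unwrap(type_ref):
    """Follow ofType links (NON_NULL / LIST wrappers) down to the named type."""
    while type_ref.get("ofType"):
        type_ref = type_ref["ofType"]
    return type_ref


def query_templates(schema):
    """Return {field name: query template} for the schema's root query type."""
    root_name = schema["queryType"]["name"]
    root = next(t for t in schema["types"] if t["name"] == root_name)
    templates = {}
    for field in root["fields"] or []:
        args = ", ".join(
            f'{arg["name"]}: {PLACEHOLDERS.get(unwrap(arg["type"])["name"], "null")}'
            for arg in field["args"]
        )
        call = f'{field["name"]}({args})' if args else field["name"]
        # Object-like results need a selection set; __typename is always valid.
        leaf = unwrap(field["type"])["kind"] in ("SCALAR", "ENUM")
        selection = "" if leaf else " { __typename }"
        templates[field["name"]] = f"query {{ {call}{selection} }}"
    return templates


for name, template in query_templates(SAMPLE_RESPONSE["data"]["__schema"]).items():
    print(name, "->", template)
```

Against a live endpoint, `INTROSPECTION_QUERY` would be sent as the `query` field of a JSON POST body and the response parsed in the same way.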

Using the inql extension for Burp Suite, you can:

To use inql in Burp Suite, import the Python extension:

inql_burp.py > Next

InQL Scanner Started!

In the near future, we might consider integrating the extension into Burp’s BApp Store.

We completely revamped the command line interface in light of InQL’s public release. This interface retains most of the Burp plugin’s functionality.

It is now possible to install the tool with pip and run it through your favorite CLI.

pip install inql

For all supported options, check the command line help:

usage: inql [-h] [-t TARGET] [-f SCHEMA_JSON_FILE] [-k KEY] [-p PROXY]

[--header HEADERS HEADERS] [-d] [--generate-html]

[--generate-schema] [--generate-queries] [--insecure]

[-o OUTPUT_DIRECTORY]

InQL Scanner

optional arguments:

-h, --help show this help message and exit

-t TARGET Remote GraphQL Endpoint (https://<Target_IP>/graphql)

-f SCHEMA_JSON_FILE Schema file in JSON format

-k KEY API Authentication Key

-p PROXY IP of web proxy to go through (http://127.0.0.1:8080)

--header HEADERS HEADERS

-d Replace known GraphQL arguments types with placeholder

values (useful for Burp Suite)

--generate-html Generate HTML Documentation

--generate-schema Generate JSON Schema Documentation

--generate-queries Generate Queries

--insecure Accept any SSL/TLS certificate

-o OUTPUT_DIRECTORY Output Directory

An example query can be performed on one of the numerous exposed APIs, e.g., the anilist.co endpoint:

$ inql -t https://anilist.co/graphql

[+] Writing Queries Templates

| Page

| Media

| MediaTrend

| AiringSchedule

| Character

| Staff

| MediaList

| MediaListCollection

| GenreCollection

| MediaTagCollection

| User

| Viewer

| Notification

| Studio

| Review

| Activity

| ActivityReply

| Following

| Follower

| Thread

| ThreadComment

| Recommendation

| Like

| Markdown

| AniChartUser

| SiteStatistics

[+] Writing Queries Templates

| UpdateUser

| SaveMediaListEntry

| UpdateMediaListEntries

| DeleteMediaListEntry

| DeleteCustomList

| SaveTextActivity

| SaveMessageActivity

| SaveListActivity

| DeleteActivity

| ToggleActivitySubscription

| SaveActivityReply

| DeleteActivityReply

| ToggleLike

| ToggleLikeV2

| ToggleFollow

| ToggleFavourite

| UpdateFavouriteOrder

| SaveReview

| DeleteReview

| RateReview

| SaveRecommendation

| SaveThread

| DeleteThread

| ToggleThreadSubscription

| SaveThreadComment

| DeleteThreadComment

| UpdateAniChartSettings

| UpdateAniChartHighlights

[+] Writing Queries Templates

[+] Writing Queries Templates

The resulting HTML documentation page will contain details for all available queries, mutations, and subscriptions.

Back in May 2018, we published a blog post on GraphQL security where we focused on vulnerabilities and misconfigurations. As part of that research effort, we developed a simple script to query GraphQL endpoints. After the publication, we received a lot of positive feedback that sparked even more interest in further developing the concept. Since then, we have refined our GraphQL testing methodologies and tooling. As part of our standard customer engagements, we often perform testing against GraphQL technologies, hence we expect to continue our research efforts in this space. Going forward, we will keep improving detection and make the tool more stable.

This project was made with love in the Doyensec Research island.

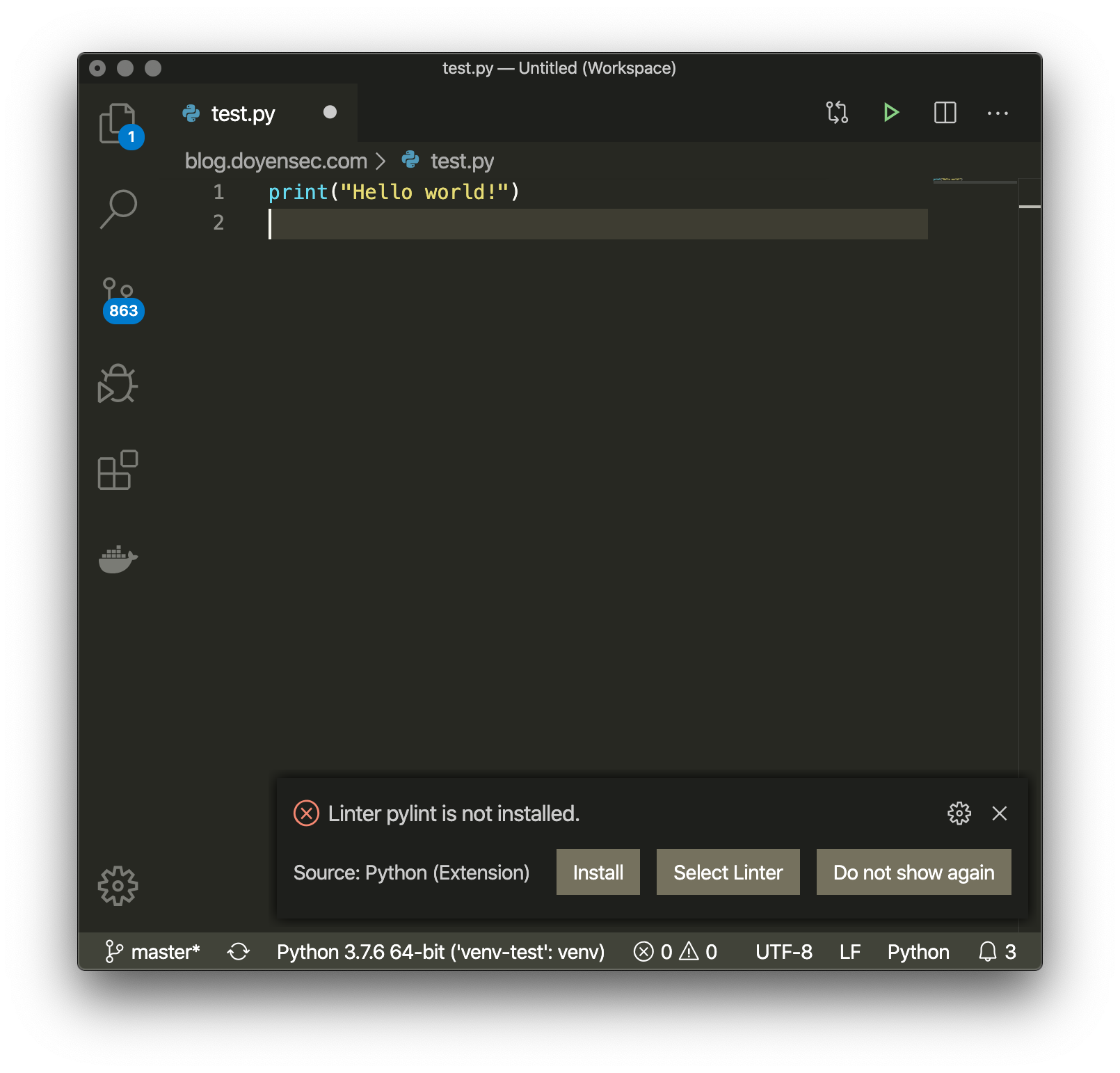

This is the story of how I stumbled upon a code execution vulnerability in the Visual Studio Code Python extension. It currently has 16.5M+ installs reported in the extension marketplace.

Some time ago I was reviewing a client’s Python web application when I noticed a warning that pylint was not installed.

Fair enough, I thought, I just need to install pylint.

To my surprise, after running pip install --user pylint the warning was still there.

Then I noticed venv-test displayed on the lower-left of the editor window. Did VSCode just automatically select the Python environment from the project folder?! To confirm my hypothesis, I installed pylint inside that virtualenv and the warning disappeared.

This seemed sketchy, so I added os.exec("/Applications/Calculator.app") to one of pylint sources and a calculator spawned. Easiest code execution ever!

VSCode’s behaviour is dangerous since the virtualenv found in a project folder is activated without user interaction. Adding a malicious folder to the workspace and opening a Python file inside the project is sufficient to trigger the vulnerability.

Once a virtualenv is found, VSCode saves its path in .vscode/settings.json. If found in the cloned repo, this value is loaded and trusted without asking the user. In practice, it is possible to hide the virtualenv in any repository.
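Before opening an untrusted clone in the editor, one could check whether its settings file points the interpreter at a path inside the repository. The Python sketch below is only an illustration: it assumes the interpreter is configured through the `python.pythonPath` key, `suspicious_interpreter` is a hypothetical helper, and it does not expand editor variables such as `${workspaceFolder}` or parse settings files that contain comments.

```python
import json
from pathlib import Path


def suspicious_interpreter(repo_dir):
    """Return the configured interpreter path if it lives inside the repo, else None."""
    repo = Path(repo_dir).resolve()
    settings_file = repo / ".vscode" / "settings.json"
    if not settings_file.is_file():
        return None
    try:
        settings = json.loads(settings_file.read_text())
    except json.JSONDecodeError:
        return None  # e.g. a settings file with comments; this sketch gives up
    if not isinstance(settings, dict):
        return None
    # Assumed setting name; adjust if the extension stores the path elsewhere.
    configured = settings.get("python.pythonPath")
    if not isinstance(configured, str):
        return None
    # Relative paths are taken relative to the repository root.
    candidate = (repo / configured).resolve()
    if candidate == repo or repo in candidate.parents:
        return str(candidate)
    return None
```

A non-`None` result means the repository ships its own interpreter path, which is worth inspecting before any Python file in it is opened.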

The behavior is not in VSCode core, but rather in the Python extension. We contacted Microsoft on October 2, 2019; however, the vulnerability is still not patched at the time of writing. Given that the industry-standard 90 days have expired and the issue is exposed in a GitHub issue, we have decided to disclose the vulnerability.

You can try for yourself! This innocuous PoC repo opens Calculator.app on macOS:

git clone git@github.com:doyensec/VSCode_PoC_Oct2019.git

Open test.py in VSCode

This repo contains a “malicious” settings.json which selects the virtualenv in totally_innocuous_folder/no_seriously_nothing_to_see_here.

In a bare-bones repo like this one, noticing the virtualenv might be easy, but it’s clear how one might miss it in a real-life codebase. Moreover, it is certainly undesirable that VSCode executes code from a folder just because a Python file was opened in the editor.

This is a re-post of the original blogpost published by Gravitational on the 2019 security audit results for their two products: Teleport and Gravity.

You can download the security testing deliverables for Teleport and Gravity from our research page.

We would like to take this opportunity to thank the Gravitational engineering team for choosing Doyensec and working with us to ensure a successful project execution.

We now live in an era where the security of all layers of the software stack is immensely important, and simply open sourcing a code base is not enough to ensure that security vulnerabilities surface and are addressed. At Gravitational, we see it as a necessity to engage a third party that specializes in acting as an adversary, and provide an independent analysis of our sources.

This year, we had an opportunity to work with Doyensec, which provided the most thorough independent analysis of Gravity and Teleport to date. The Doyensec team did an amazing job at finding areas where we are weak in the Gravity code base. Here is the full report for Teleport and Gravity; and you can find all of our security audits here.

Gravity has a lot of moving components. As a Kubernetes distribution and distributed system for delivering Kubernetes in many unique environments, the product’s attack surface isn’t small.

All flaws considered medium or higher except for one were patched and released as they were reported by the Doyensec team, and we’ve also been working towards addressing the more minor and informational issues as part of our normal release process. Out of the four vulnerabilities rated as high by Doyensec, we’ve managed to patch three of them, and the fourth relies on a significant investment in design and tooling change which we’ll go into in a moment.

Part of what Gravity does is package applications into an installer that can be taken to on-prem and air-gapped environments, installing a fully working Kubernetes cluster and application without dependencies. As such, we build our artifacts as a tar file - a virtually universally supported archive format.

Along with this, our own tooling is able to process and accept these tar archives, which is where we run into problems. Golang’s tar handling code is extremely basic and this allows very old tar handling problems to surface, granting specially crafted tar files the ability to overwrite arbitrary system files and allowing for remote code execution. Our tar handling has now been hardened against such vulnerabilities, and we’ll write a post digging into just this topic soon.
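The classic tar problem is an archive member whose name escapes the extraction directory, or a member that is a link or device rather than a plain file. The sketch below illustrates the kind of check involved using Python's standard `tarfile` module. It is not Gravity's Go implementation: `unsafe_members` and `build_demo_archive` are hypothetical helpers, and the demo archive is built in memory.

```python
import io
import os
import tarfile


def unsafe_members(archive, dest):
    """Return names of members that would escape dest or are not plain files/dirs."""
    dest = os.path.realpath(dest)
    flagged = []
    for member in archive.getmembers():
        # Resolve where the member would end up if extracted under dest.
        target = os.path.realpath(os.path.join(dest, member.name))
        inside = os.path.commonpath([dest, target]) == dest
        if not inside or not (member.isfile() or member.isdir()):
            flagged.append(member.name)
    return flagged


def build_demo_archive():
    """Build an in-memory tar with one benign and one path-traversal member."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name in ("app/config.yaml", "../../etc/cron.d/evil"):
            data = b"demo"
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    buf.seek(0)
    return tarfile.open(fileobj=buf, mode="r")


print(unsafe_members(build_demo_archive(), "/tmp/install"))
```

Rejecting the whole archive when anything is flagged is the conservative choice; silently skipping members can leave a partially installed package.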

When using our CLI tools to do single sign-on, we launch a browser for the user to the single sign-on page. This was done by passing a URL from the server to the client to tell it where the SSO page is located.

Someone with access to the server is able to change the URL to a non-http(s) URL and execute programs locally on the CLI host. We’ve implemented sanitization of the URL passed by the server to enforce http(s), and also changed the design of some new features to not require trusting data from a server.
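Enforcing http(s) amounts to parsing the server-supplied value and allow-listing its scheme before anything is handed to the browser launcher. The Python sketch below illustrates the idea only; it is not the Teleport or Gravity code, and `is_safe_sso_url` and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit

ALLOWED_SCHEMES = {"http", "https"}


def is_safe_sso_url(url):
    """Accept only absolute http(s) URLs that carry a host component."""
    try:
        parts = urlsplit(url.strip())
    except ValueError:
        return False
    return parts.scheme.lower() in ALLOWED_SCHEMES and bool(parts.netloc)


for candidate in ("https://sso.example.com/login",
                  "file:///Applications/Calculator.app",
                  "javascript:alert(1)"):
    print(candidate, is_safe_sso_url(candidate))
```

An allow-list is used instead of a deny-list because the set of schemes an operating system may associate with local programs is open-ended.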

Perhaps the most embarrassing issue in this list - the API endpoints responsible for managing API tokens were missing authorization ACLs. This allowed for any authenticated user, even those with empty permissions, to access, edit, and create tokens for other users. This would allow for user impersonation and privilege escalation. This vulnerability was quickly addressed by implementing the correct ACLs, and the team is working hard to ensure these types of vulnerabilities do not reoccur.

This is the vulnerability we haven’t been able to address so far, as it was never a design objective to protect against this particular vulnerability.

Gravity includes a hub product for enterprise customers that allows for the storage and download of application assets, either for installation or upgrade. In essence, part of the hub product is to act as a file server where a company can store their application, and internally or publicly connect deployed clusters for updates.

The weakness in the model, as has been seen by many public artifact repositories, is that this security model relies on the integrity of the system storing those assets.

While not necessarily a vulnerability on its own, this is a design weakness that doesn’t match the capabilities the security community expects. The security is roughly equivalent to posting a binary build to Github - anyone with the correct access can modify or post malicious assets, and anyone who trusts Github when downloading that asset could be getting a malicious asset. Instead, packages should be signed in some way before being posted to a public download server, and the software should have a method for trusting that updates and installs come from a trusted source.

This is a really difficult problem that many companies have gotten wrong, so it’s not something that Gravitational as an organization is willing to rush a solution for. There are several well known models that we are evaluating, but we’re not at a stage where we have a solution that we’re completely happy with.

In this realm, we’re also going to end-of-life the hub product as the asset storage functionality is not widely used. We’re also going to move the remote access functionality that our customers do care about over to our Teleport product.

As we mentioned in the Teleport 4.2 release notes, the most serious issues were centered around the incorrect handling of session data. If an attacker was able to gain valid x509 credentials of a Teleport node, they could use the session recording facility to read/write arbitrary files on the Auth Server or potentially corrupt recorded session data.

These vulnerabilities could only be exploited using credentials from a previously authenticated client. There was no known way to exploit them from outside the cluster by non-authenticated clients.

After the re-assessment, all issues with any direct security impact were addressed. From the report:

In January 2020, Doyensec performed a retesting of the Teleport platform and confirmed the effectiveness of the applied mitigations. All issues with direct security impact have been addressed by Gravitational.

Even though all direct issues were mitigated, there was one issue in the report that continued to bother us and that we felt we could do better on: “#6: Session Recording Bypasses”. This is something we had known about for quite some time and something we have been upfront about with users and customers. Session recording is a great feature; however, due to the inherent complexity of the problem being solved, bypasses do exist.

Teleport 4.2 introduced a new feature called Enhanced Session Recording that uses eBPF tooling to substantially reduce the bypass gaps that can exist. We’ll have more to share on that soon in the form of another blog post that will go into the technical implementation details for that feature.