
This is the first in a series of non-technical blog posts discussing the opportunities and challenges that arise when running a small information security consulting company. After all, day-to-day life at Doyensec is not only about computers and stories of breaking bits.

The pandemic has deeply affected standard office work and forced us to change our habits immediately. In all probability, no one could have predicted that the office would suddenly be “moved” and that its new location would be the living room. Remote work has been a hot topic for many years; however, the current situation has certainly accelerated its adoption and forced companies to make a change.

At Doyensec, we’ve been a 100% remote company since day one. In this blog post, we’d like to present our best practices and also list some of the myths which surround the idea of remote work. This article is based on our personal experience and will hopefully help the reader to work at home more efficiently. There are no magic recipes here, just a collection of things that work for us.

The most effective solution is to work in a separate, dedicated room, which automatically becomes your office. It is important to physically separate the workplace from the rest of the house in some way, e.g. with a screen, small bookcase or curtain. The worst thing you can do is work on the couch or bed where you usually rest. We try not to review source code from the place where we normally eat snacks, or debug an application in the same place we sleep. If possible, work at a desk. It will also be easier for you to mobilize yourself for a specific activity. Also, make sure that the members of your household, especially young children, do not play in your “office area”. It is best if this “home office space” belongs exclusively to you.

Prepare a desk with adequate lighting and a comfortable chair. We emphasize the need for a functional, ergonomic chair, and not simply an armchair. It’s about working effectively. The time to relax will come later. Arrange everything so that you work with ease. Notebooks and other materials should be kept tidy on the desk. This will be a clear, distinguishing feature of the workplace. Family members should know that this is a work area from the way it looks. It will be easier for them to get used to the fact that, instead of “going to work,” you perform your work-related responsibilities at home. This setup also gives you an opportunity to make security testing more efficient, for example by setting up bigger screens and ready-to-use testing equipment.

Flexible working time can be treacherous. There are times when an eight-hour working day is sufficient to complete an important project. On the other hand, there are situations where various distractions take attention away from an assigned task. To avoid this type of scenario, establish fixed working hours. For example, some Doyensec employees use the BeFocused and Timing apps to regulate their time. Intuitive and user-friendly applications will help you balance your private and professional life and will also remind you when it’s time to take a break. Working long hours with no breaks is a major source of burnout.

Traditional work is usually based on a structured day spent in an office environment. The day is organized into work sessions and breaks. When working at home, on the other hand, time for non-work-related responsibilities must be allotted on a more subjective basis. It is important for the routine to be elastic enough to include breaks for everything from physical activity (walks) to shopping (groceries) and social interaction. Leaving the house regularly is very beneficial. A break will bring a refreshed perspective. The current pandemic is obviously the reason why people spend more time inside. Outdoor physical activities are very important to keep our minds fresh, and a fresh dose of endorphins is always welcome. As anecdotal evidence, our best bugs are usually discovered after a run or a walk outside!

While this sounds like simple and intuitive advice, avoiding distractions is actually really difficult! In general, it’s good to turn off notifications on your computer or phone, especially while working. We trust our people and they don’t have to be immediately 100% reachable when working. As long as our consultants provide updates and results when needed, it is perfectly fine to shut down email and other communication channels. Depending on personal preference, some individuals require complete silence, while others can accomplish their work while listening to music. If you belong to the category of people who cannot work in absolute silence but find normal music levels too intense, consider using white noise. There are applications available that you can use to create a neutral soundtrack that helps you concentrate. You can easily follow our recommendation on Spotify: something calm, maybe jazz or classical.

Let’s now talk about some myths related to remote work:

At Doyensec, we have successfully delivered hundreds of projects that were done exclusively remotely. If we are delivering a small project, we usually allocate one security researcher who is able to start the project from scratch and produce a high-quality deliverable, but sometimes we have 2-3 consultants working on the same engagement and the outcome is of the same quality. Most of our communication goes through (PGP-encrypted) emails. An instant messenger can help a great deal when answers are needed quickly. The real challenge is in hiring the right people who can control the project regardless of their physical location. When hiring people for our company, we look at both technical and project management skills. According to Jason Fried and David Heinemeier Hansson, co-founders of 37signals, you shouldn’t hire people you don’t trust (Remote). We totally agree with this statement.

The obvious fact is that it is easier to learn when a colleague is physically in the same office and not on the other side of the screen, but we have learned to deal with this problem. Part of our organizational culture is a “screen sharing session” where two people working on the same project analyze source code and look for vulnerabilities together. During our weekly meetings, we also organize a session called “best bugs” where we all share the most interesting findings from a given week.

If a person is not able to organize their work day properly, it is easy to drag out the work day from early in the morning to midnight instead of completing everything within the expected eight hours. Self-discipline and iterative improvements are the key to an effective day. Work/life balance is important, but who said that forcing a 9am-5pm schedule is the best way to work? Wouldn’t it be better to visit a grocery store or a gym in the middle of the day when no one is around and finish work in the evening?

Healthy remote companies rely on trust. If they didn’t then they wouldn’t offer remote work opportunities in the first place. People working at home carry out their duties like everyone else. In fact, planning activities such as gym-workouts, family time, and hobbies is much easier thanks to the flexible schedule. You can freely organize your work day around important family matters or other responsibilities if necessary.

Companies should focus on having better hiring processes and ensuring long-term retention instead of being overly concerned about the risk of “remote slacking”. In fact, our experience over the past four years suggests that it is more challenging to ensure a healthy work/life balance, since our researchers are sufficiently motivated and love what they do.

It should be understood that not all remote work is the same. If you work in customer service and receive regular calls from customers, for example, you might be working from a confined space in a separate room at home. Remote work means working outside the employer’s office. It can mean working in a co-working space, cafeteria, hotel or any other place where you have a good Internet connection.

This one is a bit tricky since it’s technically true and false. It’s true that you usually sit at home and work alone, but in our security work we’re constantly exchanging information via e-mails, Mattermost, Signal, etc. We also have Hangouts video meetings where we can sync up. If someone feels personally isolated, we always recommend signing up for some activities like a gym, book club or other options where like-minded people associate. Lonely individuals are less productive over the long run. Compared to the traditional office model, remote work requires looking for friends and colleagues outside the company - which isn’t a bad thing after all.

We strongly believe that there are people who will still prefer an onsite job. Some individuals need constant contact with others. They also prefer the standard 9am-5pm work schedule. There is nothing wrong with that. People who work remotely have to make more decisions on their own and need stronger self-discipline. Since they cannot consult co-workers face to face, direct communication is reduced. Nevertheless, remote work will become something “normal” for an increasing number of people, especially for Generations Y and Z.

The Wi-Fi Direct specification (a.k.a. “peer-to-peer” or “P2P” Wi-Fi) turned 10 years old this past April. This 802.11 extension has been available since Android 4.0 through a dedicated API that interfaces with a device’s built-in hardware, allowing devices to connect directly to each other via Wi-Fi without an intermediate access point. Multiple mobile vendors and early adopters of this technology quickly leveraged the standard to provide their products with a fast and reliable file transfer solution.

After almost a decade, the vast majority of mobile OEMs still rely on custom, locked-in implementations for file transfer, even if large cross-vendor alliances (e.g. the “Peer-to-Peer Transmission Alliance”) and big players like Google (with the recent “Nearby Share” feature) are moving to change this in the near future.

During our research, three popular P2P file transfer implementations were studied (namely Huawei Share, LG SmartShare Beam, Xiaomi Mi Share) and all of them were found to be vulnerable due to an insecure shared design. While some groundbreaking research work attacking the protocol layer has already been presented by Andrés Blanco during Black Hat EU 2018, we decided to focus on the application layer of this particular class of custom UPnP service.

This blog post will cover the following topics:

In the majority of OEM solutions, mobile file transfer applications will spawn two servers:

These two services are used for device discovery, pairing and sessions, authorization requests, and file transport functions. Usually they are implemented as classes of a shared parent application which orchestrate the entire transfer. These components are responsible for:

/description.xml), and events subscription

An important consideration for the following abuses is that after a P2P Wi-Fi connection is established, its network interface (p2p-wlan0-0) is available to every application running on the user’s device having android.permission.INTERNET. Because of this, local apps can interact with the FTS and FTC services spawned by the file sharing applications on the local or remote device clients, opening the door to a multitude of attacks.

SmartShare is a stock LG solution to connect its phones to other devices using Wi-Fi (DLNA, Miracast) or Bluetooth (A2DP, OPP). The Beam feature is used for file transfer among LG devices.

Just like other similar applications, an FTS (FileTransferTransmitter in com.lge.wfds.service.send.tx) and an FTC (FileTransferReceiver in com.lge.wfds.service.send.rx) are spawned, listening on ports 54003 and 55003.

By way of example, the following HTTP requests demonstrate the FTC and the FTS in action whenever a file transfer session between two parties is requested. First, the FTS performs a CreateSendSession SOAP action:

```http
POST /FileTransfer/control.xml HTTP/1.1
Connection: Keep-Alive
HOST: 192.168.49.1:55003
Content-Type: text/xml; charset="utf-8"
Content-Length: 1025
SOAPACTION: "urn:schemas-wifialliance-org:service:FileTransfer:1#CreateSendSession"

<?xml version="1.0" encoding="UTF-8"?>
<s:Envelope
    xmlns:s="http://schemas.xmlsoap.org/soap/envelope/" s:encodingStyle="http://schemas.xmlsoap.org/soap/encoding/">
    <s:Body>
        <u:CreateSendSession
            xmlns:u="urn:schemas-wifialliance-org:service:FileTransfer:1">
            <Transmitter>Doyensec LG G6 Phone</Transmitter>
            <SessionInformation>&lt;?xml version="1.0" encoding="UTF-8"?&gt;&lt;MetaInfo
                xmlns="urn:wfa:filetransfer"
                xmlns:xsd="http://www.w3.org/2001/XMLSchema"
                xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="urn:wfa:filetransfer http://www.wi-fi.org/specifications/wifidirectservices/filetransfer.xsd"&gt;&lt;Note&gt;1 and 4292012bytes File Transfer&lt;/Note&gt;&lt;Size&gt;4292012&lt;/Size&gt;&lt;NoofItems&gt;1&lt;/NoofItems&gt;&lt;Item&gt;&lt;Name&gt;CuteCat.jpg&lt;/Name&gt;&lt;Size&gt;4292012&lt;/Size&gt;&lt;Type&gt;image/jpeg&lt;/Type&gt;&lt;/Item&gt;&lt;/MetaInfo&gt;
            </SessionInformation>
        </u:CreateSendSession>
    </s:Body>
</s:Envelope>
```

The SessionInformation node embeds an entity-escaped standard Wi-Fi Alliance schema, urn:wfa:filetransfer, transmitting a CuteCat.jpg picture.
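To make the “entity-escaped” part concrete, here is a minimal sketch in JavaScript (Node.js) of how a nested XML document can be escaped before being embedded in the SessionInformation node and restored on the receiving side. The helper names escapeXml and unescapeXml are ours, not part of any LG or Wi-Fi Alliance API, and only the four entities shown are handled.

```javascript
// Hypothetical helpers, for illustration only.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;") // must run first so later entities are not double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function unescapeXml(text) {
  return text
    .replace(/&lt;/g, "<")
    .replace(/&gt;/g, ">")
    .replace(/&quot;/g, '"')
    .replace(/&amp;/g, "&"); // must run last so "&amp;lt;" becomes "&lt;", not "<"
}

// Shortened, made-up MetaInfo fragment embedded as text inside the outer SOAP body.
const metaInfo = "<Item><Name>CuteCat.jpg</Name><Type>image/jpeg</Type></Item>";
const embedded = `<SessionInformation>${escapeXml(metaInfo)}</SessionInformation>`;
console.log(embedded);
```

Because the receiver unescapes this value and then displays parts of it, anything the sender places in the inner document should be treated as untrusted input.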

The file name (MetaInfo/Item/Name) is displayed in the file transfer prompt to show the final recipient the name of the transmitted file. By design, after the recipient’s confirmation, a CreateSendSessionResponse SOAP response will be returned:

```http
HTTP/1.1 200 OK
Date: Sun, 01 Jun 2020 12:00:00 GMT
Connection: Keep-Alive
Content-Type: text/xml; charset="utf-8"
Content-Length: 404
EXT:
SERVER: UPnPServer/1.0 UPnP/1.0 Mobile/1.0

<?xml version="1.0" encoding="UTF-8"?>
<s:Envelope
    xmlns:s="http://schemas.xmlsoap.org/soap/envelope/" s:encodingStyle="http://schemas.xmlsoap.org/soap/encoding/">
    <s:Body>
        <u:CreateSendSessionResponse
            xmlns:u="urn:schemas-wifialliance-org:service:FileTransfer:1">
            <SendSessionID>33</SendSessionID>
            <TransportInfo>tcp:55432</TransportInfo>
        </u:CreateSendSessionResponse>
    </s:Body>
</s:Envelope>
```

This will contain the TransportInfo destination port that will be used for the final transfer:

```http
PUT /CuteCat.jpeg HTTP/1.1
User-Agent: LGMobile
Host: 192.168.49.1:55432
Content-Length: 4292012
Connection: Keep-Alive
Content-Type: image/jpeg

.... .Exif..MM ...<redacted>
```

Unfortunately, this design suffers from many issues, such as:

- CreateSendSessionResponse is issued, no authentication is required to push a file to the opened RX port. Since the DEFAULT_HTTPSERVER_PORT for the receiver is hardcoded to be 55432, any application running on the sender’s or recipient’s device can hijack the transfer and push an arbitrary file to the victim’s storage, just by issuing a valid PUT request. On top of that, the current Session IDs are easily guessable, since they are randomly chosen from a small pool (WfdsUtil.randInt(1, 100));
- PUT request path to an arbitrary value.
- DEFAULT_HTTPSERVER_PORT) is opened, it is possible for an attacker to send multiple files in a single transaction, without prompting any notification to the recipient.

Because of the above design issues, any malicious third-party application installed on one of the peers’ devices may influence or take over any communication initiated by the legit LG SmartShare applications, potentially hijacking legit file transfers. A wormable malicious application could abuse this insecure design to flood the local or remote victim waiting for a file transfer, effectively propagating its malicious APK without user interaction required. An attacker could also abuse this design to implant arbitrary files or evidence on a victim’s device.
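To put the size of that Session ID pool into perspective, the sketch below (JavaScript on Node.js) simply enumerates every candidate. weakSessionId is a hypothetical stand-in for WfdsUtil.randInt(1, 100), assuming both bounds are inclusive, and isValid stands for whatever check the receiver performs; neither is taken from the LG code.

```javascript
const { randomInt } = require("node:crypto");

// Hypothetical stand-in for WfdsUtil.randInt(1, 100); bounds assumed inclusive.
function weakSessionId() {
  return randomInt(1, 101); // crypto.randomInt excludes the upper bound
}

// Try every ID in the pool and report how many attempts were needed.
function enumerateSessionIds(isValid, poolSize = 100) {
  for (let guess = 1; guess <= poolSize; guess++) {
    if (isValid(guess)) return { sessionId: guess, attempts: guess };
  }
  return null;
}

const secret = weakSessionId();
const found = enumerateSessionIds((id) => id === secret);
console.log(`recovered session ID ${found.sessionId} after ${found.attempts} attempts`);
```

However good the random source is, a pool of 100 values falls to at most 100 requests, so the size of the identifier space matters more here than the generator.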

Huawei Share is another file sharing solution included in Huawei’s EMUI operating system, supporting both Huawei terminals and those of its second brand, Honor.

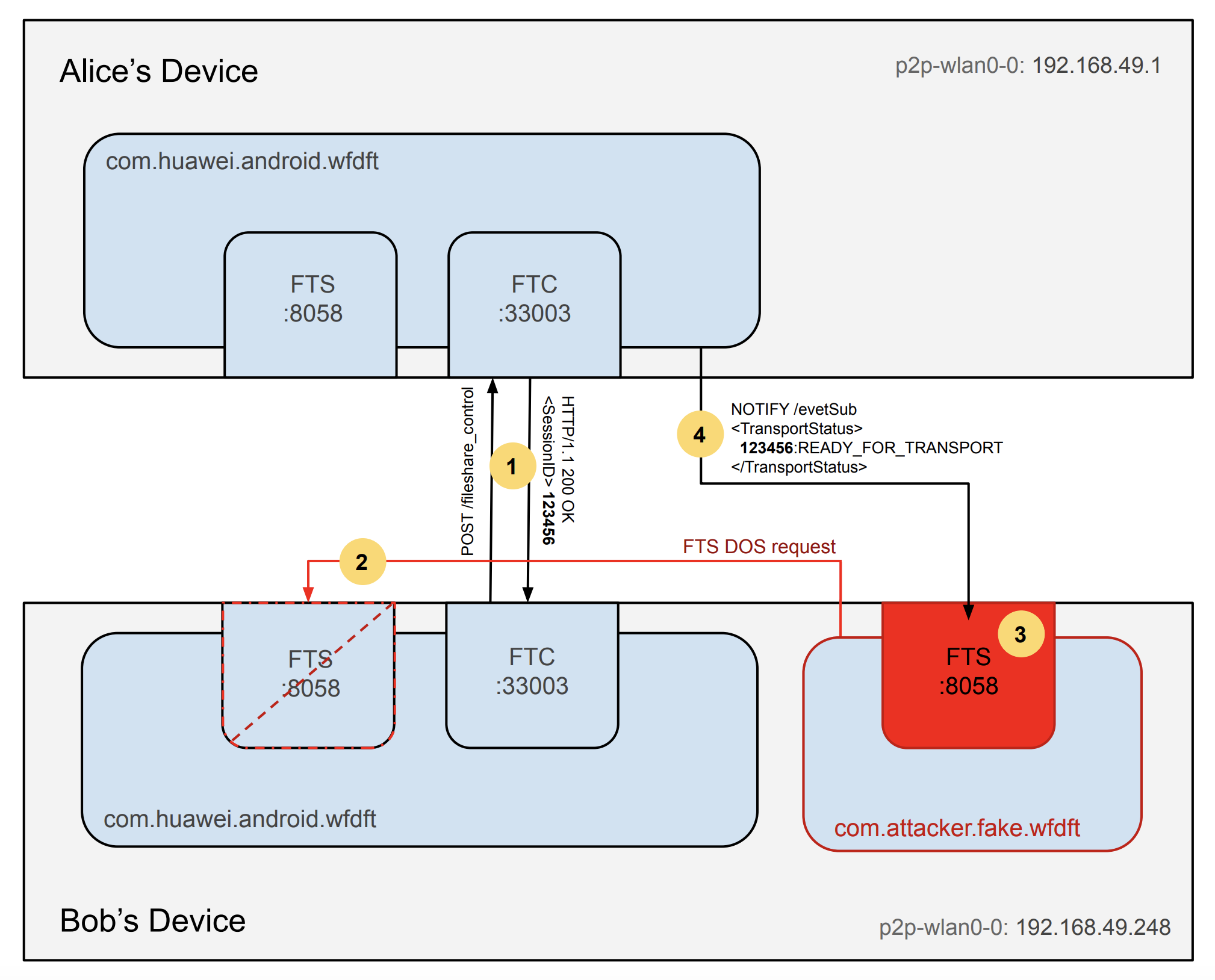

In Huawei Share, an FTS (FTSService in com.huawei.android.wfdft.fts) and an FTC (FTCService in com.huawei.android.wfdft.ftc) are spawned and listening on ports 8058 and 33003.

On a high level, the Share protocol resembles the LG SmartShare Beam mechanism, but without the same design flaws.

Unfortunately, the stumbling block for Huawei Share is the stability of the services: multiple HTTP requests that could respectively crash the FTCService or FTSService were identified. Since the crashes could be triggered by any third-party application installed on the user’s device and because of the UPnP General Event Notification Architecture (GENA) design itself, an attacker can still take over any communication initiated by the legit Huawei Share applications, stealing Session IDs and hijacking file transfers.

In the replicated attack scenario, Alice and Bob’s devices are connected and paired on a Wi-Fi Direct connection. Bob also unwittingly runs a malicious application with little or no privileges on his device.

In this scenario, Bob initiates a file share through Huawei Share (1). His legit application will, therefore, send a CreateSession SOAP action through a POST request to Alice’s FTCService to get a valid SessionID, which will be used as an authorization token for the rest of the transaction. During a standard exchange, after Alice accepts the transfer on her device, a file share event notification (NOTIFY /evetSub) will fire to Bob’s FTSService. The FTSService will then be used to serve the intended file.

```http
NOTIFY /evetSub HTTP/1.1
Content-Type: text/xml; charset="utf-8"
HOST: 192.168.49.1
NT: upnp:event
NTS: upnp:propchange
SID: uuid:e9400170-a170-15bd-802e-165F9431D43F
SEQ: 1
Content-Length: 218
Connection: close

<?xml version="1.0" encoding="utf-8"?>
<e:propertyset xmlns:e="urn:schemas-upnp-org:event-1-0">
    <e:property>
        <TransportStatus>1924435235:READY_FOR_TRANSPORT</TransportStatus>
    </e:property>
</e:propertyset>
```

Since an inherent time span exists between the manual acceptance of the transfer by Alice and its start, the malicious application could perform a request with an ad-hoc payload to trigger a crash of FTSService (2) and subsequently bind its own FTSService to the same port (3). Because of the UPnP event subscription and notification protocol design, the NOTIFY event including the SessionID (1924435235 in the example above) can now be intercepted by the fake FTSService (4) and used by the malicious application to serve arbitrary files.
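The interception step needs very little code. As a rough sketch in JavaScript, this is how a listener that received the NOTIFY body shown earlier could split the TransportStatus value into the SessionID and the state. parseTransportStatus is a hypothetical helper, and the regular expression assumes the exact SessionID:STATE layout seen in the capture.

```javascript
// Hypothetical helper: extracts "<SessionID>:<STATE>" from a GENA property set.
function parseTransportStatus(notifyBody) {
  const match = /<TransportStatus>(\d+):([A-Z_]+)<\/TransportStatus>/.exec(notifyBody);
  if (!match) return null;
  return { sessionId: match[1], status: match[2] };
}

const body = [
  '<?xml version="1.0" encoding="utf-8"?>',
  '<e:propertyset xmlns:e="urn:schemas-upnp-org:event-1-0">',
  "<e:property>",
  "<TransportStatus>1924435235:READY_FOR_TRANSPORT</TransportStatus>",
  "</e:property>",
  "</e:propertyset>",
].join("\n");

const parsed = parseTransportStatus(body);
console.log(parsed);
```

Nothing in the notification ties it to the legitimate FTSService, so whichever process is bound to the port when the event fires learns the token.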

The crashes are undetectable both to the device’s user and to the file recipient. Multiple crash vectors using malformed requests were identified, making the service systemically weak and exploitable.

Introduced with MIUI 11, Xiaomi’s MiShare offers AirDrop-like file transfer features between Mi and Redmi phones. Recently this feature was extended to be compatible with devices produced by members of the “Peer-to-Peer Transmission Alliance” (including vendors with over 400M users such as Xiaomi, OPPO, Vivo, Realme, Meizu).

Due to this transition, MiShare internally features two different sets of APIs:

The WebSocket-based API is currently used by default for transfers between Xiaomi devices, and this is the one we assessed. As in other P2P solutions, several minor design and implementation bugs were identified:

The JSON-encoded parcel sent via WSS specifying the file properties is trusted, and its fileSize parameter is used to check whether enough space is left on the device. Since this is the sender’s declared file size, a Denial of Service (DoS) exhausting the remaining space is possible.
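One way to avoid trusting the declared size is to enforce it while the data is being received. The following JavaScript sketch is not MiShare code; receiveFile, its parameters and the in-memory chunk list are assumptions made for illustration.

```javascript
// Hypothetical receiver-side check; not taken from MiShare.
function receiveFile(declaredSize, freeBytes, chunks) {
  if (!Number.isSafeInteger(declaredSize) || declaredSize < 0) {
    throw new RangeError("invalid declared size");
  }
  if (declaredSize > freeBytes) {
    throw new RangeError("not enough free space for the declared size");
  }
  let received = 0;
  const parts = [];
  for (const chunk of chunks) {
    received += chunk.length;
    // Abort as soon as the sender goes past what it declared.
    if (received > declaredSize) {
      throw new RangeError("sender exceeded the declared size");
    }
    parts.push(chunk);
  }
  if (received !== declaredSize) {
    throw new RangeError("transfer ended before the declared size was reached");
  }
  return Buffer.concat(parts);
}

const file = receiveFile(4, 1024, [Buffer.from("ab"), Buffer.from("cd")]);
console.log(file.toString());
```

The space check alone is not enough: it is the running byte count that stops a sender that declares a small file and then keeps writing.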

Session tokens (taskId) are 19 digits long, and a weak source of entropy (java.util.Random) is used to generate them.
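java.util.Random is a linear congruential generator with a 48-bit internal state, so its future outputs can be predicted once enough of its output has been observed. A hedged sketch of the alternative, written in JavaScript: draw each digit from the platform CSPRNG. secureTaskId is a hypothetical name, and on Android the equivalent source would be java.security.SecureRandom.

```javascript
const { randomInt } = require("node:crypto");

// Hypothetical helper: a decimal token of the given length from a CSPRNG.
function secureTaskId(digits = 19) {
  let id = String(randomInt(1, 10)); // first digit is 1-9 so the length is fixed
  while (id.length < digits) {
    id += String(randomInt(0, 10)); // remaining digits are 0-9
  }
  return id;
}

console.log(secureTaskId());
```

A 19-digit decimal token carries roughly 63 bits, which is only useful if those bits are unpredictable.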

Just like the other presented vendor solutions, any third-party application installed on the user’s device can meddle with MiShare’s exchange. While several DoS payloads crashing MiShare are also available, for this vendor the file transfer service is restarted very quickly, making the window of opportunity for an attack very limited.

On a brighter note, the Mi Share protocol design was hardened using per-session TLS certificates when communicating through WSS and HTTPS, limiting the exploitability of many security issues.

Some of the attacks described can be easily replicated in other existing mobile file transfer solutions. While the core technology has always been there, OEMs still struggle to defend their own P2P sharing flavors. Other common vulnerabilities found in the past include similar improper access control issues, path traversals, XML External Entity (XXE), improper file management, and monkey-in-the-middle (MITM) of the connection.

All vulnerabilities briefly described in this post were responsibly disclosed to the respective OEM security teams between April and June 2020.

We’re very happy to announce that a new major release of InQL is now available on our Release Page.

If you’re not familiar, InQL is a security testing tool for GraphQL technology. It can be used as a stand-alone script or as a Burp Suite extension.

By combining InQL v3 features with the ability to send query templates to Burp’s Repeater, we’ve made it very easy to exploit vulnerabilities in GraphQL queries and mutations. This drastically lowers the bar for security research against GraphQL tech stacks.

Here’s a short intro for major features that have been implemented in version 3.0:

InQL now leverages an internal introspection intermediate representation (IIR) to use details obtained from type introspection and generate arbitrarily nested queries with support for any scalar types, enumerations, arrays, and objects. IIR enables seamless “Send to Repeater” functionality from the Scanner to the other tool components (Repeater and GraphQL console).

The new IIR allows us to inspect cycles in defined GraphQL schemas simply by using access to GraphQL introspection-enabled endpoints. In this context, a cycle is a path in the GraphQL schema that uses recursive objects in a way that leads to unlimited nesting. The detection of cycles is incredibly useful and automates tedious testing procedures by employing graph-solving algorithms. In some of our past client engagements, this tool was able to find millions of cycles in a matter of minutes.
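The idea of a cycle can be illustrated with a depth-first search over a graph whose nodes are GraphQL types and whose edges are fields returning other object types. This JavaScript sketch is not InQL’s implementation: findCycles and the toy schema are assumptions, and the search reports one cycle per back edge rather than every possible cycle.

```javascript
// typeGraph: Map from type name to the object types its fields return.
function findCycles(typeGraph) {
  const cycles = [];
  const path = [];          // types on the current DFS path, in order
  const onPath = new Set(); // same content as path, for constant-time lookups
  const finished = new Set();

  function visit(type) {
    if (onPath.has(type)) {
      // Back edge: the part of the path starting at `type` closes a cycle.
      cycles.push([...path.slice(path.indexOf(type)), type]);
      return;
    }
    if (finished.has(type)) return;
    onPath.add(type);
    path.push(type);
    for (const next of typeGraph.get(type) || []) visit(next);
    path.pop();
    onPath.delete(type);
    finished.add(type);
  }

  for (const type of typeGraph.keys()) visit(type);
  return cycles;
}

// Toy schema: Query.user -> User, User.posts -> Post, Post.author -> User.
const schema = new Map([
  ["Query", ["User"]],
  ["User", ["Post"]],
  ["Post", ["User"]],
]);
const cycles = findCycles(schema);
console.log(cycles.map((cycle) => cycle.join(" -> "))); // one cycle: User -> Post -> User
```

A query that keeps following such a cycle (user, posts, author, posts, and so on) can be nested to any depth, which is what makes these paths interesting for DoS testing.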

InQL 3.0.0 has an integrated Query Timer. This Query Timer is a reimagining of Request Timer that can filter by query name and body. The Query Timer is enabled by default and is especially useful in conjunction with the Cycles detector. A tester can switch between the GraphQL editor modes (Repeater and GraphiQL) to identify DoS queries. The Query Timer demonstrates the ability to attack such vulnerable GraphQL endpoints by measuring each query’s execution time.

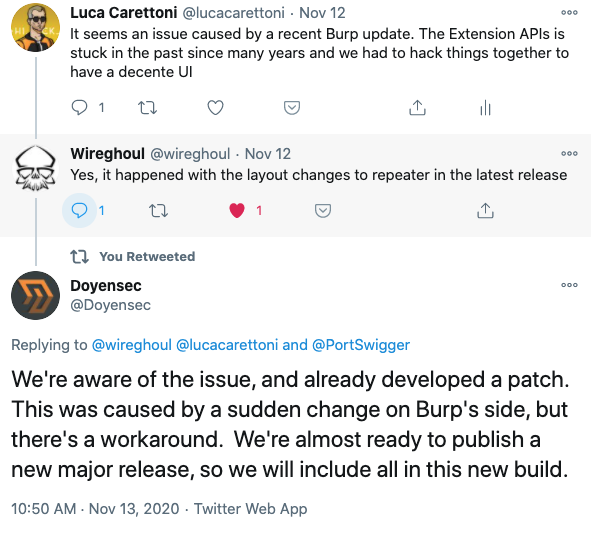

We’re really thankful to all of you for reporting issues in our previous releases. We have implemented various fixes for functional and UX bugs, including a tricky bug caused by a sudden Burp Suite change in the latest 2020.11 update.

We’re excited to see the community embracing InQL as the “go-to” standard for GraphQL security testing. More features are on the way, so keep your requests and bug reports coming via our GitHub Issues page. Your feedback is much appreciated!

This project was made with love in the Doyensec Research Island.

As part of my research at Doyensec, I spent some time trying to understand current fuzzing techniques that could be leveraged against popular JavaScript engines (JSEs), with a focus on V8. Note that I had no prior experience with fuzzing JSEs when I started this journey.

My experimentation started with a context-free grammar (CFG) generator: Dharma. I quickly realized that the grammar rules for generating valid JavaScript code that does something interesting are too complicated. Type confusion and JIT engine bugs were my primary focus, however, most of the generated code was syntactically incorrect. Every statement was wrapped in a try/catch block to deal with the incorrect code. After a few days of fuzzing, I was only able to find out-of-memory (OOM) bugs. If you want to read more about V8 JIT and Dharma, I recommend this thoughtful research.

Dharma allows you to specify three sections for various purposes. The first one is called variable and lets you define variables that are later used in the value section. The last one, variance, is commonly used to specify the starting symbol for expanding the CFG tree.

The linkage is implemented inside the value section. A nice feature of Dharma is that here you only define the assignment rules or function invocations, and the variables are automatically created when needed. However, if we assign a variable of type A to one of a different type B, we have to include all the type A rules inside the type B object.

Here is an example of such a rule:

try { !TYPEDARRAY! = !ARRAYBUFFER!.slice(!ANY_FUNCTION!, !ANY_FUNCTION!) } catch (e) {};

As you can imagine, without writing an additional library, the code quickly becomes complicated and clumsy.
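To show what expanding such a rule involves, here is a minimal grammar expander in JavaScript. It is not Dharma’s file format or API; it only borrows the !NAME! reference notation from the rule above. expand, randomPick and the four-symbol grammar are all made up for this illustration.

```javascript
// Default chooser: uniform random index. Injectable so runs can be reproduced.
const randomPick = (count) => Math.floor(Math.random() * count);

// grammar: Map from symbol name to a list of alternative templates.
// Templates reference other symbols as !NAME!.
function expand(grammar, symbol, pick = randomPick, depth = 0, maxDepth = 8) {
  const alternatives = grammar.get(symbol);
  if (!alternatives) throw new Error(`unknown symbol: ${symbol}`);
  // Past the depth limit, always take the first alternative, which is
  // assumed to be non-recursive so that expansion terminates.
  const index = depth >= maxDepth ? 0 : pick(alternatives.length);
  return alternatives[index].replace(/!([A-Z_]+)!/g, (_, name) =>
    expand(grammar, name, pick, depth + 1, maxDepth)
  );
}

const grammar = new Map([
  ["STATEMENT", ["try { !TYPEDARRAY! = !ARRAYBUFFER!.slice(!NUMBER!, !NUMBER!) } catch (e) {};"]],
  ["TYPEDARRAY", ["ta0", "ta1"]],
  ["ARRAYBUFFER", ["new ArrayBuffer(!NUMBER!)"]],
  ["NUMBER", ["0", "8", "16"]],
]);

console.log(expand(grammar, "STATEMENT"));
```

Even in this toy form, every new type needs its own symbol and its own alternatives, which hints at how quickly a realistic grammar grows.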

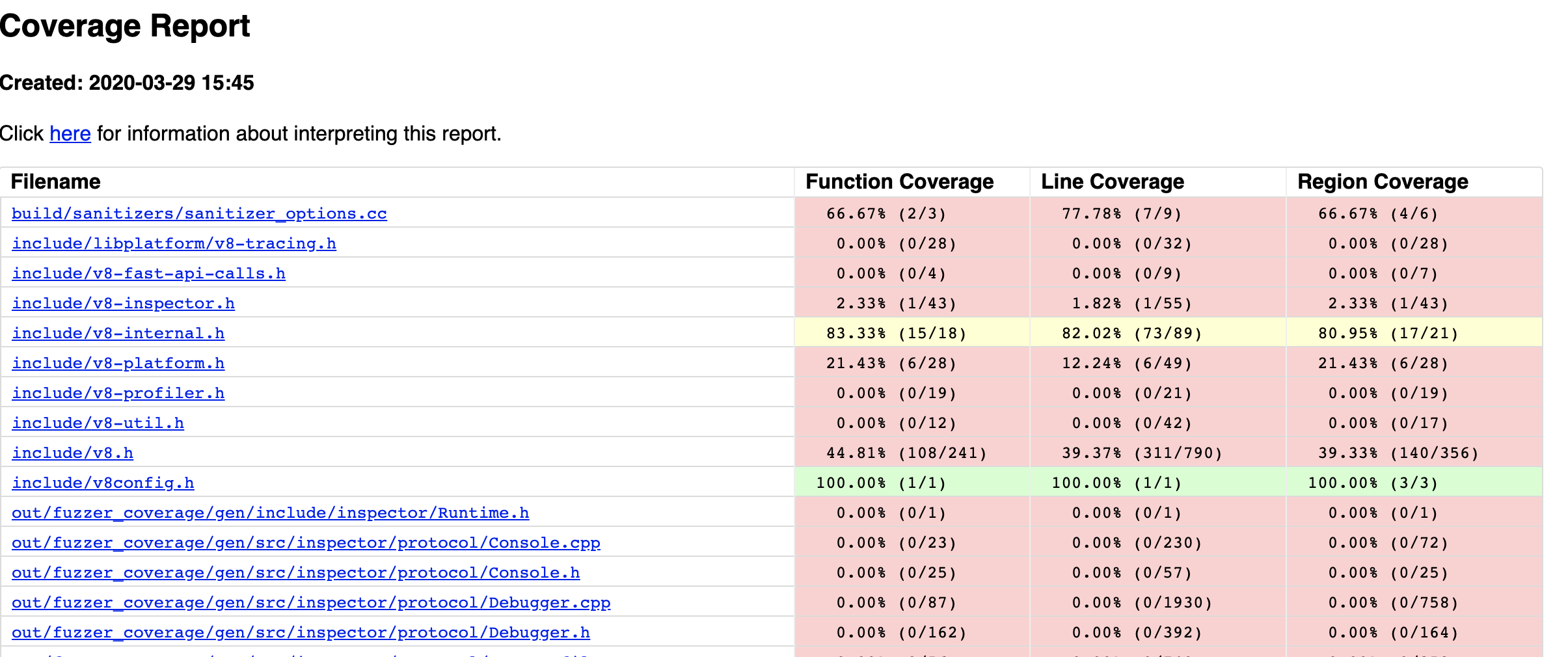

Fuzzing with coverage is mandatory when targeting popular software, as a pure black-box approach barely scratches the attack surface. Coverage can be easily obtained when the binary is compiled with a specific Clang (compiler frontend, part of the LLVM infrastructure) flag. Part of the output can be seen in the accompanying picture. In my case, it was only useful for manual code review and grammar adjustment, as there was no convenient way to implement a mutator on the JavaScript source code.

As an alternative approach, I started to play with Fuzzilli, an incredible and still very underrated fuzzer implemented by Samuel Groß (aka Saelo). Fuzzilli uses an intermediate representation (IR) language called FuzzIL, which is perfectly suited to mutation. Moreover, any program in FuzzIL can always be converted (lifted) to valid JavaScript code.

At that time, the supported targets were V8, SpiderMonkey, and JavaScriptCore. As these engines continuously undergo widespread fuzzing, I instead decided to implement support for a different JavaScript Engine. I was also interested in the communication protocol between the fuzzer and the engine, so I considered expanding this fuzzer to be an excellent exercise.

I decided to add support for JerryScript. In the past years, numerous security issues have been discovered on this target by Fuzzinator, which uses the ANTLR v4 testcase generator Grammarinator. Those bugs were investigated and fixed, so I wanted to see if Fuzzilli could find something new.

The best available high-level documentation about Fuzzilli is Samuel’s Master’s thesis, where it was introduced. I strongly recommend reading it, as this article only summarizes some of its novel ideas.

Many modern fuzzer architectures use a forkserver. The idea behind it is to run the program until the initialization is complete, but before it processes any input. Right after that, the input from the fuzzer is read and passed to a newly forked child. The overhead is low since the initialization ideally occurs only once, or again when a restart is needed (e.g. in the case of continuous memory leaks).

Fuzzilli uses the REPRL (read-eval-print-reset-loop) approach, which saves the overhead caused by fork(); the measured execution per sample can be ~7 times faster. The JavaScript engine is modified to read the input from the fuzzer and, after it executes the sample, the coverage is collected. The crucial part is resetting the state, which is normally (and obviously) not done, as the engine keeps the context of the already defined variables. In contrast with the forkserver, we need a rudimentary knowledge of the engine. It is useful to know how the engine’s string representation is internally implemented in order to feed the input or add additional commands.
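The role of the reset step can be illustrated with an in-process analogy in JavaScript, using the Node.js vm module. This is not how Fuzzilli talks to an engine (the real protocol drives a separate, patched engine process); runSamples is a hypothetical name, and a fresh vm context stands in for the engine’s state reset.

```javascript
const vm = require("node:vm");

// Hypothetical in-process analogy of read-eval-print-reset.
function runSamples(samples) {
  const results = [];
  for (const source of samples) {
    // Reset: every sample gets a brand-new global object.
    const context = vm.createContext({});
    try {
      const value = vm.runInContext(source, context, { timeout: 1000 });
      results.push({ status: "ok", value });
    } catch (err) {
      results.push({ status: "exception", value: String(err) });
    }
  }
  return results;
}

const results = runSamples([
  "var leaked = 41; leaked + 1",
  "typeof leaked", // state from the previous sample must not be visible
  "null.x",        // an exception ends the sample, not the loop
]);
console.log(results);
```

One long-lived process handles every sample, and the only per-sample cost is creating a clean context, which is the trade-off REPRL makes compared with forking.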

LLVM gives a convenient way to obtain the edge coverage. By providing the -fsanitize-coverage=trace-pc-guard compiler flag to Clang, we receive pointers to the start and end of the guard regions, which are then initialized with guard numbers, as described in the LLVM documentation:

```cpp
#include <stdint.h>
#include <stdio.h>

extern "C" void __sanitizer_cov_trace_pc_guard_init(uint32_t *start,
                                                    uint32_t *stop) {
  static uint64_t N;  // Counter for the guards.
  if (start == stop || *start) return;  // Initialize only once.
  printf("INIT: %p %p\n", start, stop);
  for (uint32_t *x = start; x < stop; x++)
    *x = ++N;  // Guards should start from 1.
}
```

The guard regions are included in the JSE target. This means that the JavaScript engine must be modified to accommodate these changes. Whenever a branch is executed, the __sanitizer_cov_trace_pc_guard callback is called. Fuzzilli uses a POSIX shared memory object (shmem) to avoid the overhead when passing the data to the parent process. Shmem represents a bitmap, where the visited edge is set and, after each JavaScript input pass, the edge guards are reinitialized.
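The bookkeeping on the fuzzer side can be sketched as a bitmap with one bit per guard. The JavaScript below is a simplified model, not Fuzzilli’s code: a plain Uint8Array stands in for the shared memory region, and recordEdge and mergeAndCountNew are hypothetical names.

```javascript
// Mark the edge identified by `guard` as visited in the per-run bitmap.
function recordEdge(bitmap, guard) {
  bitmap[guard >> 3] |= 1 << (guard & 7);
}

// Fold one run into the accumulated bitmap, return how many edges were
// seen for the first time, and clear the per-run bitmap for the next sample.
function mergeAndCountNew(seenBitmap, runBitmap) {
  let fresh = 0;
  for (let i = 0; i < runBitmap.length; i++) {
    let novel = runBitmap[i] & ~seenBitmap[i]; // bits set now but never before
    seenBitmap[i] |= runBitmap[i];
    while (novel) {
      fresh += novel & 1;
      novel >>= 1;
    }
  }
  runBitmap.fill(0);
  return fresh;
}

const seen = new Uint8Array(2); // room for 16 guards in this toy example
const run = new Uint8Array(2);

recordEdge(run, 1);
recordEdge(run, 9);
const firstRun = mergeAndCountNew(seen, run);

recordEdge(run, 1);  // already known
recordEdge(run, 10); // new
const secondRun = mergeAndCountNew(seen, run);

console.log(firstRun, secondRun);
```

A sample is worth keeping when the count is non-zero, since it reached at least one edge no earlier sample had reached.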

We are not going to repeat the program generation algorithms, as they are described in detail in the thesis. The surprising fact is that all the programs stem from this simple JavaScript, by cleverly applying multiple mutators:

Object()

To add a new target, several modifications for Fuzzilli should be implemented. From a high level, the REPRL pseudocode is described here.

As we already mentioned, the JavaScript engine must be modified to conform to Fuzzilli’s protocol. To keep the same code standards and logic, we recommend adding a custom command line parameter to the engine. If we run the interpreter without it, it will run normally. Otherwise, it uses the hardcoded descriptor numbers to let the parent know that the interpreter is ready to process our input.

Fuzzilli internally uses a custom command, by default called fuzzilli, which the interpreter should also implement. The first parameter represents the operator - it could be FUZZILLI_CRASH or FUZZILLI_PRINT. The former is used to check if we can intercept the segmentation faults, while the latter (optional) is used to print the output passed as an argument. By design, the fuzzer prevents execution when some checks fail, e.g., the operation FUZZILLI_CRASH is not implemented.

The code is very similar between different targets, as you can see in the patch for JerryScript that we submitted.

For a basic setup, one needs to write a short profile file stored in Sources/FuzzilliCli/Profiles/. Here we can specify additional builtins specific to the engine, arguments, or, thanks to a recent contribution from WilliamParks, also the ECMAScriptVersion.

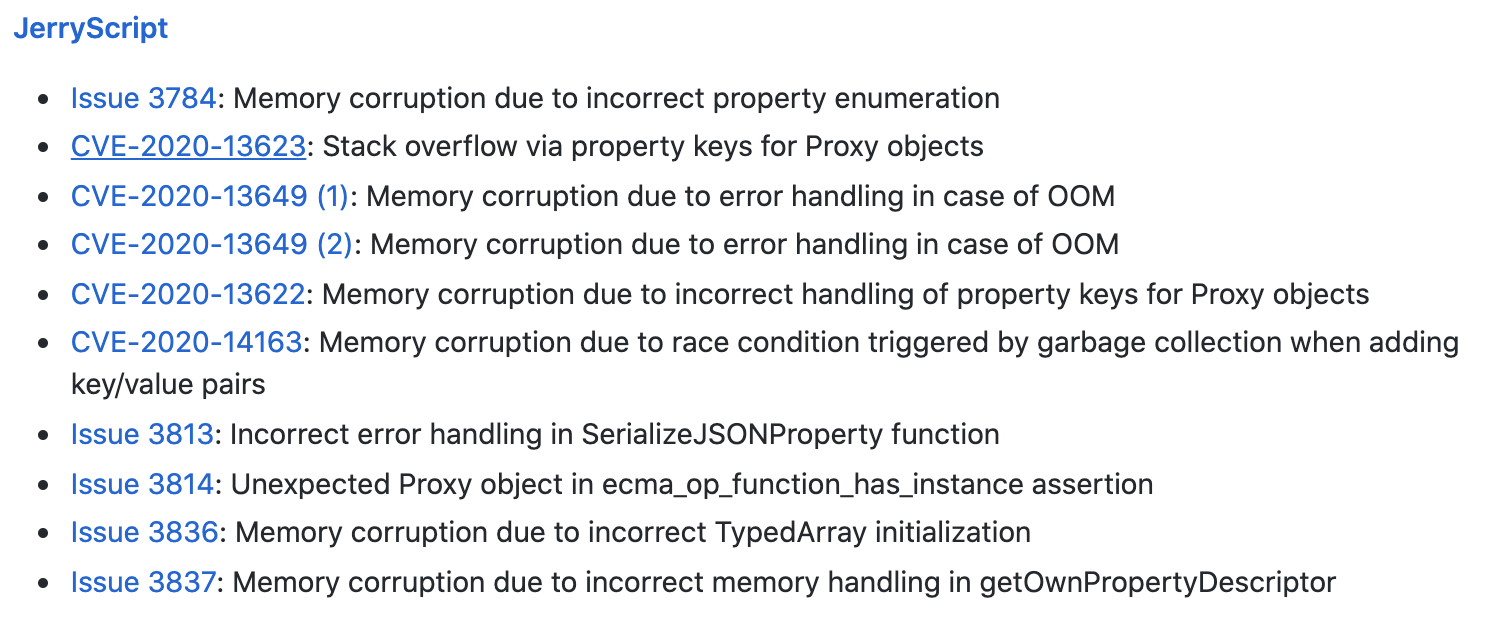

By integrating Fuzzilli with JerryScript, Doyensec was able to identify multiple bugs reported over the course of four weeks through GitHub. All of these issues were fixed.

All issues were also added to the Fuzzilli Bug Showcase:

Fuzzilli is by design efficient against targets with JIT compilers. It can abuse the non-linear execution flow by generating nested callbacks, Prototypes or Proxy objects, where the state of a different object could be modified. Samples produced by Fuzzilli are specifically generated to incorporate these properties, as required for the discovery of type confusion bugs.

This behavior can be easily seen in Issue #3836. As in most cases, the proof of concept generated by Fuzzilli is very simple:

```javascript
function main() {
  var v3 = new Float64Array(6);
  var v4 = v3.buffer;
  v4.constructor = Uint8Array;
  var v5 = new Float64Array(v3);
}
main();
```

This can be rewritten, without changing the semantics, into even simpler code:

```javascript
var v1 = new Float64Array(6);
v1.buffer.constructor = Uint8Array;
new Float64Array(v1);
```

The root cause of this issue is described in the fix.

In JavaScript, when a typed array such as Float64Array is created, its raw binary data buffer can be accessed via the buffer property, which is represented by the ArrayBuffer type. In the proof of concept, however, the buffer’s constructor property was later set to the typed array view Uint8Array. During initialization, the engine expected an ArrayBuffer instead of a typed array. When calling the ecma_arraybuffer_get_buffer function, the typed array pointer was cast to ArrayBuffer. Note that this is possible because asserts are removed from production builds. This caused the type confusion bug on line 196.

Consequently, the destination buffer dst_buf_p contained an incorrect pointer, and the resulting memory corruption is visible in the triage via gdb:

```
Program received signal SIGSEGV, Segmentation fault.
ecma_typedarray_create_object_with_typedarray (typedarray_id=ECMA_FLOAT64_ARRAY, element_size_shift=<optimized out>, proto_p=<optimized out>, typedarray_p=0x5555556bd408 <jerry_global_heap+480>)
    at /home/jerryscript/jerry-core/ecma/operations/ecma-typedarray-object.c:655
655     memcpy (dst_buf_p, src_buf_p, array_length << element_size_shift);
(gdb) x/i $rip
=> 0x55555557654e <ecma_op_create_typedarray+346>: rep movsb %ds:(%rsi),%es:(%rdi)
(gdb) i r rdi
rdi 0x3004100020008 844704103137288
```

Some of the issues, including the one mentioned above, could probably be escalated from Denial of Service to Code Execution. Because of time constraints and the little added value, we have not tried to implement a working exploit.

I want to thank Saelo for including my JerryScript patch into Fuzzilli. And many thanks to Doyensec for the funded 25% research time, which made this project possible.