Fuzzing TLS certificates from their ASN.1 grammar

14 May 2020 - Posted by Filippo Cremonese

A good part of my research time at Doyensec was devoted to building a flexible ASN.1 grammar-based fuzzer for testing TLS certificate parsers. I learned a lot in the process, but I often struggled to find good resources on these topics. In this blog post I want to give a high-level overview of the problem, the approach I’m taking, and some pointers that will hopefully save fellow security researchers a little time.

Let’s start with some basics.

What is a TLS certificate?

A TLS certificate is a DER-encoded object conforming to the ASN.1 grammar and constraints defined in RFC 5280, which is based on the ITU X.509 standard.

That’s a lot of information to unpack, so let’s take it one piece at a time.

ASN.1

ASN.1 (Abstract Syntax Notation One) is a grammar used to define abstract objects. You can think of it as a much older and more complicated version of Protocol Buffers. ASN.1 however does not define an encoding, which is left to other standards. This language was designed by ITU and it is extremely powerful and general purpose.

This is how a message in a chat protocol might be defined:

    Message ::= SEQUENCE {
        senderId     INTEGER,
        recipientId  INTEGER,
        message      UTF8String,
        sendTime     GeneralizedTime,
        ...
    }

At first sight, ASN.1 might even seem quite simple and intuitive. But don’t be fooled! ASN.1 contains a lot of vestigial and complex features. For a start, it has ~13 string types. Constraints can be placed on fields: for instance, integer values and string sizes can be restricted to an acceptable range.

The real complexity beasts, however, are information objects, parametrization and tabular constraints. Information objects allow the definition of templates for data types, together with a grammar for declaring instances of that template (oh yeah… defining a grammar within a grammar!).

This is how a template for different message types could be defined:

    -- Definition of the MESSAGE-CLASS information object class
    MESSAGE-CLASS ::= CLASS {
        &messageTypeId  INTEGER UNIQUE,
        &payload        [1] OPTIONAL,
        ...
    }
    WITH SYNTAX {
        MESSAGE-TYPE-ID &messageTypeId
        [PAYLOAD        &payload]
    }

    -- Definition of some message types
    TextMessageKinds MESSAGE-CLASS ::= {
        -- Text message
        {MESSAGE-TYPE-ID 0, PAYLOAD UTF8String}
        -- Read ACK
      | {MESSAGE-TYPE-ID 1, PAYLOAD SEQUENCE { toMessageId INTEGER } }
    }

    MediaMessageKinds MESSAGE-CLASS ::= {
        -- JPEG
        {MESSAGE-TYPE-ID 2, PAYLOAD OCTET STRING}
    }

Parametrization allows the introduction of parameters in the specification of a type:

    Message {MESSAGE-CLASS : MessageClass} ::= SEQUENCE {
        messageId     INTEGER,
        senderId      INTEGER,
        recipientId   INTEGER,
        sendTime      GeneralizedTime,
        messageTypeId MESSAGE-CLASS.&messageTypeId ({MessageClass}),
        payload       MESSAGE-CLASS.&payload ({MessageClass} {@messageTypeId})
    }

While a complete overview of the format is not within the scope of this post, a very good entry-level, but quite comprehensive, resource I found is this ASN1 Survival guide. The nitty-gritty details can be found in the ITU standards X.680 to X.683.

Powerful as it may be, ASN.1 suffers from a large practical problem: it lacks a wide choice of compilers (parser generators), especially non-commercial ones. Most of them do not implement advanced features like information objects. This means that, more often than not, data structures defined using ASN.1 are serialized and deserialized by handcrafted code instead of an autogenerated parser. This is also true for many libraries handling TLS certificates.

DER

DER (Distinguished Encoding Rules) is an encoding used to translate an ASN.1 object into bytes. It is a simple Tag-Length-Value format: each element is encoded by concatenating its type (tag), the length of the payload, and the payload itself. Its rules ensure there is only one valid representation for any given object, a useful property when dealing with digital certificates that must be signed and checked for anomalies.
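To make the Tag-Length-Value idea concrete, here is a minimal Python sketch that encodes non-constructed INTEGERs and a SEQUENCE wrapping them. The helper names (`der_length`, `der_tlv`, `der_integer`, `der_sequence`) are made up for this illustration and only cover single-byte tags; a real encoder handles many more types and rules.

```python
def der_length(n: int) -> bytes:
    """Encode a payload length: short form below 128, long form otherwise."""
    if n < 0x80:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def der_tlv(tag: int, payload: bytes) -> bytes:
    """Concatenate tag, length and value (single-byte tags only)."""
    return bytes([tag]) + der_length(len(payload)) + payload


def der_integer(value: int) -> bytes:
    """INTEGER (tag 0x02): minimal big-endian two's complement."""
    bits = value.bit_length() if value >= 0 else (~value).bit_length()
    return der_tlv(0x02, value.to_bytes(bits // 8 + 1, "big", signed=True))


def der_sequence(*elements: bytes) -> bytes:
    """SEQUENCE (tag 0x30): the payload is the concatenated encoded elements."""
    return der_tlv(0x30, b"".join(elements))


encoded = der_sequence(der_integer(1), der_integer(300))
print(encoded.hex())  # 30070201010202012c
```

Reading the output left to right: `30 07` opens a 7-byte SEQUENCE, `02 01 01` is the INTEGER 1, and `02 02 01 2c` is the INTEGER 300.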

The details of how DER works are not relevant to this post. A good place to start is here.

RFC 5280 and X.509

The format of the digital certificates used in TLS is defined across several RFCs, most importantly RFC 5280 (and then RFC 5912, which updates the definitions for ASN.1 2002). The specification is based on the ITU X.509 standard.

This is what the outermost layer of a TLS certificate contains:

    Certificate ::= SEQUENCE {
        tbsCertificate       TBSCertificate,
        signatureAlgorithm   AlgorithmIdentifier,
        signature            BIT STRING
    }

    TBSCertificate ::= SEQUENCE {
        version          [0] Version DEFAULT v1,
        serialNumber         CertificateSerialNumber,
        signature            AlgorithmIdentifier,
        issuer               Name,
        validity             Validity,
        subject              Name,
        subjectPublicKeyInfo SubjectPublicKeyInfo,
        issuerUniqueID   [1] IMPLICIT UniqueIdentifier OPTIONAL,
                             -- If present, version MUST be v2 or v3
        subjectUniqueID  [2] IMPLICIT UniqueIdentifier OPTIONAL,
                             -- If present, version MUST be v2 or v3
        extensions       [3] Extensions OPTIONAL
                             -- If present, version MUST be v3 --
    }

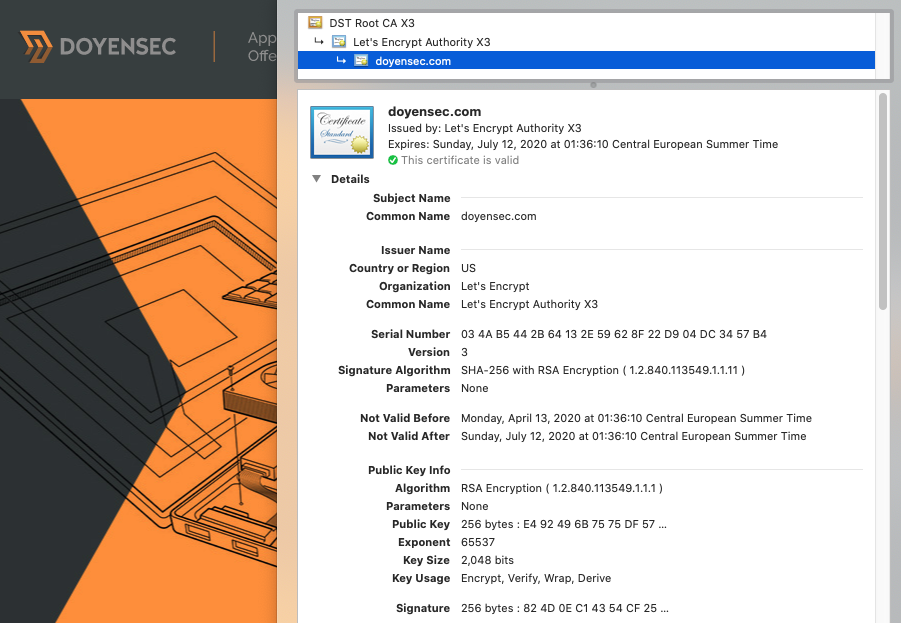

You may recognize some of these fields from inspecting a certificate using a browser’s integrated certificate viewer.

Finding out what exactly should go inside a TLS certificate and how it should be interpreted was not an easy task: the specifications were scattered across a lot of RFCs and other standards, sometimes with partial or even conflicting information. Some documents from the recent past offer a good insight into the number of contradictory interpretations. Nowadays there seems to be more convergence, and a good place to start when looking for how a TLS certificate should be handled is the RFCs together with a couple of widely used TLS libraries.

Fuzzing TLS starting from ASN.1

Previous work

All the high-profile TLS libraries include some fuzzing harnesses directly in their source tree, and most are even continuously fuzzed (like LibreSSL, which is now included in oss-fuzz thanks to my colleague Andrea). Most libraries use tried-and-tested fuzzers like AFL or libFuzzer, which are not encoding- or syntax-aware. This very likely means that many cycles are wasted generating and testing inputs that are rejected early by the parsers.

X.509 parsers have been fuzzed using many approaches. Frankencert, for instance, generates certificates by combining parts from existing ones, while CertificateFuzzer uses a hand-coded grammar. Some fuzzing efforts are more targeted towards discovering memory-corruption types of bugs, while others are more geared towards discovering logic bugs, often comparing the behavior of multiple parsers side by side to detect inconsistencies.

ASN1Fuzz

I wanted a tool capable of generating valid inputs from an ASN.1 grammar, so that I could slightly break them and hopefully find some vulnerabilities. I couldn’t find any tool accepting ASN.1 grammars, so I decided to build one myself.

After a lot of experimentation and three full rewrites, I have a pipeline for generating valid X.509 certificates, which looks like this:

        +-------+
        | ASN.1 |
        +---+---+
            |
         pycrate
            |
    +-------v--------+        +--------------+
    | Python classes |        |  User Hooks  |
    +-------+--------+        +-------+------+
            |                         |
            +-----------+-------------+
                        |
                    Generator
                        |
                        |
                    +---v---+
                    |       |
                    |  AST  |
                    |       |
                    +---+---+
                        |
                     Encoder
                        |
                  +-----v------+
                  |            |
                  |   Output   |
                  |            |
                  +------------+

First, I compile the ASN.1 grammar using pycrate, one of the few FOSS compilers that support most of the advanced features of ASN.1.

The output of the compiler is fed into the Generator. With a lot of introspection inside the pycrate classes, this component generates random ASTs conforming to the input grammar.
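As a rough illustration of the idea (not of ASN1Fuzz itself), the sketch below walks a toy grammar description and produces a random tree conforming to it. The tuple-based grammar format and the `generate` function are assumptions made for this example; the real tool introspects pycrate's classes instead.

```python
import random
import string

# Toy grammar in a made-up format: (kind, details...).
# This is NOT how pycrate represents compiled ASN.1 types.
MESSAGE = ("SEQUENCE", [
    # (field name, field type, is optional)
    ("senderId", ("INTEGER", (0, 65535)), False),
    ("recipientId", ("INTEGER", (0, 65535)), False),
    ("message", ("UTF8String", (1, 16)), False),
    ("attachmentIds", ("SEQUENCE OF", ("INTEGER", (0, 255)), (0, 3)), True),
])


def generate(node, rng):
    """Recursively build a random value that satisfies the toy grammar."""
    kind = node[0]
    if kind == "INTEGER":
        low, high = node[1]
        return rng.randint(low, high)
    if kind == "UTF8String":
        low, high = node[1]
        size = rng.randint(low, high)
        return "".join(rng.choice(string.ascii_letters) for _ in range(size))
    if kind == "SEQUENCE OF":
        low, high = node[2]
        return [generate(node[1], rng) for _ in range(rng.randint(low, high))]
    if kind == "SEQUENCE":
        value = {}
        for name, field_type, optional in node[1]:
            if optional and rng.random() < 0.5:
                continue  # randomly omit OPTIONAL fields
            value[name] = generate(field_type, rng)
        return value
    raise ValueError(f"unsupported type: {kind}")


tree = generate(MESSAGE, random.Random(1234))
print(tree)
```

Seeding the random number generator keeps every generated tree reproducible, which matters when a crash has to be replayed later.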

The ASTs can be fed to an encoder (e.g. DER) to create a binary output suitable for being tested with the target application.

Certificates produced like this would not be valid, because many constraints are not encoded in the syntax. Moreover, I wanted to give the user total freedom to manipulate the generator behavior. To solve this problem I developed a handy hooking system which allows overrides at any point in the generator:

    import random
    import time

    from pycrate_asn1dir.X509_2016 import AuthenticationFramework

    from generator import Generator  # ASN1Fuzz module

    spec = AuthenticationFramework.Certificate
    cert_generator = Generator(spec)


    @cert_generator.value_hook("Certificate/toBeSigned/validity/notBefore/.*")
    def generate_notBefore(generator: Generator, node):
        # Pick a random timestamp within the last 10 years
        now = int(time.time())
        start = now - 10 * 365 * 24 * 60 * 60  # 10 years ago
        return random.randint(start, now)


    @cert_generator.node_hook("Certificate/toBeSigned/extensions/_item_[^/]*/"
                              "extnValue/ExtnType/_cont_ExtnType/keyIdentifier")
    def force_akid_generation(generator: Generator, node):
        # keyIdentifier should be present unless the certificate is self-signed
        return generator.generate_node(node, ignore_hooks=True)


    @cert_generator.value_hook("Certificate/signature")
    def generate_signature(generator: Generator, node):
        # (... compute signature ...)
        return (sig, siglen)
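For readers curious how path-based hooks of this kind can be wired up, here is a small self-contained sketch of a registry that matches regular expressions against node paths. `HookRegistry`, `find` and `value_for` are hypothetical names for this illustration and do not reflect the actual ASN1Fuzz internals.

```python
import re


class HookRegistry:
    """Maps regular expressions over node paths to override functions."""

    def __init__(self):
        self._hooks = []  # list of (compiled pattern, function)

    def value_hook(self, pattern):
        compiled = re.compile(pattern)

        def decorator(func):
            self._hooks.append((compiled, func))
            return func

        return decorator

    def find(self, path):
        # The first registered hook whose pattern matches the whole path wins
        for compiled, func in self._hooks:
            if compiled.fullmatch(path):
                return func
        return None


hooks = HookRegistry()


@hooks.value_hook(r"Certificate/toBeSigned/validity/notBefore/.*")
def fixed_not_before(path):
    return 1577836800  # 2020-01-01T00:00:00Z as a Unix timestamp


def value_for(path, default):
    """Use the hook's value when one matches, else the generator's default."""
    hook = hooks.find(path)
    return hook(path) if hook else default


print(value_for("Certificate/toBeSigned/validity/notBefore/utcTime", 0))
print(value_for("Certificate/toBeSigned/serialNumber", 7))
```

Matching on the full path keeps hooks narrowly scoped, while a trailing `.*` lets one hook cover every alternative below a node.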

The AST generated by this pipeline can already be used for differential testing. For instance, if one library accepts a certificate while others reject it, there may be a problem that requires manual investigation.
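A differential check can be sketched in a few lines of Python. The two parsers below are toy stand-ins written for this example (they only look at the outer SEQUENCE header); in practice each entry would wrap a real TLS library.

```python
def find_disagreement(cert_der, parsers):
    """Return the per-parser verdicts if they differ, otherwise None."""
    verdicts = {}
    for name, parse in parsers.items():
        try:
            parse(cert_der)
            verdicts[name] = "accept"
        except Exception:
            verdicts[name] = "reject"
    return verdicts if len(set(verdicts.values())) > 1 else None


def strict_parser(data):
    # Toy parser: requires a SEQUENCE tag and an exact short-form length
    if len(data) < 2 or data[0] != 0x30:
        raise ValueError("not a SEQUENCE")
    if data[1] != len(data) - 2:
        raise ValueError("length mismatch")


def lenient_parser(data):
    # Toy parser: only checks the tag and ignores the declared length
    if len(data) < 2 or data[0] != 0x30:
        raise ValueError("not a SEQUENCE")


parsers = {"strict": strict_parser, "lenient": lenient_parser}

well_formed = bytes.fromhex("3003020101")
bad_length = bytes.fromhex("3005020101")  # declares 5 bytes, carries 3

print(find_disagreement(well_formed, parsers))
print(find_disagreement(bad_length, parsers))
```

Only inputs on which the verdicts differ are reported, so the volume of cases needing manual triage stays manageable.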

In addition, the ASTs can be mutated using a custom mutator for AFL++ which performs random operations on the tree.
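To give an idea of what "random operations on the tree" can mean, here is a minimal sketch that picks a random node in a nested dict/list structure and either flips a bit, duplicates a string, or deletes a subtree. The tree layout and the `mutate` function are assumptions for illustration; they are neither the AFL++ custom mutator API nor the ASN1Fuzz mutator.

```python
import copy
import random


def collect_paths(tree, prefix=()):
    """List the path (a tuple of keys/indices) of every node in the tree."""
    paths = [prefix]
    if isinstance(tree, dict):
        for key, child in tree.items():
            paths += collect_paths(child, prefix + (key,))
    elif isinstance(tree, list):
        for index, child in enumerate(tree):
            paths += collect_paths(child, prefix + (index,))
    return paths


def mutate(tree, rng):
    """Return a copy of the tree with one randomly chosen node altered."""
    mutated = copy.deepcopy(tree)
    path = rng.choice([p for p in collect_paths(mutated) if p])  # skip root
    parent = mutated
    for key in path[:-1]:
        parent = parent[key]
    last = path[-1]
    target = parent[last]
    if isinstance(target, int):
        parent[last] = target ^ (1 << rng.randrange(8))  # flip one low bit
    elif isinstance(target, str):
        parent[last] = target * 2  # duplicate the string
    else:
        del parent[last]  # drop a whole subtree
    return mutated


cert_tree = {
    "serialNumber": 1234,
    "issuer": {"commonName": "Example CA"},
    "extensions": [{"critical": 1}, {"critical": 0}],
}
print(mutate(cert_tree, random.Random(7)))
```

Because the mutation operates on the tree rather than on raw bytes, the result can still be re-encoded into well-formed DER, so the mutated input reaches deeper parser logic instead of being rejected at the framing level.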

ASN1Fuzz is currently research-quality code, but I do aim to open source it at some point in the future. Since the generation starts from ASN.1 grammars, the tool is not limited to generating TLS certificates, and it could be leveraged to fuzz a plethora of other protocols.

Stay tuned for the next blog post where I will present the results from this research!